Howto: Clustering JMS with JOnAS

Introduction

Generalities about Clustering JMS

The JMS API provides a separate domain for each messaging approach, point-to-point or publish/subscribe. The point-to-point domain is built around the concepts of queues, senders and receivers. The publish/subscribe domain is built around the concepts of topics, publishers and subscribers.

Additionally, it provides a unified domain with common interfaces that enable the use of both queues and topics. This domain defines the concepts of producers and consumers.

The classic sample uses a very simple configuration (centralized) made of one server hosting a queue and a topic.

The server is administratively configured for accepting connection requests from the anonymous user.

JMS clustering aims to provide both scalability and high availability for JMS access.

This document gives an overview of the JORAM capabilities for clustering a JMS application in the J2EE context.

The load-balancing and fail-over mechanisms are described, and a user guide explaining how to build such a configuration is provided. Further information is available in the JORAM documentation.

Configuration

The following information will be presented:

- Load balancing through cluster topics and cluster queues. The distributed capabilities of JORAM will be examined.

- JORAM HA, which ensures the high availability of the JORAM server.

- How to build an MDB clustering architecture with both JOnAS and JORAM, in order to build a highly available MDB-based application.

Getting started:

- newjc will be used for generating the initial configuration of the JMS cluster. The tool must be run with the default inputs, except for the architecture (bothWebEjb) and the number of nodes (2). See the newjc tool documentation for further information.

- JOnAS's examples newsamplemdb and newsamplemdb2 will be used to illustrate the configuration. They must be compiled first: go to $JONAS_ROOT/examples/src and run ant install.

Load balancing

General Information:

First, ensure that the JONAS_BASE and JONAS_ROOT are correct.
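
The check above can be scripted. The following is a minimal sketch, not part of JOnAS: check_jonas_dir is a hypothetical helper that only tests that a variable is set and names an existing directory. It does not verify that the directory is a valid JOnAS installation.

```shell
# Hypothetical helper (not a JOnAS tool): check that a variable is set
# and that its value is an existing directory.
# Usage: check_jonas_dir NAME VALUE
check_jonas_dir() {
    name=$1
    value=$2
    if [ -z "$value" ]; then
        echo "$name is not set"
        return 1
    fi
    if [ ! -d "$value" ]; then
        echo "$name does not point to a directory: $value"
        return 1
    fi
    echo "$name is OK: $value"
}

# Example calls, using whatever the current shell has exported.
check_jonas_dir JONAS_ROOT "${JONAS_ROOT:-}" || true
check_jonas_dir JONAS_BASE "${JONAS_BASE:-}" || true
```

The function returns a non-zero status on failure, so it can also be used as a guard at the top of a launch script.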

If the two instances are run on different computers, the following steps must be performed. In all examples, replace localhost by the two IP addresses of the two computers.

Go to $JONAS_ROOT/examples/sampleCluster2/newjc and launch newjc.sh.

The example uses the directory $JONAS_BASE/jb, the architecture "bothWebEjb" and two instances of JOnAS.

Now copy JONAS_BASE to $JONAS_BASE/jb/jb1.

General scenario for Topic

The following scenario and general settings are proposed:

- Two instances of JOnAS are run (JOnAS "1" and JOnAS "2").

- Each JOnAS instance has a dedicated collocated JORAM server: server "S0" for JOnAS 1, "S1" for JOnAS 2. Those two servers are aware of each other.

- The topic is hosted by JORAM server S0.

- A client will send 10 messages to the topic.

Why use clustered Topic?

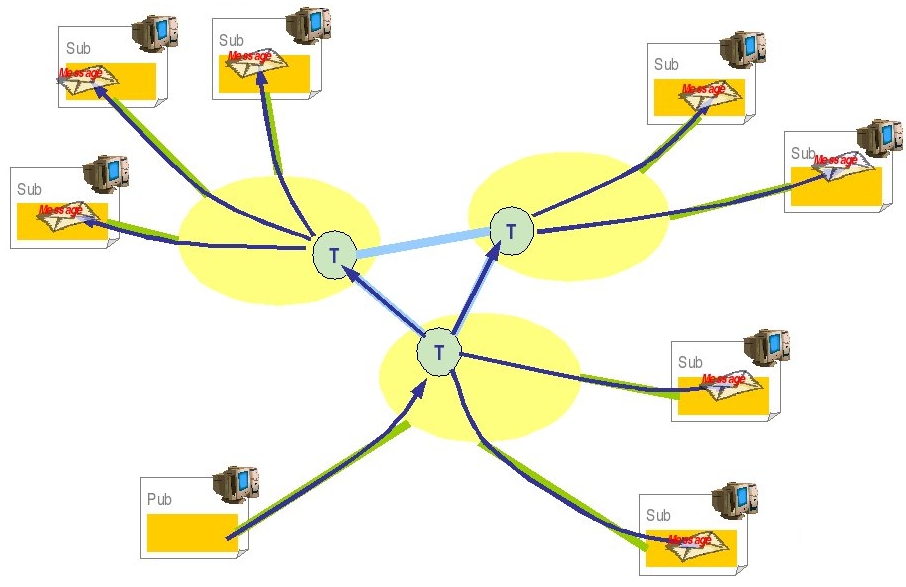

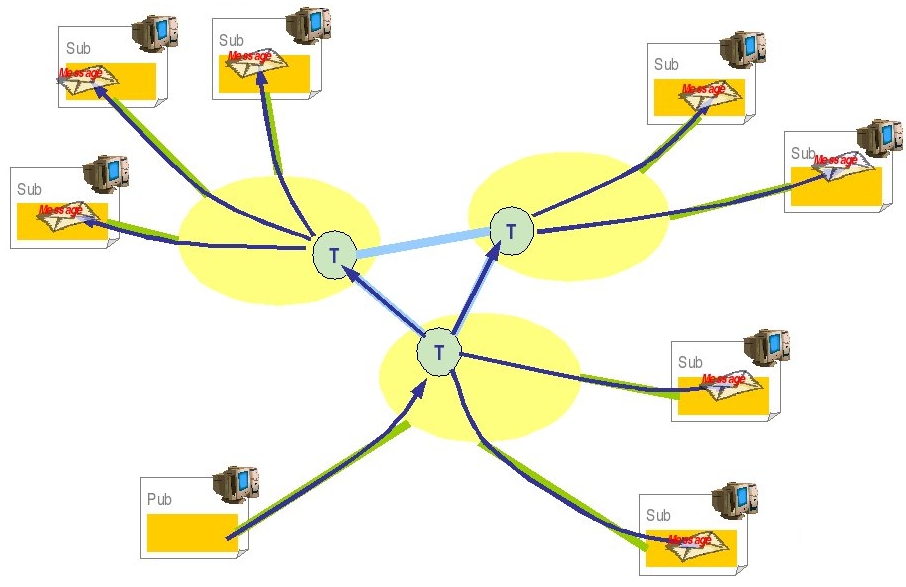

A non-hierarchical topic might also be distributed among many servers. Such a topic, to be considered as a single logical topic, is made of topic representatives, one per server. Such an architecture allows a publisher to publish messages on a representative of the topic. In the example, the publisher works with the representative on server 0. If a subscriber subscribed to any other representative (on server 1 in the example), it will get the messages produced by the publisher.

Load balancing of topics is very useful because it allows distributed topic subscriptions across the cluster.


How to configure everything is now explained in greater detail.

1) Go to $JONAS_BASE/conf and edit the a3servers.xml file.

<?xml version="1.0"?>

<config>

<domain name="D1"/>

<server id="0" name="S0" hostname="localhost">

<network domain="D1" port="16301"/>

<service class="org.objectweb.joram.mom.proxies.ConnectionManager"

args="root root"/>

<service class="org.objectweb.joram.mom.proxies.tcp.TcpProxyService"

args="16010"/>

</server>

<server id="1" name="S1" hostname="localhost">

<network domain="D1" port="16302"/>

<service class="org.objectweb.joram.mom.proxies.ConnectionManager"

args="root root"/>

<service class="org.objectweb.joram.mom.proxies.tcp.TcpProxyService"

args="16020"/>

</server>

</config>

2) Then copy this file to $HOME/jb/jb2/conf and replace the previous version.

3) After that, add the cluster view (with the topic) in the $HOME/jb/jb1/conf/joramAdmin.xml file. Add the following lines:

<Cluster>

<Topic name="mdbTopic"

serverId="0">

<freeReader/>

<freeWriter/>

<jndi name="mdbTopic"/>

</Topic>

<Topic name="mdbTopic"

serverId="1">

<freeReader/>

<freeWriter/>

<jndi name="mdbTopic"/>

</Topic>

<freeReader/>

<freeWriter/>

<reader user="anonymous"/>

<writer user="anonymous"/>

</Cluster>

4) Then verify the ra.xml and jonas-ra.xml files for the two instances. Verify that the server id, server name, etc. are correct.
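
Since both JORAM servers run on localhost in this example, every listening port declared in a3servers.xml must be distinct. The following sketch lists the ports and reports duplicates. list_ports and duplicate_ports are hypothetical helpers, and the sed patterns assume the line layout shown in step 1 (one port or numeric args attribute per line).

```shell
# Hypothetical helpers (not part of JOnAS or JORAM).
# Print each value of a port="..." attribute and of a purely numeric
# args="..." attribute found in the given file, one per line.
list_ports() {
    sed -n -e 's/.*port="\([0-9][0-9]*\)".*/\1/p' \
           -e 's/.*args="\([0-9][0-9]*\)".*/\1/p' "$1"
}

# Print the ports that occur more than once; empty output means no clash.
duplicate_ports() {
    list_ports "$1" | sort | uniq -d
}
```

For example, duplicate_ports applied to the conf/a3servers.xml file of a JOnAS base prints nothing when the file matches the listing of step 1.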

Now launch runCluster

Then deploy the application. For example, create a deploy.sh file:

#!/bin/ksh

export JONAS_BASE=$PWD/jb1

cp $JONAS_ROOT/examples/output/ejbjars/newsamplemdb.jar $JONAS_BASE/ejbjars/

jonas admin -a newsamplemdb.jar -n node1

export JONAS_BASE=$PWD/jb2

cp $JONAS_ROOT/examples/output/ejbjars/newsamplemdb.jar $JONAS_BASE/ejbjars/

jonas admin -a newsamplemdb.jar -n node2

Finally, launch the client:

jclient newsamplemdb.MdbClient

Something similar to this should appear:

ClientContainer.info : Starting client...

JMS client: tcf = TCF:localhost-16010

JMS client: topic = topic#1.1.1026

JMS client: tc = Cnx:#0.0.1026:5

MDBsample is Ok

In addition, the following should appear on the other JOnAS:

Message received: Message6

MdbBean onMessage

Message received: Message10

MdbBean onMessage

Message received: Message9

The fact that messages appear on both JOnAS instances shows the load balancing. It is more effective for a Queue, because only part of the messages will be handled in the first JOnAS base and the remaining part in the second JOnAS base.

General scenario for Queue

Globally, load balancing in the context of queues may be less meaningful than load balancing of topics. It would be a bit like load balancing a stateful session bean instance (which just requires failover). But the JORAM distributed architecture enables distributing the load of the queue access between several JORAM server nodes.

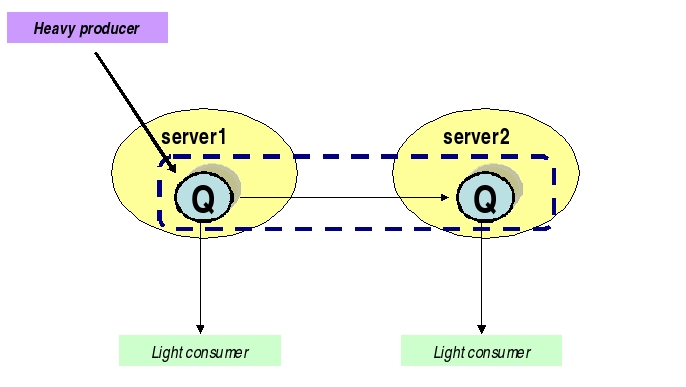

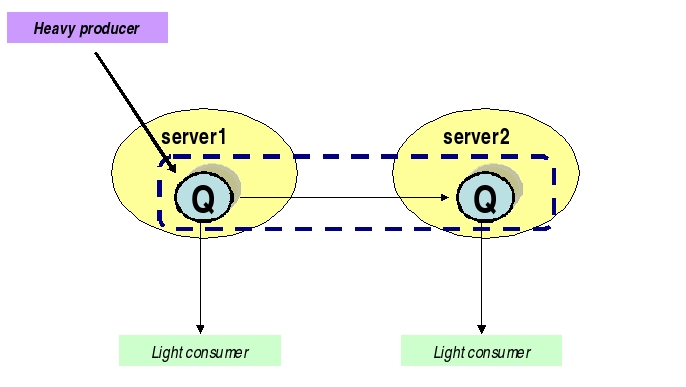

Here is a diagram of what is going to happen for the Queue and the message:


A load balancing message queue may be needed for a high rate of messages.

A clustered queue is a cluster of queues exchanging messages depending on their load. The example has a cluster of two queues. A heavy producer accesses its local queue and sends messages. It quickly becomes loaded and decides to forward messages to the other queue of its cluster which is not under heavy load.

For this case some parameters must be set:

- period: period (in ms) of activation of the load factor evaluation routine for a queue

- producThreshold: number of messages above which a queue is considered loaded, a load factor evaluation is launched, and messages are forwarded to other queues of the cluster

- consumThreshold: number of pending "receive" requests above which a queue will request messages from the other queues of the cluster

- autoEvalThreshold: set to "true" to request an automatic re-evaluation of the queues' threshold values according to their activity

- waitAfterClusterReq: time (in ms) during which a queue that requested something from the cluster is not authorized to do it again

For further information, see the JORAM documentation.
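
To make the two thresholds concrete, here is a toy model that simply restates their definitions as comparisons. It is an illustration only and does not reproduce the JORAM implementation. The function names are hypothetical.

```shell
# Toy model only: restates the threshold definitions above,
# it is NOT the JORAM algorithm.

# Prints "forward" when the number of pending messages is above
# producThreshold, "keep" otherwise.
producer_side_decision() {
    pending_messages=$1
    produc_threshold=$2
    if [ "$pending_messages" -gt "$produc_threshold" ]; then
        echo "forward"
    else
        echo "keep"
    fi
}

# Prints "request" when the number of pending "receive" requests is above
# consumThreshold, "wait" otherwise.
consumer_side_decision() {
    pending_receives=$1
    consum_threshold=$2
    if [ "$pending_receives" -gt "$consum_threshold" ]; then
        echo "request"
    else
        echo "wait"
    fi
}

producer_side_decision 60 50   # 60 pending messages, producThreshold=50
consumer_side_decision 1 2     # 1 pending receive, consumThreshold=2
```

With producThreshold set to 50 and consumThreshold set to 2, a queue holding 60 messages is considered loaded, while a queue with a single pending "receive" request does not yet ask the cluster for messages.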

The scenario for Queue is similar to the previous one. In fact, step 1) and step 2) are the same. Make the following changes for step 3):

<Cluster>

<Queue name="mdbQueue"

serverId="0"

className="org.objectweb.joram.mom.dest.ClusterQueue">

<freeReader/>

<freeWriter/>

<property name="period" value="10000"/>

<property name="producThreshold" value="50"/>

<property name="consumThreshold" value="2"/>

<property name="autoEvalThreshold" value="false"/>

<property name="waitAfterClusterReq" value="1000"/>

<jndi name="mdbQueue"/>

</Queue>

<Queue name="mdbQueue"

serverId="1"

className="org.objectweb.joram.mom.dest.ClusterQueue">

<freeReader/>

<freeWriter/>

<property name="period" value="10000"/>

<property name="producThreshold" value="50"/>

<property name="consumThreshold" value="2"/>

<property name="autoEvalThreshold" value="false"/>

<property name="waitAfterClusterReq" value="1000"/>

<jndi name="mdbQueue"/>

</Queue>

<freeReader/>

<freeWriter/>

<reader user="anonymous"/>

<writer user="anonymous"/>

</Cluster>

JORAM HA and JOnAS

Generality

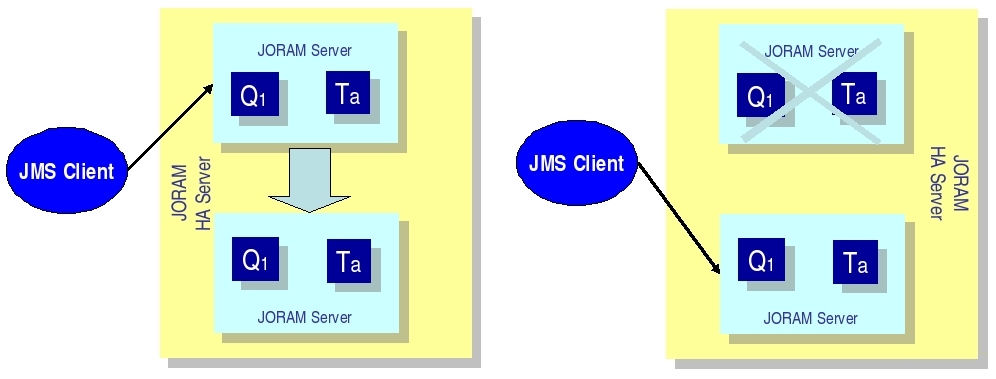

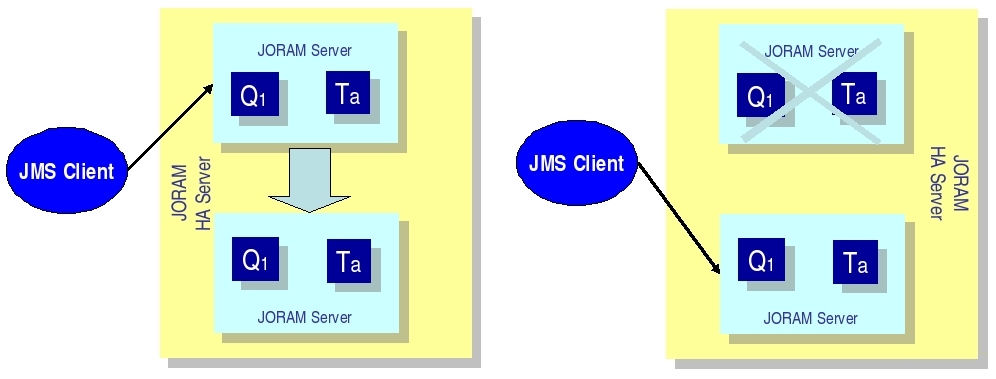

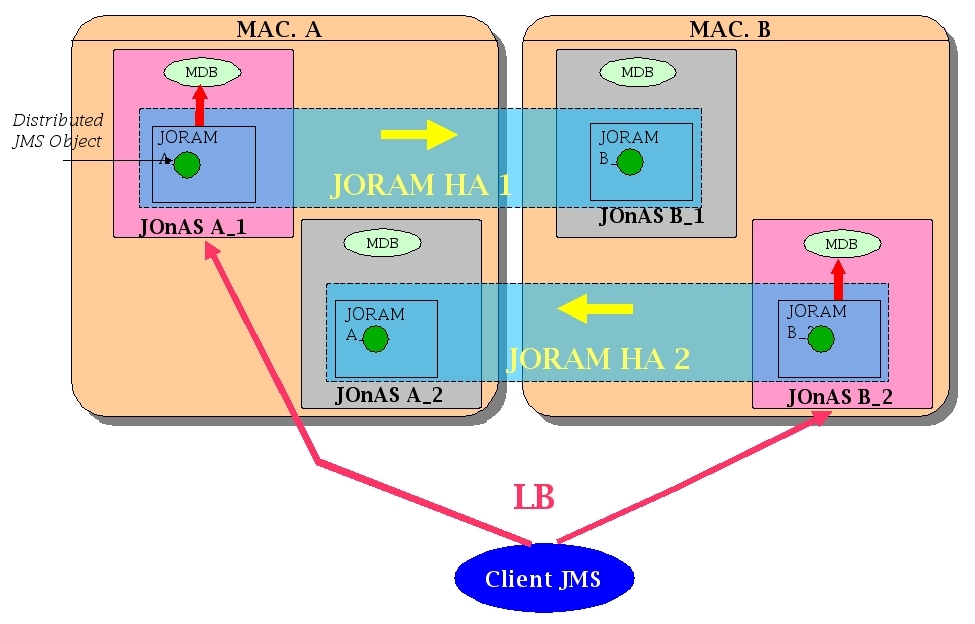


An HA server is actually a group of servers, one of which is the master server that coordinates the other slave servers. An external server that communicates with the HA server is actually connected to the master server.

Each replicated JORAM server executes the same code as a standard server except for the communication with the clients.

In the example, the collocated clients use a client module (newsamplemdb). If the server replica is the master, then the connection is active enabling the client to use the HA JORAM server. If the replica is a slave, then the connection opening is blocked until the replica becomes the master.

Configuration

Several files must be changed to create a JORAM HA configuration:

a3servers.xml

A clustered server is defined by the element "cluster". A cluster owns an identifier and a name defined by the attributes "id" and "name" (exactly like a standard server). Two properties must be defined:

"Engine" must be set to "fr.dyade.aaa.agent.HAEngine" which is the class name of the engine that provides high availability.

"nbClusterExpected" defines the number of replicas that must be connected to the group communication channel used before this replica starts. By default it is set to 2. If there are more than two clusters, this specification must be inserted in the configuration file. If there are two clusters, this specification is not required.

In the case of one server and one replica, the value must be set to 1.

<?xml version="1.0"?>

<config>

<domain name="D1"/>

<property name="Transaction" value="fr.dyade.aaa.util.NullTransaction"/>

<cluster id="0" name="s0">

<property name="Engine" value="fr.dyade.aaa.agent.HAEngine" />

<property name="nbClusterExpected" value="1" />

For each replica, an element "server" must be added. The attribute "id" defines the identifier of the replica inside the cluster. The attribute "hostname" gives the address of the host where the replica is running. The network is used by the replica to communicate with external agent servers, i.e., servers located outside of the cluster and not replicas.

This is the entire configuration for the a3servers.xml file of the first JOnAS instance jb1:

<?xml version="1.0"?>

<config>

<domain name="D1"/>

<property name="Transaction" value="fr.dyade.aaa.util.NullTransaction"/>

<cluster id="0" name="s0">

<property name="Engine" value="fr.dyade.aaa.agent.HAEngine" />

<property name="nbClusterExpected" value="1" />

<server id="0" hostname="localhost">

<network domain="D1" port="16300"/>

<service class="org.objectweb.joram.mom.proxies.ConnectionManager" args="root root"/>

<service class="org.objectweb.joram.mom.proxies.tcp.TcpProxyService" args="16010"/>

<service class="org.objectweb.joram.client.jms.ha.local.HALocalConnection"/>

</server>

<server id="1" hostname="localhost">

<network domain="D1" port="16301"/>

<service class="org.objectweb.joram.mom.proxies.ConnectionManager" args="root root"/>

<service class="org.objectweb.joram.mom.proxies.tcp.TcpProxyService" args="16020"/>

<service class="org.objectweb.joram.client.jms.ha.local.HALocalConnection"/>

</server>

</cluster>

</config>

The cluster id is 0 and its name is s0. The file is exactly the same for the second instance of JOnAS.

joramAdmin.xml

Here is the joramAdmin.xml file configuration:

<?xml version="1.0"?>

<JoramAdmin>

<AdminModule>

<collocatedConnect name="root" password="root"/>

</AdminModule>

<ConnectionFactory className="org.objectweb.joram.client.jms.ha.tcp.HATcpConnectionFactory">

<hatcp url="hajoram://localhost:16010,localhost:16020" reliableClass="org.objectweb.joram.client.jms.tcp.ReliableTcpClient"/>

<jndi name="JCF"/>

</ConnectionFactory>

<ConnectionFactory className="org.objectweb.joram.client.jms.ha.tcp.QueueHATcpConnectionFactory">

<hatcp url="hajoram://localhost:16010,localhost:16020" reliableClass="org.objectweb.joram.client.jms.tcp.ReliableTcpClient"/>

<jndi name="JQCF"/>

</ConnectionFactory>

<ConnectionFactory className="org.objectweb.joram.client.jms.ha.tcp.TopicHATcpConnectionFactory">

<hatcp url="hajoram://localhost:16010,localhost:16020" reliableClass="org.objectweb.joram.client.jms.tcp.ReliableTcpClient"/>

<jndi name="JTCF"/>

</ConnectionFactory>

Each connection factory has its own specification: one for Queue, one for Topic, and one with no defined domain. In each case the hatcp url must list the addresses of the two instances. In the example, these are localhost:16010 and localhost:16020. This allows the client to switch to the other instance when the first one is dead.

After this definition, the user, the queue and the topic can be created.
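
The hatcp url is the hajoram:// prefix followed by a comma-separated list of host:port pairs. As a small sketch, the hypothetical helper below (not a JORAM tool) builds such a url from the addresses of the replicas:

```shell
# Hypothetical helper: build a hajoram url from a list of host:port pairs.
# Usage: ha_url HOST1:PORT1 HOST2:PORT2 ...
ha_url() {
    url="hajoram://"
    sep=""
    for endpoint in "$@"; do
        url="$url$sep$endpoint"
        sep=","
    done
    echo "$url"
}

ha_url localhost:16010 localhost:16020
```

With the two addresses of the example, this prints the value used in the hatcp elements above.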

ra.xml and jonas-ra.xml files

First, in order to recognize the cluster, a new parameter must be declared in these files.

<config-property>

<config-property-name>ClusterId</config-property-name>

<config-property-type>java.lang.Short</config-property-type>

<config-property-value>0</config-property-value>

</config-property>

The name is not really appropriate, but it was kept for the sake of coherence. It actually represents a replica, so replicaId would have been a better name.

Consequently, for the first JOnAS instance, use the code above as is. For the second instance, change the value to 1 (to indicate that this is another replica).
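
The change for the second instance can be scripted. The sketch below is a hypothetical helper, not a JOnAS tool. It assumes that each element of the config-property sits on its own line, as in the listing above, and it prints the modified content without touching the original file.

```shell
# Hypothetical helper: print FILE with the value of the ClusterId
# config-property replaced by NEW_ID. Other properties are left unchanged.
# Usage: set_cluster_id FILE NEW_ID
set_cluster_id() {
    awk -v id="$2" '
        # Remember that the ClusterId property has been entered.
        /<config-property-name>ClusterId<\/config-property-name>/ { in_prop = 1 }
        # Rewrite only the first value line that follows the ClusterId name.
        in_prop && /<config-property-value>/ {
            sub(/<config-property-value>[^<]*<\/config-property-value>/, "<config-property-value>" id "</config-property-value>")
            in_prop = 0
        }
        { print }
    ' "$1"
}
```

For example, set_cluster_id ra.xml 1 > ra.xml.new writes a copy in which only the ClusterId value is set to 1. The file names here are placeholders.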

Illustration

First launch the two JOnAS bases. Create a runHa.sh file in which the following code will be added:

export JONAS_BASE=$PWD/jb1

export CATALINA_BASE=$JONAS_BASE

rm -f $JONAS_BASE/logs/*

jonas start -win -n node1 -Ddomain.name=HA

Then do the same for the second JOnAS base. After that launch the script.
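
As a dry-run sketch, the hypothetical function below prints the start sequence for each base instead of executing it, so the resulting runHa.sh content can be reviewed first. The node name node2 for the second base is an assumption, chosen to match the deploy.sh example.

```shell
# Dry-run sketch: print the start commands for each JOnAS base given as
# argument. Node names node1, node2, ... are assumed.
print_ha_start() {
    i=1
    for base in "$@"; do
        echo "export JONAS_BASE=$base"
        echo "export CATALINA_BASE=$base"
        echo "rm -f $base/logs/*"
        echo "jonas start -win -n node$i -Ddomain.name=HA"
        i=$((i + 1))
    done
}

print_ha_start "$PWD/jb1" "$PWD/jb2"
```

The printed lines can be redirected into runHa.sh once they look correct.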

One of the two JOnAS bases (the slower one) will be in a waiting state when reading the joramAdmin.xml file:

JoramAdapter.start : - Collocated JORAM server has successfully started.

JoramAdapter.start : - Reading the provided admin file: joramAdmin.xml

whereas the other one is launched successfully.

Then launch (through a script or not) the newsamplemdb example:

jclient -cp /JONAS_BASE/jb1/ejbjars/newsamplemdb.jar:/JONAS_ROOT/examples/classes -carolFile clientConfig/carol.properties newsamplemdb.MdbClient

Messages are sent to the JOnAS base that was launched first. Launch the client again and kill the current JOnAS. The second JOnAS will automatically wake up and take care of the other messages.

MDB Clustering

Generality

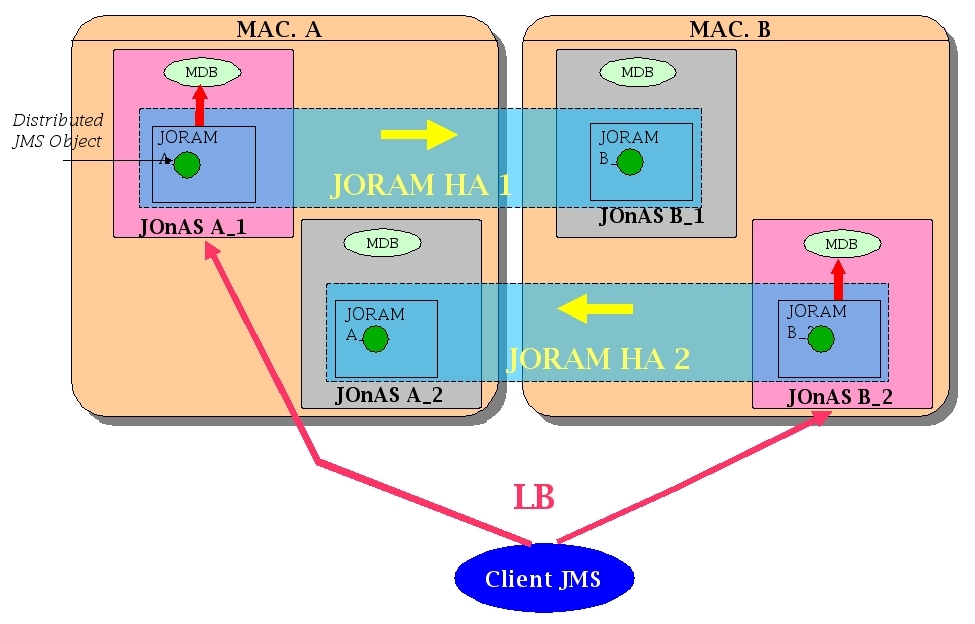

This is a proposal for building an MDB clustering based application.

This is like contested queues, i.e., there is more than one receiver on different machines receiving from the queue. This load balances the work done by the queue receivers, not the queue itself.

The HA mechanism can be mixed with the load balancing policy based on clustered destinations. The load is balanced between several HA servers. Each element of a clustered destination is deployed on a separate HA server.

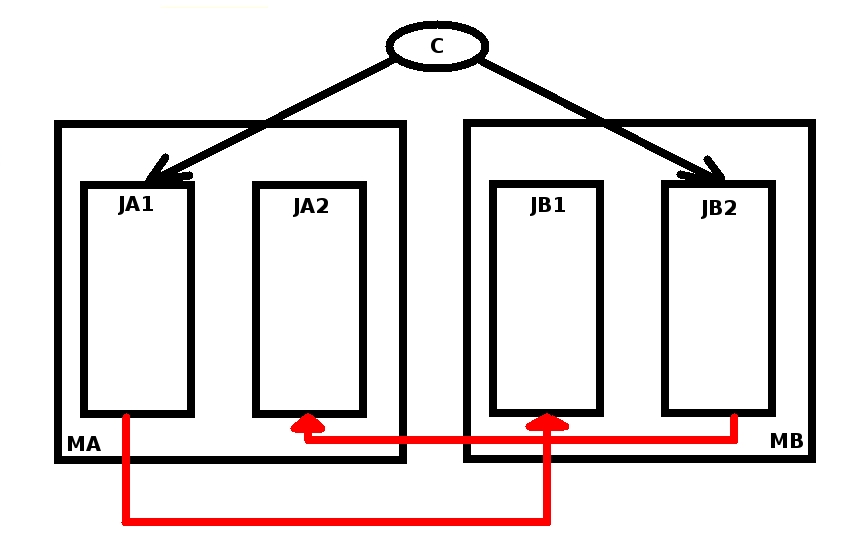


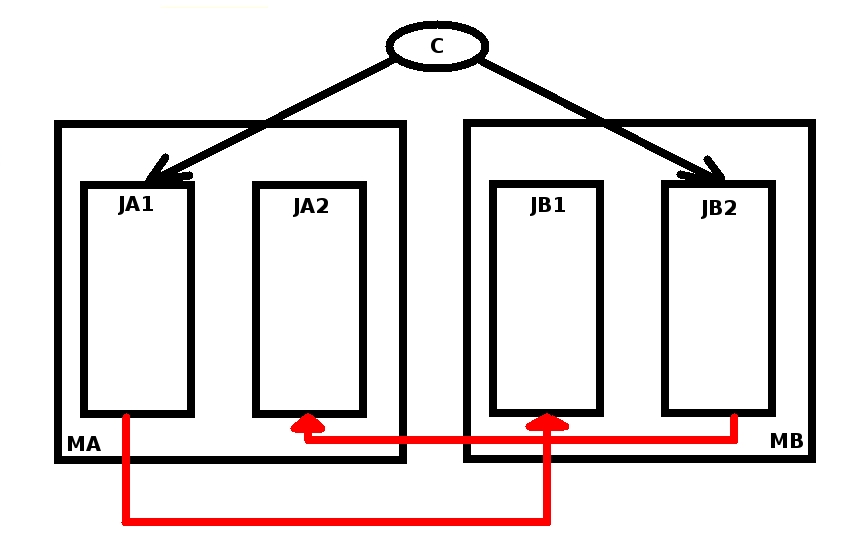

Configuration

Here is the supposed configuration (supposed because it has not been verified).

Illustration

The configuration must now be tested, as follows:

First make JA1 crash and verify that messages are spread between JB1 and JB2.

Then make JB2 crash and verify that messages are spread between JA1 and JA2.

Finally make JA1 and JB2 crash and verify that messages are spread between JA2 and JB1.