| vCenter Requirement | Value | Notes |
|---|---|---|
| vCenter User | | This user must have admin privileges. |
| vCenter User Password | | Password for the above user. |
| vCenter Datacenter Name | | Name of the datacenter. |
| vCenter Cluster Name | | Name of the cluster. |
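Before starting the installation, it can help to record the vCenter values in one place and check that none were left blank. The following is a minimal POSIX `sh` sketch. The variable names and placeholder values are hypothetical, and the script is not part of CloudStack.

```sh
#!/bin/sh
# Hypothetical variable names with placeholder values: fill in from the vCenter table.
VCENTER_USER="vcenter-admin"
VCENTER_PASSWORD="changeme"
VCENTER_DATACENTER="DC1"
VCENTER_CLUSTER="Cluster1"

# Print each checklist variable that is still empty; return non-zero if any are.
check_vcenter_checklist() {
  missing=0
  for name in VCENTER_USER VCENTER_PASSWORD VCENTER_DATACENTER VCENTER_CLUSTER; do
    # Indirect lookup: expands to the value of the variable named in $name.
    eval "value=\${$name}"
    if [ -z "$value" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

check_vcenter_checklist && echo "vCenter checklist complete"
```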

|

VLAN Information

|

Value

|

Notes

|

|---|---|---|

|

ESXi VLAN

|

|

VLAN on which all your ESXi hypervisors reside.

|

|

ESXI VLAN IP Address

|

|

IP Address Range in the ESXi VLAN. One address per Virtual Router is used from this range.

|

|

ESXi VLAN IP Gateway

|

|

|

|

ESXi VLAN Netmask

|

|

|

|

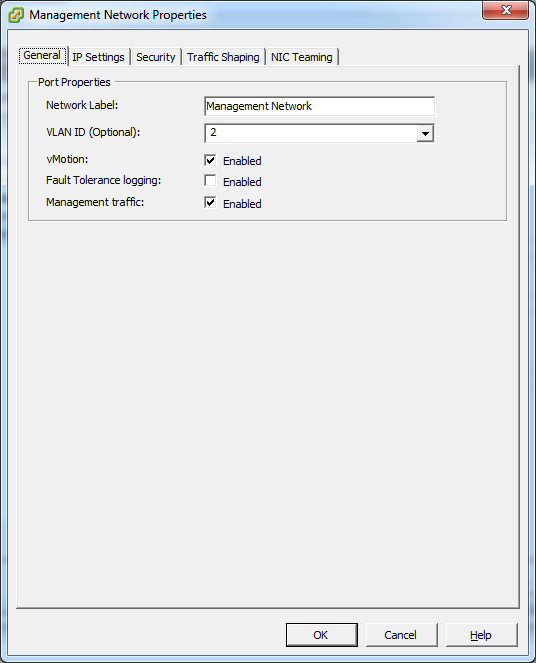

Management Server VLAN

|

|

VLAN on which the CloudStack Management server is installed.

|

|

Public VLAN

|

|

VLAN for the Public Network.

|

|

Public VLAN Gateway

|

|

|

|

Public VLAN Netmask

|

|

|

|

Public VLAN IP Address Range

|

|

Range of Public IP Addresses available for CloudStack use. These addresses will be used for virtual router on CloudStack to route private traffic to external networks.

|

|

VLAN Range for Customer use

|

|

A contiguous range of non-routable VLANs. One VLAN will be assigned for each customer.

|
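Because one ESXi VLAN address is used per virtual router and one customer VLAN is assigned per customer, the size of each range sets an upper bound on capacity. The range must also fall inside the subnet defined by its gateway and netmask. The following POSIX `sh` sketch checks both. All addresses and VLAN IDs are hypothetical example values, and the helper functions are illustrative, not part of CloudStack. The same `in_subnet` and `range_size` checks apply to the public VLAN gateway, netmask, and IP address range.

```sh
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if address $1 is in the subnet defined by gateway $2 and netmask $3.
in_subnet() {
  ip=$(ip_to_int "$1"); gw=$(ip_to_int "$2"); mask=$(ip_to_int "$3")
  [ $(( ip & mask )) -eq $(( gw & mask )) ]
}

# Count the addresses in the inclusive range $1..$2.
range_size() {
  echo $(( $(ip_to_int "$2") - $(ip_to_int "$1") + 1 ))
}

# Hypothetical checklist values for the ESXi VLAN.
ESXI_GATEWAY=192.168.10.1
ESXI_NETMASK=255.255.255.0
ESXI_RANGE_START=192.168.10.100
ESXI_RANGE_END=192.168.10.149

for addr in "$ESXI_RANGE_START" "$ESXI_RANGE_END"; do
  in_subnet "$addr" "$ESXI_GATEWAY" "$ESXI_NETMASK" ||
    echo "warning: $addr is outside the ESXi VLAN subnet"
done
# One address per virtual router, so the range size caps the router count.
echo "ESXi VLAN range: room for $(range_size "$ESXI_RANGE_START" "$ESXI_RANGE_END") virtual routers"

# Hypothetical customer VLAN range; 802.1Q VLAN IDs run from 1 to 4094.
CUSTOMER_VLAN_START=500
CUSTOMER_VLAN_END=599
if [ "$CUSTOMER_VLAN_START" -ge 1 ] && [ "$CUSTOMER_VLAN_END" -le 4094 ] &&
   [ "$CUSTOMER_VLAN_START" -le "$CUSTOMER_VLAN_END" ]; then
  echo "Customer VLAN range: room for $(( CUSTOMER_VLAN_END - CUSTOMER_VLAN_START + 1 )) customers"
else
  echo "warning: invalid customer VLAN range"
fi
```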

|

Required

|

Optional

|

|---|---|

|

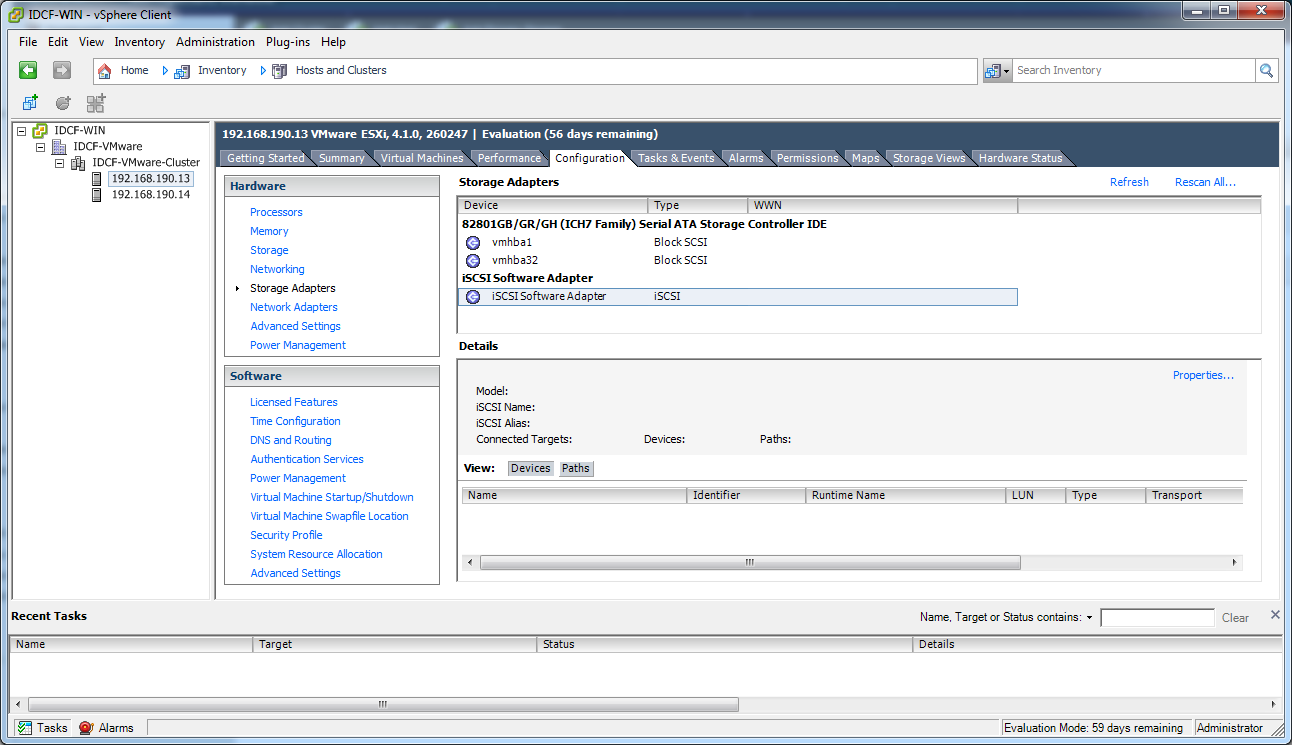

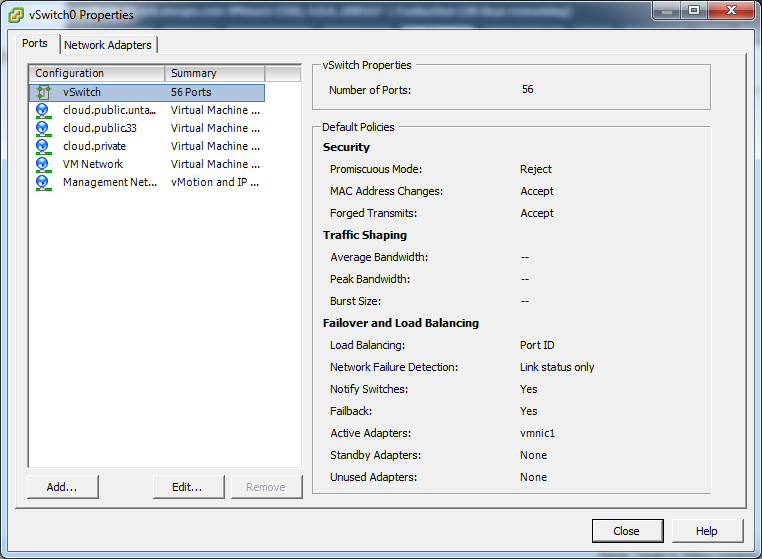

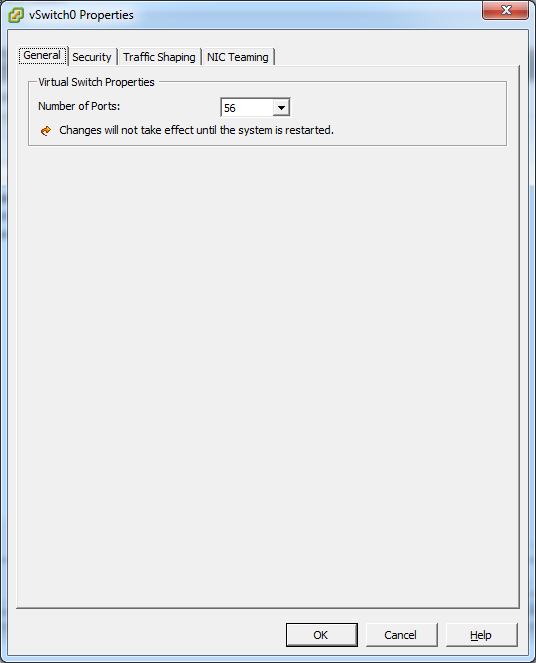

ESXi host setup

|

NIC bonding

|

|

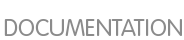

Configure host physical networking, virtual switch, vCenter Management Network, and extended port range

|

Multipath storage

|

|

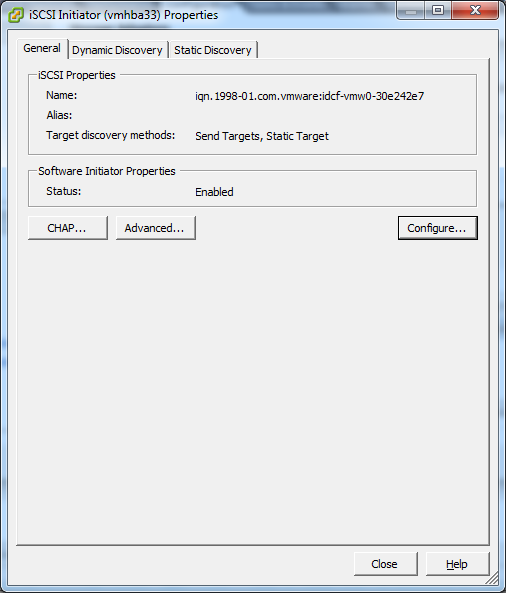

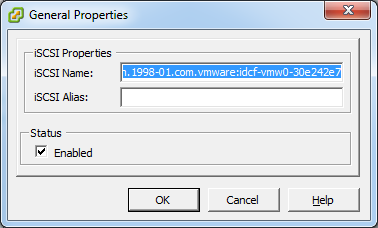

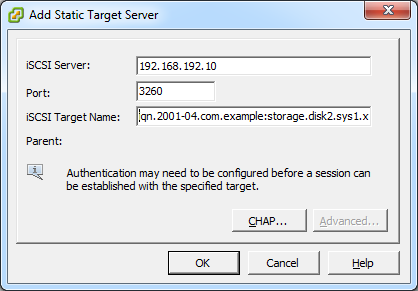

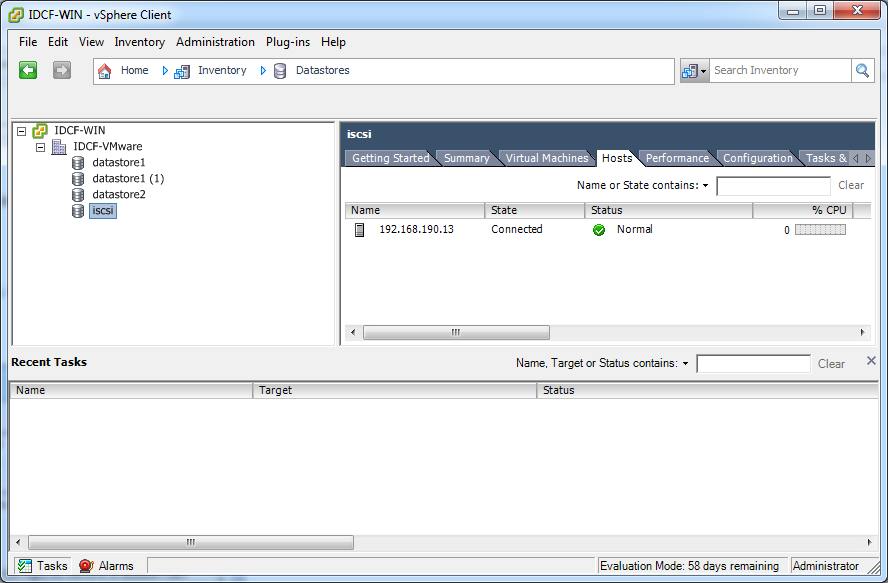

Prepare storage for iSCSI

|

|

|

Configure clusters in vCenter and add hosts to them, or add hosts without clusters to vCenter

|

|

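To configure the extended port range, run the following on each ESX host. The commands open TCP ports 59000-60000, inbound and outbound, under the service name `vncextras`:

```sh
esxcfg-firewall -o 59000-60000,tcp,in,vncextras
esxcfg-firewall -o 59000-60000,tcp,out,vncextras
```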
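When several hosts need the same port range, the two `esxcfg-firewall` commands can be generated and applied in a loop. The following is a minimal POSIX `sh` sketch. The host names, the `root` login, SSH access to each host's service console, and the `DRY_RUN` switch are all assumptions for illustration. By default it only prints the commands it would run.

```sh
#!/bin/sh
# Hypothetical host names: replace with your own ESX hosts.
ESX_HOSTS="esx01.example.com esx02.example.com"
PORT_RANGE="59000-60000"
DRY_RUN=1  # 1 = only print the commands; 0 = run them over SSH (assumed enabled)

# Print the inbound and outbound esxcfg-firewall commands for a port range.
firewall_cmds() {
  for dir in in out; do
    echo "esxcfg-firewall -o $1,tcp,$dir,vncextras"
  done
}

for host in $ESX_HOSTS; do
  firewall_cmds "$PORT_RANGE" | while IFS= read -r cmd; do
    if [ "$DRY_RUN" -eq 1 ]; then
      echo "[$host] $cmd"
    else
      # -n stops ssh from reading the remaining commands from stdin.
      ssh -n "root@$host" "$cmd"
    fi
  done
done
```

Keeping the dry run as the default lets you review the exact commands for every host before changing any firewall settings.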