CloudStack is an open source software platform that pools computing resources to build public, private, and hybrid Infrastructure as a Service (IaaS) clouds. CloudStack manages the network, storage, and compute nodes that make up a cloud infrastructure. Use CloudStack to deploy, manage, and configure cloud computing environments.

Typical users are service providers and enterprises. With CloudStack, you can:

Set up an on-demand, elastic cloud computing service. Service providers can sell self-service virtual machine instances, storage volumes, and networking configurations over the Internet.

Set up an on-premise private cloud for use by employees. Rather than managing virtual machines in the same way as physical machines, with CloudStack an enterprise can offer self-service virtual machines to users without involving IT departments.

1.2. What Can CloudStack Do?

Multiple Hypervisor Support

CloudStack works with a variety of hypervisors, and a single cloud deployment can contain multiple hypervisor implementations. The current release of CloudStack supports pre-packaged enterprise solutions like Citrix XenServer and VMware vSphere, as well as KVM or Xen running on Ubuntu or CentOS.

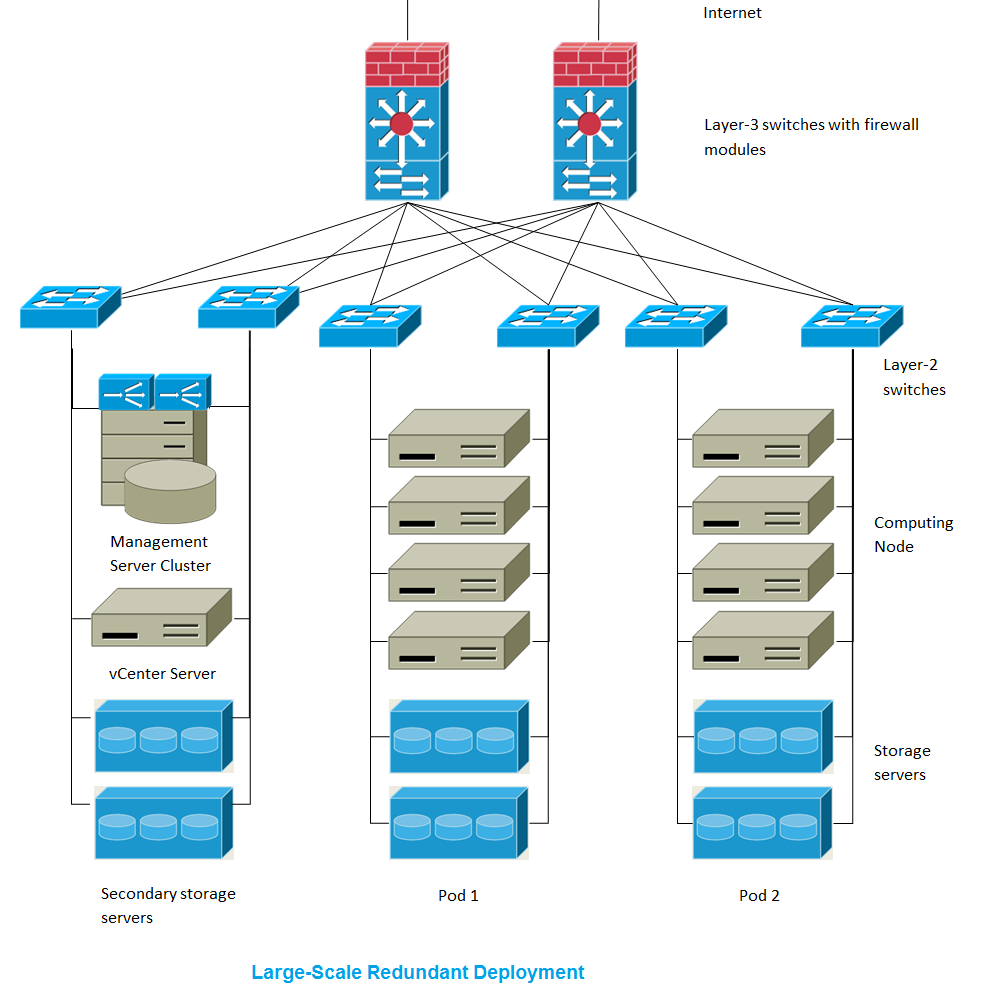

Massively Scalable Infrastructure Management

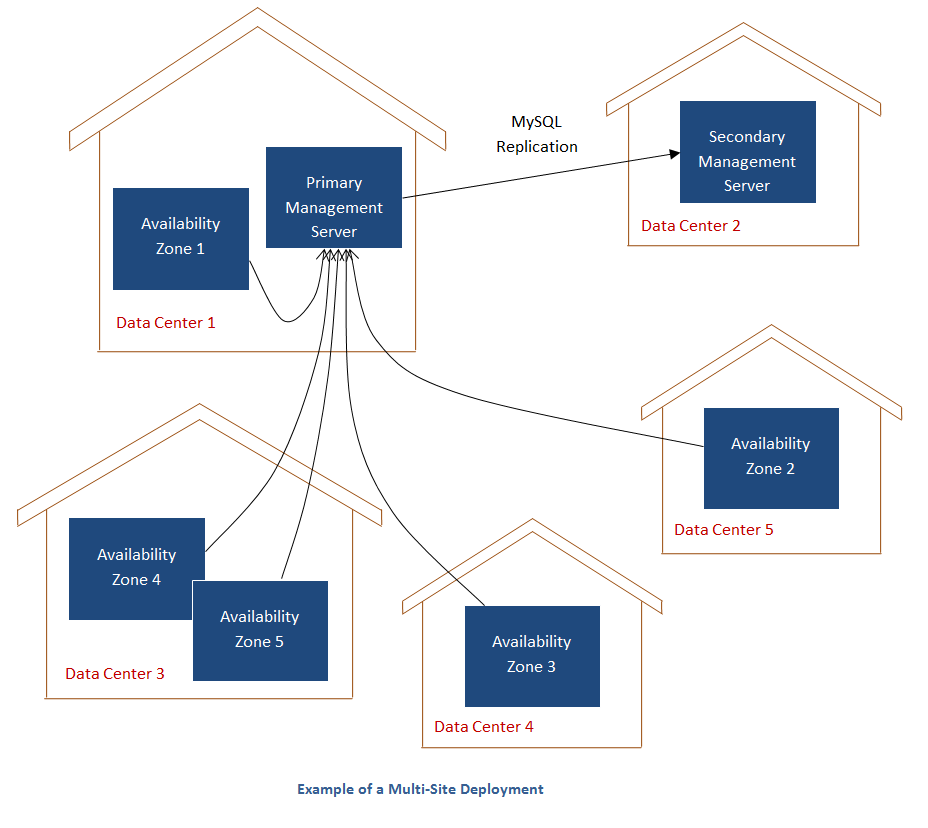

CloudStack can manage tens of thousands of servers installed in multiple geographically distributed datacenters. The centralized management server scales linearly, eliminating the need for intermediate cluster-level management servers. No single component failure can cause a cloud-wide outage. Periodic maintenance of the management server can be performed without affecting the functioning of virtual machines running in the cloud.

Automatic Configuration Management

CloudStack automatically configures each guest virtual machine’s networking and storage settings.

CloudStack internally manages a pool of virtual appliances to support the cloud itself. These appliances offer services such as firewalling, routing, DHCP, VPN access, console proxy, storage access, and storage replication. The extensive use of virtual appliances simplifies the installation, configuration, and ongoing management of a cloud deployment.

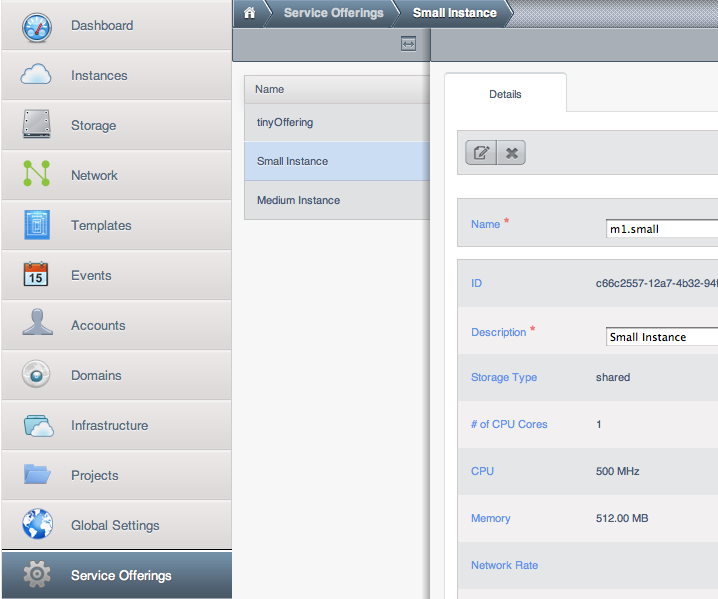

Graphical User Interface

CloudStack offers an administrator's Web interface, used for provisioning and managing the cloud, as well as an end-user's Web interface, used for running VMs and managing VM templates. The UI can be customized to reflect the desired service provider or enterprise look and feel.

API and Extensibility

CloudStack provides an API that gives programmatic access to all the management features available in the UI. The API is maintained and documented. This API enables the creation of command line tools and new user interfaces to suit particular needs. See the Developer’s Guide and API Reference, available at Apache CloudStack Guides and Apache CloudStack API Reference, respectively.
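To make programmatic access concrete, here is a minimal Python sketch of building a signed API request URL. It assumes the signing procedure described in the Developer's Guide: URL-encode each parameter value, sort the parameters by field name, lowercase the whole query string, sign it with HMAC-SHA1 using your secret key, then Base64-encode and URL-encode the signature. The endpoint, API key, and secret key are placeholders, and the server's encoder may treat a few unusual characters differently, so treat this as an illustration rather than a client library.

```python
import base64
import hashlib
import hmac
from urllib.parse import quote


def encode_value(value):
    """URL-encode a parameter value; quote() emits %20 for spaces, never '+'."""
    return quote(str(value), safe="")


def sign_request(params, secret_key):
    """Compute the Base64 HMAC-SHA1 signature for a dict of API parameters."""
    # Sort by field name, join as field=value pairs, then lowercase everything.
    pairs = [f"{k}={encode_value(params[k])}" for k in sorted(params, key=str.lower)]
    to_sign = "&".join(pairs).lower()
    mac = hmac.new(secret_key.encode("utf-8"), to_sign.encode("utf-8"), hashlib.sha1)
    return base64.b64encode(mac.digest()).decode("ascii")


def build_signed_url(endpoint, params, api_key, secret_key):
    """Return endpoint?query&signature=... with the signature URL-encoded."""
    full = dict(params, apiKey=api_key)
    query = "&".join(f"{k}={encode_value(v)}" for k, v in full.items())
    signature = encode_value(sign_request(full, secret_key))
    return f"{endpoint}?{query}&signature={signature}"


# Placeholder endpoint and keys; substitute your own.
url = build_signed_url(
    "http://localhost:8080/client/api",
    {"command": "listZones", "response": "json"},
    api_key="YOUR_API_KEY",
    secret_key="YOUR_SECRET_KEY",
)
print(url)
```

`listZones` is used only as a simple example command; with valid keys for an existing account, requesting the resulting URL with any HTTP client should return the zone list.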

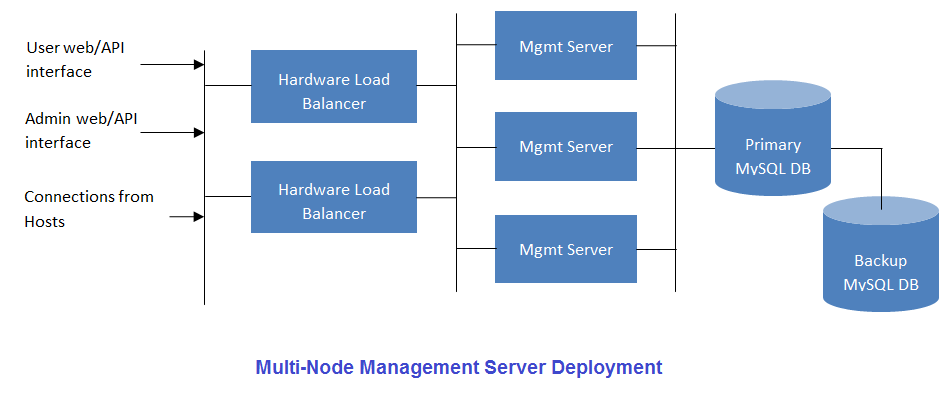

High Availability

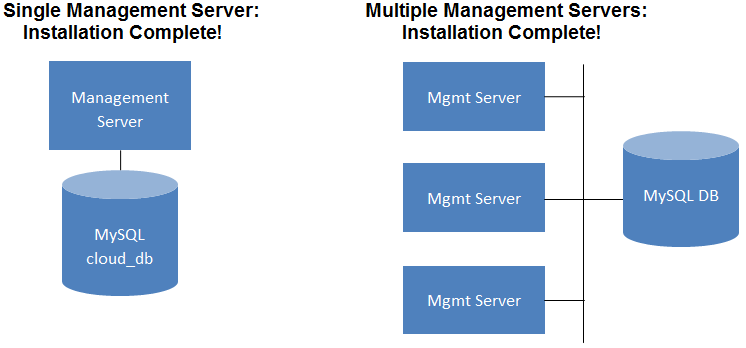

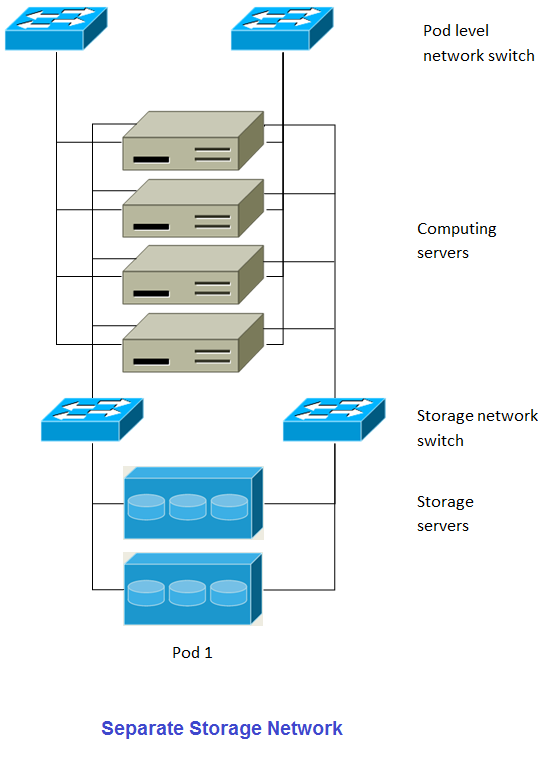

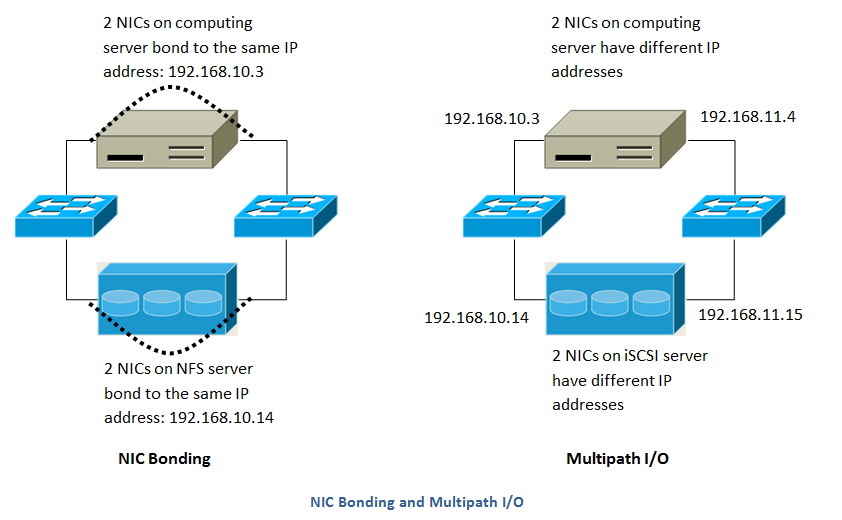

CloudStack has a number of features to increase the availability of the system. The Management Server itself may be deployed in a multi-node installation where the servers are load balanced. MySQL may be configured to use replication to provide for a manual failover in the event of database loss. For the hosts, CloudStack supports NIC bonding and the use of separate networks for storage as well as iSCSI Multipath.

1.3. Deployment Architecture Overview

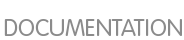

A CloudStack installation consists of two parts: the Management Server and the cloud infrastructure that it manages. When you set up and manage a CloudStack cloud, you provision resources such as hosts, storage devices, and IP addresses into the Management Server, and the Management Server manages those resources.

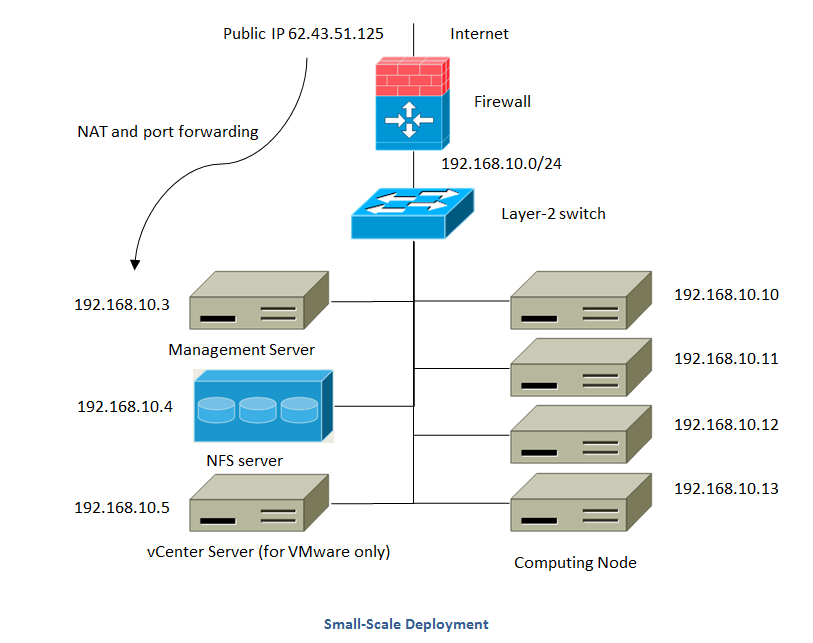

The minimum production installation consists of one machine running the CloudStack Management Server and another machine to act as the cloud infrastructure (in this case, a very simple infrastructure consisting of one host running hypervisor software). In its smallest deployment, a single machine can act as both the Management Server and the hypervisor host (using the KVM hypervisor).

A more full-featured installation consists of a highly-available multi-node Management Server installation and up to tens of thousands of hosts using any of several advanced networking setups. For information about deployment options, see the "Choosing a Deployment Architecture" section of the CloudStack Installation Guide.

1.3.1. Management Server Overview

The Management Server is the CloudStack software that manages cloud resources. By interacting with the Management Server through its UI or API, you can configure and manage your cloud infrastructure.

The Management Server runs on a dedicated server or VM. It controls allocation of virtual machines to hosts and assigns storage and IP addresses to the virtual machine instances. The Management Server runs in a Tomcat container and requires a MySQL database for persistence.

The machine must meet the system requirements described in System Requirements.

The Management Server:

Provides the web user interface for the administrator and a reference user interface for end users.

Provides the APIs for CloudStack.

Manages the assignment of guest VMs to particular hosts.

Manages the assignment of public and private IP addresses to particular accounts.

Manages the allocation of storage to guests as virtual disks.

Manages snapshots, templates, and ISO images, possibly replicating them across data centers.

Provides a single point of configuration for the cloud.

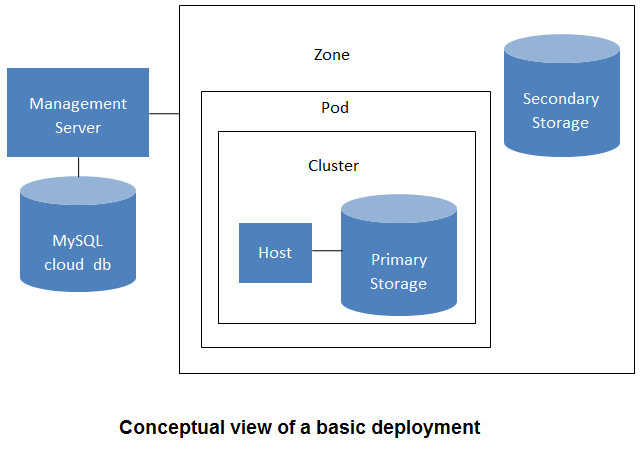

1.3.2. Cloud Infrastructure Overview

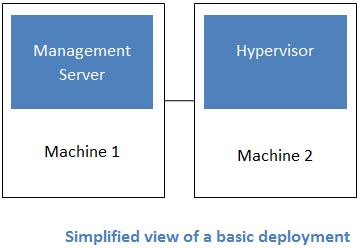

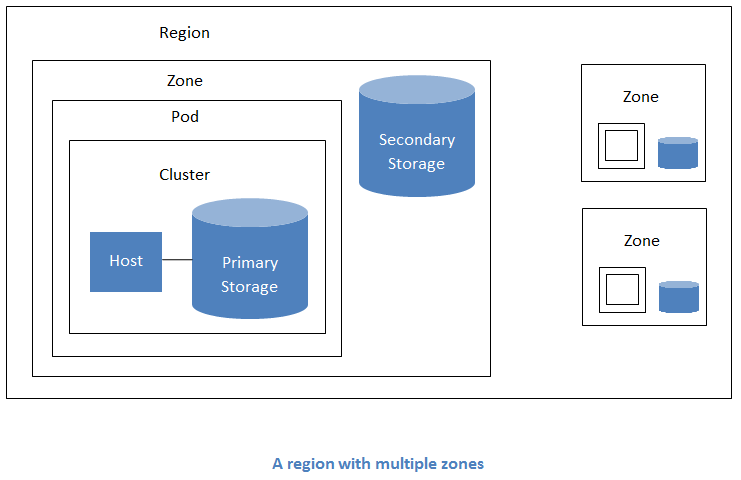

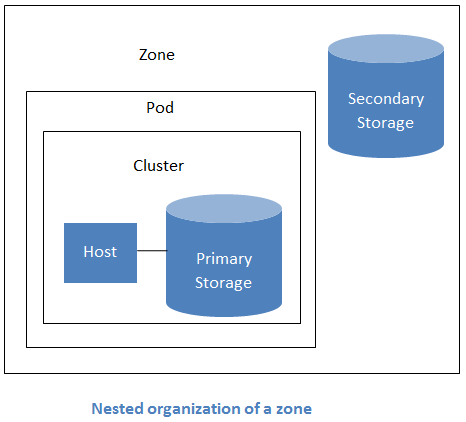

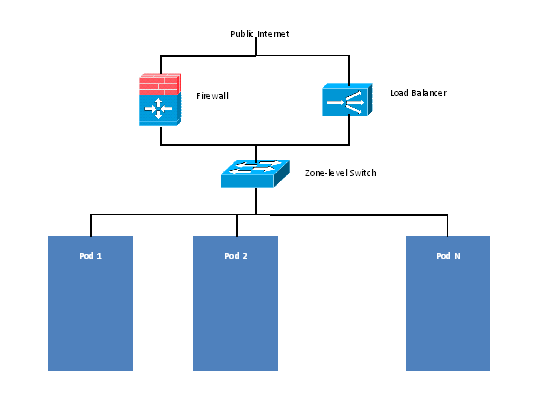

The Management Server manages one or more zones (typically, datacenters) containing host computers where guest virtual machines will run. The cloud infrastructure is organized as follows:

Zone: Typically, a zone is equivalent to a single datacenter. A zone consists of one or more pods and secondary storage.

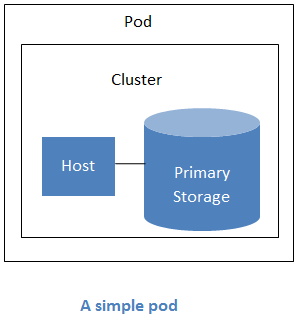

Pod: A pod is usually one rack of hardware that includes a layer-2 switch and one or more clusters.

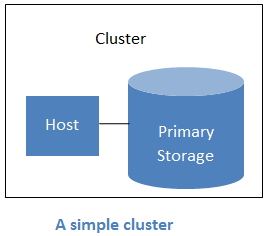

Cluster: A cluster consists of one or more hosts and primary storage.

Host: A single compute node within a cluster. The hosts are where the actual cloud services run in the form of guest virtual machines.

Primary storage is associated with a cluster, and it stores the disk volumes for all the VMs running on hosts in that cluster.

Secondary storage is associated with a zone, and it stores templates, ISO images, and disk volume snapshots.
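The containment rules above can be pictured as a simple tree. The following Python sketch is a hypothetical data model, not CloudStack's internal classes: each zone holds pods and secondary storage, each pod holds clusters, and each cluster holds hosts and primary storage. All names (`dc1`, `rack1`, and so on) are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class Host:
    name: str
    hypervisor: str


@dataclass
class Cluster:
    name: str
    hosts: List[Host] = field(default_factory=list)
    # Primary storage: disk volumes for VMs running on this cluster's hosts.
    primary_storage: List[str] = field(default_factory=list)


@dataclass
class Pod:
    name: str
    clusters: List[Cluster] = field(default_factory=list)


@dataclass
class Zone:
    name: str
    pods: List[Pod] = field(default_factory=list)
    # Secondary storage: templates, ISO images, and disk volume snapshots.
    secondary_storage: List[str] = field(default_factory=list)

    def hosts(self) -> Iterator[Host]:
        """Walk pods, then clusters, yielding every host in the zone."""
        for pod in self.pods:
            for cluster in pod.clusters:
                yield from cluster.hosts


zone = Zone(
    "dc1",
    pods=[Pod("rack1", clusters=[Cluster(
        "cluster1",
        hosts=[Host("host1", "KVM"), Host("host2", "KVM")],
        primary_storage=["nfs-primary-1"],
    )])],
    secondary_storage=["nfs-secondary-1"],
)
print([h.name for h in zone.hosts()])  # ['host1', 'host2']
```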

More Information

For more information, see documentation on cloud infrastructure concepts.

1.3.3. Networking Overview

CloudStack offers two types of networking scenarios:

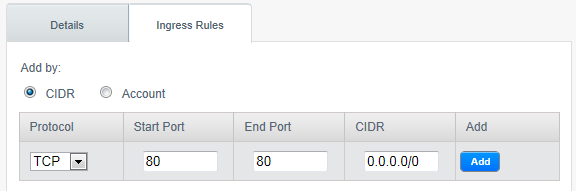

Basic. For AWS-style networking. Provides a single network where guest isolation can be provided through layer-3 means such as security groups (IP address source filtering).

Advanced. For more sophisticated network topologies. This network model provides the most flexibility in defining guest networks.

For more details, see Network Setup.

Chapter 2. Cloud Infrastructure Concepts

To increase reliability of the cloud, you can optionally group resources into multiple geographic regions. A region is the largest available organizational unit within a CloudStack deployment. A region is made up of several availability zones, where each zone is roughly equivalent to a datacenter. Each region is controlled by its own cluster of Management Servers, running in one of the zones. The zones in a region are typically located in close geographical proximity. Regions are a useful technique for providing fault tolerance and disaster recovery.

By grouping zones into regions, the cloud can achieve higher availability and scalability. User accounts can span regions, so that users can deploy VMs in multiple, widely-dispersed regions. Even if one of the regions becomes unavailable, the services are still available to the end-user through VMs deployed in another region. And by grouping communities of zones under their own nearby Management Servers, the latency of communications within the cloud is reduced compared to managing widely-dispersed zones from a single central Management Server.

Usage records can also be consolidated and tracked at the region level, creating reports or invoices for each geographic region.

Regions are visible to the end user. When a user starts a guest VM on a particular CloudStack Management Server, the user is implicitly selecting that region for their guest. Users might also be required to copy their private templates to additional regions to enable creation of guest VMs using their templates in those regions.

A zone is the second largest organizational unit within a CloudStack deployment. A zone typically corresponds to a single datacenter, although it is permissible to have multiple zones in a datacenter. The benefit of organizing infrastructure into zones is to provide physical isolation and redundancy. For example, each zone can have its own power supply and network uplink, and the zones can be widely separated geographically (though this is not required).

A zone consists of:

One or more pods. Each pod contains one or more clusters of hosts and one or more primary storage servers.

A zone may contain one or more primary storage servers, which are shared by all the pods in the zone.

Secondary storage, which is shared by all the pods in the zone.

Zones are visible to the end user. When a user starts a guest VM, the user must select a zone for their guest. Users might also be required to copy their private templates to additional zones to enable creation of guest VMs using their templates in those zones.

Zones can be public or private. Public zones are visible to all users. This means that any user may create a guest in that zone. Private zones are reserved for a specific domain. Only users in that domain or its subdomains may create guests in that zone.
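The private-zone rule amounts to a prefix check on the domain hierarchy. This sketch assumes, for illustration only, that domains are written as slash-separated paths such as `ROOT/CustomerA/Dev`; the function name and path format are hypothetical.

```python
from typing import Optional


def can_create_guest(user_domain: str, zone_domain: Optional[str]) -> bool:
    """Decide whether a user may create guests in a zone.

    zone_domain is None for a public zone; for a private zone it is the
    path of the domain the zone is reserved for, e.g. "ROOT/CustomerA".
    """
    if zone_domain is None:
        return True  # public zone: any user may create a guest here
    user_parts = user_domain.strip("/").split("/")
    zone_parts = zone_domain.strip("/").split("/")
    # Allowed only if the user's domain is the zone's domain or lies beneath it.
    return user_parts[:len(zone_parts)] == zone_parts


print(can_create_guest("ROOT/CustomerA/Dev", "ROOT/CustomerA"))  # True
print(can_create_guest("ROOT/CustomerB", "ROOT/CustomerA"))      # False
```

Comparing path components, rather than raw string prefixes, keeps a domain such as `ROOT/CustomerAB` from being treated as a subdomain of `ROOT/CustomerA`.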

Hosts in the same zone are directly accessible to each other without having to go through a firewall. Hosts in different zones can access each other through statically configured VPN tunnels.

For each zone, the administrator must decide the following:

How many pods to place in each zone.

How many clusters to place in each pod.

How many hosts to place in each cluster.

(Optional) How many primary storage servers to place in each zone and total capacity for these storage servers.

How many primary storage servers to place in each cluster and total capacity for these storage servers.

How much secondary storage to deploy in a zone.

When you add a new zone using the CloudStack UI, you will be prompted to configure the zone’s physical network and add the first pod, cluster, host, primary storage, and secondary storage.

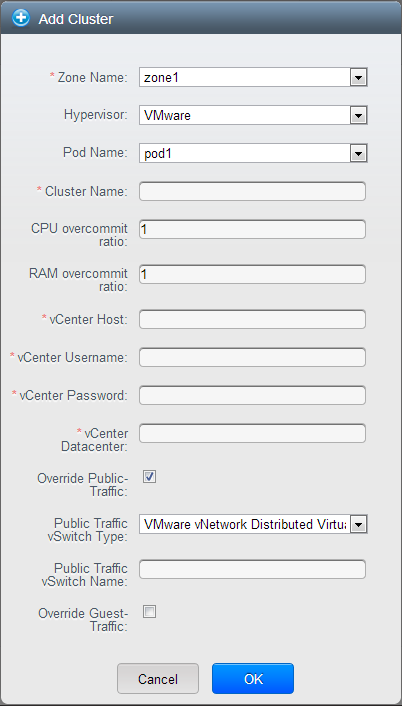

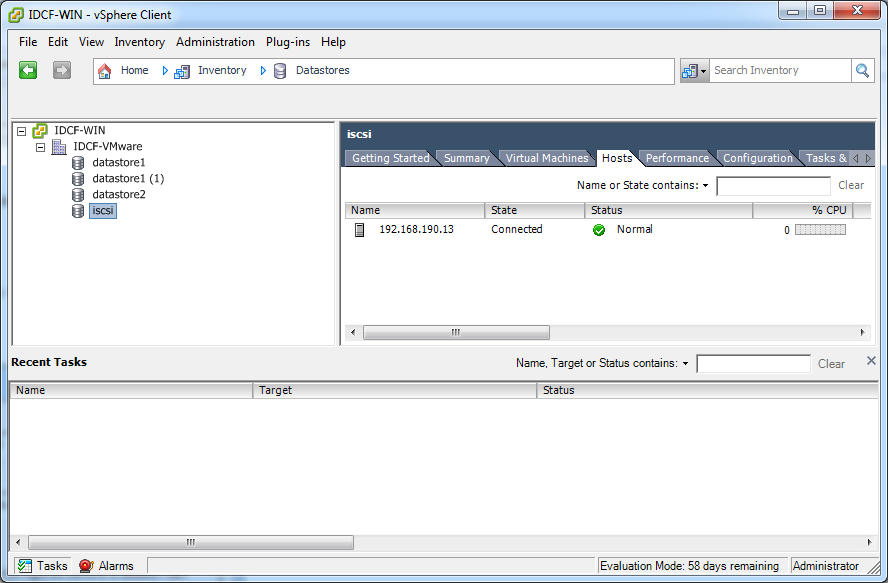

In order to support zone-wide functions for VMware, CloudStack is aware of VMware Datacenters and can map each Datacenter to a CloudStack zone. To enable features like storage live migration and zone-wide primary storage for VMware hosts, CloudStack has to make sure that a zone contains only a single VMware Datacenter. Therefore, when you are creating a new CloudStack zone, you can select a VMware Datacenter for the zone. If you are provisioning multiple VMware Datacenters, each one will be set up as a single zone in CloudStack.

If you are upgrading from a previous CloudStack version, and your existing deployment contains a zone with clusters from multiple VMware Datacenters, that zone will not be forcibly migrated to the new model. It will continue to function as before. However, any new zone-wide operations, such as zone-wide primary storage and live storage migration, will not be available in that zone.

A pod often represents a single rack. Hosts in the same pod are in the same subnet. A pod is the third-largest organizational unit within a CloudStack deployment, after regions and zones. Pods are contained within zones. Each zone can contain one or more pods. A pod consists of one or more clusters of hosts and one or more primary storage servers. Pods are not visible to the end user.

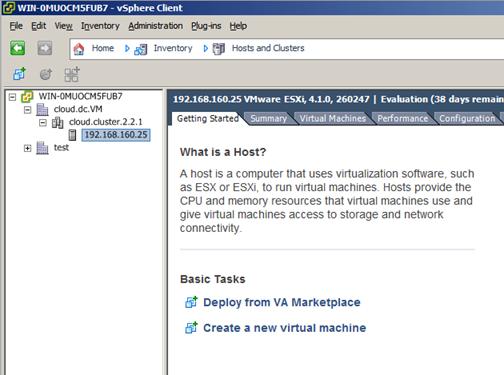

A cluster provides a way to group hosts. To be precise, a cluster is a XenServer server pool, a set of KVM servers, or a VMware cluster preconfigured in vCenter. The hosts in a cluster all have identical hardware, run the same hypervisor, are on the same subnet, and access the same shared primary storage. Virtual machine instances (VMs) can be live-migrated from one host to another within the same cluster, without interrupting service to the user.
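Because every host in a cluster must match the others, it can help to check a planned host list before adding it. The sketch below is a hypothetical pre-flight check, not a CloudStack feature; `hardware_model` stands in for "identical hardware", and the subnet, model, and storage names are invented.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PlannedHost:
    name: str
    hypervisor: str
    hardware_model: str   # stand-in for "identical hardware"
    ip: str
    primary_storage: str  # shared primary storage the host will use


def cluster_problems(hosts: List[PlannedHost], subnet_cidr: str) -> List[str]:
    """Return reasons why these hosts could not form a single cluster."""
    problems = []
    subnet = ipaddress.ip_network(subnet_cidr)
    # Every host must share the same hypervisor, hardware, and primary storage.
    for attr in ("hypervisor", "hardware_model", "primary_storage"):
        values = {getattr(h, attr) for h in hosts}
        if len(values) > 1:
            problems.append(f"mixed {attr}: {sorted(values)}")
    # Every host must sit on the same subnet.
    for h in hosts:
        if ipaddress.ip_address(h.ip) not in subnet:
            problems.append(f"{h.name} ({h.ip}) is outside {subnet}")
    return problems


planned = [
    PlannedHost("host1", "KVM", "model-a", "10.1.1.11", "nfs-primary-1"),
    PlannedHost("host2", "KVM", "model-a", "10.1.1.12", "nfs-primary-1"),
    PlannedHost("host3", "XenServer", "model-a", "10.1.2.13", "nfs-primary-1"),
]
for problem in cluster_problems(planned, "10.1.1.0/24"):
    print(problem)
```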

A cluster is the fourth-largest organizational unit within a CloudStack deployment. Clusters are contained within pods, and pods are contained within zones. The size of a cluster is limited by the underlying hypervisor, although CloudStack recommends fewer hosts in most cases; see Best Practices.

A cluster consists of one or more hosts and one or more primary storage servers.

CloudStack allows multiple clusters in a cloud deployment.

Clusters are still required organizationally even when local storage is used exclusively, and even if there is just one host per cluster.

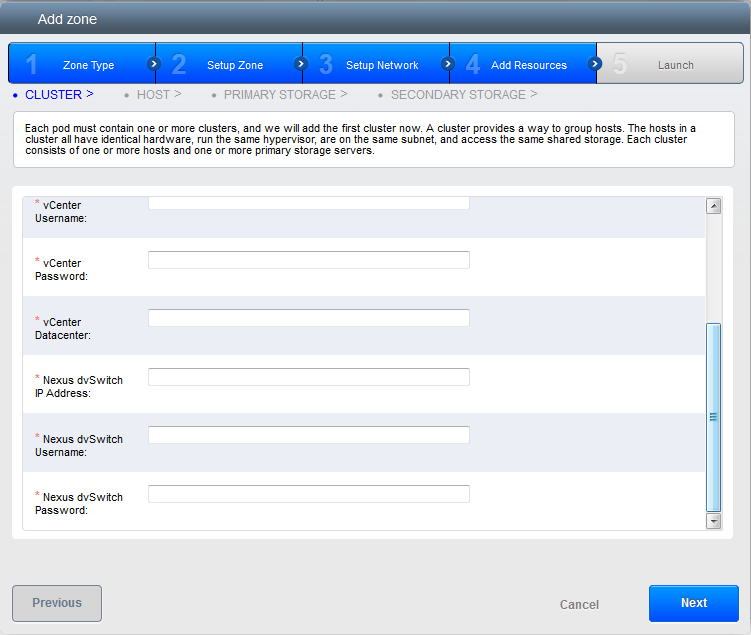

When VMware is used, every VMware cluster is managed by a vCenter server. An Administrator must register the vCenter server with CloudStack. There may be multiple vCenter servers per zone. Each vCenter server may manage multiple VMware clusters.

A host is a single computer. Hosts provide the computing resources that run the guest virtual machines. Each host has hypervisor software installed on it to manage the guest VMs. For example, a Linux KVM-enabled server, a Citrix XenServer server, and an ESXi server are hosts.

The host is the smallest organizational unit within a CloudStack deployment. Hosts are contained within clusters, clusters are contained within pods, and pods are contained within zones.

Hosts in a CloudStack deployment:

Provide the CPU, memory, storage, and networking resources needed to host the virtual machines

Interconnect using a high bandwidth TCP/IP network and connect to the Internet

May reside in multiple data centers across different geographic locations

May have different capacities (different CPU speeds, different amounts of RAM, etc.), although the hosts within a cluster must all be homogeneous

Additional hosts can be added at any time to provide more capacity for guest VMs.

CloudStack automatically detects the amount of CPU and memory resources provided by the hosts.

Hosts are not visible to the end user. An end user cannot determine which host their guest has been assigned to.

For a host to function in CloudStack, you must do the following:

Install hypervisor software on the host

Assign an IP address to the host

Ensure the host is connected to the CloudStack Management Server

2.6. About Primary Storage

Primary storage is associated with a cluster and/or a zone. It stores the disk volumes for all of the VMs running on hosts in that cluster or zone. You can add multiple primary storage servers to a cluster or a zone (at least one is required at the cluster level). Primary storage is typically located close to the hosts for increased performance. CloudStack manages the allocation of guest virtual disks to particular primary storage devices.

Primary storage uses the concept of a storage tag. A storage tag is a label that is used to identify the primary storage. Each primary storage can be associated with zero, one, or more storage tags. When a VM is spun up, or a data disk is attached to a VM for the first time, these tags, if supplied, are used to determine which primary storage can support the VM or data disk (for example, when you need to guarantee a certain number of IOPS to a particular volume).
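Tag-based placement can be sketched as a subset test. This example assumes a pool qualifies only when it carries every requested tag; CloudStack's real storage allocators also weigh capacity, scope, and other factors, and the pool names and tags here are hypothetical.

```python
from typing import Dict, Iterable, List, Set


def matching_pools(pools: Dict[str, Set[str]], required_tags: Iterable[str]) -> List[str]:
    """Return the names of pools whose tags include every required tag.

    With no required tags, every pool qualifies.
    """
    required = set(required_tags)
    return sorted(name for name, tags in pools.items() if required <= set(tags))


# Hypothetical pools and tags.
pools = {
    "fast-ssd": {"ssd", "high-iops"},
    "bulk-nfs": {"hdd"},
    "untagged": set(),
}
print(matching_pools(pools, ["high-iops"]))  # ['fast-ssd']
print(matching_pools(pools, []))             # ['bulk-nfs', 'fast-ssd', 'untagged']
```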

Primary storage can be either static or dynamic. Static primary storage is what CloudStack has traditionally supported. In this model, the administrator must present CloudStack with a certain amount of preallocated storage (for example, a volume from a SAN) and CloudStack can place many of its volumes on this storage. In the newer, dynamic model, the administrator can present CloudStack with a storage system itself (for example, a SAN). CloudStack, working in concert with a plug-in developed for that storage system, can dynamically create volumes on the storage system. A valuable use for this ability is Quality of Service (QoS). If a volume created in CloudStack can be backed by a dedicated volume on a SAN (that is, a one-to-one mapping between a SAN volume and a CloudStack volume) and the SAN provides QoS, then CloudStack can provide QoS.

CloudStack is designed to work with all standards-compliant iSCSI and NFS servers that are supported by the underlying hypervisor, including, for example:

If you intend to use only local disk for your installation, you can skip to Add Secondary Storage.

2.7. About Secondary Storage

Secondary storage stores the following:

Templates — OS images that can be used to boot VMs and can include additional configuration information, such as installed applications

ISO images — disc images containing data or bootable media for operating systems

Disk volume snapshots — saved copies of VM data which can be used for data recovery or to create new templates

The items in secondary storage are available to all hosts in the scope of the secondary storage, which may be defined per zone or per region.

To make items in secondary storage available to all hosts throughout the cloud, you can add object storage in addition to the zone-based NFS Secondary Staging Store. It is not necessary to copy templates and snapshots from one zone to another, as would be required when using zone NFS alone. Everything is available everywhere.

CloudStack provides plugins that enable both OpenStack Object Storage (Swift, swift.openstack.org) and Amazon Simple Storage Service (S3) object storage. When using one of these storage plugins, you configure Swift or S3 storage for the entire CloudStack deployment, then set up the NFS Secondary Staging Store for each zone. The NFS storage in each zone acts as a staging area through which all templates and other secondary storage data pass before being forwarded to Swift or S3. The backing object storage acts as a cloud-wide resource, making templates and other data available to any zone in the cloud.

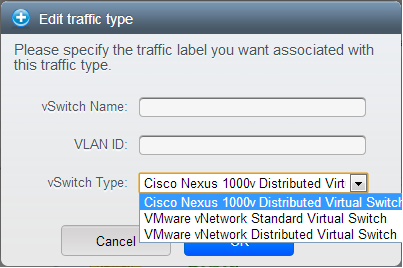

2.8. About Physical Networks

Part of adding a zone is setting up the physical network. One or more physical networks can be associated with each zone, although only an advanced zone can have more than one. Each physical network corresponds to a NIC on the hypervisor host and can carry one or more types of network traffic. The choices of traffic type for each network vary depending on whether you are creating a zone with basic networking or advanced networking.

A physical network is the actual network hardware and wiring in a zone. A zone can have multiple physical networks. An administrator can:

Add/Remove/Update physical networks in a zone

Configure VLANs on the physical network

Configure a name so the network can be recognized by hypervisors

Configure the service providers (firewalls, load balancers, etc.) available on a physical network

Configure the IP addresses trunked to a physical network

Specify what type of traffic is carried on the physical network, as well as other properties like network speed

2.8.1. Basic Zone Network Traffic Types

When basic networking is used, there can be only one physical network in the zone. That physical network carries the following traffic types:

Guest. When end users run VMs, they generate guest traffic. The guest VMs communicate with each other over a network that can be referred to as the guest network. Each pod in a basic zone is a broadcast domain, and therefore each pod has a different IP range for the guest network. The administrator must configure the IP range for each pod.

Management. When CloudStack's internal resources communicate with each other, they generate management traffic. This includes communication between hosts, system VMs (VMs used by CloudStack to perform various tasks in the cloud), and any other component that communicates directly with the CloudStack Management Server. You must configure the IP range for the system VMs to use.

We strongly recommend the use of separate NICs for management traffic and guest traffic.

Public. Public traffic is generated when VMs in the cloud access the Internet. Publicly accessible IPs must be allocated for this purpose. End users can use the CloudStack UI to acquire these IPs to implement NAT between their guest network and the public network, as described in Acquiring a New IP Address.

Storage. Although labeled "storage," this traffic type applies only to secondary storage and does not affect traffic for primary storage. It includes traffic such as VM templates and snapshots, which is sent between the secondary storage VM and secondary storage servers. CloudStack uses a separate Network Interface Controller (NIC), called the storage NIC, for storage network traffic. Using a storage NIC that always operates on a high-bandwidth network allows fast template and snapshot copying. You must configure the IP range to use for the storage network.

In a basic network, configuring the physical network is fairly straightforward. In most cases, you only need to configure one guest network to carry traffic that is generated by guest VMs. If you use a NetScaler load balancer and enable its elastic IP and elastic load balancing (EIP and ELB) features, you must also configure a network to carry public traffic. CloudStack takes care of presenting the necessary network configuration steps to you in the UI when you add a new zone.

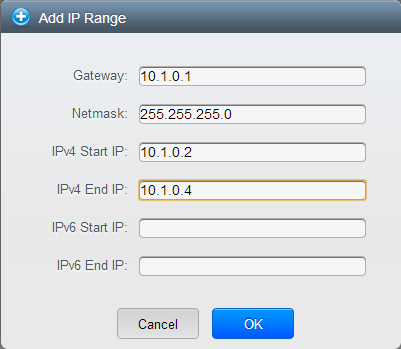

2.8.2. Basic Zone Guest IP Addresses

When basic networking is used, CloudStack will assign IP addresses in the CIDR of the pod to the guests in that pod. The administrator must add a Direct IP range on the pod for this purpose. These IPs are in the same VLAN as the hosts.
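A rough model of this behavior is an allocator that hands out addresses from a pod's Direct IP range and rejects a range that lies outside the pod CIDR. The class below is a hypothetical sketch, not CloudStack's allocator, and the addresses are examples.

```python
import ipaddress


class PodGuestRange:
    """Hypothetical allocator for one pod's Direct IP range (sketch only)."""

    def __init__(self, pod_cidr, start, end):
        self.cidr = ipaddress.ip_network(pod_cidr)
        self.start = ipaddress.ip_address(start)
        self.end = ipaddress.ip_address(end)
        if self.start not in self.cidr or self.end not in self.cidr or self.start > self.end:
            raise ValueError("the Direct IP range must lie inside the pod CIDR")
        self.in_use = set()

    def allocate(self):
        """Return the lowest free address in the range as a string."""
        for n in range(int(self.start), int(self.end) + 1):
            ip = ipaddress.ip_address(n)
            if ip not in self.in_use:
                self.in_use.add(ip)
                return str(ip)
        raise RuntimeError("the Direct IP range is exhausted")

    def release(self, ip):
        """Return an address to the range, e.g. when a guest is destroyed."""
        self.in_use.discard(ipaddress.ip_address(ip))


pod1 = PodGuestRange("10.1.1.0/24", "10.1.1.100", "10.1.1.101")
print(pod1.allocate(), pod1.allocate())  # 10.1.1.100 10.1.1.101
```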

2.8.3. Advanced Zone Network Traffic Types

When advanced networking is used, there can be multiple physical networks in the zone. Each physical network can carry one or more traffic types, and you need to let CloudStack know which type of network traffic you want each network to carry. The traffic types in an advanced zone are:

Guest. When end users run VMs, they generate guest traffic. The guest VMs communicate with each other over a network that can be referred to as the guest network. This network can be isolated or shared. In an isolated guest network, the administrator needs to reserve VLAN ranges to provide isolation for each CloudStack account’s network (potentially a large number of VLANs). In a shared guest network, all guest VMs share a single network.

Management. When CloudStack’s internal resources communicate with each other, they generate management traffic. This includes communication between hosts, system VMs (VMs used by CloudStack to perform various tasks in the cloud), and any other component that communicates directly with the CloudStack Management Server. You must configure the IP range for the system VMs to use.

Public. Public traffic is generated when VMs in the cloud access the Internet. Publicly accessible IPs must be allocated for this purpose. End users can use the CloudStack UI to acquire these IPs to implement NAT between their guest network and the public network, as described in “Acquiring a New IP Address” in the Administration Guide.

Storage. Although labeled "storage," this traffic type applies only to secondary storage and does not affect traffic for primary storage. It includes traffic such as VM templates and snapshots, which is sent between the secondary storage VM and secondary storage servers. CloudStack uses a separate Network Interface Controller (NIC), called the storage NIC, for storage network traffic. Using a storage NIC that always operates on a high-bandwidth network allows fast template and snapshot copying. You must configure the IP range to use for the storage network.

These traffic types can each be on a separate physical network, or they can be combined with certain restrictions. When you use the Add Zone wizard in the UI to create a new zone, you are guided into making only valid choices.

2.8.4. Advanced Zone Guest IP Addresses

When advanced networking is used, the administrator can create additional networks for use by the guests. These networks can span the zone and be available to all accounts, or they can be scoped to a single account, in which case only the named account may create guests that attach to these networks. The networks are defined by a VLAN ID, IP range, and gateway. The administrator may provision thousands of these networks if desired. Additionally, the administrator can reserve a part of the IP address space for non-CloudStack VMs and servers.

2.8.5. Advanced Zone Public IP Addresses

When advanced networking is used, the administrator can create additional networks for use by the guests. These networks can span the zone and be available to all accounts, or they can be scoped to a single account, in which case only the named account may create guests that attach to these networks. The networks are defined by a VLAN ID, IP range, and gateway. The administrator may provision thousands of these networks if desired.

2.8.6. System Reserved IP Addresses

In each zone, you need to configure a range of reserved IP addresses for the management network. This network carries communication between the CloudStack Management Server and various system VMs, such as Secondary Storage VMs, Console Proxy VMs, and DHCP.

The reserved IP addresses must be unique across the cloud. You cannot, for example, have a host in one zone which has the same private IP address as a host in another zone.

The hosts in a pod are assigned private IP addresses. These are typically RFC1918 addresses. The Console Proxy and Secondary Storage system VMs are also allocated private IP addresses in the CIDR of the pod that they are created in.

Make sure computing servers and Management Servers use IP addresses outside of the System Reserved IP range. For example, suppose the System Reserved IP range starts at 192.168.154.2 and ends at 192.168.154.7. CloudStack can use .2 to .7 for System VMs. This leaves the rest of the pod CIDR, from .8 to .254, for the Management Server and hypervisor hosts.
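The example above can be checked with Python's `ipaddress` module. This sketch assumes the pod CIDR is 192.168.154.0/24 (implied by the .8 to .254 remainder) and that .1 is the pod gateway, so it falls in neither list; the function name is hypothetical.

```python
import ipaddress


def split_pod_cidr(pod_cidr, reserved_start, reserved_end):
    """Return (system_reserved, after_reserved) address lists for a pod."""
    net = ipaddress.ip_network(pod_cidr)
    start = ipaddress.ip_address(reserved_start)
    end = ipaddress.ip_address(reserved_end)
    if start not in net or end not in net or start > end:
        raise ValueError("the reserved range must lie inside the pod CIDR")
    usable = list(net.hosts())  # excludes the network and broadcast addresses
    reserved = [ip for ip in usable if start <= ip <= end]
    after = [ip for ip in usable if ip > end]
    return reserved, after


reserved, rest = split_pod_cidr("192.168.154.0/24", "192.168.154.2", "192.168.154.7")
print(len(reserved), rest[0], rest[-1])  # 6 192.168.154.8 192.168.154.254
```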

In all zones:

Provide private IPs for the system in each pod and provision them in CloudStack.

For KVM and XenServer, the recommended number of private IPs per pod is one per host. If you expect a pod to grow, add enough private IPs now to accommodate the growth.

In a zone that uses advanced networking:

For zones with advanced networking, we recommend provisioning enough private IPs for your total number of customers, plus enough for the required CloudStack System VMs. Typically, about 10 additional IPs are required for the System VMs. For more information about System VMs, see the section on working with SystemVMs in the Administrator's Guide.

When advanced networking is being used, the number of private IP addresses available in each pod varies depending on which hypervisor is running on the nodes in that pod. Citrix XenServer and KVM use link-local addresses, which in theory provide more than 65,000 private IP addresses within the address block. As the pod grows over time, this should be more than enough for any reasonable number of hosts as well as IP addresses for guest virtual routers. VMware ESXi, by contrast, uses any administrator-specified subnetting scheme, and the typical administrator provides only 255 IPs per pod. Since these are shared by physical machines, the guest virtual router, and other entities, it is possible to run out of private IPs when scaling up a pod whose nodes are running ESXi.

To ensure adequate headroom to scale private IP space in an ESXi pod that uses advanced networking, use one or both of the following techniques:

Specify a larger CIDR block for the subnet. A subnet mask with a /20 suffix will provide more than 4,000 IP addresses.

Create multiple pods, each with its own subnet. For example, if you create 10 pods and each pod has 255 IPs, this will provide 2,550 IP addresses.
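The address counts in this section can be reproduced with `ipaddress`, together with the advanced-zone rule of thumb of one private IP per customer plus about 10 for System VMs. The example assumes the standard 169.254.0.0/16 link-local block; the 10.10.0.0/20 subnet and the helper `enough_private_ips` are illustrative. Note that `num_addresses` counts every address in a block, including the network and broadcast addresses.

```python
import ipaddress


def block_size(cidr):
    """Number of addresses in a CIDR block, including network and broadcast."""
    return ipaddress.ip_network(cidr).num_addresses


def enough_private_ips(available, customers, system_vm_ips=10):
    """Rule of thumb for advanced zones: one IP per customer plus ~10 for System VMs."""
    return available >= customers + system_vm_ips


link_local = block_size("169.254.0.0/16")   # standard link-local block
larger_subnet = block_size("10.10.0.0/20")  # example /20 subnet for an ESXi pod
ten_pods = 10 * 255                         # ten pods of 255 IPs each, as in the text

print(link_local, larger_subnet, ten_pods)     # 65536 4096 2550
print(enough_private_ips(254, customers=200))  # True
```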

Chapter 3. Building from Source

The official CloudStack release is always in source code form. Although you will likely be able to find "convenience binaries," the source is the canonical release. In this section, we'll cover acquiring the source release and building it so that you can deploy it using Maven or create Debian packages or RPMs.

Note that building and deploying directly from source is typically not the most efficient way to deploy an IaaS. However, we will cover that method as well as building RPMs or Debian packages for deploying CloudStack.

The instructions here are likely version-specific. That is, the method for building from source for the 4.0.x series is different from the 4.1.x series.

If you are working with an unreleased version of CloudStack, see the INSTALL.md file in the top-level directory of the release.

Prior releases are available via archive.apache.org as well. See the downloads page for more information on archived releases.

You'll notice several links under the 'Latest release' section: a link to a file ending in tar.bz2, as well as a PGP/GPG signature, an MD5 file, and a SHA512 file.

The tar.bz2 file contains the Bzip2-compressed tarball with the source code.

The .asc file is a detached cryptographic signature that can be used to help verify the authenticity of the release.

The .md5 file is an MD5 hash of the release to help verify the validity of the release download.

The .sha file is a SHA512 hash of the release to help verify the validity of the release download.

3.2. Verifying the downloaded release

There are a number of mechanisms to check the authenticity and validity of a downloaded release.

To enable you to verify the GPG signature, you will need to download the KEYS file.

You next need to import those keys, which you can do by running:

$ gpg --import KEYS

The CloudStack project provides a detached GPG signature of the release. To check the signature, run the following command:

$ gpg --verify apache-cloudstack-4.0.0-incubating-src.tar.bz2.asc

If the signature is valid you will see a line of output that contains 'Good signature'.

In addition to the cryptographic signature, CloudStack has an MD5 checksum that you can use to verify the download matches the release. You can verify this hash by executing the following command:

$ gpg --print-md MD5 apache-cloudstack-4.0.0-incubating-src.tar.bz2 | diff - apache-cloudstack-4.0.0-incubating-src.tar.bz2.md5

If the command completes successfully, you should see no output. Any output indicates a difference between the hash you generated locally and the hash that was pulled from the server.

In addition to the MD5 hash, the CloudStack project provides a SHA512 cryptographic hash to aid in assurance of the validity of the downloaded release. You can verify this hash by executing the following command:

$ gpg --print-md SHA512 apache-cloudstack-4.0.0-incubating-src.tar.bz2 | diff - apache-cloudstack-4.0.0-incubating-src.tar.bz2.sha

If the command completes successfully, you should see no output. Any output indicates a difference between the hash you generated locally and the hash that was pulled from the server.
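If `gpg` is not at hand, the SHA512 comparison can also be sketched in Python with `hashlib`. This assumes the .sha file uses the layout produced by `gpg --print-md` (the file name, a colon, then the hex digest in whitespace-separated groups, possibly over several lines); the function names are hypothetical, and this check does not replace verifying the GPG signature.

```python
import hashlib
from pathlib import Path


def sha512_hex(path, chunk_size=1 << 20):
    """Compute a file's SHA-512 digest, reading it in chunks (1 MiB by default)."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def digest_from_sha_file(text):
    """Pull the hex digest out of 'name: HEX HEX ...' text (assumed gpg layout)."""
    _, sep, rest = text.partition(":")
    body = rest if sep else text
    # Drop spaces and line breaks between groups; compare in lowercase.
    return "".join(body.split()).lower()


def verify_release(tarball, sha_file):
    """True if the tarball's SHA-512 digest matches the one in sha_file."""
    return sha512_hex(tarball) == digest_from_sha_file(Path(sha_file).read_text())
```

For example, `verify_release("apache-cloudstack-4.0.0-incubating-src.tar.bz2", "apache-cloudstack-4.0.0-incubating-src.tar.bz2.sha")` should return True for an intact download.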

3.3. Prerequisites for building Apache CloudStack

There are a number of prerequisites needed to build CloudStack. This document assumes compilation on a Linux system that uses RPMs or DEBs for package management.

You will need, at a minimum, the following to compile CloudStack:

Maven (version 3)

Java (OpenJDK 1.6 or Java 7/OpenJDK 1.7)

Apache Web Services Common Utilities (ws-commons-util)

MySQL

MySQLdb (provides Python database API)

Tomcat 6 (not 6.0.35)

genisoimage

rpmbuild or dpkg-dev

3.5. Building DEB packages

In addition to the bootstrap dependencies, you'll also need to install several other dependencies. Note that we recommend using Maven 3, which is not currently available in Ubuntu 12.04.1 LTS, so you'll also need to add a PPA repository that includes Maven 3. After you run add-apt-repository, you will be prompted to continue and a GPG key will be added.

$ sudo apt-get update

$ sudo apt-get install python-software-properties

$ sudo add-apt-repository ppa:natecarlson/maven3

$ sudo apt-get update

$ sudo apt-get install ant debhelper openjdk-6-jdk tomcat6 libws-commons-util-java genisoimage python-mysqldb libcommons-codec-java libcommons-httpclient-java liblog4j1.2-java maven3

While we have defined, and you have presumably already installed, the bootstrap prerequisites, there are a number of build-time prerequisites that need to be resolved. CloudStack uses Maven for dependency resolution. You can resolve the build-time dependencies for CloudStack by running:

$ mvn3 -P deps

Now that we have resolved the dependencies, we can move on to building CloudStack and packaging it into DEBs by issuing the following command:

$ dpkg-buildpackage -uc -us

This command builds the following Debian packages. You should have all of them:

cloudstack-common-4.2.0.amd64.deb

cloudstack-management-4.2.0.amd64.deb

cloudstack-agent-4.2.0.amd64.deb

cloudstack-usage-4.2.0.amd64.deb

cloudstack-awsapi-4.2.0.amd64.deb

cloudstack-cli-4.2.0.amd64.deb

cloudstack-docs-4.2.0.amd64.deb
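To confirm that the build produced every package, you can compare the build output directory against the component names in the list above. A minimal sketch, assuming the packages all begin with `cloudstack-<component>` followed by `-` or `_`; it deliberately ignores the version and architecture parts of the file name, which vary between releases and packaging tools. `missing_packages` is a hypothetical helper name.

```python
from pathlib import Path

# Component names from the package list above.
EXPECTED_COMPONENTS = ["common", "management", "agent", "usage",
                       "awsapi", "cli", "docs"]


def missing_packages(build_dir: str, suffix: str = ".deb") -> list[str]:
    """Return the expected cloudstack-<component> packages absent from build_dir."""
    names = [p.name for p in Path(build_dir).glob(f"cloudstack-*{suffix}")]
    missing = []
    for component in EXPECTED_COMPONENTS:
        prefix = f"cloudstack-{component}"
        # Accept "cloudstack-agent-4.2.0..." as well as "cloudstack-agent_4.2.0...".
        if not any(n.startswith((prefix + "-", prefix + "_")) for n in names):
            missing.append(prefix)
    return missing
```

An empty list means all seven packages are present. The same check can be pointed at the RPM output directory by passing `suffix=".rpm"`.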

3.5.1. Setting up an APT repo

After you've created the packages, you'll want to copy them to a system where you can serve the packages over HTTP. You'll create a directory for the packages and then use dpkg-scanpackages to create Packages.gz, which holds information about the archive structure. Finally, you'll add the repository to your system(s) so you can install the packages using APT.

The first step is to make sure that you have the dpkg-dev package installed. This should have been installed when you pulled in the debhelper application previously, but if you're generating Packages.gz on a different system, be sure that it's installed there as well.

$ sudo apt-get install dpkg-dev

The next step is to copy the DEBs to the directory where they can be served over HTTP. We'll use /var/www/cloudstack/repo in the examples, but change the directory to whatever works for you.

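$ sudo mkdir -p /var/www/cloudstack/repo/binary

$ sudo cp *.deb /var/www/cloudstack/repo/binary

$ cd /var/www/cloudstack/repo/binary

$ sudo sh -c 'dpkg-scanpackages . /dev/null | tee Packages | gzip -9 > Packages.gz'

cd is a shell built-in, so it cannot be run through sudo. The pipeline is wrapped in sudo sh -c because the directory is owned by root; otherwise tee and the > redirection would run as your own user and fail to write Packages and Packages.gz.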

You can safely ignore the warning about a missing override file.

Now you should have all of the DEB packages and Packages.gz in the binary directory and available over HTTP. (You may want to use wget or curl to test this before moving on to the next step.)
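Beyond fetching the file with wget or curl, you can check that Packages.gz actually advertises the CloudStack packages. A minimal sketch using only the Python standard library, assuming the web server's document root is /var/www, so that /var/www/cloudstack/repo/binary is served at http://server.url/cloudstack/repo/binary (server.url is the placeholder used in the next section). `package_names` and `fetch_package_names` are hypothetical helper names.

```python
import gzip
import urllib.request


def package_names(packages_gz: bytes) -> list[str]:
    """Return the value of every "Package:" field in a Packages.gz payload."""
    text = gzip.decompress(packages_gz).decode("utf-8")
    return [line.split(":", 1)[1].strip()
            for line in text.splitlines()
            if line.startswith("Package:")]


def fetch_package_names(repo_url: str, timeout: float = 10.0) -> list[str]:
    """Download <repo_url>/Packages.gz and list the packages it advertises."""
    url = repo_url.rstrip("/") + "/Packages.gz"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return package_names(resp.read())
```

For example, `fetch_package_names("http://server.url/cloudstack/repo/binary")` should list cloudstack-common, cloudstack-management, and the other packages you copied into the directory. An HTTP error here usually means the path or document root differs from this assumption.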

3.5.2. Configuring your machines to use the APT repository

Now that we have created the repository, you need to configure your machine to make use of the APT repository. You can do this by adding a repository file under /etc/apt/sources.list.d. Use your preferred editor to create /etc/apt/sources.list.d/cloudstack.list with this line:

deb http://server.url/cloudstack/repo binary ./

Now that you have the repository info in place, you'll want to run another update so that APT knows where to find the CloudStack packages.

$ sudo apt-get update

You can now move on to the instructions under Install on Ubuntu.

3.6. Building RPMs from Source

First, install the build prerequisites. These commands assume a 64-bit CentOS or RHEL system:

# yum groupinstall "Development Tools"

# yum install java-1.6.0-openjdk-devel.x86_64 genisoimage mysql mysql-server ws-commons-util MySQL-python tomcat6 createrepo

Next, you'll need to install build-time dependencies for CloudStack with Maven. We're using Maven 3, so you'll want to grab a Maven 3 tarball and uncompress it in your home directory (or whatever location you prefer, adjusting PATH to match):

$ tar zxvf apache-maven-3.0.4-bin.tar.gz

$ export PATH=$HOME/apache-maven-3.0.4/bin:$PATH

Maven also needs to know where Java is, and expects the JAVA_HOME environment variable to be set:

$ export JAVA_HOME=/usr/lib/jvm/jre-1.6.0-openjdk.x86_64/

Verify that Maven is installed correctly:

$ mvn --version

You probably want to ensure that your environment variables will survive a logout/reboot. Be sure to update ~/.bashrc with the PATH and JAVA_HOME variables.

Building RPMs for CloudStack is fairly simple. Assuming you already have the source downloaded and have uncompressed the tarball into a local directory, you're going to be able to generate packages in just a few minutes.

If you've created packages for CloudStack previously, you should be aware that the process has changed considerably since the project has moved to using Apache Maven. Please be sure to follow the steps in this section closely.

Now that we have the prerequisites and source, change to the packaging/centos63/ directory.

$ cd packaging/centos63

Generating RPMs is done using the package.sh script:

$ ./package.sh

That will run for a bit and then place the finished packages in dist/rpmbuild/RPMS/x86_64/.

You should see the following RPMs in that directory:

cloudstack-agent-4.2.0.el6.x86_64.rpm

cloudstack-awsapi-4.2.0.el6.x86_64.rpm

cloudstack-cli-4.2.0.el6.x86_64.rpm

cloudstack-common-4.2.0.el6.x86_64.rpm

cloudstack-docs-4.2.0.el6.x86_64.rpm

cloudstack-management-4.2.0.el6.x86_64.rpm

cloudstack-usage-4.2.0.el6.x86_64.rpm

3.6.1.1. Creating a yum repo

While RPM is a useful packaging format, it's most easily consumed from Yum repositories over a network. The next step is to create a Yum repository with the finished packages:

$ mkdir -p ~/tmp/repo

$ cp dist/rpmbuild/RPMS/x86_64/*rpm ~/tmp/repo/

$ createrepo ~/tmp/repo

The files and directories within ~/tmp/repo can now be uploaded to a web server and serve as a yum repository.

3.6.1.2. Configuring your systems to use your new yum repository

Now that your yum repository is populated with RPMs and metadata, we need to configure the machines that need to install CloudStack. Create a file named /etc/yum.repos.d/cloudstack.repo with this information:

[apache-cloudstack]

name=Apache CloudStack

baseurl=http://webserver.tld/path/to/repo

enabled=1

gpgcheck=0

Completing this step will allow you to easily install CloudStack on a number of machines across the network.
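If you are configuring many machines, you may prefer to generate the repository file rather than type it by hand. A minimal sketch using Python's configparser that reproduces the entries above; `render_repo_file` is a hypothetical helper, and the base URL in the usage example is the same placeholder as above. Writing into /etc/yum.repos.d requires root.

```python
import configparser
import io


def render_repo_file(baseurl: str, section: str = "apache-cloudstack",
                     name: str = "Apache CloudStack") -> str:
    """Return the text of a yum .repo file for a CloudStack repository."""
    # interpolation=None so that "%" in a URL (e.g. %20) is not rejected.
    config = configparser.ConfigParser(interpolation=None)
    config[section] = {
        "name": name,
        "baseurl": baseurl,
        "enabled": "1",
        # Mirrors gpgcheck=0 from the example above.
        "gpgcheck": "0",
    }
    buf = io.StringIO()
    # Write "key=value" without spaces, matching the example above.
    config.write(buf, space_around_delimiters=False)
    return buf.getvalue()
```

For example, running `pathlib.Path("/etc/yum.repos.d/cloudstack.repo").write_text(render_repo_file("http://webserver.tld/path/to/repo"))` as root on each machine produces the same file as the manual step.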

If you need support for the VMware, NetApp, F5, NetScaler, SRX, or any other non-Open Source Software (nonoss) plugins, you'll need to download a few components on your own and follow a slightly different procedure to build from source.

Some of the plugins supported by CloudStack cannot be distributed with CloudStack for licensing reasons. In some cases, some of the required libraries/JARs are under a proprietary license. In other cases, the required libraries may be under a license that's not compatible with Apache's licensing guidelines for third-party products.

To build the Non-OSS plugins, you'll need to have the requisite JARs installed under the deps directory.

Because these modules require dependencies that can't be distributed with CloudStack, you'll need to download them yourself. Links to the most recent dependencies are listed on the How to build on master branch page on the wiki.

You may also need to download vhd-util, which was removed due to licensing issues. Copy vhd-util to the scripts/vm/hypervisor/xenserver/ directory.
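Before starting the nonoss build, a quick preflight check can catch a missing file early instead of partway through Maven. A minimal sketch, assuming the deps directory sits at the top of the source tree; it only checks that some JAR is present, because the exact JAR names come from the wiki page and are not listed here. `nonoss_preflight` is a hypothetical helper name.

```python
from pathlib import Path


def nonoss_preflight(source_root: str) -> list[str]:
    """Return problems that would likely block a -Dnonoss build."""
    root = Path(source_root)
    problems = []
    deps = root / "deps"
    # Assumption: the non-OSS JARs live directly under <source_root>/deps.
    if not (deps.is_dir() and any(deps.glob("*.jar"))):
        problems.append(f"no JAR files found under {deps}")
    vhd_util = root / "scripts/vm/hypervisor/xenserver/vhd-util"
    if not vhd_util.is_file():
        problems.append(f"missing {vhd_util}")
    return problems
```

Run `nonoss_preflight(".")` from the top of the source tree; an empty list means both kinds of files are in place.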

Once you have all the dependencies copied over, you'll be able to build CloudStack with the nonoss option:

$ mvn clean

$ mvn install -Dnonoss

Chapter 13. Network Setup

Achieving the correct networking setup is crucial to a successful CloudStack installation. This section contains information to help you make decisions and follow the right procedures to get your network set up correctly.

13.1. Basic and Advanced Networking

CloudStack provides two styles of networking:

Basic: For AWS-style networking. Provides a single network where guest isolation can be provided through layer-3 means such as security groups (IP address source filtering).

Advanced: For more sophisticated network topologies. This network model provides the most flexibility in defining guest networks, but requires more configuration steps than basic networking.

Each zone has either basic or advanced networking. Once the choice of networking model for a zone has been made and configured in CloudStack, it cannot be changed. A zone is either basic or advanced for its entire lifetime.

The following table compares the networking features in the two networking models.

The two types of networking may be in use in the same cloud. However, a given zone must use either Basic Networking or Advanced Networking.

Different types of network traffic can be segmented on the same physical network. Guest traffic can also be segmented by account. To isolate traffic, you can use separate VLANs. If you are using separate VLANs on a single physical network, make sure the VLAN tags are in separate numerical ranges.
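The rule that VLAN tags sharing a physical network must sit in separate numerical ranges can be checked mechanically before you configure the switches. A minimal sketch: `check_vlan_ranges` is a hypothetical helper, each range is an inclusive (first, last) pair, and the 1-4094 bound is the general IEEE 802.1Q limit rather than anything CloudStack-specific. The traffic-type names and ranges in the usage example are hypothetical, loosely modeled on the switch examples in this chapter (VLAN 201 untagged, VLANs 300-999 tagged).

```python
from itertools import combinations


def check_vlan_ranges(ranges: dict[str, tuple[int, int]]) -> list[str]:
    """Report invalid or overlapping inclusive VLAN ranges, keyed by traffic type."""
    problems = []
    for name, (first, last) in ranges.items():
        # Usable 802.1Q VLAN IDs are 1-4094 (0 and 4095 are reserved).
        if not 1 <= first <= last <= 4094:
            problems.append(f"{name}: invalid range {first}-{last}")
    for (a, (a1, a2)), (b, (b1, b2)) in combinations(ranges.items(), 2):
        # Two inclusive ranges overlap when each starts before the other ends.
        if a1 <= b2 and b1 <= a2:
            problems.append(f"{a} and {b} overlap")
    return problems
```

For example, `check_vlan_ranges({"pod1-untagged": (201, 201), "public": (300, 499), "guest": (500, 999)})` returns an empty list, while shifting the public range to 300-600 reports that public and guest overlap.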

13.2. VLAN Allocation Example

VLANs are required for public and guest traffic. The following is an example of a VLAN allocation scheme:

13.3. Example Hardware Configuration

This section contains an example configuration of specific switch models for zone-level layer-3 switching. It assumes VLAN management protocols, such as VTP or GVRP, have been disabled. The example scripts must be changed appropriately if you choose to use VTP or GVRP.

The following steps show how a Dell 62xx is configured for zone-level layer-3 switching. These steps assume VLAN 201 is used to route untagged private IPs for pod 1, and pod 1’s layer-2 switch is connected to Ethernet port 1/g1.

The Dell 62xx Series switch supports up to 1024 VLANs.

Configure all the VLANs in the database.

vlan database

vlan 200-999

exit

Configure Ethernet port 1/g1.

interface ethernet 1/g1

switchport mode general

switchport general pvid 201

switchport general allowed vlan add 201 untagged

switchport general allowed vlan add 300-999 tagged

exit

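The statements configure Ethernet port 1/g1 as follows:

VLAN 201 is the native untagged VLAN for port 1/g1.

All VLANs (300-999) are passed to all the pod-level layer-2 switches.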

The following steps show how a Cisco 3750 is configured for zone-level layer-3 switching. These steps assume VLAN 201 is used to route untagged private IPs for pod 1, and pod 1’s layer-2 switch is connected to GigabitEthernet1/0/1.

Setting VTP mode to transparent allows us to utilize VLAN IDs above 1000. Since we only use VLANs up to 999, vtp transparent mode is not strictly required.

vtp mode transparent

vlan 200-999

exit

Configure GigabitEthernet1/0/1.

interface GigabitEthernet1/0/1

switchport trunk encapsulation dot1q

switchport mode trunk

switchport trunk native vlan 201

exit

The statements configure GigabitEthernet1/0/1 as follows:

VLAN 201 is the native untagged VLAN for port GigabitEthernet1/0/1.

Cisco passes all VLANs by default. As a result, all VLANs (300-999) are passed to all the pod-level layer-2 switches.

The layer-2 switch is the access switching layer inside the pod.

It should trunk all VLANs into every computing host.

It should switch traffic for the management network containing computing and storage hosts. The layer-3 switch will serve as the gateway for the management network.

This section contains example configurations for specific switch models for pod-level layer-2 switching. It assumes VLAN management protocols such as VTP or GVRP have been disabled. The scripts must be changed appropriately if you choose to use VTP or GVRP.

The following steps show how a Dell 62xx is configured for pod-level layer-2 switching.

Configure all the VLANs in the database.

vlan database

vlan 300-999

exit

VLAN 201 is used to route untagged private IP addresses for pod 1, and pod 1 is connected to this layer-2 switch.

interface range ethernet all

switchport mode general

switchport general allowed vlan add 300-999 tagged

exit

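The statements configure all Ethernet ports to function as follows:

All ports are configured the same way.

All VLANs (300-999) are passed through all the ports of the layer-2 switch.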

The following steps show how a Cisco 3750 is configured for pod-level layer-2 switching.

Setting VTP mode to transparent allows us to utilize VLAN IDs above 1000. Since we only use VLANs up to 999, vtp transparent mode is not strictly required.

vtp mode transparent

vlan 300-999

exit

Configure all ports to dot1q and set 201 as the native VLAN.

interface range GigabitEthernet 1/0/1-24

switchport trunk encapsulation dot1q

switchport mode trunk

switchport trunk native vlan 201

exit

By default, Cisco passes all VLANs. Cisco switches complain if the native VLAN IDs are different when two ports are connected together. That’s why you must specify VLAN 201 as the native VLAN on the layer-2 switch.

13.5.1. Generic Firewall Provisions

The hardware firewall is required to serve two purposes:

Protect the Management Servers. NAT and port forwarding should be configured to direct traffic from the public Internet to the Management Servers.

Route management network traffic between multiple zones. Site-to-site VPN should be configured between multiple zones.

To achieve the above purposes you must set up fixed configurations for the firewall. Firewall rules and policies need not change as users are provisioned into the cloud. Any brand of hardware firewall that supports NAT and site-to-site VPN can be used.

13.5.2. External Guest Firewall Integration for Juniper SRX (Optional)

Available only for guests using advanced networking.

CloudStack provides for direct management of the Juniper SRX series of firewalls. This enables CloudStack to establish static NAT mappings from public IPs to guest VMs, and to use the Juniper device in place of the virtual router for firewall services. You can have one or more Juniper SRX per zone. This feature is optional. If Juniper integration is not provisioned, CloudStack will use the virtual router for these services.

The Juniper SRX can optionally be used in conjunction with an external load balancer. External Network elements can be deployed in a side-by-side or inline configuration.

CloudStack requires the Juniper to be configured as follows:

Supported SRX software version is 10.3 or higher.

Install your SRX appliance according to the vendor's instructions.

Connect one interface to the management network and one interface to the public network. Alternatively, you can connect the same interface to both networks and use a VLAN for the public network.

Make sure "vlan-tagging" is enabled on the private interface.

Record the public and private interface names. If you used a VLAN for the public interface, add a ".[VLAN TAG]" after the interface name. For example, if you are using ge-0/0/3 for your public interface and VLAN tag 301, your public interface name would be "ge-0/0/3.301". Your private interface name should always be untagged because the CloudStack software automatically creates tagged logical interfaces.

Create a public security zone and a private security zone. By default, these will already exist and will be called "untrust" and "trust". Add the public interface to the public zone and the private interface to the private zone. Note down the security zone names.

Make sure there is a security policy from the private zone to the public zone that allows all traffic.

Note the username and password of the account you want the CloudStack software to log in to when it is programming rules.

Make sure the "ssh" and "xnm-clear-text" system services are enabled.

If traffic metering is desired:

a. Create an incoming firewall filter and an outgoing firewall filter. These filters should have the same names as your public security zone and private security zone, respectively. The filters should be set to be "interface-specific". For example, here is the configuration where the public zone is "untrust" and the private zone is "trust":

root@cloud-srx# show firewall

filter trust {

interface-specific;

}

filter untrust {

interface-specific;

}

Add the firewall filters to your public interface. For example, a sample configuration output (for public interface ge-0/0/3.0, public security zone untrust, and private security zone trust) is:

ge-0/0/3 {

unit 0 {

family inet {

filter {

input untrust;

output trust;

}

address 172.25.0.252/16;

}

}

}

Make sure all VLANs are brought to the private interface of the SRX.
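The interface-naming rule from the steps above (append ".[VLAN TAG]" to a VLAN-tagged public interface; always give the private interface untagged) can be expressed as a small helper, which is handy if you script the values you later enter in the UI. A minimal sketch: `srx_interface_name` is a hypothetical name, and the 1-4094 bound is the general 802.1Q range, not an SRX-specific limit.

```python
from typing import Optional


def srx_interface_name(physical: str, vlan_tag: Optional[int] = None) -> str:
    """Build the SRX interface name to record for CloudStack.

    A VLAN-tagged public interface gets ".<VLAN TAG>" appended. Pass no tag
    for the private interface: CloudStack creates the tagged logical
    interfaces on it automatically.
    """
    if vlan_tag is None:
        return physical
    if not 1 <= vlan_tag <= 4094:
        raise ValueError(f"invalid VLAN tag: {vlan_tag}")
    return f"{physical}.{vlan_tag}"
```

With the example from the steps above, `srx_interface_name("ge-0/0/3", 301)` gives "ge-0/0/3.301".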

After the CloudStack Management Server is installed, log in to the CloudStack UI as administrator.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Network tab.

In the Network Service Providers node of the diagram, click Configure. (You might have to scroll down to see this.)

Click SRX.

Click the Add New SRX button (+) and provide the following:

IP Address: The IP address of the SRX.

Username: The user name of the account on the SRX that CloudStack should use.

Password: The password of the account.

Public Interface: The name of the public interface on the SRX. For example, ge-0/0/2. A ".x" at the end of the interface name indicates the VLAN that is in use.

Private Interface: The name of the private interface on the SRX. For example, ge-0/0/1.

Usage Interface: (Optional) Typically, the public interface is used to meter traffic. If you want to use a different interface, specify its name here.

Number of Retries: The number of times to attempt a command on the SRX before failing. The default value is 2.

Timeout (seconds): The time to wait for a command on the SRX before considering it failed. Default is 300 seconds.

Public Network: The name of the public network on the SRX. For example, untrust.

Private Network: The name of the private network on the SRX. For example, trust.

Capacity: The number of networks the device can handle.

Dedicated: When marked as dedicated, this device will be dedicated to a single account. When Dedicated is checked, the value in the Capacity field is ignored; the capacity is implicitly 1.

Click OK.

Click Global Settings. Set the parameter external.network.stats.interval to indicate how often you want CloudStack to fetch network usage statistics from the Juniper SRX. If you are not using the SRX to gather network usage statistics, set it to 0.
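Global settings such as external.network.stats.interval can also be changed through the CloudStack API's updateConfiguration command instead of the UI. The sketch below follows the request-signing scheme described in the CloudStack API documentation: sort the parameters by field name, URL-encode the values, lowercase the whole string, compute HMAC-SHA1 with the secret key, Base64-encode the result, and URL-encode it. `signed_api_url` is a hypothetical helper; the Management Server host, the API and secret keys, and the interval value are placeholders. A maintained client such as CloudMonkey handles encoding edge cases this sketch ignores.

```python
import base64
import hashlib
import hmac
import urllib.parse


def signed_api_url(endpoint: str, api_key: str, secret_key: str,
                   params: dict[str, str]) -> str:
    """Return a signed GET URL for a CloudStack API command (sketch)."""
    query = dict(params, apiKey=api_key, response="json")
    # Sort on the lowercased field name and URL-encode every value.
    encoded = "&".join(
        f"{k}={urllib.parse.quote(str(v), safe='*')}"
        for k, v in sorted(query.items(), key=lambda kv: kv[0].lower())
    )
    # HMAC-SHA1 over the lowercased query string, keyed with the secret key.
    digest = hmac.new(secret_key.encode(), encoded.lower().encode(),
                      hashlib.sha1).digest()
    signature = urllib.parse.quote(base64.b64encode(digest).decode(), safe="")
    return f"{endpoint}?{encoded}&signature={signature}"
```

For example, `signed_api_url("http://ms.example:8080/client/api", API_KEY, SECRET_KEY, {"command": "updateConfiguration", "name": "external.network.stats.interval", "value": "0"})` builds the request that disables SRX statistics collection; fetch it with any HTTP client.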

13.5.3. External Guest Firewall Integration for Cisco VNMC (Optional)

Cisco Virtual Network Management Center (VNMC) provides centralized multi-device and policy management for Cisco Network Virtual Services. You can integrate Cisco VNMC with CloudStack to leverage the firewall and NAT service offered by ASA 1000v Cloud Firewall. Use it in a Cisco Nexus 1000v dvSwitch-enabled cluster in CloudStack. In such a deployment, you will be able to:

Configure Cisco ASA 1000v firewalls. You can configure one per guest network.

Use Cisco ASA 1000v firewalls to create and apply security profiles that contain ACL policy sets for both ingress and egress traffic.

Use Cisco ASA 1000v firewalls to create and apply Source NAT, Port Forwarding, and Static NAT policy sets.

CloudStack supports Cisco VNMC on Cisco Nexus 1000v dvSwitch-enabled VMware hypervisors.

13.5.3.1. Using Cisco ASA 1000v Firewall, Cisco Nexus 1000v dvSwitch, and Cisco VNMC in a Deployment

Cisco ASA 1000v firewall is supported only in Isolated Guest Networks.

Cisco ASA 1000v firewall is not supported on VPC.

Cisco ASA 1000v firewall is not supported for load balancing.

When a guest network is created with the Cisco VNMC firewall provider, an additional public IP is acquired along with the Source NAT IP. The Source NAT IP is used for the rules, whereas the additional IP is used for the ASA outside interface. Ensure that this additional public IP is not released. You can identify this IP as soon as the network is in the Implemented state and before acquiring any further public IPs. The additional IP is the one that is not marked as Source NAT. You can find the IP used for the ASA outside interface by looking at the Cisco VNMC used in your guest network.

Use the public IP address range from a single subnet. You cannot add IP addresses from different subnets.

Only one ASA instance per VLAN is allowed because multiple VLANs cannot be trunked to ASA ports. Therefore, you can use only one ASA instance in a guest network.

Only one Cisco VNMC per zone is allowed.

Supported only in Inline mode deployment with load balancer.

The ASA firewall rule is applicable to all the public IPs in the guest network. Unlike firewall rules created on the virtual router, a rule created on the ASA device is not tied to a specific public IP.

Use a version of Cisco Nexus 1000v dvSwitch that supports the vservice command. For example: nexus-1000v.4.2.1.SV1.5.2b.bin

Cisco VNMC requires the vservice command to be available on the Nexus switch to create a guest network in CloudStack.

13.5.3.1.2. Prerequisites

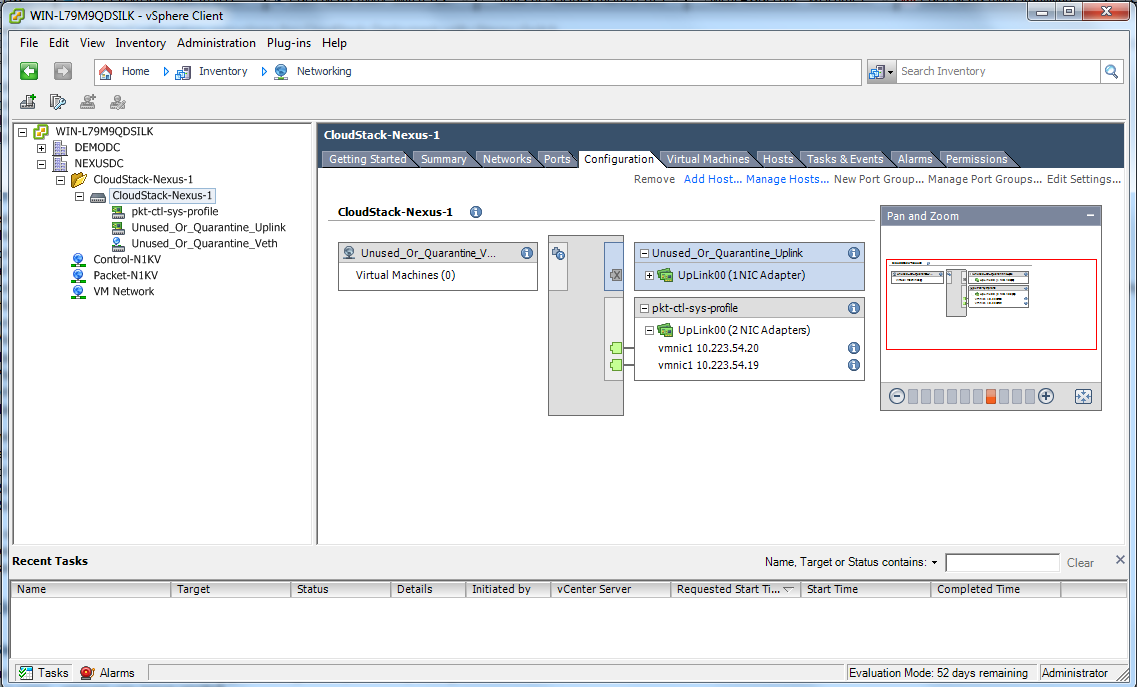

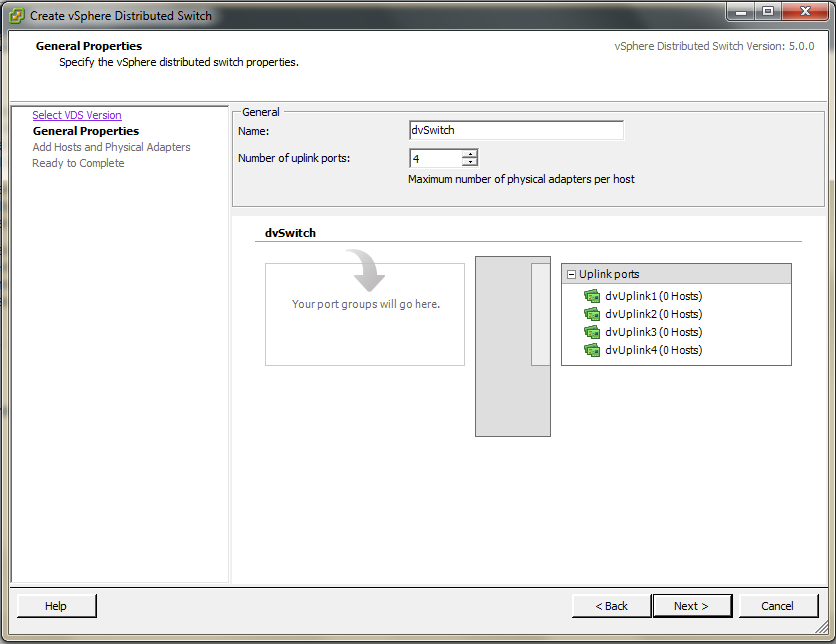

Configure Cisco Nexus 1000v dvSwitch in a vCenter environment.

Create Port profiles for both internal and external network interfaces on Cisco Nexus 1000v dvSwitch. Note down the inside port profile, which needs to be provided while adding the ASA appliance to CloudStack.

Deploy and configure Cisco VNMC.

Register Cisco Nexus 1000v dvSwitch with Cisco VNMC.

Create Inside and Outside port profiles in Cisco Nexus 1000v dvSwitch.

Deploy the Cisco ASA 1000v appliance.

Typically, you create a pool of ASA 1000v appliances and register them with CloudStack.

Specify the following while setting up a Cisco ASA 1000v instance:

VNMC host IP.

Ensure that you add ASA appliance in VNMC mode.

Port profiles for the Management and HA network interfaces. These need to be pre-created on Cisco Nexus 1000v dvSwitch.

Internal and external port profiles.

The Management IP for Cisco ASA 1000v appliance. Specify the gateway such that the VNMC IP is reachable.

Administrator credentials

VNMC credentials

Register Cisco ASA 1000v with VNMC.

After the Cisco ASA 1000v instance is powered on, register VNMC from the ASA console.

13.5.3.1.3. Using Cisco ASA 1000v Services

Ensure that all the prerequisites are met.

Add a VNMC instance.

Add an ASA 1000v instance.

Create a Network Offering and use Cisco VNMC as the service provider for desired services.

Create an Isolated Guest Network by using the network offering you just created.

13.5.3.2. Adding a VNMC Instance

Log in to the CloudStack UI as administrator.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Physical Network tab.

In the Network Service Providers node of the diagram, click Configure.

You might have to scroll down to see this.

Click Cisco VNMC.

Click View VNMC Devices.

Click the Add VNMC Device button and provide the following:

Host: The IP address of the VNMC instance.

Username: The user name of the account on the VNMC instance that CloudStack should use.

Password: The password of the account.

Click OK.

13.5.3.3. Adding an ASA 1000v Instance

Log in to the CloudStack UI as administrator.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Physical Network tab.

In the Network Service Providers node of the diagram, click Configure.

You might have to scroll down to see this.

Click Cisco VNMC.

Click View ASA 1000v.

Click the Add CiscoASA1000v Resource button and provide the following:

Host: The management IP address of the ASA 1000v instance. The IP address is used to connect to ASA 1000V.

Inside Port Profile: The Inside Port Profile configured on Cisco Nexus 1000v dvSwitch.

Cluster: The VMware cluster to which you are adding the ASA 1000v instance.

Ensure that the cluster is Cisco Nexus 1000v dvSwitch enabled.

Click OK.

13.5.3.4. Creating a Network Offering Using Cisco ASA 1000v

To have Cisco ASA 1000v support for a guest network, create a network offering as follows:

Log in to the CloudStack UI as a user or admin.

From the Select Offering drop-down, choose Network Offering.

Click Add Network Offering.

In the dialog, make the following choices:

Name: Any desired name for the network offering.

Description: A short description of the offering that can be displayed to users.

Network Rate: Allowed data transfer rate in megabits per second (Mbps).

Traffic Type: The type of network traffic that will be carried on the network.

Guest Type: Choose whether the guest network is isolated or shared.

Persistent: Indicate whether the guest network is persistent or not. A network that you can provision without having to deploy a VM on it is termed a persistent network.

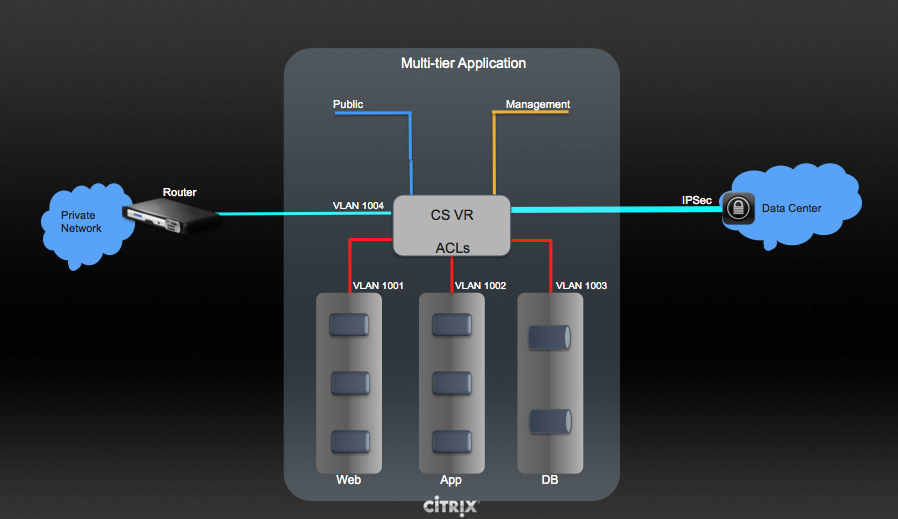

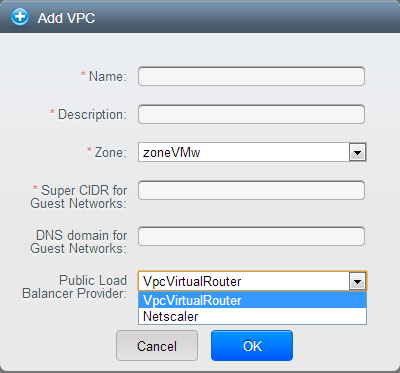

VPC: This option indicates whether the guest network is Virtual Private Cloud-enabled. A Virtual Private Cloud (VPC) is a private, isolated part of CloudStack. A VPC can have its own virtual network topology that resembles a traditional physical network. For more information on VPCs, see Section 15.27.1, “About Virtual Private Clouds”.

Specify VLAN: (Isolated guest networks only) Indicate whether a VLAN should be specified when this offering is used.

Supported Services: Use Cisco VNMC as the service provider for Firewall, Source NAT, Port Forwarding, and Static NAT to create an Isolated guest network offering.

System Offering: Choose the system service offering that you want virtual routers to use in this network.

Conserve mode: Indicate whether to use conserve mode. In this mode, network resources are allocated only when the first virtual machine starts in the network.

Click OK

The network offering is created.

13.5.3.5. Reusing ASA 1000v Appliance in new Guest Networks

You can reuse an ASA 1000v appliance in a new guest network after the necessary cleanup. Typically, ASA 1000v is cleaned up when the logical edge firewall is cleaned up in VNMC. If this cleanup does not happen, you need to reset the appliance to its factory settings for use in new guest networks. As part of this, enable SSH on the appliance and store the SSH credentials by registering on VNMC.

Open a command line on the ASA appliance:

Run the following:

ASA1000V(config)# reload

You are prompted with the following message:

System config has been modified. Save? [Y]es/[N]o:

Enter N.

You will get the following confirmation message:

Proceed with reload? [confirm]

Restart the appliance.

Register the ASA 1000v appliance with the VNMC:

ASA1000V(config)# vnmc policy-agent

ASA1000V(config-vnmc-policy-agent)# registration host vnmc_ip_address

ASA1000V(config-vnmc-policy-agent)# shared-secret key

where key is the shared secret for authentication of the ASA 1000V connection to the Cisco VNMC.

13.5.4. External Guest Load Balancer Integration (Optional)

CloudStack can optionally use a Citrix NetScaler or BigIP F5 load balancer to provide load balancing services to guests. If this is not enabled, CloudStack will use the software load balancer in the virtual router.

To install and enable an external load balancer for CloudStack management:

Set up the appliance according to the vendor's directions.

Connect it to the networks carrying public traffic and management traffic (these could be the same network).

Record the IP address, username, password, public interface name, and private interface name. The interface names will be something like "1.1" or "1.2".

Make sure that the VLANs are trunked to the management network interface.

After the CloudStack Management Server is installed, log in as administrator to the CloudStack UI.

In the left navigation bar, click Infrastructure.

In Zones, click View More.

Choose the zone you want to work with.

Click the Network tab.

In the Network Service Providers node of the diagram, click Configure. (You might have to scroll down to see this.)

Click NetScaler or F5.

Click the Add button (+) and provide the following:

For NetScaler:

IP Address: The IP address of the device.

Username/Password: The authentication credentials to access the device. CloudStack uses these credentials to access the device.

Type: The type of device that is being added. It could be F5 Big Ip Load Balancer, NetScaler VPX, NetScaler MPX, or NetScaler SDX. For a comparison of the NetScaler types, see the CloudStack Administration Guide.

Public interface: Interface of device that is configured to be part of the public network.

Private interface: Interface of device that is configured to be part of the private network.

Number of Retries: Number of times to attempt a command on the device before considering the operation failed. Default is 2.

Capacity: The number of networks the device can handle.

Dedicated: When marked as dedicated, this device will be dedicated to a single account. When Dedicated is checked, the value in the Capacity field is ignored; the capacity is implicitly 1.

Click OK.

The installation and provisioning of the external load balancer is finished. You can proceed to add VMs and NAT or load balancing rules.

13.6. Management Server Load Balancing

CloudStack can use a load balancer to provide a virtual IP for multiple Management Servers. The administrator is responsible for creating the load balancer rules for the Management Servers. The application requires persistence or stickiness across multiple sessions. The following chart lists the ports that should be load balanced and whether or not persistence is required.

Even if persistence is not required, enabling it is permitted.

In addition to the above settings, the administrator is responsible for changing the 'host' global configuration value from the Management Server IP address to the load balancer virtual IP address. If the 'host' value is not set to the VIP for port 8250 and one of your Management Servers crashes, the UI is still available, but the system VMs will not be able to contact the Management Server.

13.7. Topology Requirements

13.7.1. Security Requirements

The public Internet must not be able to access port 8096 or port 8250 on the Management Server.

13.7.2. Runtime Internal Communications Requirements

The Management Servers communicate with each other to coordinate tasks. This communication uses TCP on ports 8250 and 9090.

The console proxy VMs connect to all hosts in the zone over the management traffic network. Therefore the management traffic network of any given pod in the zone must have connectivity to the management traffic network of all other pods in the zone.

The secondary storage VMs and console proxy VMs connect to the Management Server on port 8250. If you are using multiple Management Servers, the load balanced IP address of the Management Servers on port 8250 must be reachable.

13.7.3. Storage Network Topology Requirements

The secondary storage NFS export is mounted by the secondary storage VM. Secondary storage traffic goes over the management traffic network, even if there is a separate storage network. Primary storage traffic goes over the storage network, if available. If you choose to place secondary storage NFS servers on the storage network, you must make sure there is a route from the management traffic network to the storage network.

13.7.4. External Firewall Topology Requirements

When external firewall integration is in place, the public IP VLAN must still be trunked to the Hosts. This is required to support the Secondary Storage VM and Console Proxy VM.

13.7.5. Advanced Zone Topology Requirements

With Advanced Networking, separate subnets must be used for private and public networks.

13.7.6. XenServer Topology Requirements

The Management Servers communicate with XenServer hosts on ports 22 (ssh), 80 (HTTP), and 443 (HTTPS).

13.7.7. VMware Topology Requirements

The Management Server and secondary storage VMs must be able to access vCenter and all ESXi hosts in the zone. To allow the necessary access through the firewall, keep port 443 open.

The Management Servers communicate with VMware vCenter servers on port 443 (HTTPS).

The Management Servers communicate with the System VMs on port 3922 (SSH) on the management traffic network.

13.7.8. KVM Topology Requirements

The Management Servers communicate with KVM hosts on port 22 (SSH).

13.7.9. LXC Topology Requirements

The Management Servers communicate with LXC hosts on port 22 (SSH).
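The port requirements in this section can be collected into a single checklist. The following Python sketch transcribes them into a table and adds a helper that tests whether a TCP port accepts connections. The names `REQUIREMENTS`, `required_ports`, `tcp_port_open`, and `publicly_exposed` are hypothetical, and a successful TCP connect only shows that something is listening, not that the expected service is. Run the checks from the machine that originates the traffic (for example, from a Management Server toward a hypervisor host).

```python
import socket

# TCP port requirements transcribed from the topology requirements above:
# (source, destination, port, purpose)
REQUIREMENTS = {
    "common": [
        ("Management Server", "other Management Servers", 8250, "coordination"),
        ("Management Server", "other Management Servers", 9090, "coordination"),
        ("SSVM / console proxy VM", "Management Server (or its LB IP)", 8250, "system VM connection"),
    ],
    "xenserver": [
        ("Management Server", "XenServer hosts", 22, "SSH"),
        ("Management Server", "XenServer hosts", 80, "HTTP"),
        ("Management Server", "XenServer hosts", 443, "HTTPS"),
    ],
    "vmware": [
        ("Management Server / SSVM", "vCenter and ESXi hosts", 443, "HTTPS"),
        ("Management Server", "System VMs (management network)", 3922, "SSH"),
    ],
    "kvm": [("Management Server", "KVM hosts", 22, "SSH")],
    "lxc": [("Management Server", "LXC hosts", 22, "SSH")],
}

# Ports on the Management Server that the public Internet must NOT reach.
MUST_BE_BLOCKED_FROM_INTERNET = (8096, 8250)


def required_ports(hypervisor: str) -> list[int]:
    """Sorted, de-duplicated TCP ports needed for the given hypervisor type."""
    entries = REQUIREMENTS["common"] + REQUIREMENTS[hypervisor.lower()]
    return sorted({port for _src, _dst, port, _purpose in entries})


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, name resolution failure
        return False


def publicly_exposed(management_server: str) -> list[int]:
    """Run from OUTSIDE your network: forbidden ports that still accept connections."""
    return [p for p in MUST_BE_BLOCKED_FROM_INTERNET if tcp_port_open(management_server, p)]
```

For the security requirement, `publicly_exposed(...)` run from a host on the public Internet against the Management Server's public address should return an empty list.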

13.8. Guest Network Usage Integration for Traffic Sentinel

To collect usage data for a guest network, CloudStack needs to pull the data from an external network statistics collector installed on the network. Metering statistics for guest networks are available through CloudStack’s integration with inMon Traffic Sentinel.

Traffic Sentinel is a network traffic usage data collection package. CloudStack can feed statistics from Traffic Sentinel into its own usage records, providing a basis for billing users of cloud infrastructure. Traffic Sentinel uses the traffic monitoring protocol sFlow. Routers and switches generate sFlow records and provide them for collection by Traffic Sentinel; CloudStack then queries the Traffic Sentinel database to obtain this information.

To construct the query, CloudStack determines what guest IPs were in use during the current query interval. This includes both newly assigned IPs and IPs that were assigned in a previous time period and continued to be in use. CloudStack queries Traffic Sentinel for network statistics that apply to these IPs during the time period they remained allocated in CloudStack. The returned data is correlated with the customer account that owned each IP and the timestamps when IPs were assigned and released in order to create billable metering records in CloudStack. When the Usage Server runs, it collects this data.
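The query-window logic described above can be sketched as follows. This is a simplified Python model, not CloudStack's implementation: `IpAllocation` and `query_windows` are hypothetical names, and each allocation is assumed to be a single assigned/released period. It selects both newly assigned IPs and IPs carried over from earlier periods, and clips each one to the part of the query interval during which it stayed allocated.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class IpAllocation:
    """One period during which a guest IP was assigned to an account (hypothetical model)."""
    ip: str
    account: str
    assigned: datetime
    released: Optional[datetime] = None  # None: still allocated


def query_windows(allocations, start: datetime, end: datetime):
    """Return (ip, account, window_start, window_end) for every IP in use during
    [start, end): newly assigned IPs as well as IPs carried over from earlier
    periods. Each window is clipped to the time the IP stayed allocated."""
    windows = []
    for a in allocations:
        window_start = max(a.assigned, start)
        window_end = end if a.released is None else min(a.released, end)
        if window_start < window_end:  # skip IPs not allocated during the interval
            windows.append((a.ip, a.account, window_start, window_end))
    return windows
```

Each returned tuple corresponds to one statistics request: traffic for that IP between the two timestamps, attributed to the owning account.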

To set up the integration between CloudStack and Traffic Sentinel:

On your network infrastructure, install Traffic Sentinel and configure it to gather traffic data. For installation and configuration steps, see the inMon Traffic Sentinel Documentation.

In the Traffic Sentinel UI, configure Traffic Sentinel to accept script querying from guest users. CloudStack will be the guest user performing the remote queries to gather network usage for one or more IP addresses.

Click File > Users > Access Control > Reports Query, then select Guest from the drop-down list.

On CloudStack, add the Traffic Sentinel host by calling the CloudStack API command addTrafficMonitor. Pass in the URL of the Traffic Sentinel host as protocol + host + port (optional); for example, http://10.147.28.100:8080. For the addTrafficMonitor command syntax, see the API Reference in the API Documentation.

Log in to the CloudStack UI as administrator.

Select Configuration from the Global Settings page, and set the following:

direct.network.stats.interval: How often you want CloudStack to query Traffic Sentinel.
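The API call and the global setting above can also be scripted. The Python sketch below builds signed request URLs following CloudStack's request-signing scheme (URL-encode each value, sort the parameters by name, lowercase the whole string, sign it with HMAC-SHA1 using the secret key, then Base64-encode and URL-encode the signature). The endpoint `http://localhost:8080/client/api`, the keys, the zone ID, the `zoneid` parameter of addTrafficMonitor, and the use of updateConfiguration for the global setting are placeholders or assumptions to verify against the API Reference; the interval value is assumed to be in seconds.

```python
import base64
import hashlib
import hmac
import urllib.parse


def signed_url(endpoint: str, api_key: str, secret_key: str, params: dict) -> str:
    """Build a signed CloudStack API GET URL (HMAC-SHA1 request signing)."""
    params = dict(params, apikey=api_key, response="json")
    # URL-encode each value; quote() encodes spaces as %20 rather than '+'.
    # Values containing '*' or '~' may encode differently from the server: not handled here.
    pairs = [(k, urllib.parse.quote(str(v), safe="")) for k, v in params.items()]
    query = "&".join(f"{k}={v}" for k, v in sorted(pairs, key=lambda kv: kv[0].lower()))
    # Sign the lowercased, field-sorted query string with the secret key.
    digest = hmac.new(secret_key.encode(), query.lower().encode(), hashlib.sha1).digest()
    signature = urllib.parse.quote(base64.b64encode(digest).decode(), safe="")
    return f"{endpoint}?{query}&signature={signature}"


if __name__ == "__main__":
    endpoint = "http://localhost:8080/client/api"  # assumed API endpoint
    api_key, secret_key = "YOUR-API-KEY", "YOUR-SECRET-KEY"  # placeholders
    print(signed_url(endpoint, api_key, secret_key, {
        "command": "addTrafficMonitor",
        "url": "http://10.147.28.100:8080",  # Traffic Sentinel URL from the step above
        "zoneid": "ZONE-UUID",  # placeholder zone ID (assumed parameter)
    }))
    print(signed_url(endpoint, api_key, secret_key, {
        "command": "updateConfiguration",
        "name": "direct.network.stats.interval",
        "value": "86400",  # assumed to be in seconds
    }))
```

Each printed URL can be sent with any HTTP client, such as `urllib.request.urlopen`; the sketch adds `response=json` so the reply is JSON.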

13.9. Setting Zone VLAN and Running VM Maximums

In the external networking case, every VM in a zone must have a unique guest IP address. There are two variables to consider when deciding how to configure CloudStack to support this: how many zone VLANs you expect to have, and how many VMs you expect to have running in the zone at any one time.

Use the following table to determine how to configure CloudStack for your deployment.

Based on your deployment's needs, choose the appropriate value of guest.vlan.bits. Set it as described in the Edit the Global Configuration Settings (Optional) section, then restart the Management Server.

Chapter 15. Managing Networks and Traffic

In CloudStack, guest VMs can communicate with each other over shared infrastructure with the security and user perception that the guests have a private LAN. The CloudStack virtual router is the main component providing networking features for guest traffic.

A network can carry guest traffic only between VMs within one zone. Virtual machines in different zones cannot communicate with each other using their IP addresses; they must communicate with each other by routing through a public IP address.

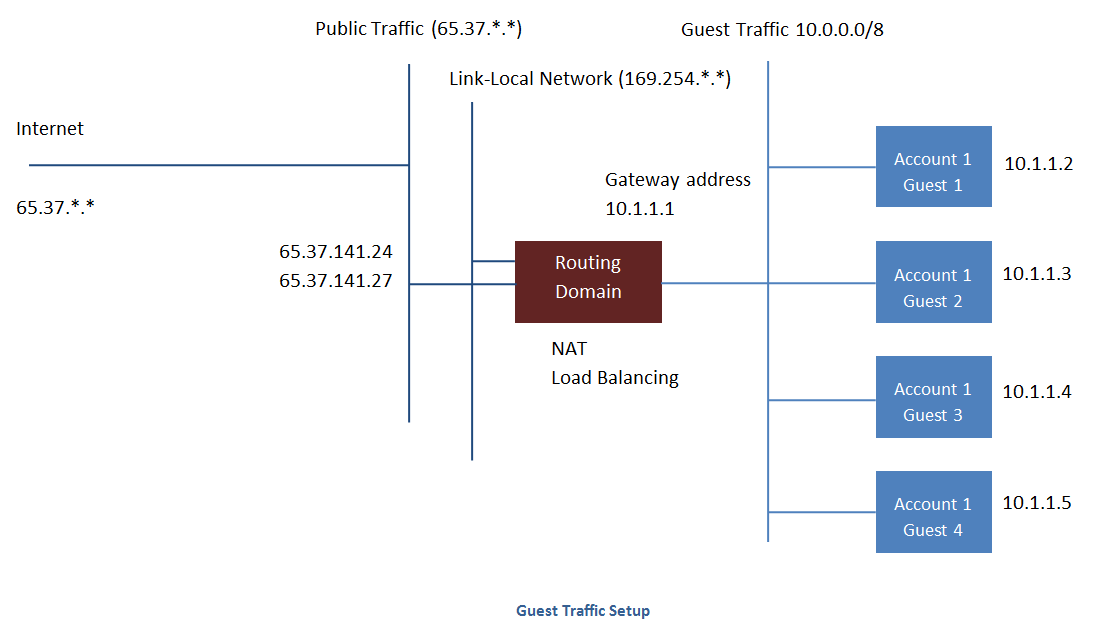

See a typical guest traffic setup given below:

Typically, the Management Server automatically creates a virtual router for each network. A virtual router is a special virtual machine that runs on the hosts. Each virtual router in an isolated network has three network interfaces. Its eth0 interface serves as the gateway for the guest traffic and has the IP address 10.1.1.1. Its eth1 interface is used by the system to configure the virtual router. Its eth2 interface is assigned a public IP address for public traffic. If multiple public VLANs are used, the router has multiple public interfaces.

The virtual router provides DHCP and will automatically assign an IP address for each guest VM within the IP range assigned for the network. The user can manually reconfigure guest VMs to assume different IP addresses.
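To illustrate what assigning addresses "within the IP range assigned for the network" means, the following Python sketch hands out addresses sequentially from a guest CIDR while skipping the gateway address that the virtual router holds on eth0. It is a simplified model with a hypothetical function name; CloudStack does not necessarily assign addresses in this order.

```python
import ipaddress


def allocate_guest_ips(cidr: str, gateway: str, vm_names: list[str]) -> dict[str, str]:
    """Give each VM the next unused host address in the guest CIDR, skipping
    the gateway address held by the virtual router (simplified model)."""
    network = ipaddress.ip_network(cidr)
    gw = ipaddress.ip_address(gateway)
    free = (ip for ip in network.hosts() if ip != gw)
    leases = {}
    for name in vm_names:
        ip = next(free, None)
        if ip is None:
            raise RuntimeError(f"no free addresses left in {cidr}")
        leases[name] = str(ip)
    return leases
```

For example, `allocate_guest_ips("10.1.1.0/24", "10.1.1.1", ["vm-a", "vm-b"])` leases 10.1.1.2 and 10.1.1.3.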

Source NAT is automatically configured in the virtual router to forward outbound traffic for all guest VMs.

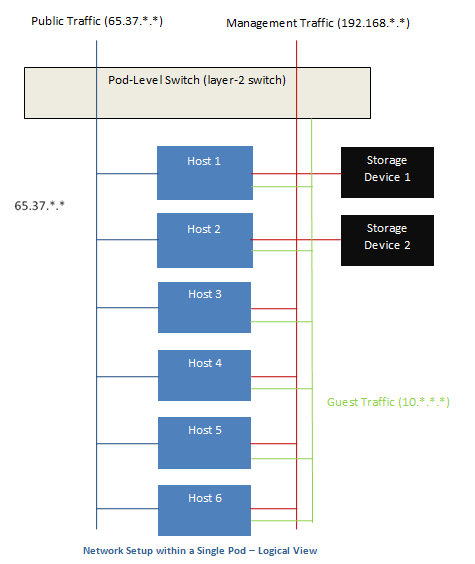

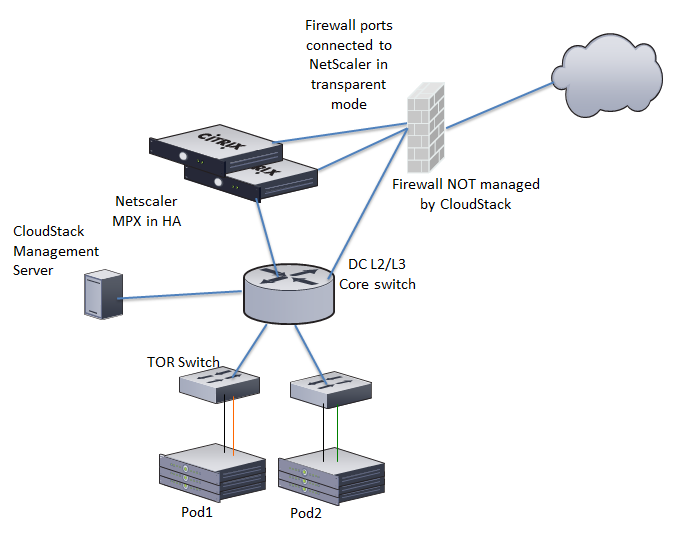

15.2. Networking in a Pod

The figure below illustrates network setup within a single pod. The hosts are connected to a pod-level switch. At a minimum, the hosts should have one physical uplink to each switch. Bonded NICs are supported as well. The pod-level switch is a pair of redundant gigabit switches with 10 G uplinks.

Servers are connected as follows:

Storage devices are connected to only the network that carries management traffic.

Hosts are connected to networks for both management traffic and public traffic.

Hosts are also connected to one or more networks carrying guest traffic.

We recommend the use of multiple physical Ethernet cards to implement each network interface as well as redundant switch fabric in order to maximize throughput and improve reliability.

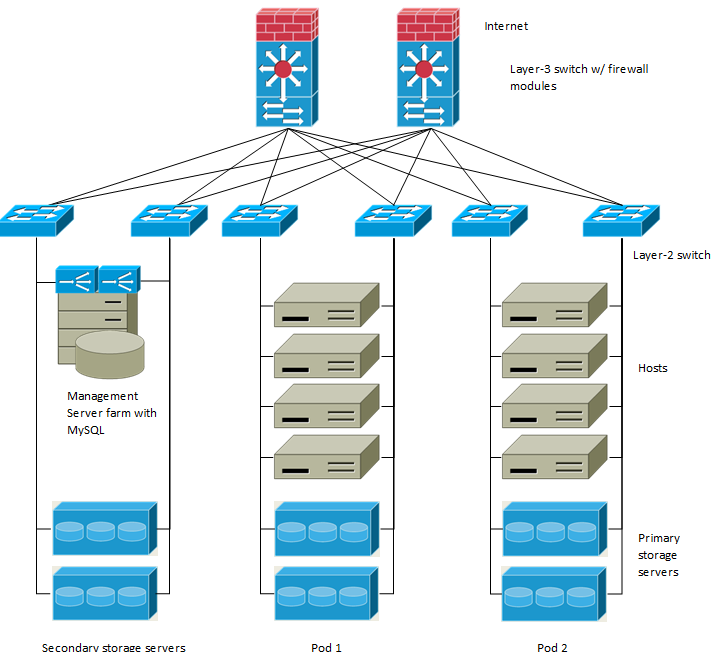

15.3. Networking in a Zone

The following figure illustrates the network setup within a single zone.

A firewall for management traffic operates in NAT mode. The management network is typically assigned IP addresses in the 192.168.0.0/16 private address space, and each pod is assigned addresses from its own 192.168.*.0/24 subnet within that space.
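The addressing plan above, one /24 per pod carved from 192.168.0.0/16, can be computed with Python's standard `ipaddress` module. `plan_pod_subnets` is a hypothetical helper and the pod names are placeholders.

```python
import ipaddress


def plan_pod_subnets(pod_names, zone_cidr="192.168.0.0/16", pod_prefix=24):
    """Assign each pod its own /24 (by default) from the zone's management range."""
    subnets = ipaddress.ip_network(zone_cidr).subnets(new_prefix=pod_prefix)
    plan = {}
    for name in pod_names:
        subnet = next(subnets, None)
        if subnet is None:
            raise ValueError(f"{zone_cidr} has no /{pod_prefix} subnets left for {name}")
        plan[name] = str(subnet)
    return plan
```

A /16 contains 256 /24 subnets, so this particular plan runs out after 256 pods.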

Each zone has its own set of public IP addresses. Public IP addresses from different zones do not overlap.

15.4. Basic Zone Physical Network Configuration

In a basic network, configuring the physical network is fairly straightforward. You only need to configure one guest network to carry traffic that is generated by guest VMs. When you first add a zone to CloudStack, you set up the guest network through the Add Zone screens.

15.5. Advanced Zone Physical Network Configuration

Within a zone that uses advanced networking, you need to tell the Management Server how the physical network is set up to carry different kinds of traffic in isolation.

15.5.3. Configuring a Shared Guest Network

Log in to the CloudStack UI as administrator.

In the left navigation, choose Infrastructure.

On Zones, click View More.

Click the zone to which you want to add a guest network.

Click the Physical Network tab.

Click the physical network you want to work with.

On the Guest node of the diagram, click Configure.

Click the Network tab.

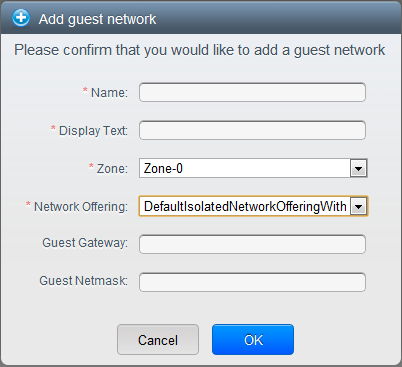

Click Add guest network.

The Add guest network window is displayed.

Specify the following:

Name: The name of the network. This will be visible to the user.

Description: The short description of the network that can be displayed to users.

VLAN ID: The unique ID of the VLAN.

Isolated VLAN ID: The unique ID of the Secondary Isolated VLAN.

Scope: The available scopes are Domain, Account, Project, and All.

Domain: Selecting Domain limits the scope of this guest network to the domain you specify. The network will not be available to other domains. If you select Subdomain Access, the guest network is available to all subdomains within the selected domain.

Account: The account for which the guest network is being created. You must specify the domain the account belongs to.

Project: The project for which the guest network is being created. You must specify the domain the project belongs to.

All: The guest network is available to all domains, accounts, and projects within the selected zone.

Network Offering: If the administrator has configured multiple network offerings, select the one you want to use for this network.

Gateway: The gateway that the guests should use.

Netmask: The netmask in use on the subnet the guests will use.

IP Range: A range of IP addresses that are accessible from the Internet and are assigned to the guest VMs.

For IPv6, if one NIC is used, these IPs should be in the same CIDR.

IPv6 CIDR: The network prefix that defines the guest network subnet. This is the CIDR that describes the IPv6 addresses in use in the guest networks in this zone. To allot IP addresses from within a particular address block, enter a CIDR.

Network Domain: A custom DNS suffix at the level of a network. If you want to assign a special domain name to the guest VM network, specify a DNS suffix.

Click OK to confirm.
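Before clicking OK, it can help to confirm that the gateway, netmask, and IP range are consistent. The Python sketch below performs that check and returns the values as parameters for the createNetwork API command. The parameter names (`displaytext`, `networkofferingid`, `startip`, `endip`, `acltype`, `domainid`, and so on) are assumed to match the API Reference and should be verified there, and the gateway-outside-range rule is an extra sanity check of this sketch, not a documented CloudStack requirement.

```python
import ipaddress


def shared_network_params(name, description, zone_id, offering_id, vlan_id,
                          gateway, netmask, start_ip, end_ip,
                          acl_type="Domain", domain_id=None):
    """Validate the Add guest network fields and return createNetwork-style parameters."""
    subnet = ipaddress.ip_network(f"{gateway}/{netmask}", strict=False)
    gw = ipaddress.ip_address(gateway)
    start, end = ipaddress.ip_address(start_ip), ipaddress.ip_address(end_ip)
    if start not in subnet or end not in subnet:
        raise ValueError(f"IP range {start_ip}-{end_ip} is not inside {subnet}")
    if start > end:
        raise ValueError("start of IP range is after its end")
    if start <= gw <= end:
        # Extra sanity check in this sketch: keep the gateway out of the guest range.
        raise ValueError("gateway lies inside the guest IP range")
    params = {
        "command": "createNetwork",
        "name": name,
        "displaytext": description,
        "zoneid": zone_id,
        "networkofferingid": offering_id,
        "vlan": vlan_id,
        "gateway": gateway,
        "netmask": netmask,
        "startip": start_ip,
        "endip": end_ip,
        "acltype": acl_type,  # scope, e.g. Domain or Account (assumed parameter)
    }
    if domain_id is not None:
        params["domainid"] = domain_id
    return params
```

The returned dictionary still has to be sent as a signed API request, like any other CloudStack API call.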

15.6. Using Multiple Guest Networks

In zones that use advanced networking, additional networks for guest traffic may be added at any time after the initial installation. You can also customize the domain name associated with the network by specifying a DNS suffix for each network.

A VM's networks are defined at VM creation time. A VM cannot add or remove networks after it has been created, although the user can go into the guest and remove the IP address from the NIC on a particular network.

Each VM has just one default network. The virtual router's DHCP reply will set the guest's default gateway as that for the default network. Multiple non-default networks may be added to a guest in addition to the single, required default network. The administrator can control which networks are available as the default network.
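The default-network rule can be modeled as a data structure in which a VM always holds exactly one default network. This Python sketch, with hypothetical class names, shows that the guest's default gateway comes only from the default network and that changing the default swaps it with a non-default network rather than adding a second default.

```python
from dataclasses import dataclass, field


@dataclass
class GuestNetwork:
    name: str
    gateway: str


@dataclass
class GuestVM:
    """A VM holds exactly one default network plus any number of others."""
    default_network: GuestNetwork
    other_networks: list[GuestNetwork] = field(default_factory=list)

    def dhcp_default_gateway(self) -> str:
        # The virtual router's DHCP reply uses the default network's gateway only.
        return self.default_network.gateway

    def set_default(self, network: GuestNetwork) -> None:
        """Swap `network` in as the default; the old default becomes non-default."""
        if network not in self.other_networks:
            raise ValueError(f"{network.name} is not attached to this VM")
        self.other_networks.remove(network)
        self.other_networks.append(self.default_network)
        self.default_network = network
```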

Additional networks can either be available to all accounts or be assigned to a specific account. Networks that are available to all accounts are zone-wide. Any user with access to the zone can create a VM with access to that network. These zone-wide networks provide little or no isolation between guests. Networks that are assigned to a specific account provide strong isolation.

15.6.1. Adding an Additional Guest Network

Log in to the CloudStack UI as an administrator or end user.

In the left navigation, choose Network.

Click Add guest network. Provide the following information:

Name: The name of the network. This will be user-visible.

Display Text: The description of the network. This will be user-visible.

Zone: The name of the zone this network applies to. Each zone is a broadcast domain, and therefore each zone has a different IP range for the guest network. The administrator must configure the IP range for each zone.

Network offering: If the administrator has configured multiple network offerings, select the one you want to use for this network.

Guest Gateway: The gateway that the guests should use.

Guest Netmask: The netmask in use on the subnet the guests will use.

Click Create.

15.6.2. Reconfiguring Networks in VMs

CloudStack lets you move VMs between networks and reconfigure a VM's network. You can remove a VM from a network and add it to a new network. You can also change the default network of a virtual machine. With this functionality, hybrid or traditional server loads can be accommodated with ease.

This feature is supported on XenServer, VMware, and KVM hypervisors.

On VMware hypervisors, ensure that VMware Tools (vm-tools) are running on the guest VMs; adding or removing networks does not work without them.

15.6.2.2. Adding a Network

Log in to the CloudStack UI as an administrator or end user.

In the left navigation, click Instances.

Choose the VM that you want to work with.

Click the NICs tab.

Click Add network to VM.

The Add network to VM dialog is displayed.

In the drop-down list, select the network that you would like to add this VM to.

A new NIC is added for this network. You can view the following details in the NICs page:

ID

Network Name

Type

IP Address

Gateway

Netmask

Is default

CIDR (for IPv6)

15.6.2.3. Removing a Network

Log in to the CloudStack UI as an administrator or end user.

In the left navigation, click Instances.

Choose the VM that you want to work with.

Click the NICs tab.

Locate the NIC you want to remove.

Click the Remove NIC button.

Click Yes to confirm.

15.6.2.4. Selecting the Default Network

Log in to the CloudStack UI as an administrator or end user.

In the left navigation, click Instances.

Choose the VM that you want to work with.

Click the NICs tab.

Locate the NIC you want to work with.

Click the Set default NIC button.

Click Yes to confirm.
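When scripting these three procedures, they can be mapped to CloudStack API commands: addNicToVirtualMachine, removeNicFromVirtualMachine, and updateDefaultNicForVirtualMachine. The Python sketch below only builds the unsigned query string; a real request must also carry the apikey and signature parameters, and the IDs in the example are placeholders. Confirm the parameter names against the API Reference for your release.

```python
from urllib.parse import urlencode

# UI action -> (API command, name of the ID parameter it needs besides the VM ID)
NIC_ACTIONS = {
    "add": ("addNicToVirtualMachine", "networkid"),
    "remove": ("removeNicFromVirtualMachine", "nicid"),
    "set_default": ("updateDefaultNicForVirtualMachine", "nicid"),
}


def nic_query(action: str, vm_id: str, target_id: str) -> str:
    """Unsigned query string for a NIC operation; target_id is a network ID for
    "add" and a NIC ID for "remove" and "set_default"."""
    command, id_param = NIC_ACTIONS[action]
    return urlencode({"command": command, "virtualmachineid": vm_id, id_param: target_id})
```

For example, `nic_query("add", "vm-1", "net-1")` yields `command=addNicToVirtualMachine&virtualmachineid=vm-1&networkid=net-1`.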

15.6.3. Changing the Network Offering on a Guest Network

A user or administrator can change the network offering that is associated with an existing guest network.

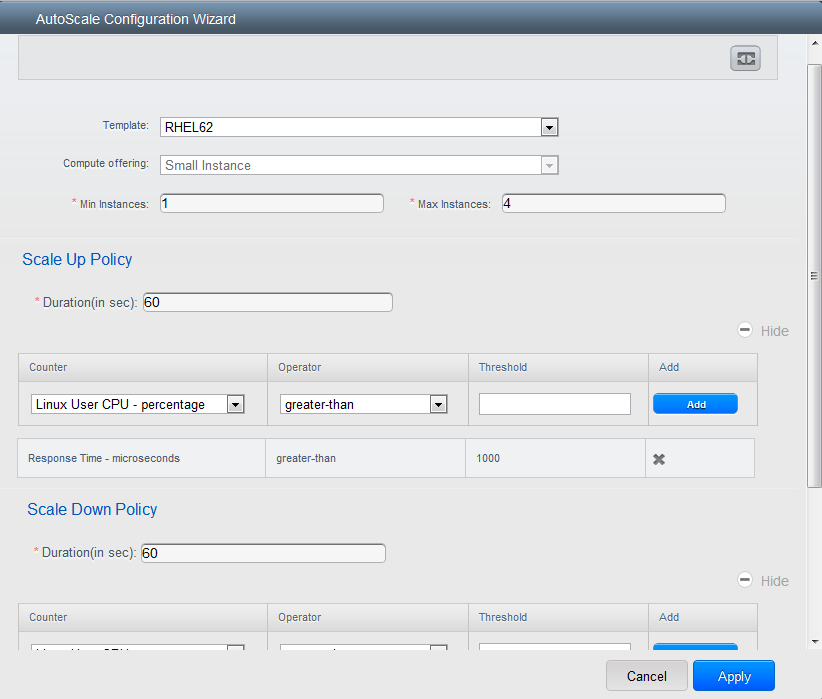

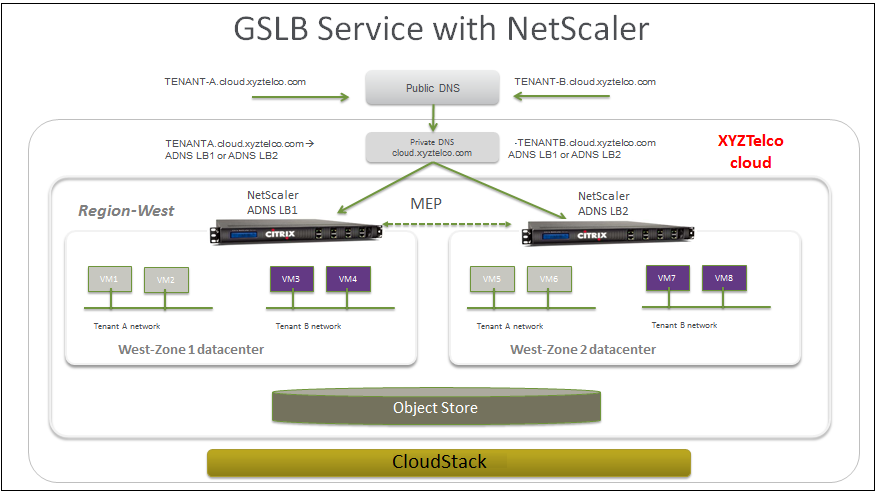

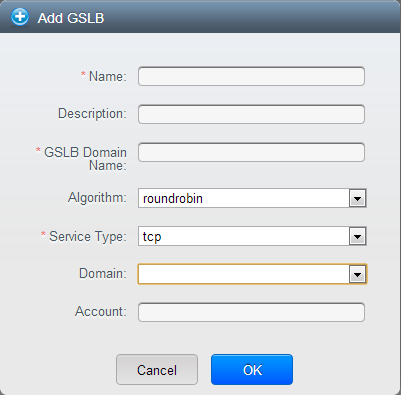

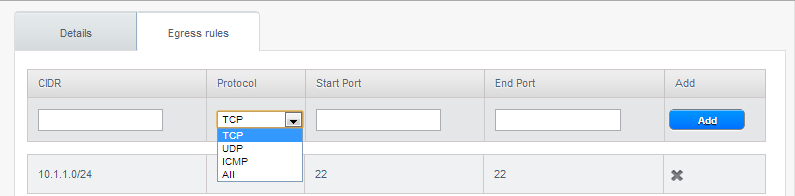

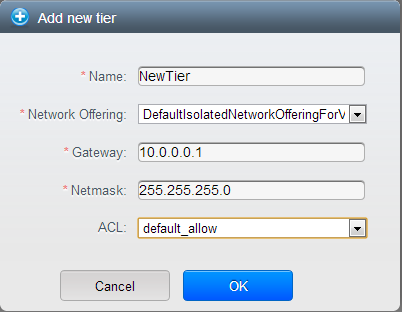

Log in to the CloudStack UI as an administrator or end user.