What is the Confluent Platform?

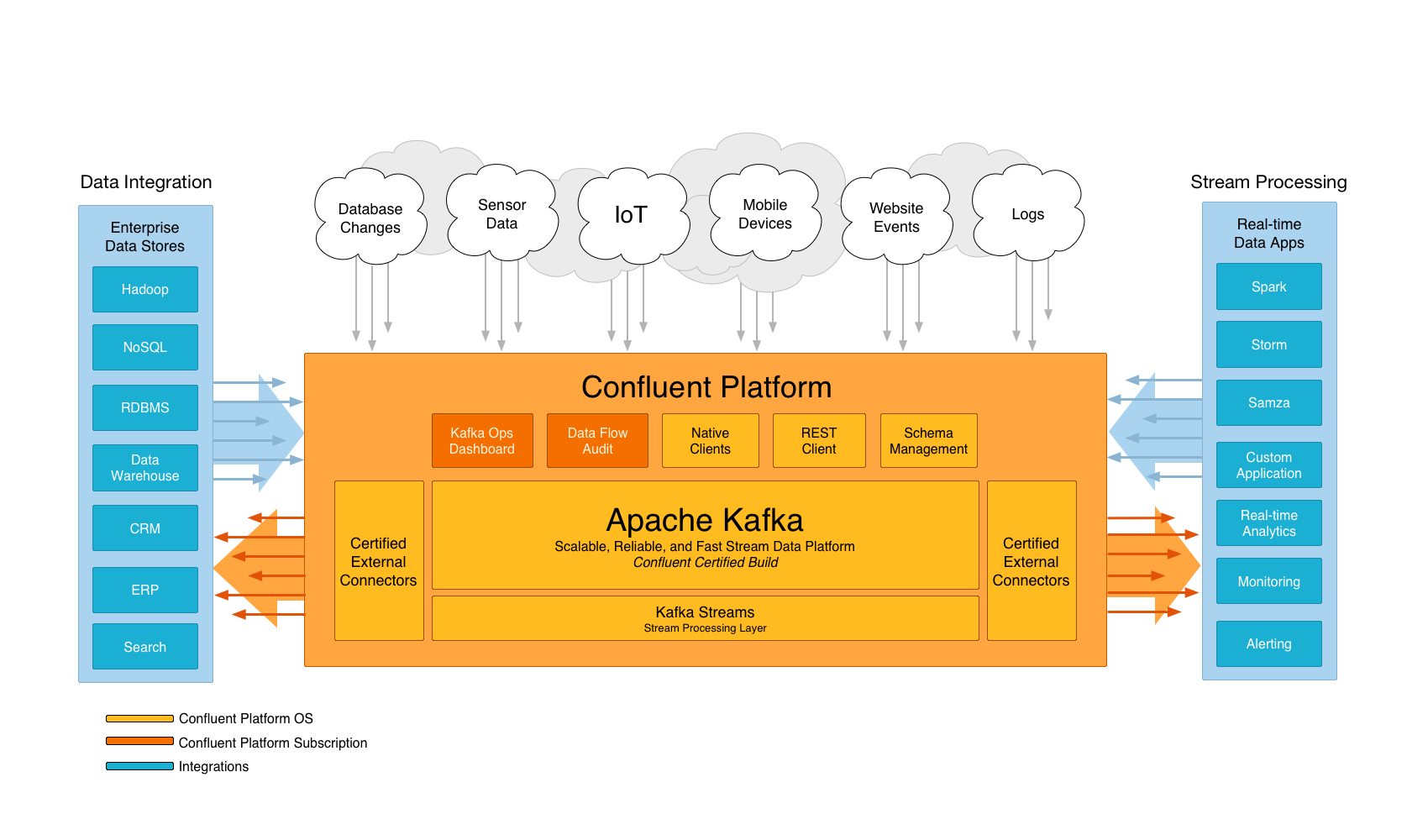

The Confluent Platform is a stream data platform that lets you organize and manage the massive amounts of data arriving every second at organizations across industries, from retail, logistics, manufacturing, and financial services to online social networking. With Confluent, this growing barrage of often unstructured but nevertheless valuable data becomes an easily accessible, unified stream that is readily available for many uses throughout your organization. These uses range from enabling batch Big Data analysis with Hadoop and feeding realtime monitoring systems to more traditional large-volume data integration tasks that require a high-throughput, industrial-strength extraction, transformation, and load (ETL) backbone.

What is included in the Confluent Platform?

The Confluent Platform is a collection of infrastructure services, tools, and guidelines for making all of your company’s data readily available as realtime streams. By integrating data from disparate IT systems into a single central stream data platform or “nervous system” for your company, the Confluent Platform lets you focus on how to derive business value from your data rather than worrying about the underlying mechanics of how data is shuttled, shuffled, switched, and sorted between various systems.

At its core, the Confluent Platform leverages Apache Kafka, a proven open source technology created by the founders of Confluent while at LinkedIn. Kafka acts as a realtime, fault-tolerant, highly scalable messaging system and is already widely deployed for use cases such as collecting user activity data, system logs, application metrics, stock ticker data, and device instrumentation signals. Its key strength is its ability to make high-volume data available as a realtime stream for consumption in systems with very different requirements: batch systems like Hadoop, realtime systems that require low-latency access, and stream processing engines that transform data streams as soon as they arrive.

Out of the box, the Confluent Platform also includes a Schema Registry, a REST Proxy, and integration with Camus, a MapReduce implementation that dramatically eases continuous upload of data into Hadoop clusters. The capabilities of these tools are discussed in more detail in the following sections. Collectively, the integrated components in the Confluent Platform give your team a simple and clear path towards establishing a consistent yet flexible approach for building an enterprise-wide stream data platform for a wide array of use cases.

These Guides, Quickstarts, and API References help you get started easily, describe best practices for both the deployment and management of Kafka, and show you how to use the Confluent Platform tools to get the most out of your Kafka deployment with the least amount of risk and hassle.

Apache Kafka

Apache Kafka is a realtime, fault-tolerant, highly scalable messaging system. It is widely adopted for use cases such as collecting user activity data, logs, application metrics, stock ticker data, and device instrumentation. Kafka’s unifying abstraction, a partitioned and replicated low-latency commit log, allows these applications with very different throughput and latency requirements to be implemented on a single messaging system. It also encourages clean, loosely coupled architectures by acting as a highly reliable mediator between systems.
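
To make the commit log abstraction concrete, the following is a minimal in-memory sketch, not Kafka's implementation. The class `ToyLog` and its methods are hypothetical names introduced for illustration, `zlib.crc32` stands in for Kafka's real partitioner, and replication is not modeled at all.

```python
import zlib


class ToyLog:
    """Toy in-memory model of a partitioned, append-only commit log."""

    def __init__(self, num_partitions):
        # Each partition is an ordered list; a record's offset is its index.
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, key, value):
        # Records with the same key always land in the same partition,
        # so their relative order is preserved.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        offset = len(self.partitions[partition]) - 1
        return partition, offset

    def read(self, partition, offset):
        # Reading never removes data; each reader tracks its own offset,
        # which is why slow batch readers and fast realtime readers can coexist.
        return self.partitions[partition][offset:]


log = ToyLog(num_partitions=3)
partition, first_offset = log.append("user-1", "click")
log.append("user-1", "view")
print(log.read(partition, first_offset))
```

Because a read is just a slice starting at an offset, a batch job and a low-latency consumer can read the same partition independently without interfering with each other.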

Kafka is a powerful and flexible tool that forms the foundation of the Confluent Platform. However, it is not a complete stream data platform: like a database, it provides the data storage and interfaces for reading and writing that data, but does not directly help you integrate with other services.

Confluent’s Commitment to Open Source

Confluent is committed to maintaining, enhancing, and supporting the open source Apache Kafka project. As the documentation discusses, the version of Kafka in the Confluent Platform contains patches on top of the matching open source version but remains fully compatible with it. An existing Kafka cluster can be upgraded easily by performing a rolling restart of the Kafka brokers.

Kafka Connect

Kafka Connect is a tool for scalably and reliably streaming data between Apache Kafka and other data systems. It makes it simple to quickly define connectors that move large data sets into and out of Kafka. Kafka Connect can ingest entire databases or collect metrics from all your application servers into Kafka topics, making the data available for stream processing with low latency. An export connector can deliver data from Kafka topics into secondary indexes like Elasticsearch or into batch systems such as Hadoop for offline analysis.

Kafka Connect can run either as a standalone process for testing and one-off jobs, or as a distributed, scalable, fault-tolerant service supporting an entire organization. This allows it to scale down to development, testing, and small production deployments with a low barrier to entry and low operational overhead, and to scale up to support a large organization’s data pipeline.

Kafka Connect JDBC Connector

The JDBC connector allows you to import data from any relational database with a JDBC driver into Kafka topics. By using JDBC, this connector can support a wide variety of databases without requiring custom code for each one.
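
As a rough illustration, a JDBC source connector definition might look like the sketch below, which builds the JSON body a client could submit to a Kafka Connect worker's REST interface (`POST /connectors`). The connector name, database URL, column name, and topic prefix are hypothetical placeholders; check the property names against the connector version you actually run.

```python
import json

# Hypothetical connector name, database URL, column, and topic prefix.
jdbc_source = {
    "name": "example-jdbc-source",
    "config": {
        "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
        "tasks.max": "1",
        "connection.url": "jdbc:postgresql://db.example.com:5432/shop",
        # Detect new rows by a strictly increasing column.
        "mode": "incrementing",
        "incrementing.column.name": "id",
        # Each table is written to the topic "<prefix><table name>".
        "topic.prefix": "shop-",
    },
}

jdbc_payload = json.dumps(jdbc_source, indent=2)
print(jdbc_payload)
```

Nothing in this definition is specific to one database vendor apart from the JDBC URL, which is the point of building the connector on JDBC.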

Kafka Connect HDFS Connector

The HDFS connector allows you to export data from Kafka topics to HDFS files in a variety of formats and integrates with Hive to make data immediately available for querying with HiveQL.
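
A corresponding HDFS sink definition could be sketched as follows, again as the JSON body for a Kafka Connect worker's REST interface. The connector name, topic, and host names are hypothetical, and the Hive-related settings are shown only to illustrate the integration described above; verify them against the connector version in use.

```python
import json

# Hypothetical connector name, topic, and cluster addresses.
hdfs_sink = {
    "name": "example-hdfs-sink",
    "config": {
        "connector.class": "io.confluent.connect.hdfs.HdfsSinkConnector",
        "tasks.max": "1",
        "topics": "shop-orders",
        "hdfs.url": "hdfs://namenode.example.com:8020",
        # Number of records written before a file is committed to HDFS.
        "flush.size": "1000",
        # Register the exported data as Hive tables.
        "hive.integration": "true",
        "hive.metastore.uris": "thrift://metastore.example.com:9083",
        "schema.compatibility": "BACKWARD",
    },
}

hdfs_payload = json.dumps(hdfs_sink, indent=2)
print(hdfs_payload)
```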

C/C++ library: librdkafka

librdkafka is a C/C++ library implementation of the Apache Kafka protocol, containing both producer and consumer support. It was designed with message delivery, reliability, and high performance in mind; current figures exceed 800,000 messages per second for the producer and 3 million messages per second for the consumer.

Proactive Support

Proactive Support is a component of the Confluent Platform that improves Confluent’s support for the platform by collecting and reporting support metrics (“Metrics”) to Confluent. Proactive Support is enabled by default in the Confluent Platform. We do this to provide proactive support to our customers, to help us build better products, to help customers comply with support contracts, and to help guide our marketing efforts.

Schema Registry

One of the most difficult challenges with loosely coupled systems is ensuring compatibility of data and code as the system grows and evolves. With a messaging service like Kafka, services that interact with each other must agree on a common format, called a schema, for messages. In many systems, these formats are ad hoc, only implicitly defined by the code, and often duplicated across each system that uses that message type. But as requirements change, it becomes necessary to evolve these formats. With only an ad hoc definition, it is very difficult for developers to determine what the impact of their change might be.

The Schema Registry enables safe, zero-downtime evolution of schemas by centralizing the management of schemas written for the Avro serialization system. It tracks all versions of schemas used for every topic in Kafka and only allows evolution of schemas according to user-defined compatibility settings. This gives developers confidence that they can safely modify schemas as necessary without worrying that doing so will break a different service they may not even be aware of.
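
To illustrate what a compatibility setting guards against, here is a deliberately simplified sketch of a backward-compatibility check between two Avro record schemas. It is not the Schema Registry's actual algorithm: the function `is_backward_compatible` is a hypothetical helper that only compares top-level field names, types, and defaults, and it ignores Avro's type promotion rules, unions, and nested records.

```python
def is_backward_compatible(new_schema, old_schema):
    """Return True if a reader using new_schema can decode data written with old_schema.

    Simplified rule: every field the new schema expects must either exist in the
    old schema with the same type, or declare a default value.
    """
    old_fields = {field["name"]: field for field in old_schema["fields"]}
    for field in new_schema["fields"]:
        old_field = old_fields.get(field["name"])
        if old_field is None:
            # Field is missing from old data, so the reader needs a default.
            if "default" not in field:
                return False
        elif old_field["type"] != field["type"]:
            # Stricter than Avro itself, which allows some promotions.
            return False
    return True


v1 = {"type": "record", "name": "User",
      "fields": [{"name": "id", "type": "long"}]}
v2 = {"type": "record", "name": "User",
      "fields": [{"name": "id", "type": "long"},
                 {"name": "email", "type": "string", "default": ""}]}
v3 = {"type": "record", "name": "User",
      "fields": [{"name": "id", "type": "long"},
                 {"name": "email", "type": "string"}]}

print(is_backward_compatible(v2, v1))  # True: the new field has a default
print(is_backward_compatible(v3, v1))  # False: the new field has no default
```

Rejecting a change like `v3` at registration time is what keeps a producer from silently breaking a consumer it does not know about.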

The Schema Registry also includes plugins for Kafka clients that handle schema storage and retrieval for Kafka messages that are sent in the Avro format. This integration is seamless – if you are already using Kafka with Avro data, using the Schema Registry only requires including the serializers with your application and changing one setting.

REST Proxy

Every organization standardizes on its own set of tools, and many use languages that do not have high-quality Kafka clients. Only a couple of languages have very good client support, because Kafka’s very general, flexible pub-sub model makes writing high-performance clients much more challenging than writing clients for other systems.

The REST Proxy makes it easy to work with Kafka from any language by providing a RESTful HTTP service for interacting with Kafka clusters. The REST Proxy supports all the core functionality: sending messages to Kafka, reading messages, both individually and as part of a consumer group, and inspecting cluster metadata, such as the list of topics and their settings. You can get the full benefits of the high quality, officially maintained Java clients from any language.
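
As a sketch of what sending messages over HTTP can look like, the snippet below builds (but does not send) a produce request using only the Python standard library. The base URL, topic name, and helper `build_produce_request` are assumptions made for illustration, and the media type shown is the version 1 JSON format; the exact media type depends on the REST Proxy API version you run.

```python
import json
import urllib.request


def build_produce_request(base_url, topic, values):
    """Build an HTTP POST that asks the REST Proxy to produce JSON records."""
    body = json.dumps({"records": [{"value": value} for value in values]})
    return urllib.request.Request(
        url=f"{base_url}/topics/{topic}",
        data=body.encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/vnd.kafka.json.v1+json"},
    )


# Hypothetical proxy address and topic name.
request = build_produce_request(
    "http://localhost:8082", "clicks", [{"user": "alice", "page": "/home"}]
)
print(request.get_method(), request.full_url)

# With a REST Proxy actually running, the request could be sent with:
# urllib.request.urlopen(request)
```

Any language with an HTTP client and a JSON encoder can issue the same request, which is what removes the dependency on a native Kafka client.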

The REST Proxy also integrates with the Schema Registry. It can read and write Avro data, registering and looking up schemas in the Schema Registry. Because it automatically translates JSON data to and from Avro, you can get all the benefits of centralized schema management from any language using only HTTP and JSON.
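
For Avro data, the request body carries the schema alongside the JSON-encoded records. The sketch below builds such a body; the schema, record values, and helper `build_avro_body` are hypothetical, and the body would be sent with an Avro media type such as `application/vnd.kafka.avro.v1+json`, depending on the API version.

```python
import json

# Hypothetical Avro schema for illustration.
user_schema = {
    "type": "record",
    "name": "User",
    "fields": [{"name": "name", "type": "string"}],
}


def build_avro_body(schema, values):
    """Build a produce body whose records the proxy converts from JSON to Avro."""
    return json.dumps({
        # The schema is embedded as a JSON-encoded string, not a nested object.
        "value_schema": json.dumps(schema),
        "records": [{"value": value} for value in values],
    })


avro_body = build_avro_body(user_schema, [{"name": "alice"}])
print(avro_body)
```

The client only ever handles JSON; registering the schema and encoding the records as Avro happens on the proxy side.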

Camus

Camus is a MapReduce job that provides automatic, zero data-loss ETL from Kafka into HDFS. By running Camus periodically, you can be sure that all the data that was stored in Kafka has also been delivered to your data warehouse in a convenient time-partitioned format and will be ready for offline batch processing.
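
The idea of a time-partitioned layout can be sketched as a function that maps a record timestamp to an hourly directory. The directory scheme and the helper `hourly_partition_path` are assumptions for illustration and are not necessarily the exact layout Camus writes.

```python
from datetime import datetime, timezone


def hourly_partition_path(base_dir, topic, timestamp_ms):
    """Map a record timestamp (milliseconds since the epoch) to an hourly directory."""
    ts = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)
    # One directory per topic and per hour, e.g. <base>/<topic>/YYYY/MM/DD/HH.
    return f"{base_dir}/{topic}/{ts:%Y/%m/%d/%H}"


print(hourly_partition_path("/data", "clicks", 0))  # /data/clicks/1970/01/01/00
```

With a layout like this, a batch job that processes one hour of data only has to read a single directory.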

Confluent Platform’s Camus is also integrated with the Schema Registry. With this integration, Camus automatically decodes the data before storing it in HDFS and ensures it is in a consistent format for each time partition, even if the records were written using different schemas. By integrating the Schema Registry at every step from data creation to delivery into the data warehouse, you can avoid the expensive, labor-intensive pre-processing often required to get your data into a usable state.

Note

By combining the tools included in the Confluent Platform, you get a fully-automated, low-latency, end-to-end ETL pipeline with online schema evolution.

Migrating an existing Kafka deployment

Confluent is committed to supporting and developing the open source Apache Kafka project. The version of Kafka in the Confluent Platform is fully compatible with the matching open source version and only contains additional patches for critical bugs when the Confluent Platform and Apache Kafka release schedules do not align. An existing cluster can be upgraded easily by performing a rolling restart of Kafka brokers.

The rest of the Confluent Platform can be added incrementally. Start by adding the Schema Registry and updating your applications to use the Avro serializer. Next, add the REST Proxy to support applications that may not have access to good Kafka clients or Avro libraries. Finally, run periodic Camus jobs to automatically load data from Kafka into HDFS.