What is the Confluent Platform?¶

The Confluent Platform is a stream data platform that enables you to organize and manage data from many different sources with one reliable, high-performance system.

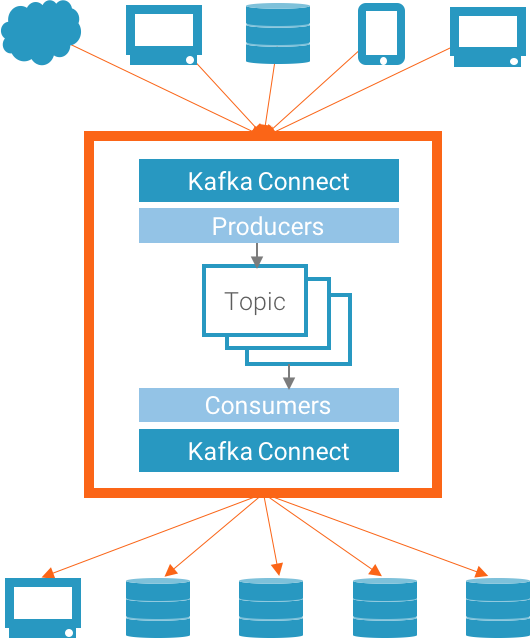

Stream Data Platform

A stream data platform provides not only the system to transport data, but all of the tools needed to connect data sources, applications, and data sinks to the platform.

What is included in the Confluent Platform?¶

Overview¶

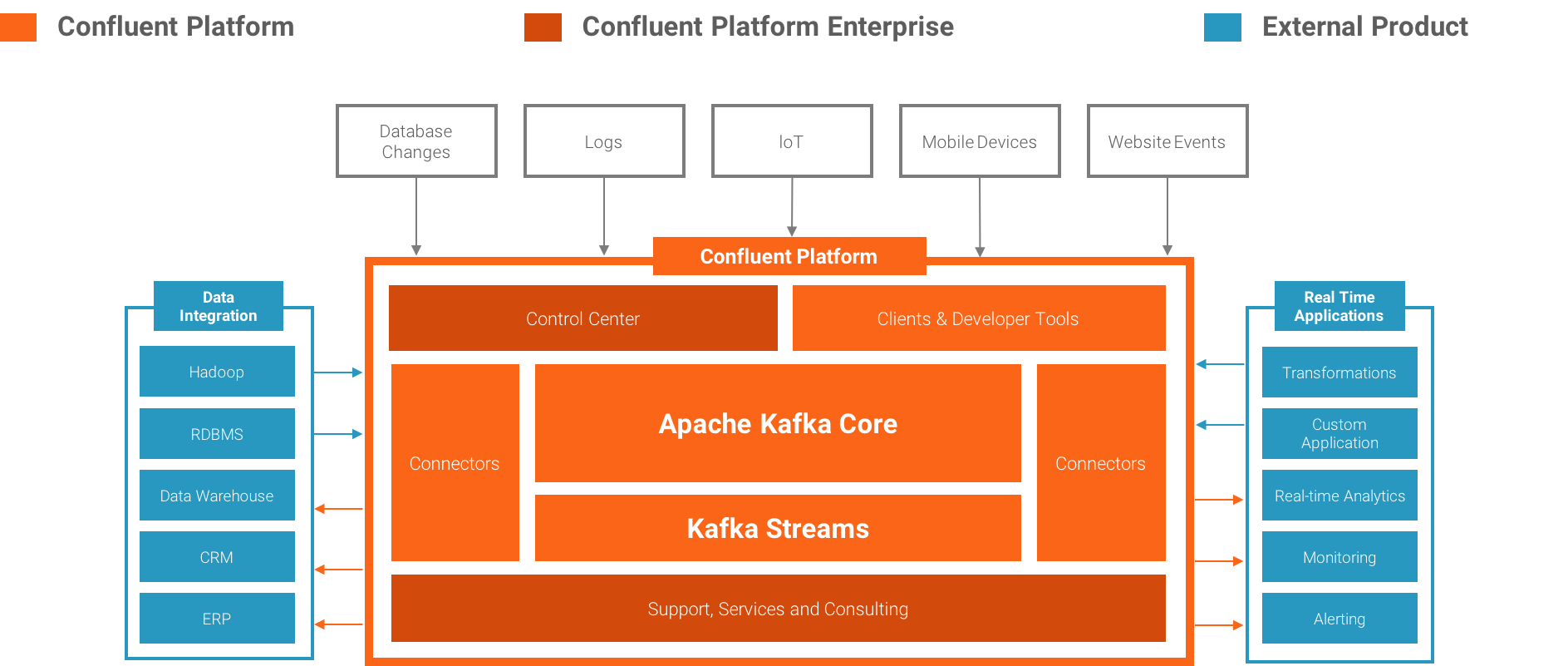

The Confluent Platform makes it easy to build real-time data pipelines and streaming applications. By integrating data from multiple sources and locations into a single, central stream data platform for your company, the Confluent Platform lets you focus on how to derive business value from your data rather than worrying about the underlying mechanics, such as how data is transported or massaged between various systems. Specifically, the Confluent Platform simplifies connecting data sources to Kafka, building applications with Kafka, and securing, monitoring, and managing your Kafka infrastructure.

Confluent Platform 3.0 Components

As its backbone, the Confluent Platform leverages Apache Kafka, the most popular open source real-time messaging system. Apache Kafka is a low-latency, highly scalable, distributed messaging system. It is used by hundreds of companies for many different use cases including collecting user activity data, system logs, application metrics, stock ticker data, and device instrumentation signals.

The Apache Kafka open source project consists of several key components:

- Kafka Brokers (open source). The Kafka brokers form the messaging, data persistence, and storage tier of Apache Kafka.

- Kafka Java Clients (open source). Java libraries for writing messages to Kafka and reading messages from Kafka.

- Kafka Streams (open source). Kafka Streams is a library that turns Apache Kafka into a full-featured, modern stream processing system.

- Kafka Connect (open source). A framework for scalably and reliably connecting Kafka with external systems such as databases, key-value stores, search indexes, and file systems.

Beyond Apache Kafka, the Confluent Platform also includes more tools and services that make it easier to build and manage a streaming platform:

- Confluent Control Center (proprietary), the most comprehensive GUI-driven system for managing and monitoring Apache Kafka

- Confluent Kafka Connectors (open source) for SQL databases, Hadoop, and Hive

- Confluent Kafka Clients (open source) for other programming languages including C/C++ and Python

- Confluent Kafka REST Proxy (open source) to allow any system that can connect through HTTP to send and receive messages with Kafka

- Confluent Schema Registry (open source) to help ensure that every application uses the correct data schema when writing data into a Kafka topic, and that every application can read the data from a Kafka topic.

Collectively, the components of the Confluent Platform give your team a simple and clear path towards establishing a consistent yet flexible approach for building an enterprise-wide stream data platform for a wide array of use cases.

The capabilities of these components are briefly summarized in the following sections and described in more detail throughout the other chapters of this documentation. We help you get started easily through guides and quickstarts, describe best practices for both the deployment and management of Kafka, and show you how to use the Confluent Platform to get the most out of your data infrastructure.

Apache Kafka¶

Apache Kafka is a real-time, fault-tolerant, highly scalable messaging system. It is used for many different applications including collecting data on user activity, aggregating application logs, transporting financial transactions, sending email and SMS messages, and measuring environment data.

Kafka’s unifying abstraction, a partitioned and replicated low-latency commit log, allows applications with very different throughput and latency requirements to be implemented on a single messaging system. It also encourages clean, loosely coupled architectures by acting as a highly reliable mediator between systems.
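To make the commit log abstraction more concrete, the following is a minimal in-memory sketch in Python. It is not how Kafka is implemented: replication and persistence are left out, the class and method names are hypothetical, and CRC32 is used only as an illustrative way to map keys to partitions. It shows the two properties that matter to applications: records are only ever appended, and each record is addressed by a partition and an offset.

```python
import zlib


class PartitionedLog:
    """Toy in-memory model of a topic: a fixed set of append-only partitions."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, key, value):
        """Append a record and return its (partition, offset)."""
        # Hash the key so records with the same key always share a partition.
        # CRC32 is an illustrative choice, not the hash Kafka's clients use.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        log = self.partitions[partition]
        log.append((key, value))
        return partition, len(log) - 1

    def read(self, partition, offset):
        """Return all records in a partition starting at the given offset."""
        return self.partitions[partition][offset:]


log = PartitionedLog(num_partitions=3)
p1, o1 = log.append("user-42", "page_view")
p2, o2 = log.append("user-42", "click")
```

Because readers only hold an offset, a slow batch consumer and a low-latency consumer can read the same partition independently, each at its own pace.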

Kafka is a powerful and flexible tool that forms the foundation of the Confluent Platform. However, it is not a complete stream data platform: it provides a stable platform for transporting data, but system architects and application writers need many more tools to build production systems.

Note

Apache Kafka in the Confluent Platform: Confluent is committed to maintaining, enhancing, and supporting the open source Apache Kafka project. As this documentation discusses, the version of Kafka in the Confluent Platform may contain patches over the matching open source version but is fully compatible with the matching open source version.

Apache Kafka and all its sub-components are available as open source under the Apache License v2.0 license. Source code at https://github.com/apache/kafka.

Focus: Kafka Streams¶

Kafka Streams, a component of open source Apache Kafka, is a powerful, easy-to-use library for building highly scalable, fault-tolerant, distributed stream processing applications on top of Apache Kafka. It has a very low barrier to entry, easy operationalization, and a high-level DSL for writing stream processing applications. As such it is the most convenient yet scalable option to process and analyze data that is backed by Kafka.

Kafka Streams is a component of Apache Kafka and thus available as open source software under the Apache License v2.0 license. Source code at https://github.com/apache/kafka.

Focus: Kafka Connect¶

Kafka Connect, a component of open source Apache Kafka, is a tool for scalably and reliably streaming data between Kafka and other data systems. Kafka Connect makes it simple to configure connectors to move data into and out of Kafka. Kafka Connect can ingest entire databases or collect metrics from all your application servers into Kafka topics, making the data available for stream processing. Connectors can deliver data from Kafka topics into secondary indexes like Elasticsearch or into batch systems such as Hadoop for offline analysis.

Kafka Connect can run either as a standalone process for testing and one-off jobs, or as a distributed, scalable, fault-tolerant service supporting an entire organization. This allows it to scale down to development, testing, and small production deployments with a low barrier to entry and low operational overhead, and to scale up to support a large organization’s data pipeline.

Kafka Connect is a component of Apache Kafka and thus available as open source software under the Apache License v2.0 license. Source code at https://github.com/apache/kafka.

Confluent Control Center¶

Confluent Control Center is a GUI-based system for managing and monitoring Apache Kafka. It lets you manage Kafka Connect, including creating, editing, and managing connections to other systems. It also lets you monitor data streams from producer to consumer, verifying that every message is delivered and measuring how long it takes to deliver messages. Using Control Center, you can build a production data pipeline based on Apache Kafka without writing a line of code.

Confluent Control Center is a proprietary component of the Confluent Platform.

Kafka Connectors¶

Kafka connectors leverage the Kafka Connect framework to connect Apache Kafka to other data systems such as Apache Hadoop. We have built connectors for the most popular data sources and sinks, and include fully tested and supported versions of these connectors with the Confluent Platform.

Tip

Beyond the Kafka connectors listed below, which are part of the Confluent Platform, you can find further connectors at the Kafka Connector Hub.

Kafka Connect JDBC Connector¶

The Confluent JDBC connector allows you to import data from any JDBC-compatible relational database into Kafka. By using JDBC, this connector can support a wide variety of databases without requiring custom code for each one.

The JDBC connector is available as open source software under the Apache License v2.0 license. Source code at https://github.com/confluentinc/kafka-connect-jdbc.
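As an illustration of what configuring this connector can look like, the Python sketch below builds the JSON body that would be submitted to a Kafka Connect worker running in distributed mode. The helper function, connector name, database URL, column name, and topic prefix are all hypothetical placeholders, and the setting names should be checked against the JDBC connector documentation for your version.

```python
import json


def jdbc_source_config(name, connection_url, id_column, topic_prefix):
    """Build the JSON body for creating a JDBC source connector."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
            "tasks.max": "1",
            "connection.url": connection_url,
            # Detect new rows by a strictly increasing ID column.
            "mode": "incrementing",
            "incrementing.column.name": id_column,
            # Each table is written to the topic <topic_prefix><table name>.
            "topic.prefix": topic_prefix,
        },
    }


# All values below are placeholders for illustration only.
payload = jdbc_source_config(
    name="example-jdbc-source",
    connection_url="jdbc:sqlite:example.db",
    id_column="id",
    topic_prefix="example-jdbc-",
)
print(json.dumps(payload, indent=2))
```

Note that nothing in this configuration is specific to one database product: switching databases is a matter of changing the JDBC URL.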

Kafka Connect HDFS Connector¶

The Confluent HDFS connector allows you to export data from Kafka topics to HDFS in a variety of formats, and integrates with Hive to make the data immediately available for querying.

The HDFS connector is available as open source software under the Apache License v2.0 license. Source code at https://github.com/confluentinc/kafka-connect-hdfs.

Kafka Clients¶

Java client library¶

The open source Apache Kafka project includes a first-class Java client implementation, the “standard” Kafka client. See Kafka Clients for details.

C/C++ client library¶

The library librdkafka is the C/C++ implementation of the Apache Kafka protocol, containing both Producer and Consumer support. It was designed with message delivery, reliability and high performance in mind. Current benchmarking figures exceed 800,000 messages/second for the producer and 3 million messages/second for the consumer. This library includes support for many new features in Kafka 0.10, including message security. It also integrates easily with libserdes, our C/C++ library for Avro data serialization (supporting the Schema Registry). See Kafka Clients for details.

The C/C++ library is available as open source software under the BSD 2-clause license. Source code at https://github.com/edenhill/librdkafka.

Python client library¶

With CP 3.0, we are releasing a preview of a full-featured, high-performance client for Python. See Kafka Clients for details.

The Python library is available as open source software under the Apache License v2.0 license. Source code at https://github.com/confluentinc/confluent-kafka-python.
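The sketch below gives a rough idea of what producing messages with the Python client can look like. The helper names, topic name, and broker address are hypothetical, and because the client is a preview, its API may differ between releases. The sending logic is written against any object that offers `produce()` and `flush()`, so it can be tried without a running cluster.

```python
def send_all(producer, topic, messages):
    """Queue each message for delivery, then block until all are sent."""
    for message in messages:
        producer.produce(topic, value=message.encode("utf-8"))
    # produce() is asynchronous; flush() waits for outstanding deliveries.
    producer.flush()
    return len(messages)


def connect(bootstrap_servers):
    """Create a real producer. Requires the confluent-kafka package."""
    from confluent_kafka import Producer  # imported lazily on purpose
    return Producer({"bootstrap.servers": bootstrap_servers})


# Usage against a running cluster (the address is a placeholder):
#   send_all(connect("localhost:9092"), "example-topic", ["hello", "world"])
```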

Confluent Schema Registry¶

One of the most difficult challenges with loosely coupled systems is ensuring compatibility of data and code as the system grows and evolves. With a messaging service like Kafka, services that interact with each other must agree on a common format, called a schema, for messages. In many systems, these formats are ad hoc, only implicitly defined by the code, and often are duplicated across each system that uses that message type.

As requirements change, it becomes necessary to evolve these formats. With only an ad-hoc definition, it is very difficult for developers to determine what the impact of their change might be.

The Confluent Schema Registry enables safe, zero-downtime evolution of schemas by centralizing the management of schemas written for the Avro serialization system. It tracks all versions of schemas used for every topic in Kafka and only allows evolution of schemas according to user-defined compatibility settings. This gives developers confidence that they can safely modify schemas as necessary without worrying that doing so will break a different service they may not even be aware of.
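To illustrate the kind of rule a compatibility setting enforces, here is a deliberately simplified Python sketch of a backward compatibility check: a new schema is accepted only if it can still read records written with the old one. This is not the Schema Registry's actual algorithm. It looks only at top-level record fields, requires identical types for shared fields, and ignores Avro type promotion and aliases; the function and schema names are hypothetical.

```python
def is_backward_compatible(old_schema, new_schema):
    """Return True if new_schema can read records written with old_schema.

    Simplified: compares only top-level record fields, requires identical
    types for shared fields, and ignores Avro type promotion and aliases.
    """
    old_fields = {f["name"]: f for f in old_schema["fields"]}
    for field in new_schema["fields"]:
        old = old_fields.get(field["name"])
        if old is None:
            # A field the writer never set needs a default to fall back on.
            if "default" not in field:
                return False
        elif old["type"] != field["type"]:
            return False
    return True


v1 = {"type": "record", "name": "User",
      "fields": [{"name": "id", "type": "long"}]}

# Adds a field with a default: old records can still be read.
v2_ok = {"type": "record", "name": "User",
         "fields": [{"name": "id", "type": "long"},
                    {"name": "email", "type": "string", "default": ""}]}

# Adds a field without a default: old records cannot be read.
v2_bad = {"type": "record", "name": "User",
          "fields": [{"name": "id", "type": "long"},
                     {"name": "email", "type": "string"}]}

print(is_backward_compatible(v1, v2_ok))
print(is_backward_compatible(v1, v2_bad))
```

A registry that applies a check of this kind at registration time rejects the breaking change before any producer can start writing data with it.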

The Schema Registry also includes plugins for Kafka clients that handle schema storage and retrieval for Kafka messages that are sent in the Avro format. This integration is seamless – if you are already using Kafka with Avro data, using the Schema Registry only requires including the serializers with your application and changing one setting.
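One way to picture what the serializers do is the framing they put around each message: as we understand the format, the Avro payload is prefixed with a single magic byte (0) and the 4-byte, big-endian ID under which the schema is registered, so that a consumer can look up the right schema. The Python sketch below mimics only that framing. The function names are hypothetical, and the payload in the usage line is a stand-in rather than real Avro-encoded data.

```python
import struct

MAGIC_BYTE = 0
HEADER = struct.Struct(">bI")  # 1-byte magic + 4-byte big-endian schema ID


def frame(schema_id, avro_payload):
    """Prefix an Avro-encoded payload with the magic byte and schema ID."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + avro_payload


def unframe(message):
    """Split a framed message into (schema_id, avro_payload)."""
    magic, schema_id = HEADER.unpack(message[:HEADER.size])
    if magic != MAGIC_BYTE:
        raise ValueError("unknown magic byte: %d" % magic)
    return schema_id, message[HEADER.size:]


framed = frame(7, b"\x02")  # b"\x02" is a placeholder payload
```

Because only the small schema ID travels with each message, the full schema does not have to be repeated in every record.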

The Confluent Schema Registry is available as open source software under the Apache License v2.0 license. Source code at https://github.com/confluentinc/schema-registry.

Confluent Kafka REST Proxy¶

Apache Kafka and Confluent provide native clients for Java, C, C++, and Python that make it fast and easy to produce and consume messages through Kafka. These clients are usually the easiest, fastest, and most secure way to communicate directly with Kafka.

But sometimes, it isn’t practical to write and maintain an application that uses the native clients. For example, an organization might want to connect a legacy application written in PHP to Kafka. Or suppose that a company is running point-of-sale software that runs on cash registers, written in C# and running on Windows NT 4.0, maintained by contractors, and needs to post data across the public internet. To help with these cases, the Confluent Platform includes a REST Proxy.

The Confluent Kafka REST Proxy makes it easy to work with Kafka from any language by providing a RESTful HTTP service for interacting with Kafka clusters. The REST Proxy supports all the core functionality: sending messages to Kafka, reading messages (both individually and as part of a consumer group), and inspecting cluster metadata, such as the list of topics and their settings. You can get the full benefits of the high-quality, officially maintained Java clients from any language.

The REST Proxy also integrates with the Schema Registry. It can read and write Avro data, registering and looking up schemas in the Schema Registry. Because it automatically translates JSON data to and from Avro, you can get all the benefits of centralized schema management from any language using only HTTP and JSON.
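As a sketch of what "only HTTP and JSON" means in practice, the following Python snippet builds a request that produces JSON messages to a topic using nothing but the standard library. The base URL, topic name, and helper function are hypothetical, and the content type shown is the one we would expect for the v1 JSON format; check the REST Proxy API reference for your version before relying on it. The request is built but not sent, so the snippet runs without a REST Proxy.

```python
import json
import urllib.request


def build_produce_request(base_url, topic, values):
    """Build (but do not send) a request that produces JSON messages."""
    body = json.dumps({"records": [{"value": v} for v in values]})
    return urllib.request.Request(
        url="%s/topics/%s" % (base_url, topic),
        data=body.encode("utf-8"),
        # Assumed content type for JSON-embedded messages (v1 API).
        headers={"Content-Type": "application/vnd.kafka.json.v1+json"},
        method="POST",
    )


# Host, port, and topic are placeholders.
request = build_produce_request(
    "http://localhost:8082", "example-topic", [{"item": "coffee", "qty": 2}]
)

# To send it to a running REST Proxy:
#   with urllib.request.urlopen(request) as response:
#       print(json.load(response))
```

Any language with an HTTP client and a JSON library can construct the same request, which is what makes the proxy useful for legacy applications.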

The Confluent Kafka REST Proxy is available as open source software under the Apache License v2.0 license. Source code at https://github.com/confluentinc/kafka-rest.

Confluent Proactive Support¶

Proactive Support is a component of the Confluent Platform that improves Confluent’s support for the platform by collecting and reporting support metrics (“Metrics”) to Confluent. Proactive Support is enabled by default in the Confluent Platform. We do this to provide proactive support to our customers, to help us build better products, to help customers comply with support contracts, and to help guide our marketing efforts.

Proactive Support is composed of three sub-modules. Two of them are available as open source software under the Apache License v2.0 license. Source code for these components is available at https://github.com/confluentinc/support-metrics-common and https://github.com/confluentinc/support-metrics-client. There is also an optional metrics collection component available as part of the Support Metrics package. Support Metrics is a proprietary component of the Confluent Platform. You may use the Confluent Platform with (recommended) or without Support Metrics installed or enabled.

Migrating an existing Kafka deployment¶

Confluent is committed to supporting and developing the open source Apache Kafka project. The version of Kafka in the Confluent Platform is fully compatible with the matching open source version and only contains additional patches for critical bugs when the Confluent Platform and Apache Kafka release schedules do not align. An existing cluster can typically be upgraded easily by performing a rolling restart of Kafka brokers, though we do provide detailed instructions for each CP release.

Migrating developer tools¶

The rest of the Confluent Platform can be added incrementally. Start by adding the Schema Registry and updating your applications to use the Avro serializer. Next, add the REST Proxy to support applications that may not have access to good Kafka clients or Avro libraries. Finally, run periodic Camus jobs to automatically load data from Kafka into HDFS.

Migrating to Control Center¶

You can also migrate an existing cluster to take advantage of Control Center. Simply deploy Control Center on a server, configure the server to communicate with your cluster, add interceptors to your Connect instance, producers, and consumers, and you’re ready to go.