Hadoop Connections

A Hadoop connection enables Clover to interact with the Hadoop Distributed File System (HDFS) and to run MapReduce jobs on a Hadoop cluster. Hadoop connections can be created as either internal or external (shared). See sections Creating Internal Database Connections and Creating External (Shared) Database Connections to learn how to create them. The definition process for Hadoop connections is very similar to that of other connections in Clover; just select Create Hadoop connection instead of Create DB connection.

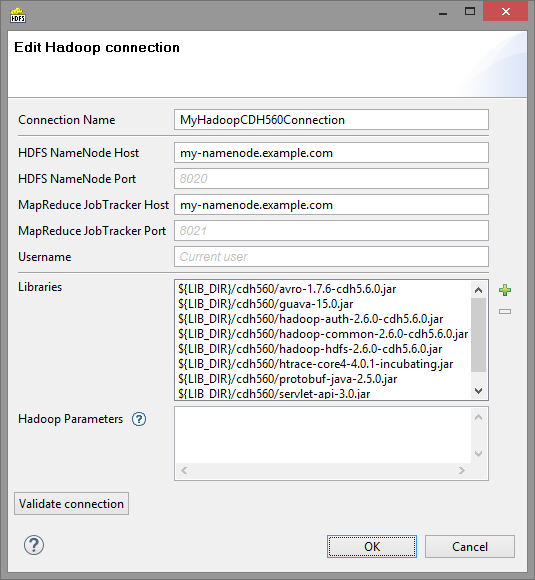

Figure 33.15. Hadoop Connection Dialog

Of the Hadoop connection properties, Connection Name and HDFS NameNode Host are mandatory. Libraries are almost always required as well.

- Connection Name

In this field, enter a name for this Hadoop connection. Note that if you are creating a new connection, the name you enter here is used to generate the ID of the connection. Whereas the connection name is just an informational label, the connection ID is used to reference this connection from various graph components (e.g. in a file URL, as noted in Reading of Remote Files). Once the connection is created, the ID cannot be changed in this dialog, to avoid accidentally breaking references (if you really want to change the ID of an existing connection, you can do so in the Properties view).

- HDFS NameNode Host & Port

Specify the hostname or IP address of your HDFS NameNode in the HDFS NameNode Host field.

If you leave the HDFS NameNode Port field empty, the default port number 8020 will be used.

- MapReduce JobTracker Host & Port

Specify the hostname or IP address of your JobTracker in the MapReduce JobTracker Host field. This field is optional. If you leave it empty, Clover won't be able to execute MapReduce jobs using this connection (access to HDFS will still work, though).

If you leave the MapReduce JobTracker Port field empty, the default port number 8021 will be used.

- Username

This is the name of the user under which you want to perform file operations on HDFS and execute MapReduce jobs.

HDFS works similarly to ordinary Unix file systems (file ownership, access permissions). However, unless your Hadoop cluster has Kerberos security enabled, these names serve merely as labels and as a safeguard against accidental data loss; anyone can impersonate anyone else with no effort.

MapReduce jobs, however, cannot easily be executed as a user other than the one that runs the Clover graph. If you need to execute MapReduce jobs, leave this field empty.

The default Username is the OS account name under which the Clover transformation graph runs. It can therefore be, for instance, your Windows login; the Linux machine running the HDFS NameNode doesn't need to have a user with the same name defined at all.

- Libraries

Here you have to specify paths to the Hadoop libraries needed to communicate with your Hadoop NameNode server and (optionally) JobTracker server. There are quite a few incompatible versions of Hadoop, so you have to pick the libraries that match the version of your Hadoop cluster. For a detailed overview of the required Hadoop libraries, see Libraries Needed for Hadoop.

For example, the screenshot above depicts the libraries needed to use the Cloudera 5.6 distribution of Hadoop. The libraries are available for download from Cloudera's web site.

The paths to the libraries can be absolute or project-relative. Graph parameters can be used as well.

![[Tip]](figures/tip.png)

Troubleshooting: If you omit a required library, you'll typically end up with a java.lang.NoClassDefFoundError.

If an attempt is made to connect to a Hadoop server of one version using libraries of a different version, an error like the following will usually appear:

org.apache.hadoop.ipc.RemoteException: Server IPC version 7 cannot communicate with client version 4.

![[Note]](figures/note.png)

Java versions: Hadoop is guaranteed to run only on Oracle Java 1.6+, but Hadoop developers do make an effort to remove any Oracle/Sun-specific code. See Hadoop Java Versions on the Hadoop Wiki.

Notably, the Cloudera 3 distribution of Hadoop works only with Oracle Java.

![[Tip]](figures/tip.png)

Usage on the Clover Server: Libraries do not need to be specified if they are present on the classpath of the application server where the Clover Server is deployed, for example if you use the Tomcat application server and the Hadoop libraries are present in the $CATALINA_HOME/lib directory.

If you do define the library paths, note that absolute paths are absolute paths on the application server. Relative paths are sandbox (project) relative and will work only if the libraries are located in a shared sandbox.

- Hadoop Parameters

In this simple text field, specify various parameters to fine-tune HDFS operations. Usually, leaving this field empty is just fine. You can find a list of available properties with their default values in the documentation of the core-default.xml and hdfs-default.xml files for your version of Hadoop. They are a bit difficult to find in the documentation, so here are a few example links: hdfs-default.xml of latest release and hdfs-default.xml for v.0.20.2. Only some of the properties listed there have an effect on Hadoop clients; most are exclusively server-side configuration.

The text you enter here has to be in the format of a standard Java properties file. Hover the mouse pointer over the question mark icon to get a hint.
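As a rough illustration of the Java properties format expected in the Hadoop Parameters field, the sketch below parses a sample text with the standard java.util.Properties class. The class name HadoopParametersExample is hypothetical, and the two property names are only examples (dfs.replication is listed in hdfs-default.xml, io.file.buffer.size in core-default.xml). This shows the format only, not how Clover itself reads the field.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

// Hypothetical helper for illustration only; it is not part of Clover.
final class HadoopParametersExample {

    // Parses text written in the standard Java properties format.
    static Properties parse(String text) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(text));
        return props;
    }

    public static void main(String[] args) throws IOException {
        // Example content for the Hadoop Parameters field (values are assumptions).
        String text = String.join("\n",
                "# lines starting with '#' or '!' are comments",
                "dfs.replication=2",
                "io.file.buffer.size = 65536");

        Properties props = parse(text);
        System.out.println(props.getProperty("dfs.replication"));     // prints 2
        System.out.println(props.getProperty("io.file.buffer.size")); // prints 65536
    }
}
```

Whitespace around the `=` separator is ignored by the properties format, while trailing whitespace after a value is kept, so it is safest to avoid it.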

Once you've finished setting up your Hadoop connection, click the Validate connection button to quickly verify that the parameters you entered can be used to establish a connection to your HDFS NameNode. Note that connection validation is not available if the libraries are located in a (remote) Clover Server sandbox.
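The host and port rules described for the NameNode and JobTracker fields can be summarised in a small sketch. The class and method names below are hypothetical and only model the documented behaviour (default ports 8020 and 8021, MapReduce disabled when the JobTracker host is empty); they are not Clover's actual implementation.

```java
// Hypothetical model of the dialog rules described above; not Clover code.
final class HadoopConnectionDefaults {

    static final int DEFAULT_NAMENODE_PORT = 8020;
    static final int DEFAULT_JOBTRACKER_PORT = 8021;

    // Returns "host:port", falling back to the default port when the port field is empty.
    static String hostAndPort(String host, String portField, int defaultPort) {
        boolean portEmpty = portField == null || portField.trim().isEmpty();
        String port = portEmpty ? String.valueOf(defaultPort) : portField.trim();
        return host.trim() + ":" + port;
    }

    // MapReduce job execution is available only when a JobTracker host is filled in.
    static boolean mapReduceEnabled(String jobTrackerHost) {
        return jobTrackerHost != null && !jobTrackerHost.trim().isEmpty();
    }

    public static void main(String[] args) {
        // The host name is an assumption used for illustration.
        System.out.println(hostAndPort("namenode.example.com", "", DEFAULT_NAMENODE_PORT));
        // prints namenode.example.com:8020
        System.out.println(mapReduceEnabled("")); // prints false
    }
}
```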

![[Note]](figures/note.png)

Note: HDFS fully supports the append file operation since Hadoop version 0.21.0.

Connecting to YARN (aka MapReduce 2.0, or MRv2)

If you run YARN instead of the first generation of the MapReduce framework on your Hadoop cluster, the following steps are required to configure the Clover Hadoop connection:

- Write an arbitrary value into the MapReduce JobTracker Host field. This value won't be used, but it ensures that MapReduce job execution is enabled for this Hadoop connection.

- Add this key-value pair to the Hadoop Parameters: mapreduce.framework.name=yarn

- In the Hadoop Parameters, add the key yarn.resourcemanager.address with a value in the form of a colon-separated hostname and port of your YARN ResourceManager, e.g. yarn.resourcemanager.address=my-resourcemanager.example.com:8032

You will probably have to specify the yarn.application.classpath parameter too if the default value from yarn-default.xml doesn't work. In that case, you would probably find a java.lang.NoClassDefFoundError in the log of the failed YARN application container.
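The YARN-related parameters can be sanity-checked before pasting them into the Hadoop Parameters field. The following is a minimal sketch, assuming the field content is available as a string; the class YarnParametersCheck and its messages are hypothetical, and the check covers only the two keys named above, not yarn.application.classpath.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Hypothetical checker for illustration; Clover does not ship such a class.
final class YarnParametersCheck {

    // Returns a list of problems found in the given Hadoop Parameters text (empty if none).
    static List<String> problems(String hadoopParameters) throws IOException {
        Properties props = new Properties();
        props.load(new StringReader(hadoopParameters));

        List<String> problems = new ArrayList<>();
        if (!"yarn".equals(props.getProperty("mapreduce.framework.name"))) {
            problems.add("mapreduce.framework.name must be set to yarn");
        }
        // Expect a colon-separated hostname and numeric port.
        String address = props.getProperty("yarn.resourcemanager.address", "");
        if (!address.matches("[^:\\s]+:\\d+")) {
            problems.add("yarn.resourcemanager.address must have the form hostname:port");
        }
        return problems;
    }

    public static void main(String[] args) throws IOException {
        String text = String.join("\n",
                "mapreduce.framework.name=yarn",
                "yarn.resourcemanager.address=my-resourcemanager.example.com:8032");
        System.out.println(problems(text)); // prints []
    }
}
```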

See also:

- HadoopReader

- HadoopWriter