The revised architecture for our application consists of a single IceGrid node responsible for our encoding server that runs on the computer named ComputeServer.

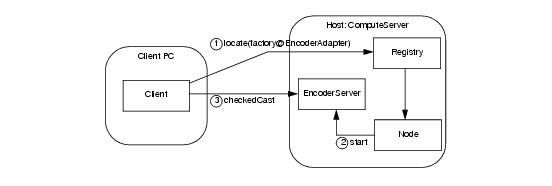

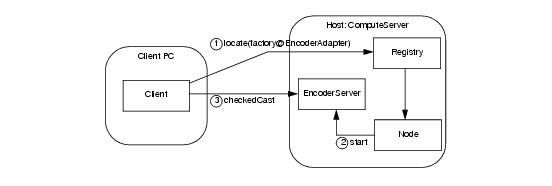

Figure 39.5 shows the client’s initial invocation on its indirect proxy and the actions that IceGrid takes to make this invocation possible.

In contrast to the architecture shown in Section 39.4.1, we no longer need to manually start our server. In this revised application, the client’s locate request prompts the registry to query the node about the server’s state and start it if necessary. Once the server starts successfully, the locate request completes and subsequent client communication occurs directly with the server.

We can deploy our application using the icegridadmin command line utility (see Section 39.23.1), but first we must define our descriptors in XML. The descriptors are quite brief:

    <icegrid>
        <application name="Ripper">
            <node name="Node1">
                <server id="EncoderServer"
                        exe="/opt/ripper/bin/server"
                        activation="on-demand">
                    <adapter name="EncoderAdapter"
                             id="EncoderAdapter"
                             endpoints="tcp"/>
                </server>
            </node>
        </application>
    </icegrid>

For IceGrid’s purposes, we have named our application Ripper. It consists of a single server, EncoderServer, assigned to the node Node1. The server’s exe attribute supplies the pathname of its executable, and the activation attribute indicates that the server should be activated on demand.

The object adapter’s descriptor is the most interesting. As you can see, the name and id attributes both specify the value EncoderAdapter. The value of name reflects the adapter’s name in the server process (i.e., the argument passed to createObjectAdapter) that is used for configuration purposes, whereas the value of id uniquely identifies the adapter within the registry and is used in indirect proxies. These attributes are not required to have the same value. Had we omitted the id attribute, IceGrid would have composed a unique value by combining the server name and adapter name to produce the following identifier:

    EncoderServer.EncoderAdapter
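The way a default adapter identifier is composed can be illustrated with a minimal sketch. The helper defaultAdapterId below is hypothetical and not part of the Ice API; it assumes the server name and adapter name are joined with a period, which matches the identifiers used in indirect proxies later in this section.

```cpp
#include <string>

// Hypothetical helper (not an Ice API): mirrors the default adapter
// identifier convention, assumed here to be "<server id>.<adapter name>".
std::string defaultAdapterId(const std::string& serverId,
                             const std::string& adapterName)
{
    return serverId + "." + adapterName;
}
```

Calling defaultAdapterId("EncoderServer", "EncoderAdapter") yields the identifier that the registry would use if the id attribute were omitted from the descriptor above.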

The endpoints attribute defines one or more endpoints for the adapter. As explained in Section 39.4.4, these endpoints do not require a fixed port.

See Section 39.16 for detailed information on using XML to define descriptors.

In Section 39.4.2, we created the directory /opt/ripper/registry for use by the registry. The node also needs a subdirectory for its own purposes, so we will use /opt/ripper/node. Again, these directories must exist before starting the registry and node.

We also need to create an Ice configuration file to hold properties required by the registry and node. The file /opt/ripper/config contains the following properties:

    # Registry properties
    IceGrid.Registry.Client.Endpoints=tcp -p 4061
    IceGrid.Registry.Server.Endpoints=tcp
    IceGrid.Registry.Internal.Endpoints=tcp
    IceGrid.Registry.AdminPermissionsVerifier=IceGrid/NullPermissionsVerifier
    IceGrid.Registry.Data=/opt/ripper/registry

    # Node properties
    IceGrid.Node.Endpoints=tcp
    IceGrid.Node.Name=Node1
    IceGrid.Node.Data=/opt/ripper/node
    IceGrid.Node.CollocateRegistry=1
    Ice.Default.Locator=IceGrid/Locator:tcp -p 4061

The registry and node can share this configuration file. In fact, by defining IceGrid.Node.CollocateRegistry=1, we have indicated that the registry and node should run in the same process.

Section 39.4.2 described the registry properties. One difference, however, is that we no longer define IceGrid.Registry.DynamicRegistration. By omitting this property, we force the registry to reject the registration of object adapters that have not been deployed.

Server configuration is accomplished using descriptors. During deployment, the node creates a subdirectory tree for each server. Inside this tree the node creates a configuration file containing properties derived from the server’s descriptors. For instance, the adapter’s descriptor in Section 39.5.2 generates the following properties in the server’s configuration file:

    # Server configuration
    Ice.Admin.ServerId=EncoderServer
    Ice.Admin.Endpoints=tcp -h 127.0.0.1
    Ice.ProgramName=EncoderServer

    # Object adapter EncoderAdapter
    EncoderAdapter.Endpoints=tcp
    EncoderAdapter.AdapterId=EncoderAdapter
    Ice.Default.Locator=IceGrid/Locator:default -p 4061
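To make the shape of this generated file concrete, the following sketch reads name=value lines into a map while skipping blank lines and # comments. The functions trim and parseProperties are hypothetical helpers written for illustration; they do not reproduce every rule of Ice’s own property parser, which a real server gets for free when it loads the file.

```cpp
#include <map>
#include <sstream>
#include <string>

// Hypothetical helper: strips leading and trailing whitespace.
std::string trim(const std::string& s)
{
    const std::string ws = " \t\r\n";
    const auto first = s.find_first_not_of(ws);
    if (first == std::string::npos)
    {
        return "";
    }
    const auto last = s.find_last_not_of(ws);
    return s.substr(first, last - first + 1);
}

// Hypothetical helper (simplified, not the Ice parser): collects
// "name=value" pairs, ignoring blank lines and lines starting with '#'.
std::map<std::string, std::string> parseProperties(const std::string& text)
{
    std::map<std::string, std::string> props;
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line))
    {
        line = trim(line);
        if (line.empty() || line[0] == '#')
        {
            continue; // comment or blank line
        }
        const auto eq = line.find('=');
        if (eq == std::string::npos)
        {
            continue; // not a property definition
        }
        props[trim(line.substr(0, eq))] = trim(line.substr(eq + 1));
    }
    return props;
}
```

Applied to the adapter portion of the file shown above, the resulting map associates EncoderAdapter.AdapterId with EncoderAdapter, which is the identifier the adapter registers with the registry.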

Note that this file should not be edited directly because any changes you make are lost the next time the node regenerates the file. The correct way to add properties to the file is to include property definitions in the server’s descriptor. For example, we can add the property Ice.Trace.Network=1 by modifying the server descriptor as follows:

    <icegrid>
        <application name="Ripper">
            <node name="Node1">
                <server id="EncoderServer"
                        exe="/opt/ripper/bin/server"
                        activation="on-demand">
                    <adapter name="EncoderAdapter"
                             id="EncoderAdapter"
                             endpoints="tcp"/>
                    <property name="Ice.Trace.Network"
                              value="1"/>
                </server>
            </node>
        </application>
    </icegrid>

When a node activates a server, it passes the location of the server’s configuration file using the --Ice.Config command-line argument. If you start a server manually from a command prompt, you must supply this argument yourself.
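Because it is easy to forget this argument when starting a server by hand, a server could check for it at startup and print a warning. The sketch below is one possible way to do so; findIceConfig is a hypothetical helper, not an Ice API. A real server normally passes argc and argv to Ice::initialize, which interprets the --Ice.Config option itself.

```cpp
#include <string>
#include <vector>

// Hypothetical helper (not an Ice API): returns the path given by the last
// "--Ice.Config=<path>" argument, or an empty string if none is present.
std::string findIceConfig(const std::vector<std::string>& args)
{
    const std::string prefix = "--Ice.Config=";
    std::string path;
    for (const auto& arg : args)
    {
        // compare() is non-zero when arg is shorter than the prefix
        if (arg.compare(0, prefix.size(), prefix) == 0)
        {
            path = arg.substr(prefix.size());
        }
    }
    return path;
}
```

A server’s main function could build the vector from argv and warn the user when the result is empty, since in that case the server would start without the properties generated by the node.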

Now that the configuration file is written and the directory structure is prepared, we are ready to start the IceGrid registry and node. Using a collocated registry and node, we only need to use one command:

$ icegridnode --Ice.Config=/opt/ripper/config

With the registry up and running, it is now time to deploy our application. Like our client, the icegridadmin utility also requires a definition for the Ice.Default.Locator property. We can start the utility with the following command:

$ icegridadmin --Ice.Config=/opt/ripper/config

After confirming that it can contact the registry, icegridadmin provides a command prompt at which we deploy our application. Assuming our descriptor is stored in /opt/ripper/app.xml, the first command below deploys it. The remaining commands confirm that the application is now listed, start the server, and display the current endpoints of its object adapter:

    >>> application add "/opt/ripper/app.xml"
    >>> application list
    Ripper
    >>> server start EncoderServer
    >>> adapter endpoints EncoderAdapter

We have deployed our first IceGrid application, but you might be questioning whether it was worth the effort. Even at this early stage, we have already gained several benefits:

Admittedly, we have not made much progress yet in our stated goal of improving the performance of the ripper over alternative solutions that are restricted to running on a single computer. Our client now has the ability to easily delegate the encoding task to a server running on another computer, but we have not achieved the parallelism that we really need. For example, if the client created a number of encoders and used them simultaneously from multiple threads, the encoding performance might actually be worse than simply encoding the data directly in the client, as the remote computer would likely slow to a crawl while attempting to task-switch among a number of processor-intensive tasks.

Adding more nodes to our environment would allow us to distribute the encoding load to more compute servers. Using the techniques we have learned so far, let us investigate the impact that adding a node would have on our descriptors, configuration, and client application.

    <icegrid>
        <application name="Ripper">
            <node name="Node1">
                <server id="EncoderServer1"
                        exe="/opt/ripper/bin/server"
                        activation="on-demand">
                    <adapter name="EncoderAdapter"
                             endpoints="tcp"/>
                </server>
            </node>
            <node name="Node2">
                <server id="EncoderServer2"
                        exe="/opt/ripper/bin/server"
                        activation="on-demand">
                    <adapter name="EncoderAdapter"
                             endpoints="tcp"/>
                </server>
            </node>
        </application>
    </icegrid>

Note that we now have two node elements instead of a single one. You might be tempted to simply use the host name as the node name. However, in general, that is not a good idea. For example, you may want to run several IceGrid nodes on a single machine (say, for testing). Similarly, you may have to rename a host at some point, or need to migrate a node to a different host. Unless you also rename the node, you end up with a node that carries the name of a (possibly obsolete) host on which it is not actually running. This makes for a confusing configuration, so it is better to use abstract node names, such as Node1.

Aside from the new node element, notice that the server identifiers must be unique. The adapter name, however, can remain as EncoderAdapter because this name is used only for local purposes within the server process. In fact, using a different name for each adapter would actually complicate the server implementation, since it would somehow need to discover the name it should use when creating the adapter.

We have also removed the id attribute from our adapter descriptors; the default values supplied by IceGrid are sufficient for our purposes (see Section 39.16.1).

We can continue to use the configuration file we created in Section 39.5.3 for our combined registry-node process. We need a separate configuration file for Node2, primarily to define a different value for the property IceGrid.Node.Name. However, we also cannot have two nodes configured with IceGrid.Node.CollocateRegistry=1 because only one master registry is allowed, so we must remove this property:

    IceGrid.Node.Endpoints=tcp
    IceGrid.Node.Name=Node2
    IceGrid.Node.Data=/opt/ripper/node
    Ice.Default.Locator=IceGrid/Locator:tcp -h registryhost -p 4061

We assume that /opt/ripper/node refers to a local file system directory on the computer hosting Node2, and not a shared volume, because two nodes must not share the same data directory.

    $ icegridadmin --Ice.Config=/opt/ripper/config
    >>> application update "/opt/ripper/app.xml"

We have added a new node, but we still need to modify our client to take advantage of it. As it stands now, our client can delegate an encoding task to one of the two MP3EncoderFactory objects. The client selects a factory by using the appropriate indirect proxy:

    // Pick one of the two deployed adapters at random.
    string adapter;
    if ((rand() % 2) == 0)
        adapter = "EncoderServer1.EncoderAdapter";
    else
        adapter = "EncoderServer2.EncoderAdapter";

    // Build the indirect proxy for the factory and narrow it.
    Ice::ObjectPrx proxy =
        communicator->stringToProxy("factory@" + adapter);
    Ripper::MP3EncoderFactoryPrx factory =
        Ripper::MP3EncoderFactoryPrx::checkedCast(proxy);
    Ripper::MP3EncoderPrx encoder = factory->createEncoder();
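The hard-coded choice between two adapters does not scale as nodes are added. As a rough sketch, the selection could be generalized to any number of servers, assuming they continue to follow the EncoderServer1, EncoderServer2, ... naming pattern used in the descriptors. The functions adapterIdFor and pickFactoryProxy are hypothetical helpers, not part of Ice, and the sketch swaps rand() for the standard <random> facilities.

```cpp
#include <cstddef>
#include <random>
#include <string>

// Hypothetical helper: default adapter id of the index-th encoder server,
// assuming the "EncoderServer<index>" naming pattern from the descriptors.
std::string adapterIdFor(std::size_t index)
{
    return "EncoderServer" + std::to_string(index) + ".EncoderAdapter";
}

// Hypothetical helper: picks one of serverCount servers uniformly at random
// and returns the stringified indirect proxy for its factory object.
// Precondition: serverCount >= 1.
template<class Rng>
std::string pickFactoryProxy(std::size_t serverCount, Rng& rng)
{
    std::uniform_int_distribution<std::size_t> dist(1, serverCount);
    return "factory@" + adapterIdFor(dist(rng));
}
```

The returned string would be passed to communicator->stringToProxy in the same way as in the fragment above. The client still has to know how many servers exist, so this only postpones the underlying problem of embedding deployment details in the client.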

Figure 39.5. Architecture for deployed ripper application.
