The failure of an IceGrid registry or registry host can have serious consequences. A client can continue to use an existing connection to a server without interruption, but any activity that requires interaction with the registry is vulnerable to a single point of failure. As a result, the IceGrid registry supports replication using a master-slave configuration to provide high availability for applications that require it.

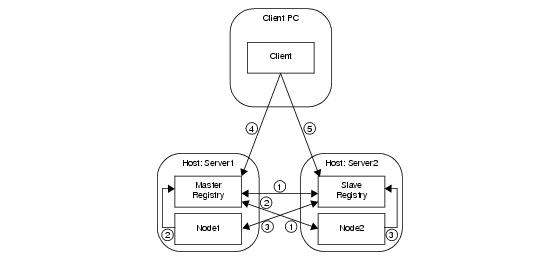

In IceGrid’s registry replication architecture, there is one master replica and any number of slave replicas. The master synchronizes its deployment information with the slaves so that any replica is capable of responding to locate requests, managing nodes, and starting servers on demand. Should the master registry or its host fail, properly configured clients transparently fail over to one of the slaves.

Each replica has a unique name. The name Master is reserved for the master replica, while replicas can use any name that can legally appear in an object identity.

Figure 38.6 illustrates the underlying concepts of registry replication:

A master registry replica has a number of responsibilities, only some of which are supported by slaves. The master replica knows all of its slaves, but the slaves are not in contact with one another. If the master replica fails, the slaves can perform several vital functions that should keep most applications running without interruption. Eventually, however, a new master replica must be started to restore full registry functionality. For a slave replica to become the master, the slave must be restarted.

One of the most important functions of a registry replica is responding to locate requests from clients, and every replica has the capability to service these requests. Slaves synchronize their databases with the master so that they have all of the information necessary to transform object identities, object adapter identifiers, and replica group identifiers into an appropriate set of endpoints.

Replicating the registry also replicates the object that supports the IceGrid::Query interface (see Section 38.6.5). A client that resolves the IceGrid/Query object identity receives the endpoints of all active replicas, any of which can execute the client’s requests.

The state of an IceGrid registry is accessible via the IceGrid::Admin interface or (more commonly) using an administrative tool that encapsulates this interface. Modifications to the registry’s state, such as deploying or updating an application, can only be done using the master replica. Administrative access to slave replicas is allowed but restricted to read-only operations. The administrative utilities provide mechanisms for you to select a particular replica to contact.

For programmatic access to a replica’s administrative interface, the IceGrid/Registry identity corresponds to the master replica and the identity IceGrid/Registry-name corresponds to the slave with the given name.

The registry implements the session manager interfaces required for integration with a Glacier2 router (see Section 38.15). The master replica supports the object identities IceGrid/SessionManager and IceGrid/AdminSessionManager. The slave replicas offer support for read-only administrative sessions using the object identity IceGrid/AdminSessionManager-name.
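The identity naming rules from the last two paragraphs can be summarized in a few lines of Python. This is only a sketch: `well_known_identities` is a hypothetical helper that formats strings and never contacts a registry, and the `instance_name` parameter assumes that the identity category follows IceGrid.InstanceName (IceGrid when that property is not set).

```python
MASTER = "Master"  # replica name reserved for the master, per the text above


def well_known_identities(replica_name=MASTER, instance_name="IceGrid"):
    """Return the administrative object identities offered by one replica.

    Hypothetical helper for illustration; it is not part of the Ice API.
    Assumes the identity category equals the registry's instance name.
    """
    if replica_name == MASTER:
        return {
            "registry": f"{instance_name}/Registry",
            "session_manager": f"{instance_name}/SessionManager",
            "admin_session_manager": f"{instance_name}/AdminSessionManager",
        }
    # Slaves append "-<name>" and only offer read-only admin sessions,
    # so there is no client session manager entry for them.
    return {
        "registry": f"{instance_name}/Registry-{replica_name}",
        "admin_session_manager": f"{instance_name}/AdminSessionManager-{replica_name}",
    }


print(well_known_identities("Replica1")["registry"])
```

For a slave named Replica1 this yields IceGrid/Registry-Replica1 and IceGrid/AdminSessionManager-Replica1, while the master keeps the unsuffixed identities.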

Each replica must specify a unique name in the configuration property IceGrid.Registry.ReplicaName. The default value of this property is Master, so the master replica can omit this property if desired.
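Because the default name is Master, a slave whose configuration omits IceGrid.Registry.ReplicaName would silently claim the master's name. A deployment script could catch this before the registries start. The sketch below is an assumption-laden illustration: each configuration is modeled as a plain dictionary of properties, and `replica_name` and `check_replica_names` are hypothetical helpers, not part of IceGrid.

```python
from collections import Counter

DEFAULT_REPLICA_NAME = "Master"  # default of IceGrid.Registry.ReplicaName


def replica_name(props):
    """Return the effective replica name for one registry's properties."""
    return props.get("IceGrid.Registry.ReplicaName", DEFAULT_REPLICA_NAME)


def check_replica_names(configs):
    """Return a list of naming problems found in a set of registry configs."""
    names = [replica_name(props) for props in configs]
    problems = [
        f"duplicate replica name: {name}"
        for name, count in sorted(Counter(names).items())
        if count > 1
    ]
    if DEFAULT_REPLICA_NAME not in names:
        problems.append("no replica is named Master")
    return problems


master = {"IceGrid.Registry.Data": "db/master"}  # name defaults to Master
slave = {"IceGrid.Registry.ReplicaName": "Replica1"}
print(check_replica_names([master, slave]))
```

An empty list means every replica has a distinct name and exactly one of them is the master.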

At startup, a slave replica attempts to register itself with its master in order to synchronize its databases and obtain the list of active nodes. The slave uses the proxy supplied by the Ice.Default.Locator property to connect to the master, so this proxy must be defined and must contain at least the endpoint of the master replica.

For better reliability if a failure occurs, we recommend that you also include the endpoints of all slave replicas in the Ice.Default.Locator property. There is no harm in adding the slave’s own endpoints to the proxy in Ice.Default.Locator; in fact, it makes configuration simpler because all of the slaves can share the same property definition. Although slaves do not communicate with each other, any one of them may later be promoted to master, so supplying the endpoints of all slaves minimizes the chance of a communication failure.
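Since every slave can share one Ice.Default.Locator definition, it can be convenient to generate that value from a single list of replica endpoints. The following is a minimal sketch; `locator_proxy` is a hypothetical helper, and the instance name and ports are illustrative values borrowed from the sample configurations in this section.

```python
def locator_proxy(instance_name, endpoints):
    """Build a stringified locator proxy listing every replica endpoint."""
    if not endpoints:
        raise ValueError("at least the master's endpoint is required")
    # Endpoints in a stringified proxy are separated by colons.
    return f"{instance_name}/Locator:" + ":".join(endpoints)


# Client endpoints of the master and of one slave (illustrative ports).
replicas = ["default -p 12000", "default -p 12001"]
print("Ice.Default.Locator=" + locator_proxy("DemoIceGrid", replicas))
```

In a deployment that spans several hosts, each endpoint would normally also name its host with the `-h` option.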

For example, a master replica might use the following configuration:

    IceGrid.InstanceName=DemoIceGrid
    IceGrid.Registry.Client.Endpoints=default -p 12000
    IceGrid.Registry.Server.Endpoints=default
    IceGrid.Registry.Internal.Endpoints=default
    IceGrid.Registry.Data=db/master
    ...

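A slave replica named Replica1 might use the following configuration, in which Ice.Default.Locator contains the endpoint of the master:

    Ice.Default.Locator=DemoIceGrid/Locator:default -p 12000
    IceGrid.Registry.Client.Endpoints=default -p 12001
    IceGrid.Registry.Server.Endpoints=default
    IceGrid.Registry.Internal.Endpoints=default
    IceGrid.Registry.Data=db/replica1
    IceGrid.Registry.ReplicaName=Replica1
    ...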

In a client, the endpoints contained in the Ice.Default.Locator property determine which registry replicas the client can use when issuing locate requests. If high availability is important, this property should include the endpoints of at least two (and preferably all) replicas. Not only does this increase the reliability of the client, it also distributes the workload of responding to locate requests among all of the replicas.
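The effect of listing several replicas can be illustrated with a toy model. The Ice run time performs endpoint selection and retries internally, so the code below is not how a real client is written; it is only a sketch of the idea, in which `locate` and `master_down` are hypothetical names and `is_up` stands in for a connection attempt.

```python
import random


def locate(endpoints, is_up, rng=random):
    """Return the first reachable replica endpoint, trying them in random order.

    `is_up` is a hypothetical callable standing in for a connection attempt.
    """
    candidates = list(endpoints)
    rng.shuffle(candidates)  # random order spreads requests across replicas
    for endpoint in candidates:
        if is_up(endpoint):
            return endpoint
    raise ConnectionError("no registry replica is reachable")


def master_down(endpoint):
    """Pretend the master on port 12000 has failed."""
    return endpoint != "default -p 12000"


replicas = ["default -p 12000", "default -p 12001"]
print(locate(replicas, master_down))  # always the slave on port 12001
```

With only the master's endpoint in the list, the same failure would raise an error instead of falling back to the slave, which is why the property should name at least two replicas.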

As with slave replicas and clients, an IceGrid node should be configured with an Ice.Default.Locator property that contains the endpoints of all replicas. Doing so allows a node to notify each of them about its presence, thereby enabling the replicas to activate its servers and obtain the endpoints of its object adapters.

For example, a node might use the following configuration:

    Ice.Default.Locator=DemoIceGrid/Locator:default -p 12000:default -p 12001
    IceGrid.Node.Name=node1
    IceGrid.Node.Endpoints=default
    IceGrid.Node.Data=db/node1