IMS Question and Test Interoperability Overview

Version 2.0 Final Specification

Copyright © 2005 IMS Global Learning Consortium, Inc. All Rights Reserved.

The IMS Logo is a registered trademark of IMS/GLC.

Document Name: IMS Question and Test Interoperability Overview

Revision: 24 January 2005

Date Issued: 24 January 2005

IPR and Distribution Notices

Recipients of this document are requested to submit, with their comments, notification of any relevant patent claims or other intellectual property rights of which they may be aware that might be infringed by any implementation of the specification set forth in this document, and to provide supporting documentation.

IMS takes no position regarding the validity or scope of any intellectual property or other rights that might be claimed to pertain to the implementation or use of the technology described in this document or the extent to which any license under such rights might or might not be available; neither does it represent that it has made any effort to identify any such rights. Information on IMS's procedures with respect to rights in IMS specifications can be found at the IMS Intellectual Property Rights web page: http://www.imsglobal.org/ipr/imsipr_policyFinal.pdf.

Copyright © 2005 IMS Global Learning Consortium. All Rights Reserved.

If you wish to copy or distribute this document, you must complete a valid Registered User license registration with IMS and receive an email from IMS granting the license to distribute the specification. To register, follow the instructions on the IMS website: http://www.imsglobal.org/specificationdownload.cfm.

This document may be copied and furnished to others by Registered Users who have registered on the IMS website provided that the above copyright notice and this paragraph are included on all such copies. However, this document itself may not be modified in any way, such as by removing the copyright notice or references to IMS, except as needed for the purpose of developing IMS specifications, under the auspices of a chartered IMS project group.

Use of this specification to develop products or services is governed by the license with IMS found on the IMS website: http://www.imsglobal.org/license.html.

The limited permissions granted above are perpetual and will not be revoked by IMS or its successors or assigns.

THIS SPECIFICATION IS BEING OFFERED WITHOUT ANY WARRANTY WHATSOEVER, AND IN PARTICULAR, ANY WARRANTY OF NONINFRINGEMENT IS EXPRESSLY DISCLAIMED. ANY USE OF THIS SPECIFICATION SHALL BE MADE ENTIRELY AT THE IMPLEMENTER'S OWN RISK, AND NEITHER THE CONSORTIUM, NOR ANY OF ITS MEMBERS OR SUBMITTERS, SHALL HAVE ANY LIABILITY WHATSOEVER TO ANY IMPLEMENTER OR THIRD PARTY FOR ANY DAMAGES OF ANY NATURE WHATSOEVER, DIRECTLY OR INDIRECTLY, ARISING FROM THE USE OF THIS SPECIFICATION.

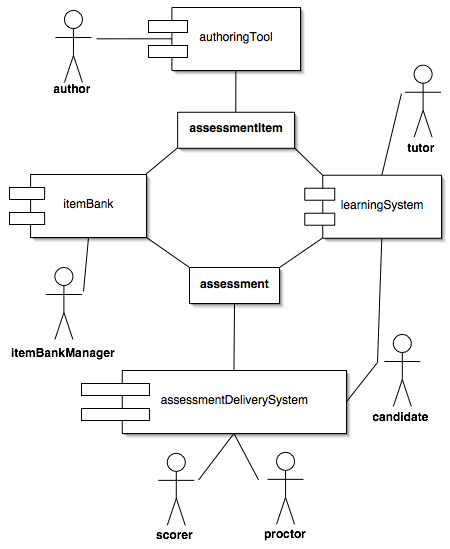

The IMS Question & Test Interoperability (QTI) specification describes a data model for the representation of question (assessmentItem) and test (assessment) data and their corresponding results reports. The specification therefore enables the exchange of this item, assessment and results data between authoring tools, item banks, learning systems and assessment delivery systems. The data model is described abstractly, using [UML], to facilitate binding to a wide range of data-modeling tools and programming languages. For interchange between systems, however, a binding is provided to the industry-standard eXtensible Markup Language [XML], and use of this binding is strongly recommended. The IMS QTI specification has been designed to support both interoperability and innovation through the provision of well-defined extension points. These extension points can be used to wrap specialized or proprietary data in ways that allow it to be used alongside items that can be represented directly.
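As a rough illustration of what exchanging an item through the XML binding might look like, the sketch below parses a minimal single-choice item with Python's standard library. The element and attribute names (assessmentItem, responseDeclaration, choiceInteraction, simpleChoice) and the namespace URI are assumed from the QTI 2.0 XML binding rather than stated in this overview, and the item content and the `summarize_item` helper are invented for illustration.

```python
import xml.etree.ElementTree as ET

# Assumption: namespace URI of the QTI 2.0 XML binding (not given in this overview).
QTI_NS = "http://www.imsglobal.org/xsd/imsqti_v2p0"

# Hypothetical single-choice item, invented for this example.
ITEM_XML = f"""
<assessmentItem xmlns="{QTI_NS}" identifier="demoChoice" title="Demo item"
                adaptive="false" timeDependent="false">
  <responseDeclaration identifier="RESPONSE" cardinality="single" baseType="identifier">
    <correctResponse><value>ChoiceA</value></correctResponse>
  </responseDeclaration>
  <itemBody>
    <choiceInteraction responseIdentifier="RESPONSE" shuffle="false" maxChoices="1">
      <prompt>Which option is correct?</prompt>
      <simpleChoice identifier="ChoiceA">First option</simpleChoice>
      <simpleChoice identifier="ChoiceB">Second option</simpleChoice>
    </choiceInteraction>
  </itemBody>
</assessmentItem>
"""


def summarize_item(xml_text):
    """Return the item's identifier, title, choice identifiers and correct value."""
    root = ET.fromstring(xml_text.strip())
    ns = {"qti": QTI_NS}
    # Collect the identifier of every simpleChoice, in document order.
    choices = [c.get("identifier") for c in root.iterfind(".//qti:simpleChoice", ns)]
    # Read the declared correct response, if the item declares one.
    correct = root.findtext(".//qti:correctResponse/qti:value", namespaces=ns)
    return {
        "identifier": root.get("identifier"),
        "title": root.get("title"),
        "choices": choices,
        "correct": correct,
    }


if __name__ == "__main__":
    print(summarize_item(ITEM_XML))
```

Because the item is plain XML, any system in the exchange (an authoring tool, an item bank or a delivery engine) could read it with a standard XML parser in the same way.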

An initial V0.5 specification was released for discussion in March 1999, and in November it was agreed to develop IMS Question & Test Interoperability v1.0, which was released as a public draft in February 2000 and as a final specification in May of that year. The specification was extended and updated twice, in March 2001 and January 2002. By February of that year, in excess of 6000 copies of the IMS QTI 1.x specifications had been downloaded from the IMS website.

Since then, a number of issues have been raised by implementers and reviewed by the QTI project team. Many of them were dealt with in an addendum, which defined version 1.2.1 of the specification and was released in March 2003. Some of the issues could not be dealt with in this way, either because they required changes to the specification that would not be backward compatible or because they uncovered more fundamental issues that would require extensive clarification or significant extension of the specification to resolve.

Since the QTI specification was first conceived, the breadth of IMS specifications has grown, and work on Content Packaging, Simple Sequencing and, most recently, Learning Design created the need for a cross-specification review. This review took place during 2003 and a number of harmonization issues affecting QTI were identified. In September of that year a project charter was agreed to address both the collected issues from 1.x and the harmonization issues, and to draft QTI V2.0. In order to make the work manageable and ensure that results were returned to the community at the earliest opportunity, some restrictions were placed on the scope of the recommended work. Therefore, this release of the specification concentrates only on the individual assessmentItem and does not update those parts of the specification that dealt with the aggregation of items into sections and assessments or the reporting of results.

The IMS QTI work specifically relates to content providers (that is, question and test authors and publishers), developers of authoring and content management tools, assessment delivery systems and learning systems. The data model for representing question-based content is suitable for targeting users in learning, education and training across all age ranges and national contexts.

QTI is designed to facilitate interoperability between a number of systems that are described here in relation to the actors that use them.

Specifically, QTI is designed to:

The Role of Assessments and Assessment Items

authoringTool: A system used by an author for creating or modifying an assessment item.

itemBank: A system for collecting and managing collections of assessment items.

assessmentDeliverySystem: A system for managing the delivery of assessments to candidates. The system contains a delivery engine for delivering the items to the candidates and scores the responses automatically (where applicable) or by distributing them to scorers.

learningSystem: A system that enables or directs learners in learning activities, possibly coordinated with a tutor. For the purposes of this specification, a learner exposed to an assessment item as part of an interaction with a learning system (i.e., through formative assessment) is still described as a candidate, as no formal distinction between formative and summative assessment is made. A learning system is also considered to contain a delivery engine, though the administration and security model is likely to be very different from that employed by an assessmentDeliverySystem.

The set of roles identified in this specification has been reduced to a small set of abstract actors for simplicity. Typically, roles in real learning and assessment systems are more complex but, for the purposes of this specification, it is assumed that they can be generalized by one or more of the roles defined here.

author: The author of an assessment item. In simple situations an item may have a single author; in more complex situations an item may go through a creation and quality control process involving many people. In this specification we identify all of these people with the role of author. An author is concerned with the content of an item, which distinguishes them from the role of an itemBankManager. An author interacts with an item through an authoringTool.

itemBankManager: An actor with responsibility for managing a collection of assessment items with an itemBank.

proctor: A person charged with overseeing the delivery of an assessment, often referred to as an invigilator. For the purposes of this specification, a proctor is anyone (other than the candidate) who is involved in the delivery process but who does not have a role in assessing the candidate's responses.

scorer: A person or external system responsible for assessing the candidate's responses during assessment delivery. Scorers are optional; for example, many assessment items can be scored automatically using response processing rules defined in the item itself.

tutor: Someone involved in managing, directing or supporting the learning process for a learner but who is not subject to (the same) assessment.

candidate: The person being assessed by an assessment or assessment item.
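The division of labour described above, where a delivery engine scores a response automatically when the item carries its own rule and otherwise passes it to a scorer, can be sketched as follows. This is only an illustration under simplifying assumptions: the `Item` class, `score_response` function and `human_scorer` callback are hypothetical names, and comparing against a single correct response is a stand-in for QTI response processing, not a rendering of it.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Item:
    """Hypothetical, heavily simplified stand-in for an assessment item."""
    identifier: str
    # None means the item defines no automatic rule and needs a scorer.
    correct_response: Optional[str] = None


def score_response(
    item: Item,
    response: str,
    human_scorer: Optional[Callable[[Item, str], float]] = None,
) -> float:
    """Score automatically where possible, otherwise delegate to a scorer."""
    if item.correct_response is not None:
        # Automatic path: the rule travels with the item itself.
        return 1.0 if response == item.correct_response else 0.0
    if human_scorer is None:
        raise ValueError(f"item {item.identifier!r} needs a scorer")
    # Manual path: distribute the response to a person or external system.
    return human_scorer(item, response)


if __name__ == "__main__":
    choice_item = Item("q1", correct_response="ChoiceA")
    essay_item = Item("q2")
    print(score_response(choice_item, "ChoiceA"))
    print(score_response(essay_item, "An essay answer", human_scorer=lambda i, r: 0.5))
```

Keeping the scorer as an optional callback mirrors the text: the scorer role only comes into play for items that cannot be scored from rules defined in the item.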

The specification is spread over a number of documents:

This specification updates version 1; however, in its current form, it does not yet replace it entirely. The following documents were released with version 1.2:

| Title | IMS Question and Test Interoperability Overview |
|---|---|
| Editor | Steve Lay (University of Cambridge) |
| Version | 2.0 |
| Version Date | 24 January 2005 |
| Status | Final Specification |
| Summary | This document describes the QTI specification Overview. |
| Revision Information | 24 January 2005 |
| Purpose | This document has been approved by the IMS Technical Board and is made available for adoption. |
| Document Location | http://www.imsglobal.org/question/qti_v2p0/imsqti_oviewv2p0.html |

To register any comments or questions about this specification please visit: http://www.imsglobal.org/developers/ims/imsforum/categories.cfm?catid=23

The following individuals contributed to the development of this document:

| Name | Organization | Name | Organization |
|---|---|---|---|
| Niall Barr | CETIS | Joshua Marks | McGraw-Hill |
| Sam Easterby-Smith | Canvas Learning | David Poor | McGraw-Hill |
| Jeanne Ferrante | ETS | Greg Quirus | ETS |
| Pierre Gorissen | SURF | Niall Sclater | CETIS |
| Regina Hoag | ETS | Colin Smythe | IMS |
| Christian Kaefer | McGraw-Hill | GT Springer | Texas Instruments |
| John Kleeman | Question Mark | Colin Tattersall | OUNL |
| Steve Lay | UCLES | Rowin Young | CETIS |
| Jez Lord | Canvas Learning | | |

| Version No. | Release Date | Comments |
|---|---|---|
| Base Document 2.0 | 09 March 2004 | The first version of the QTI Item v2.0 specification. |
| Public Draft 2.0 | 07 June 2004 | The Public Draft version 2.0 of the QTI Item Specification. |
| Final 2.0 | 24 January 2005 | The Final version 2.0 of the QTI specification. |

IMS Global Learning Consortium, Inc. ("IMS/GLC") is publishing the information contained in this IMS Question and Test Interoperability Overview ("Specification") for purposes of scientific, experimental, and scholarly collaboration only.

IMS/GLC makes no warranty or representation regarding the accuracy or completeness of the Specification.

This material is provided on an "As Is" and "As Available" basis.

The Specification is at all times subject to change and revision without notice.

It is your sole responsibility to evaluate the usefulness, accuracy, and completeness of the Specification as it relates to you.

IMS/GLC would appreciate receiving your comments and suggestions.

Please contact IMS/GLC through our website at http://www.imsglobal.org

Please refer to Document Name: IMS Question and Test Interoperability Overview Revision: 24 January 2005