Unit Testing

Theano relies heavily on unit testing. Its importance cannot be stressed enough!

Unit Testing revolves around the following principles:

- ensuring correctness: making sure that your Op, Type or Optimization works in the way you intended it to work. It is important for this testing to be as thorough as possible: test not only the obvious cases, but more importantly the corner cases which are more likely to trigger bugs down the line.

- testing all possible failure paths: making sure that your code fails in the appropriate manner, by raising the correct errors in the appropriate situations.

- sanity check: making sure that everything still runs after you’ve done your modification. If your changes cause unit tests to start failing, it could be that you’ve changed an API on which other users rely. It is therefore your responsibility to either a) provide the fix or b) inform the author of your changes and coordinate with that person to produce a fix. If this sounds like too much of a burden... then good! APIs aren’t meant to be changed on a whim!

This page is in no way meant to replace tutorials on Python’s unittest module; for those, we refer the reader to the official documentation. We will, however, address certain specifics of how unittests relate to Theano.

Unittest Primer

A unittest is a subclass of unittest.TestCase, with member functions whose names start with the string test. For example:

import unittest

class MyTestCase(unittest.TestCase):

    def test0(self):
        pass
        # test passes cleanly

    def test1(self):
        self.assertTrue(2+2 == 5)
        # raises an AssertionError, causes test to fail

    def test2(self):
        assert 2+2 == 5
        # a plain assert also raises AssertionError, so this would be
        # reported as a failure too

    def test2(self):
        assert 2+2 == 4
        # this test has the same name as a previous one,
        # so this is the one that runs.
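
To see how unittest classifies outcomes, the following minimal sketch runs a small test case programmatically and counts the results. The function and class names are invented for illustration. It relies on the fact that unittest reports any AssertionError (whether raised by assertTrue or by a plain assert) as a failure, while any other unexpected exception is reported as an error.

```python
import io
import unittest


def run_outcome_demo():
    """Run a small demo test case quietly and return its TestResult.

    The class is defined inside the function so that test collectors do
    not pick up its deliberately failing tests.
    """

    class OutcomeDemo(unittest.TestCase):
        def test_pass(self):
            self.assertEqual(2 + 2, 4)       # passes

        def test_assert_method(self):
            self.assertTrue(2 + 2 == 5)      # AssertionError -> failure

        def test_plain_assert(self):
            assert 2 + 2 == 5                # AssertionError -> failure

        def test_unexpected_exception(self):
            raise ValueError("boom")         # other exception -> error

    suite = unittest.TestLoader().loadTestsFromTestCase(OutcomeDemo)
    # Send the report to an in-memory stream instead of stderr.
    return unittest.TextTestRunner(stream=io.StringIO()).run(suite)


result = run_outcome_demo()
# tests run, failures, errors
print(result.testsRun, len(result.failures), len(result.errors))
```

The failures and errors attributes of the result are lists of (test, traceback) pairs, which is convenient when you want to inspect what went wrong from within Python.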

How to Run Unit Tests

Two options are available:

theano-nose

The easiest by far is to use theano-nose, a command-line utility that recurses through a given directory, finds all unittests matching specific criteria and executes them. By default, it finds and executes test cases in test*.py files whose method names start with ‘test’.

theano-nose is a wrapper around nosetests. You should be able to execute it if you installed Theano using pip, or if you ran “python setup.py develop” after the installation. If theano-nose is not found by your shell, you will need to add Theano/bin to your PATH environment variable.

Note

In Theano versions <= 0.5, theano-nose was not included. If you are working with such a version, you can call nosetests instead of theano-nose in all the examples below.

Running all unit tests

cd Theano/theano

theano-nose

Running unit tests without capturing standard output

theano-nose -s

Running unit tests contained in a specific .py file

theano-nose <filename>.py

Running a specific unit test

theano-nose <filename>.py:<classname>.<method_name>

Using unittest module

To launch test cases from within Python, you can also use the functionality offered by the unittest module. The simplest thing is to run all the tests in a file using unittest.main(). This call inspects the current module, collects all the unittest.TestCase classes you have created, and runs them all, printing ‘.’ for passed tests, and a stack trace for exceptions. The standard footer code in Theano’s test files is:

if __name__ == '__main__':
    unittest.main()

You can also choose to run a subset of the full test suite.

To run all the tests in one or more TestCase subclasses:

loader = unittest.TestLoader()
suite = unittest.TestSuite()
suite.addTests(loader.loadTestsFromTestCase(MyTestCase0))
suite.addTests(loader.loadTestsFromTestCase(MyTestCase1))
...
unittest.TextTestRunner(verbosity=2).run(suite)

To run just a single MyTestCase member test function called test0:

MyTestCase('test0').debug()
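
As a self-contained illustration of running a subset of tests, here is a minimal sketch that combines two test cases into one suite and also runs a single method by name. The bodies of MyTestCase0 and MyTestCase1 and the helper run_cases are placeholders invented for this example.

```python
import io
import unittest


class MyTestCase0(unittest.TestCase):
    # Hypothetical test case with two trivial tests.
    def test_add(self):
        self.assertEqual(1 + 1, 2)

    def test_mul(self):
        self.assertEqual(2 * 3, 6)


class MyTestCase1(unittest.TestCase):
    # Hypothetical test case with a single trivial test.
    def test_sub(self):
        self.assertEqual(3 - 1, 2)


def run_cases(*cases):
    """Load every test from the given TestCase classes and run them."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for case in cases:
        suite.addTests(loader.loadTestsFromTestCase(case))
    stream = io.StringIO()  # capture the report instead of printing to stderr
    result = unittest.TextTestRunner(stream=stream, verbosity=2).run(suite)
    return result, stream.getvalue()


result, report = run_cases(MyTestCase0, MyTestCase1)
print(report)

# Run a single test method by name and collect its outcome.
single = unittest.TestResult()
MyTestCase0("test_add").run(single)
```

Collecting the tests into a fresh TestSuite keeps the loader and the suite as separate objects, so any number of test cases can be added before the runner is invoked.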

Folder Layout

“tests” directories are scattered throughout theano. Each tests subfolder is meant to contain the unittests which validate the .py files in the parent folder.

Files containing unittests should be prefixed with the word “test”.

Ideally, every Python module should have a unittest file associated with it, as shown below. Unittests testing functionality of module <module>.py should therefore be stored in tests/test_<module>.py:

Theano/theano/tensor/basic.py

Theano/theano/tensor/elemwise.py

Theano/theano/tensor/tests/test_basic.py

Theano/theano/tensor/tests/test_elemwise.py
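
The naming convention above can be expressed as a small path transformation. The helper below is purely illustrative (unittest_path_for is an invented name, not part of Theano) and assumes forward-slash paths like the ones listed above.

```python
import posixpath


def unittest_path_for(module_path):
    """Return the conventional test-file path for a module path.

    Hypothetical helper: <dir>/<module>.py -> <dir>/tests/test_<module>.py
    """
    directory, filename = posixpath.split(module_path)
    return posixpath.join(directory, "tests", "test_" + filename)


print(unittest_path_for("Theano/theano/tensor/basic.py"))
# Theano/theano/tensor/tests/test_basic.py
```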

How to Write a Unittest

Test Cases and Methods

Unittests should be grouped “logically” into test cases, which are meant to group all unittests operating on the same element and/or concept. Test cases are implemented as Python classes which inherit from unittest.TestCase.

Test cases contain multiple test methods. These should be prefixed with the word “test”.

Test methods should be as specific as possible and cover a particular aspect of the problem. For example, when testing the TensorDot Op, one test method could check for validity, while another could verify that the proper errors are raised when inputs have invalid dimensions.

Test method names should be as explicit as possible, so that users can see at first glance what functionality is being tested and what tests need to be added.

Example:

import unittest

class TestTensorDot(unittest.TestCase):

    def test_validity(self):
        # do stuff
        ...

    def test_invalid_dims(self):
        # do more stuff
        ...

Test cases can define a special setUp method, which will get called before each test method is executed. This is a good place to put functionality which is shared amongst all test methods in the test case (e.g. initializing data and parameters, or seeding random number generators; more on this later).

import unittest

import numpy

class TestTensorDot(unittest.TestCase):

    def setUp(self):
        # data which will be used in various test methods
        self.avals = numpy.array([[1, 5, 3], [2, 4, 1]])
        self.bvals = numpy.array([[2, 3, 1, 8], [4, 2, 1, 1], [1, 4, 8, 5]])

Similarly, test cases can define a tearDown method, which will be implicitly called at the end of each test method.
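
The following minimal sketch makes the call order visible by recording every setUp, test and tearDown call. LifecycleDemo and run_lifecycle_demo are names invented for this illustration; the point is that each test method gets its own fresh fixture.

```python
import io
import unittest


class LifecycleDemo(unittest.TestCase):
    # Hypothetical test case that records the order of calls.
    log = []

    def setUp(self):
        self.log.append("setUp")
        self.values = [1, 5, 3]  # fresh fixture for every test method

    def tearDown(self):
        self.log.append("tearDown")

    def test_a(self):
        self.log.append("test_a")
        self.values.append(7)  # does not leak into test_b
        self.assertEqual(len(self.values), 4)

    def test_b(self):
        self.log.append("test_b")
        self.assertEqual(self.values, [1, 5, 3])


def run_lifecycle_demo():
    """Run LifecycleDemo quietly and return the recorded call order."""
    del LifecycleDemo.log[:]
    suite = unittest.TestLoader().loadTestsFromTestCase(LifecycleDemo)
    unittest.TextTestRunner(stream=io.StringIO()).run(suite)
    return list(LifecycleDemo.log)


print(run_lifecycle_demo())
# ['setUp', 'test_a', 'tearDown', 'setUp', 'test_b', 'tearDown']
```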

Checking for correctness

When checking for correctness of mathematical expressions, the user should preferably compare theano’s output to the equivalent numpy implementation.

Example:

class TestTensorDot(unittest.TestCase):

    def setUp(self):
        ...

    def test_validity(self):
        a = T.dmatrix('a')
        b = T.dmatrix('b')
        c = T.dot(a, b)
        f = theano.function([a, b], [c])
        cmp = f(self.avals, self.bvals) == numpy.dot(self.avals, self.bvals)
        self.assertTrue(numpy.all(cmp))

Avoid hard-coding expected values, as in the following case:

self.assertTrue(numpy.all(f(self.avals, self.bvals) == numpy.array([[25, 25, 30, 28], [21, 18, 14, 25]])))

This makes the test case less manageable and forces the user to update the expected values each time the input is changed, or possibly when the module being tested changes (after a bug fix, for example). It also constrains the test case to specific input/output data pairs. The section on random values covers why this might not be such a good idea.

Here is a list of useful functions, as defined by TestCase:

- checking the state of boolean variables: assertTrue, assertFalse (or a plain assert statement)

- checking for (in)equality constraints: assertEqual, assertNotEqual

- checking for (in)equality constraints up to a given precision (very useful in theano): assertAlmostEqual, assertNotAlmostEqual
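
As a brief illustration of why the precision-based assertions matter for floating-point results, here is a minimal sketch using only the standard unittest module. The test case name is invented for the example.

```python
import unittest


class TestFloatComparison(unittest.TestCase):
    # Hypothetical test case illustrating tolerance-based assertions.

    def test_exact_equality_is_fragile(self):
        # 0.1 + 0.2 is not exactly 0.3 in binary floating point.
        self.assertNotEqual(0.1 + 0.2, 0.3)

    def test_almost_equal_places(self):
        # Default: the difference rounded to 7 decimal places must be zero.
        self.assertAlmostEqual(0.1 + 0.2, 0.3)
        self.assertAlmostEqual(1.0001, 1.0002, places=3)

    def test_almost_equal_delta(self):
        # Alternatively, bound the absolute difference directly.
        self.assertAlmostEqual(100.0, 100.4, delta=0.5)
        self.assertNotAlmostEqual(100.0, 101.0, delta=0.5)
```

Note that these assertions compare scalars; for whole arrays, a reduction such as numpy.all over an element-wise comparison (as in the example further up) is still needed.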

Checking for errors

On top of verifying that your code provides the correct output, it is equally important to test that it fails in the appropriate manner, raising the appropriate exceptions, etc. Silent failures are deadly, as they can go unnoticed for a long time and are hard to detect “after-the-fact”.

Example:

import unittest

import theano.tensor as T

class TestTensorDot(unittest.TestCase):
    ...

    def test_3D_dot_fail(self):
        def func():
            a = T.TensorType('float64', (False, False, False))()  # create 3d tensor
            b = T.dmatrix()
            c = T.dot(a, b)  # we expect this to fail
        # above should fail as dot operates on 2D tensors only
        self.assertRaises(TypeError, func)

Useful function, as defined by TestCase:

- assertRaises
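
assertRaises can be used in two ways: by passing the callable and its arguments, or as a context manager. The sketch below shows both on a toy function; checked_dot is a hypothetical helper written for this example and has nothing to do with Theano's dot.

```python
import unittest


def checked_dot(a, b):
    """Hypothetical helper: dot product of two equal-length sequences."""
    if len(a) != len(b):
        raise ValueError("length mismatch: %d != %d" % (len(a), len(b)))
    return sum(x * y for x, y in zip(a, b))


class TestCheckedDot(unittest.TestCase):

    def test_valid(self):
        self.assertEqual(checked_dot([1, 2], [3, 4]), 11)

    def test_length_mismatch_callable_form(self):
        # Pass the callable and its arguments separately.
        self.assertRaises(ValueError, checked_dot, [1, 2], [1, 2, 3])

    def test_length_mismatch_context_form(self):
        # The context-manager form also exposes the exception for inspection.
        with self.assertRaises(ValueError) as ctx:
            checked_dot([1, 2], [1, 2, 3])
        self.assertIn("length mismatch", str(ctx.exception))
```

The context-manager form avoids the need for a nested helper function when the failing code is more than a single call.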

Test Cases and Theano Modes

When compiling theano functions or modules, a mode parameter can be given to specify which linker and optimizer to use.

Example:

from theano import function

f = function([a,b],[c],mode='FAST_RUN')

Whenever possible, unit tests should omit this parameter. Leaving out the mode ensures that unit tests use the default mode. The default mode is taken from the configuration variable config.mode, which defaults to ‘FAST_RUN’ and can be set by various mechanisms (see config). In particular, the environment variable THEANO_FLAGS allows the user to easily switch the mode in which unittests are run. For example, to run all tests in all modes from a Bash script, type this:

THEANO_FLAGS='mode=FAST_COMPILE' theano-nose

THEANO_FLAGS='mode=FAST_RUN' theano-nose

THEANO_FLAGS='mode=DebugMode' theano-nose

Using Random Values in Test Cases

numpy.random is often used in unit tests to initialize large data structures, for use as inputs to the function or module being tested. When doing this, it is imperative that the random number generator be seeded at the beginning of each unit test. This will ensure that unittest behaviour is consistent from one execution to another (i.e., always pass or always fail).

Instead of using numpy.random.seed to do this, we encourage users to do the following:

import unittest

from theano.tests import unittest_tools

class TestTensorDot(unittest.TestCase):

    def setUp(self):
        unittest_tools.seed_rng()
        # OR ... call with an explicit seed
        unittest_tools.seed_rng(234234)  # use only if really necessary!

The behaviour of seed_rng is as follows:

- If an explicit seed is given, it will be used for seeding numpy’s rng.

- If not, it will use config.unittests.rseed (its default value is 666).

- If config.unittests.rseed is set to “random”, it will seed the rng with None, which is equivalent to seeding with a random seed.

The main advantage of using unittest_tools.seed_rng is that it allows us to change the seed used in the unittests without having to manually edit all the files. For example, this allows the nightly build to run theano-nose repeatedly, changing the seed on every run (hence achieving a higher confidence that the results are correct), while still making sure unittests are deterministic.

Users who prefer their unittests to be random (when run on their local machine) can simply set config.unittests.rseed to ‘random’ (see config).
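
The seed-selection rules described above can be summarised in a few lines. The sketch below is a stand-in written for illustration only: resolve_seed and draw are invented names, the logic is reconstructed from the description rather than taken from Theano's source, and the standard library's random module is used in place of numpy.random so that the example has no dependencies.

```python
import random

DEFAULT_RSEED = 666  # default of config.unittests.rseed, per the text above


def resolve_seed(explicit_seed=None, configured_rseed=DEFAULT_RSEED):
    """Return the seed to use, or None for a nondeterministic seed.

    Hypothetical stand-in for the decision described above; this is not
    Theano's code.
    """
    if explicit_seed is not None:
        return explicit_seed          # an explicit seed always wins
    if configured_rseed == "random":
        return None                   # None lets the generator seed itself
    return int(configured_rseed)      # otherwise use the configured value


def draw(seed, n=3):
    """Draw n numbers from a generator seeded with `seed`."""
    rng = random.Random(seed)  # stdlib stand-in for numpy.random
    return [rng.random() for _ in range(n)]


# Same resolved seed, same sequence: the test is reproducible.
assert draw(resolve_seed()) == draw(resolve_seed())
```

Keeping the decision in one place is what makes it possible to change the seed for a whole test run from the configuration, without touching individual test files.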

Similarly, to provide a seed to numpy.random.RandomState, simply use:

import numpy

from theano.tests import unittest_tools

rng = numpy.random.RandomState(unittest_tools.fetch_seed())

# OR providing an explicit seed
rng = numpy.random.RandomState(unittest_tools.fetch_seed(1231))  # again not recommended

Note that the ability to change the seed from one nosetests run to another is incompatible with the method of hard-coding the baseline values (against which we compare the Theano outputs). These must then be determined “algorithmically”. Although this represents more work, the test suite will be better because of it.

Creating an Op UnitTest

A few tools have been developed to help automate the development of unittests for Theano Ops.

Validating the Gradient¶

The verify_grad function can be used to validate that the grad

function of your Op is properly implemented. verify_grad is based

on the Finite Difference Method where the derivative of function f

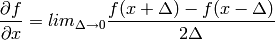

at point x is approximated as:

verify_grad performs the following steps:

- approximates the gradient numerically using the Finite Difference Method

- calculates the gradient using the symbolic expression provided in the grad function

- compares the two values. The test passes if they are equal to within a certain tolerance.

Here is the prototype for the verify_grad function.

def verify_grad(fun, pt, n_tests=2, rng=None, eps=1.0e-7, abs_tol=0.0001, rel_tol=0.0001):

verify_grad raises an Exception if the difference between the analytic gradient and the numerical gradient (computed through the Finite Difference Method) of a random projection of fun’s output to a scalar exceeds both the given absolute and relative tolerances.

The parameters are as follows:

- fun: a Python function that takes Theano variables as inputs, and returns a Theano variable. For instance, an Op instance with a single output is such a function. It can also be a Python function that calls an op with some of its inputs being fixed to specific values, or that combines multiple ops.

- pt: the list of numpy.ndarrays to use as input values

- n_tests: number of times to run the test

- rng: random number generator used to generate a random vector u; we check the gradient of sum(u*fun) at pt

- eps: step size used in the Finite Difference Method

- abs_tol: absolute tolerance used as threshold for gradient comparison

- rel_tol: relative tolerance used as threshold for gradient comparison
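
To make the idea behind gradient verification concrete, here is a dependency-free sketch of a finite-difference check on a scalar-valued function of several variables. It is not verify_grad: numeric_grad and check_grad are invented names, the sketch uses a central difference (which may differ from the formula Theano uses internally), it skips the random projection step, and the way the relative error is normalised is an assumption. It does mirror the rule that a mismatch is reported only when both the absolute and the relative tolerance are exceeded.

```python
import math


def numeric_grad(f, x, eps=1e-6):
    """Central-difference approximation of the gradient of f at x.

    f maps a list of floats to a float.
    """
    grad = []
    for i in range(len(x)):
        hi = list(x)
        lo = list(x)
        hi[i] += eps
        lo[i] -= eps
        grad.append((f(hi) - f(lo)) / (2.0 * eps))
    return grad


def check_grad(f, analytic_grad, x, eps=1e-6, abs_tol=1e-4, rel_tol=1e-4):
    """Raise ValueError if analytic and numeric gradients disagree.

    A component is rejected only when its error exceeds both tolerances.
    """
    numeric = numeric_grad(f, x, eps)
    for i, (a, n) in enumerate(zip(analytic_grad(x), numeric)):
        abs_err = abs(a - n)
        rel_err = abs_err / max(abs(a) + abs(n), 1e-12)
        if abs_err > abs_tol and rel_err > rel_tol:
            raise ValueError("gradient mismatch at index %d: %r vs %r" % (i, a, n))


def f(x):
    return sum(v * math.sin(v) for v in x)      # f(x) = sum_i x_i * sin(x_i)


def grad_f(x):
    return [math.sin(v) + v * math.cos(v) for v in x]


check_grad(f, grad_f, [0.3, -1.2, 2.0])  # passes silently
```

Passing a deliberately wrong gradient, for example lambda x: [math.cos(v) for v in x], makes check_grad raise, which is the behaviour one wants from a gradient test.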

In the general case, you can define fun as you want, as long as it takes as inputs Theano symbolic variables and returns a single Theano symbolic variable:

import numpy
from theano import tensor

def test_verify_exprgrad():
    def fun(x, y, z):
        return (x + tensor.cos(y)) / (4 * z)**2

    x_val = numpy.asarray([[1], [1.1], [1.2]])
    y_val = numpy.asarray([0.1, 0.2])
    z_val = numpy.asarray(2)
    rng = numpy.random.RandomState(42)

    tensor.verify_grad(fun, [x_val, y_val, z_val], rng=rng)

Here is an example showing how to use verify_grad on an Op instance:

def test_flatten_outdimNone():
    # Testing gradient w.r.t. all inputs of an op (in this example the op
    # being used is Flatten(), which takes a single input).
    a_val = numpy.asarray([[0, 1, 2], [3, 4, 5]], dtype='float64')
    rng = numpy.random.RandomState(42)
    tensor.verify_grad(tensor.Flatten(), [a_val], rng=rng)

Here is another example, showing how to verify the gradient w.r.t. a subset of an Op’s inputs. This is useful in particular when the gradient w.r.t. some of the inputs cannot be computed by finite difference (e.g. for discrete inputs), which would cause verify_grad to crash.

def test_crossentropy_softmax_grad():
    op = tensor.nnet.crossentropy_softmax_argmax_1hot_with_bias

    def op_with_fixed_y_idx(x, b):
        # Input `y_idx` of this Op takes integer values, so we fix them
        # to some constant array.
        # Although this op has multiple outputs, we can return only one.
        # Here, we return the first output only.
        return op(x, b, y_idx=numpy.asarray([0, 2]))[0]

    x_val = numpy.asarray([[-1, 0, 1], [3, 2, 1]], dtype='float64')
    b_val = numpy.asarray([1, 2, 3], dtype='float64')
    rng = numpy.random.RandomState(42)

    tensor.verify_grad(op_with_fixed_y_idx, [x_val, b_val], rng=rng)

Note

Although verify_grad is defined in theano.tensor.basic, unittests should use the version of verify_grad defined in theano.tests.unittest_tools. This is simply a wrapper function which takes care of seeding the random number generator appropriately before calling theano.tensor.basic.verify_grad.

makeTester and makeBroadcastTester

Most Op unittests perform the same function. All such tests must verify that the op generates the proper output, that the gradient is valid, and that the Op fails in known/expected ways. Because so much of this is common, two helper functions exist to make your life easier: makeTester and makeBroadcastTester (defined in module theano.tensor.tests.test_basic).

Here is an example of makeTester generating testcases for the Dot product op:

import numpy
from numpy.random import rand

from theano.tensor import dot
from theano.tensor.tests.test_basic import makeTester

DotTester = makeTester(name='DotTester',
                       op=dot,
                       expected=lambda x, y: numpy.dot(x, y),
                       checks={},
                       good=dict(correct1=(rand(5, 7), rand(7, 5)),
                                 correct2=(rand(5, 7), rand(7, 9)),
                                 correct3=(rand(5, 7), rand(7))),
                       bad_build=dict(),
                       bad_runtime=dict(bad1=(rand(5, 7), rand(5, 7)),
                                        bad2=(rand(5, 7), rand(8, 3))),
                       grad=dict())

In the above example, we provide a name and a reference to the op we want to test. We then provide, in the expected field, a function which makeTester can use to compute the correct values. The following five parameters are dictionaries which contain:

- checks: dictionary of validation functions (dictionary key is a description of what each function does). Each function accepts two parameters and performs some sort of validation check on each op-input/op-output value pairs. If the function returns False, an Exception is raised containing the check’s description.

- good: contains valid input values, for which the output should match the expected output. Unittest will fail if this is not the case.

- bad_build: invalid parameters which should generate an Exception when attempting to build the graph (call to make_node should fail). Fails unless an Exception is raised.

- bad_runtime: invalid parameters which should generate an Exception at runtime, when trying to compute the actual output values (call to perform should fail). Fails unless an Exception is raised.

- grad: dictionary containing input values which will be used in the call to verify_grad

makeBroadcastTester is a wrapper function for makeTester. If an inplace=True parameter is passed to it, it will take care of adding an entry to the checks dictionary. This check will ensure that inputs and outputs are equal, after the Op’s perform function has been applied.
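
To illustrate the general idea of generating a test case from dictionaries of inputs, here is a much-reduced sketch for plain Python callables. make_simple_tester and the toy dot function are invented for this example; the sketch is not Theano's makeTester and covers only the good and bad_runtime dictionaries.

```python
import unittest


def make_simple_tester(name, op, expected, good, bad_runtime):
    """Build a TestCase class from dictionaries of named inputs.

    Hypothetical, much-reduced analogue of makeTester for plain Python
    callables; it is not Theano's implementation.
    """

    def test_good(self):
        for label, inputs in sorted(good.items()):
            self.assertEqual(op(*inputs), expected(*inputs), msg=label)

    def test_bad_runtime(self):
        for label, inputs in sorted(bad_runtime.items()):
            # Each bad input must raise; otherwise the test fails.
            with self.assertRaises(Exception):
                op(*inputs)

    members = {"test_good": test_good, "test_bad_runtime": test_bad_runtime}
    return type(name, (unittest.TestCase,), members)


def dot(a, b):
    """Toy op under test: dot product of two equal-length lists."""
    if len(a) != len(b):
        raise ValueError("shape mismatch")
    return sum(x * y for x, y in zip(a, b))


DotTester = make_simple_tester(
    name="DotTester",
    op=dot,
    expected=lambda a, b: sum(x * y for x, y in zip(a, b)),
    good=dict(correct1=([1, 2, 3], [4, 5, 6]), correct2=([0], [9])),
    bad_runtime=dict(bad1=([1, 2], [1, 2, 3])),
)
```

Because the generated class is an ordinary unittest.TestCase, it is discovered and run like any hand-written test case.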