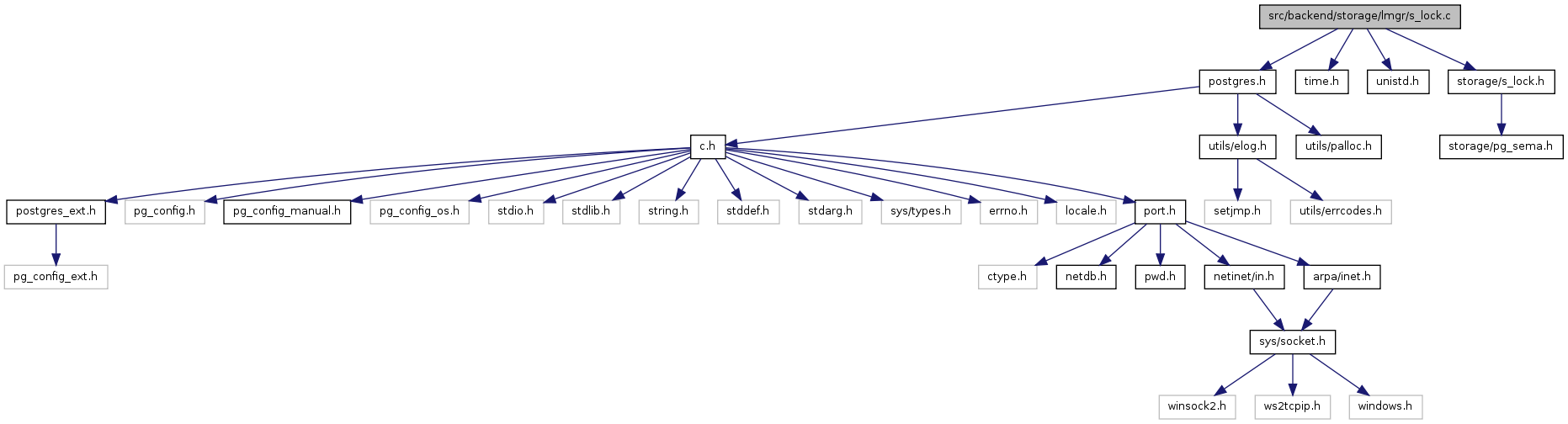

#include "postgres.h"#include <time.h>#include <unistd.h>#include "storage/s_lock.h"

Go to the source code of this file.

Defines | |

| #define | MIN_SPINS_PER_DELAY 10 |

| #define | MAX_SPINS_PER_DELAY 1000 |

| #define | NUM_DELAYS 1000 |

| #define | MIN_DELAY_MSEC 1 |

| #define | MAX_DELAY_MSEC 1000 |

Functions | |

| static void | s_lock_stuck (volatile slock_t *lock, const char *file, int line) |

| int | s_lock (volatile slock_t *lock, const char *file, int line) |

| void | set_spins_per_delay (int shared_spins_per_delay) |

| int | update_spins_per_delay (int shared_spins_per_delay) |

Variables | |

| slock_t | dummy_spinlock |

| static int | spins_per_delay = DEFAULT_SPINS_PER_DELAY |

| #define MAX_DELAY_MSEC 1000 |

Referenced by s_lock().

| #define MAX_SPINS_PER_DELAY 1000 |

Referenced by s_lock().

| #define MIN_DELAY_MSEC 1 |

| #define MIN_SPINS_PER_DELAY 10 |

Referenced by s_lock().

| #define NUM_DELAYS 1000 |

Referenced by s_lock().

| int s_lock | ( | volatile slock_t * | lock, | |

| const char * | file, | |||

| int | line | |||

| ) |

Definition at line 50 of file s_lock.c.

References Max, MAX_DELAY_MSEC, MAX_RANDOM_VALUE, MAX_SPINS_PER_DELAY, Min, MIN_SPINS_PER_DELAY, NUM_DELAYS, pg_usleep(), random(), s_lock_stuck(), SPIN_DELAY, spins_per_delay, and TAS_SPIN.

{

/*

* We loop tightly for awhile, then delay using pg_usleep() and try again.

* Preferably, "awhile" should be a small multiple of the maximum time we

* expect a spinlock to be held. 100 iterations seems about right as an

* initial guess. However, on a uniprocessor the loop is a waste of

* cycles, while in a multi-CPU scenario it's usually better to spin a bit

* longer than to call the kernel, so we try to adapt the spin loop count

* depending on whether we seem to be in a uniprocessor or multiprocessor.

*

* Note: you might think MIN_SPINS_PER_DELAY should be just 1, but you'd

* be wrong; there are platforms where that can result in a "stuck

* spinlock" failure. This has been seen particularly on Alphas; it seems

* that the first TAS after returning from kernel space will always fail

* on that hardware.

*

* Once we do decide to block, we use randomly increasing pg_usleep()

* delays. The first delay is 1 msec, then the delay randomly increases to

* about one second, after which we reset to 1 msec and start again. The

* idea here is that in the presence of heavy contention we need to

* increase the delay, else the spinlock holder may never get to run and

* release the lock. (Consider situation where spinlock holder has been

* nice'd down in priority by the scheduler --- it will not get scheduled

* until all would-be acquirers are sleeping, so if we always use a 1-msec

* sleep, there is a real possibility of starvation.) But we can't just

* clamp the delay to an upper bound, else it would take a long time to

* make a reasonable number of tries.

*

* We time out and declare error after NUM_DELAYS delays (thus, exactly

* that many tries). With the given settings, this will usually take 2 or

* so minutes. It seems better to fix the total number of tries (and thus

* the probability of unintended failure) than to fix the total time

* spent.

*

* The pg_usleep() delays are measured in milliseconds because 1 msec is a

* common resolution limit at the OS level for newer platforms. On older

* platforms the resolution limit is usually 10 msec, in which case the

* total delay before timeout will be a bit more.

*/

#define MIN_SPINS_PER_DELAY 10

#define MAX_SPINS_PER_DELAY 1000

#define NUM_DELAYS 1000

#define MIN_DELAY_MSEC 1

#define MAX_DELAY_MSEC 1000

int spins = 0;

int delays = 0;

int cur_delay = 0;

while (TAS_SPIN(lock))

{

/* CPU-specific delay each time through the loop */

SPIN_DELAY();

/* Block the process every spins_per_delay tries */

if (++spins >= spins_per_delay)

{

if (++delays > NUM_DELAYS)

s_lock_stuck(lock, file, line);

if (cur_delay == 0) /* first time to delay? */

cur_delay = MIN_DELAY_MSEC;

pg_usleep(cur_delay * 1000L);

#if defined(S_LOCK_TEST)

fprintf(stdout, "*");

fflush(stdout);

#endif

/* increase delay by a random fraction between 1X and 2X */

cur_delay += (int) (cur_delay *

((double) random() / (double) MAX_RANDOM_VALUE) + 0.5);

/* wrap back to minimum delay when max is exceeded */

if (cur_delay > MAX_DELAY_MSEC)

cur_delay = MIN_DELAY_MSEC;

spins = 0;

}

}

/*

* If we were able to acquire the lock without delaying, it's a good

* indication we are in a multiprocessor. If we had to delay, it's a sign

* (but not a sure thing) that we are in a uniprocessor. Hence, we

* decrement spins_per_delay slowly when we had to delay, and increase it

* rapidly when we didn't. It's expected that spins_per_delay will

* converge to the minimum value on a uniprocessor and to the maximum

* value on a multiprocessor.

*

* Note: spins_per_delay is local within our current process. We want to

* average these observations across multiple backends, since it's

* relatively rare for this function to even get entered, and so a single

* backend might not live long enough to converge on a good value. That

* is handled by the two routines below.

*/

if (cur_delay == 0)

{

/* we never had to delay */

if (spins_per_delay < MAX_SPINS_PER_DELAY)

spins_per_delay = Min(spins_per_delay + 100, MAX_SPINS_PER_DELAY);

}

else

{

if (spins_per_delay > MIN_SPINS_PER_DELAY)

spins_per_delay = Max(spins_per_delay - 1, MIN_SPINS_PER_DELAY);

}

return delays;

}

| static void s_lock_stuck | ( | volatile slock_t * | lock, | |

| const char * | file, | |||

| int | line | |||

| ) | [static] |

| void set_spins_per_delay | ( | int | shared_spins_per_delay | ) |

Definition at line 168 of file s_lock.c.

References spins_per_delay.

Referenced by InitAuxiliaryProcess(), and InitProcess().

{

spins_per_delay = shared_spins_per_delay;

}

| int update_spins_per_delay | ( | int | shared_spins_per_delay | ) |

Definition at line 179 of file s_lock.c.

References spins_per_delay.

Referenced by AuxiliaryProcKill(), and ProcKill().

{

/*

* We use an exponential moving average with a relatively slow adaption

* rate, so that noise in any one backend's result won't affect the shared

* value too much. As long as both inputs are within the allowed range,

* the result must be too, so we need not worry about clamping the result.

*

* We deliberately truncate rather than rounding; this is so that single

* adjustments inside a backend can affect the shared estimate (see the

* asymmetric adjustment rules above).

*/

return (shared_spins_per_delay * 15 + spins_per_delay) / 16;

}

int spins_per_delay = DEFAULT_SPINS_PER_DELAY [static] |

Definition at line 25 of file s_lock.c.

Referenced by s_lock(), set_spins_per_delay(), and update_spins_per_delay().

1.7.1

1.7.1