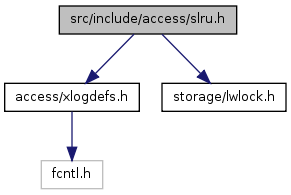

```c
#include "access/xlogdefs.h"
#include "storage/lwlock.h"
```


| Typedef |
|---|
| `typedef SlruCtlData *SlruCtl` |
| `typedef struct SlruCtlData SlruCtlData` |
| `typedef bool (*SlruScanCallback) (SlruCtl ctl, char *filename, int segpage, void *data)` |
| `typedef SlruSharedData *SlruShared` |
| `typedef struct SlruSharedData SlruSharedData` |

`enum SlruPageStatus`

Definition at line 45 of file slru.h.

```c
typedef enum
{
    SLRU_PAGE_EMPTY,             /* buffer is not in use */
    SLRU_PAGE_READ_IN_PROGRESS,  /* page is being read in */
    SLRU_PAGE_VALID,             /* page is valid and not being written */
    SLRU_PAGE_WRITE_IN_PROGRESS  /* page is being written out */
} SlruPageStatus;
```
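The four states form a small per-slot state machine. The sketch below mirrors the enum and turns the condition that the `Assert` calls in this file check before a slot is reused into a helper: a slot may be handed to a different page without I/O only if it is empty, or valid and clean. The helpers `slot_is_freeable` and `slot_has_io_in_progress` are hypothetical and are not part of `slru.h`.

```c
#include <assert.h>
#include <stdbool.h>

/* Mirrors the SlruPageStatus values documented above. */
typedef enum
{
    SLRU_PAGE_EMPTY,             /* buffer is not in use */
    SLRU_PAGE_READ_IN_PROGRESS,  /* page is being read in */
    SLRU_PAGE_VALID,             /* page is valid and not being written */
    SLRU_PAGE_WRITE_IN_PROGRESS  /* page is being written out */
} SlruPageStatus;

/*
 * Hypothetical helper: true if the slot can be reused for another page
 * without any I/O, i.e. it is empty, or it holds a valid page with no
 * unwritten changes.
 */
bool
slot_is_freeable(SlruPageStatus status, bool dirty)
{
    return status == SLRU_PAGE_EMPTY ||
           (status == SLRU_PAGE_VALID && !dirty);
}

/* Hypothetical helper: true if some process is doing I/O on the slot. */
bool
slot_has_io_in_progress(SlruPageStatus status)
{
    return status == SLRU_PAGE_READ_IN_PROGRESS ||
           status == SLRU_PAGE_WRITE_IN_PROGRESS;
}
```

A dirty valid page is not freeable because its contents would have to be written out first, which is why the callers in this file write or wait before reusing such a slot.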

Definition at line 1034 of file slru.c.

References Assert, CloseTransientFile(), SlruSharedData::ControlLock, SlruCtlData::do_fsync, SlruFlushData::fd, i, InvalidTransactionId, LW_EXCLUSIVE, LWLockAcquire(), LWLockRelease(), SlruFlushData::num_files, SlruSharedData::num_slots, SlruSharedData::page_dirty, SlruSharedData::page_status, pg_fsync(), SlruFlushData::segno, SlruCtlData::shared, slru_errcause, slru_errno, SLRU_PAGE_EMPTY, SLRU_PAGE_VALID, SlruInternalWritePage(), and SlruReportIOError().

Referenced by CheckPointCLOG(), CheckPointMultiXact(), CheckPointPredicate(), CheckPointSUBTRANS(), ShutdownCLOG(), ShutdownMultiXact(), and ShutdownSUBTRANS().

```c
{
    SlruShared  shared = ctl->shared;
    SlruFlushData fdata;
    int         slotno;
    int         pageno = 0;
    int         i;
    bool        ok;

    /*
     * Find and write dirty pages
     */
    fdata.num_files = 0;

    LWLockAcquire(shared->ControlLock, LW_EXCLUSIVE);

    for (slotno = 0; slotno < shared->num_slots; slotno++)
    {
        SlruInternalWritePage(ctl, slotno, &fdata);

        /*
         * When called during a checkpoint, we cannot assert that the slot is
         * clean now, since another process might have re-dirtied it already.
         * That's okay.
         */
        Assert(checkpoint ||
               shared->page_status[slotno] == SLRU_PAGE_EMPTY ||
               (shared->page_status[slotno] == SLRU_PAGE_VALID &&
                !shared->page_dirty[slotno]));
    }

    LWLockRelease(shared->ControlLock);

    /*
     * Now fsync and close any files that were open
     */
    ok = true;
    for (i = 0; i < fdata.num_files; i++)
    {
        if (ctl->do_fsync && pg_fsync(fdata.fd[i]))
        {
            slru_errcause = SLRU_FSYNC_FAILED;
            slru_errno = errno;
            pageno = fdata.segno[i] * SLRU_PAGES_PER_SEGMENT;
            ok = false;
        }

        if (CloseTransientFile(fdata.fd[i]))
        {
            slru_errcause = SLRU_CLOSE_FAILED;
            slru_errno = errno;
            pageno = fdata.segno[i] * SLRU_PAGES_PER_SEGMENT;
            ok = false;
        }
    }
    if (!ok)
        SlruReportIOError(ctl, pageno, InvalidTransactionId);
}
```
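The second loop keeps going after a failed fsync or close so that every file descriptor still gets closed, remembers the most recent failure, and reports a single error at the end. A minimal POSIX sketch of that pattern follows. `flush_and_close` and its out-parameters are hypothetical, and plain `fsync`/`close` stand in for PostgreSQL's `pg_fsync` and `CloseTransientFile`.

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <unistd.h>

/*
 * Hypothetical helper: optionally fsync, then close, every descriptor in
 * fds[0..nfiles-1]. It never stops early, so no descriptor is leaked.
 * The index and errno of the last failure are stored in the out-params,
 * and the function returns false if anything failed.
 */
bool
flush_and_close(const int *fds, int nfiles, bool do_fsync,
                int *failed_index, int *failed_errno)
{
    bool ok = true;

    assert(nfiles >= 0);
    for (int i = 0; i < nfiles; i++)
    {
        if (do_fsync && fsync(fds[i]) != 0)
        {
            *failed_index = i;
            *failed_errno = errno;
            ok = false;
        }
        if (close(fds[i]) != 0)
        {
            *failed_index = i;
            *failed_errno = errno;
            ok = false;
        }
    }
    return ok;
}
```

Deferring the error report until after the loop matters in the original for another reason too: `SlruReportIOError` raises an error, and raising it mid-loop would skip closing the remaining files.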

`void SimpleLruInit(SlruCtl ctl, const char *name, int nslots, int nlsns, LWLockId ctllock, const char *subdir)`

Definition at line 163 of file slru.c.

References Assert, SlruSharedData::buffer_locks, BUFFERALIGN, SlruSharedData::ControlLock, SlruSharedData::cur_lru_count, SlruCtlData::Dir, SlruCtlData::do_fsync, SlruSharedData::group_lsn, IsUnderPostmaster, SlruSharedData::lsn_groups_per_page, LWLockAssign(), MAXALIGN, SlruSharedData::num_slots, SlruSharedData::page_buffer, SlruSharedData::page_dirty, SlruSharedData::page_lru_count, SlruSharedData::page_number, SlruSharedData::page_status, SlruCtlData::shared, ShmemInitStruct(), SimpleLruShmemSize(), and StrNCpy.

Referenced by AsyncShmemInit(), CLOGShmemInit(), MultiXactShmemInit(), OldSerXidInit(), and SUBTRANSShmemInit().

```c
{
    SlruShared  shared;
    bool        found;

    shared = (SlruShared) ShmemInitStruct(name,
                                          SimpleLruShmemSize(nslots, nlsns),
                                          &found);

    if (!IsUnderPostmaster)
    {
        /* Initialize locks and shared memory area */
        char       *ptr;
        Size        offset;
        int         slotno;

        Assert(!found);

        memset(shared, 0, sizeof(SlruSharedData));

        shared->ControlLock = ctllock;

        shared->num_slots = nslots;
        shared->lsn_groups_per_page = nlsns;

        shared->cur_lru_count = 0;

        /* shared->latest_page_number will be set later */

        ptr = (char *) shared;
        offset = MAXALIGN(sizeof(SlruSharedData));
        shared->page_buffer = (char **) (ptr + offset);
        offset += MAXALIGN(nslots * sizeof(char *));
        shared->page_status = (SlruPageStatus *) (ptr + offset);
        offset += MAXALIGN(nslots * sizeof(SlruPageStatus));
        shared->page_dirty = (bool *) (ptr + offset);
        offset += MAXALIGN(nslots * sizeof(bool));
        shared->page_number = (int *) (ptr + offset);
        offset += MAXALIGN(nslots * sizeof(int));
        shared->page_lru_count = (int *) (ptr + offset);
        offset += MAXALIGN(nslots * sizeof(int));
        shared->buffer_locks = (LWLockId *) (ptr + offset);
        offset += MAXALIGN(nslots * sizeof(LWLockId));

        if (nlsns > 0)
        {
            shared->group_lsn = (XLogRecPtr *) (ptr + offset);
            offset += MAXALIGN(nslots * nlsns * sizeof(XLogRecPtr));
        }

        ptr += BUFFERALIGN(offset);
        for (slotno = 0; slotno < nslots; slotno++)
        {
            shared->page_buffer[slotno] = ptr;
            shared->page_status[slotno] = SLRU_PAGE_EMPTY;
            shared->page_dirty[slotno] = false;
            shared->page_lru_count[slotno] = 0;
            shared->buffer_locks[slotno] = LWLockAssign();
            ptr += BLCKSZ;
        }
    }
    else
        Assert(found);

    /*
     * Initialize the unshared control struct, including directory path. We
     * assume caller set PagePrecedes.
     */
    ctl->shared = shared;
    ctl->do_fsync = true;       /* default behavior */
    StrNCpy(ctl->Dir, subdir, sizeof(ctl->Dir));
}
```
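SimpleLruInit carves a single shared-memory area into the control struct, the per-slot arrays, and then the page buffers, advancing an offset by MAXALIGN'd sizes and BUFFERALIGN'ing the offset before the first page. The standalone sketch below reproduces that layout with `malloc` in place of `ShmemInitStruct`. The alignment values (8 and 32 bytes), `BLCKSZ = 8192`, and the trimmed `MiniShared` struct with only three per-slot arrays are assumptions for illustration; `mini_size` and `mini_init` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define BLCKSZ 8192   /* assumed page size */
#define TYPEALIGN(a, len) (((uintptr_t) (len) + ((a) - 1)) & ~((uintptr_t) ((a) - 1)))
#define MAXALIGN(len)    TYPEALIGN(8, (len))   /* assumed MAXIMUM_ALIGNOF */
#define BUFFERALIGN(len) TYPEALIGN(32, (len))  /* assumed ALIGNOF_BUFFER */

/* Hypothetical, trimmed-down stand-in for SlruSharedData. */
typedef struct
{
    int    num_slots;
    char **page_buffer;
    bool  *page_dirty;
    int   *page_number;
} MiniShared;

/* Size of the area, computed with the same rounding as the carving below. */
size_t
mini_size(int nslots)
{
    size_t sz = MAXALIGN(sizeof(MiniShared));

    sz += MAXALIGN(nslots * sizeof(char *));
    sz += MAXALIGN(nslots * sizeof(bool));
    sz += MAXALIGN(nslots * sizeof(int));
    return BUFFERALIGN(sz) + (size_t) BLCKSZ * nslots;
}

/* Carve base[0 .. mini_size(nslots)) the way SimpleLruInit does. */
MiniShared *
mini_init(char *base, int nslots)
{
    MiniShared *shared = (MiniShared *) base;
    size_t      offset = MAXALIGN(sizeof(MiniShared));
    char       *ptr;

    shared->num_slots = nslots;
    shared->page_buffer = (char **) (base + offset);
    offset += MAXALIGN(nslots * sizeof(char *));
    shared->page_dirty = (bool *) (base + offset);
    offset += MAXALIGN(nslots * sizeof(bool));
    shared->page_number = (int *) (base + offset);
    offset += MAXALIGN(nslots * sizeof(int));

    /* Page buffers start at a BUFFERALIGN'd offset, one BLCKSZ apart. */
    ptr = base + BUFFERALIGN(offset);
    for (int slotno = 0; slotno < nslots; slotno++)
    {
        shared->page_buffer[slotno] = ptr;
        shared->page_dirty[slotno] = false;
        shared->page_number[slotno] = -1;
        ptr += BLCKSZ;
    }
    /* Every byte handed out must lie inside the sized area. */
    assert((size_t) (ptr - base) == mini_size(nslots));
    return shared;
}
```

Keeping the size computation and the carving in lockstep is the design point: if one adds an array and the other does not, the page buffers would run past the end of the allocation.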

`int SimpleLruReadPage(SlruCtl ctl, int pageno, bool write_ok, TransactionId xid)`

Definition at line 358 of file slru.c.

References Assert, SlruSharedData::buffer_locks, SlruSharedData::ControlLock, LW_EXCLUSIVE, LWLockAcquire(), LWLockRelease(), SlruSharedData::page_dirty, SlruSharedData::page_number, SlruSharedData::page_status, SlruCtlData::shared, SimpleLruWaitIO(), SimpleLruZeroLSNs(), SLRU_PAGE_EMPTY, SLRU_PAGE_READ_IN_PROGRESS, SLRU_PAGE_VALID, SLRU_PAGE_WRITE_IN_PROGRESS, SlruPhysicalReadPage(), SlruRecentlyUsed, SlruReportIOError(), and SlruSelectLRUPage().

Referenced by asyncQueueAddEntries(), GetMultiXactIdMembers(), OldSerXidAdd(), RecordNewMultiXact(), SimpleLruReadPage_ReadOnly(), StartupMultiXact(), SubTransSetParent(), TransactionIdSetPageStatus(), and TrimCLOG().

```c
{
    SlruShared  shared = ctl->shared;

    /* Outer loop handles restart if we must wait for someone else's I/O */
    for (;;)
    {
        int         slotno;
        bool        ok;

        /* See if page already is in memory; if not, pick victim slot */
        slotno = SlruSelectLRUPage(ctl, pageno);

        /* Did we find the page in memory? */
        if (shared->page_number[slotno] == pageno &&
            shared->page_status[slotno] != SLRU_PAGE_EMPTY)
        {
            /*
             * If page is still being read in, we must wait for I/O. Likewise
             * if the page is being written and the caller said that's not OK.
             */
            if (shared->page_status[slotno] == SLRU_PAGE_READ_IN_PROGRESS ||
                (shared->page_status[slotno] == SLRU_PAGE_WRITE_IN_PROGRESS &&
                 !write_ok))
            {
                SimpleLruWaitIO(ctl, slotno);
                /* Now we must recheck state from the top */
                continue;
            }
            /* Otherwise, it's ready to use */
            SlruRecentlyUsed(shared, slotno);
            return slotno;
        }

        /* We found no match; assert we selected a freeable slot */
        Assert(shared->page_status[slotno] == SLRU_PAGE_EMPTY ||
               (shared->page_status[slotno] == SLRU_PAGE_VALID &&
                !shared->page_dirty[slotno]));

        /* Mark the slot read-busy */
        shared->page_number[slotno] = pageno;
        shared->page_status[slotno] = SLRU_PAGE_READ_IN_PROGRESS;
        shared->page_dirty[slotno] = false;

        /* Acquire per-buffer lock (cannot deadlock, see notes at top) */
        LWLockAcquire(shared->buffer_locks[slotno], LW_EXCLUSIVE);

        /* Release control lock while doing I/O */
        LWLockRelease(shared->ControlLock);

        /* Do the read */
        ok = SlruPhysicalReadPage(ctl, pageno, slotno);

        /* Set the LSNs for this newly read-in page to zero */
        SimpleLruZeroLSNs(ctl, slotno);

        /* Re-acquire control lock and update page state */
        LWLockAcquire(shared->ControlLock, LW_EXCLUSIVE);

        Assert(shared->page_number[slotno] == pageno &&
               shared->page_status[slotno] == SLRU_PAGE_READ_IN_PROGRESS &&
               !shared->page_dirty[slotno]);

        shared->page_status[slotno] = ok ? SLRU_PAGE_VALID : SLRU_PAGE_EMPTY;

        LWLockRelease(shared->buffer_locks[slotno]);

        /* Now it's okay to ereport if we failed */
        if (!ok)
            SlruReportIOError(ctl, pageno, xid);

        SlruRecentlyUsed(shared, slotno);
        return slotno;
    }
}
```
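Stripped of locking and I/O waits, SimpleLruReadPage is a lookup in a small fully associative cache: return the slot if the page is resident, otherwise claim a victim slot, read the page in, and mark the slot recently used. The single-threaded sketch below shows only that bookkeeping. Its victim policy (an empty slot first, else the slot with the oldest use counter) is a simplification of SlruSelectLRUPage, which is not shown in this section, and `MiniSlru`, `mini_read_page`, and the `fill_with_pageno` reader are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define NSLOTS 2
#define PAGESZ 16   /* tiny page for illustration */

typedef enum { EMPTY, VALID } Status;  /* only the states this sketch needs */

typedef struct
{
    Status status[NSLOTS];
    int    page_number[NSLOTS];
    int    lru_count[NSLOTS];
    int    cur_lru_count;
    char   buf[NSLOTS][PAGESZ];
    int    physical_reads;             /* counts cache misses */
} MiniSlru;

/* Simplified stand-in for SlruRecentlyUsed: stamp with a fresh counter. */
static void
recently_used(MiniSlru *s, int slotno)
{
    s->lru_count[slotno] = ++s->cur_lru_count;
}

/* Resident slot for pageno, else an empty slot, else the least recently used. */
static int
select_slot(const MiniSlru *s, int pageno)
{
    int victim = 0;

    for (int i = 0; i < NSLOTS; i++)
        if (s->status[i] != EMPTY && s->page_number[i] == pageno)
            return i;
    for (int i = 0; i < NSLOTS; i++)
    {
        if (s->status[i] == EMPTY)
            return i;
        if (s->lru_count[i] < s->lru_count[victim])
            victim = i;
    }
    return victim;
}

/* Demo reader: fill the page with its own page number. */
void
fill_with_pageno(int pageno, char *buf)
{
    memset(buf, pageno, PAGESZ);
}

int
mini_read_page(MiniSlru *s, int pageno, void (*read_fn)(int, char *))
{
    int slotno = select_slot(s, pageno);

    if (s->status[slotno] == VALID && s->page_number[slotno] == pageno)
    {
        recently_used(s, slotno);      /* hit */
        return slotno;
    }
    /* Miss: no dirty pages exist in this sketch, so no write-back needed. */
    s->page_number[slotno] = pageno;
    read_fn(pageno, s->buf[slotno]);
    s->physical_reads++;
    s->status[slotno] = VALID;
    recently_used(s, slotno);
    return slotno;
}
```

With two slots, reading pages 10, 11, 10, 12 evicts page 11, because page 10 was touched more recently.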

`int SimpleLruReadPage_ReadOnly(SlruCtl ctl, int pageno, TransactionId xid)`

Definition at line 450 of file slru.c.

References SlruSharedData::ControlLock, LW_EXCLUSIVE, LW_SHARED, LWLockAcquire(), LWLockRelease(), SlruSharedData::num_slots, SlruSharedData::page_number, SlruSharedData::page_status, SlruCtlData::shared, SimpleLruReadPage(), SLRU_PAGE_EMPTY, SLRU_PAGE_READ_IN_PROGRESS, and SlruRecentlyUsed.

Referenced by asyncQueueReadAllNotifications(), OldSerXidGetMinConflictCommitSeqNo(), SubTransGetParent(), TransactionIdGetStatus(), and TruncateMultiXact().

```c
{
    SlruShared  shared = ctl->shared;
    int         slotno;

    /* Try to find the page while holding only shared lock */
    LWLockAcquire(shared->ControlLock, LW_SHARED);

    /* See if page is already in a buffer */
    for (slotno = 0; slotno < shared->num_slots; slotno++)
    {
        if (shared->page_number[slotno] == pageno &&
            shared->page_status[slotno] != SLRU_PAGE_EMPTY &&
            shared->page_status[slotno] != SLRU_PAGE_READ_IN_PROGRESS)
        {
            /* See comments for SlruRecentlyUsed macro */
            SlruRecentlyUsed(shared, slotno);
            return slotno;
        }
    }

    /* No luck, so switch to normal exclusive lock and do regular read */
    LWLockRelease(shared->ControlLock);
    LWLockAcquire(shared->ControlLock, LW_EXCLUSIVE);

    return SimpleLruReadPage(ctl, pageno, true, xid);
}
```

`Size SimpleLruShmemSize(int nslots, int nlsns)`

Definition at line 143 of file slru.c.

References BUFFERALIGN, and MAXALIGN.

Referenced by AsyncShmemSize(), CLOGShmemSize(), MultiXactShmemSize(), PredicateLockShmemSize(), SimpleLruInit(), and SUBTRANSShmemSize().

```c
{
    Size        sz;

    /* we assume nslots isn't so large as to risk overflow */
    sz = MAXALIGN(sizeof(SlruSharedData));
    sz += MAXALIGN(nslots * sizeof(char *));            /* page_buffer[] */
    sz += MAXALIGN(nslots * sizeof(SlruPageStatus));    /* page_status[] */
    sz += MAXALIGN(nslots * sizeof(bool));              /* page_dirty[] */
    sz += MAXALIGN(nslots * sizeof(int));               /* page_number[] */
    sz += MAXALIGN(nslots * sizeof(int));               /* page_lru_count[] */
    sz += MAXALIGN(nslots * sizeof(LWLockId));          /* buffer_locks[] */

    if (nlsns > 0)
        sz += MAXALIGN(nslots * nlsns * sizeof(XLogRecPtr));   /* group_lsn[] */

    return BUFFERALIGN(sz) + BLCKSZ * nslots;
}
```
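The size is the MAXALIGN'd control struct plus per-slot arrays, rounded up with BUFFERALIGN, plus one BLCKSZ page per slot; `group_lsn[]` is counted only when `nlsns > 0`. The function below redoes that arithmetic outside PostgreSQL. The alignment constants (8 and 32), `BLCKSZ = 8192`, the 4-byte enum and lock-id sizes, the 8-byte `XLogRecPtr`, and the `header_size` parameter that replaces `sizeof(SlruSharedData)` are all assumptions; `slru_shmem_size` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BLCKSZ 8192   /* assumed page size */
#define TYPEALIGN(a, len) (((uintptr_t) (len) + ((a) - 1)) & ~((uintptr_t) ((a) - 1)))
#define MAXALIGN(len)    TYPEALIGN(8, (len))   /* assumed MAXIMUM_ALIGNOF */
#define BUFFERALIGN(len) TYPEALIGN(32, (len))  /* assumed ALIGNOF_BUFFER */

/* Hypothetical re-computation of SimpleLruShmemSize with fixed type sizes. */
size_t
slru_shmem_size(size_t header_size, int nslots, int nlsns)
{
    size_t n = (size_t) nslots;
    size_t sz = MAXALIGN(header_size);

    sz += MAXALIGN(n * sizeof(char *));   /* page_buffer[] */
    sz += MAXALIGN(n * 4);                /* page_status[] (assumed 4-byte enum) */
    sz += MAXALIGN(n * 1);                /* page_dirty[] (1-byte bool) */
    sz += MAXALIGN(n * sizeof(int));      /* page_number[] */
    sz += MAXALIGN(n * sizeof(int));      /* page_lru_count[] */
    sz += MAXALIGN(n * 4);                /* buffer_locks[] (assumed 4-byte id) */
    if (nlsns > 0)
        sz += MAXALIGN(n * (size_t) nlsns * 8);   /* group_lsn[], 8-byte LSN */
    return BUFFERALIGN(sz) + (size_t) BLCKSZ * n;
}
```

For example, on a 64-bit build with `header_size = 64`, 8 slots, and no LSN groups, the header and arrays take 64 + 64 + 32 + 8 + 32 + 32 + 32 = 264 bytes, BUFFERALIGN rounds that to 288, and the total is 288 + 8 × 8192 = 65824 bytes.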

`void SimpleLruTruncate(SlruCtl ctl, int cutoffPage)`

Definition at line 1097 of file slru.c.

References SlruSharedData::ControlLock, SlruCtlData::Dir, ereport, errmsg(), SlruSharedData::latest_page_number, LOG, LW_EXCLUSIVE, LWLockAcquire(), LWLockRelease(), NULL, SlruSharedData::num_slots, SlruSharedData::page_dirty, SlruSharedData::page_number, SlruSharedData::page_status, SlruCtlData::PagePrecedes, SlruCtlData::shared, SimpleLruWaitIO(), SLRU_PAGE_EMPTY, SLRU_PAGE_VALID, SlruInternalWritePage(), SlruScanDirCbDeleteCutoff(), and SlruScanDirectory().

Referenced by asyncQueueAdvanceTail(), CheckPointPredicate(), clog_redo(), TruncateCLOG(), TruncateMultiXact(), and TruncateSUBTRANS().

```c
{
    SlruShared  shared = ctl->shared;
    int         slotno;

    /*
     * The cutoff point is the start of the segment containing cutoffPage.
     */
    cutoffPage -= cutoffPage % SLRU_PAGES_PER_SEGMENT;

    /*
     * Scan shared memory and remove any pages preceding the cutoff page, to
     * ensure we won't rewrite them later.  (Since this is normally called in
     * or just after a checkpoint, any dirty pages should have been flushed
     * already ... we're just being extra careful here.)
     */
    LWLockAcquire(shared->ControlLock, LW_EXCLUSIVE);

restart:;

    /*
     * While we are holding the lock, make an important safety check: the
     * planned cutoff point must be <= the current endpoint page. Otherwise we
     * have already wrapped around, and proceeding with the truncation would
     * risk removing the current segment.
     */
    if (ctl->PagePrecedes(shared->latest_page_number, cutoffPage))
    {
        LWLockRelease(shared->ControlLock);
        ereport(LOG,
                (errmsg("could not truncate directory \"%s\": apparent wraparound",
                        ctl->Dir)));
        return;
    }

    for (slotno = 0; slotno < shared->num_slots; slotno++)
    {
        if (shared->page_status[slotno] == SLRU_PAGE_EMPTY)
            continue;
        if (!ctl->PagePrecedes(shared->page_number[slotno], cutoffPage))
            continue;

        /*
         * If page is clean, just change state to EMPTY (expected case).
         */
        if (shared->page_status[slotno] == SLRU_PAGE_VALID &&
            !shared->page_dirty[slotno])
        {
            shared->page_status[slotno] = SLRU_PAGE_EMPTY;
            continue;
        }

        /*
         * Hmm, we have (or may have) I/O operations acting on the page, so
         * we've got to wait for them to finish and then start again. This is
         * the same logic as in SlruSelectLRUPage.  (XXX if page is dirty,
         * wouldn't it be OK to just discard it without writing it?  For now,
         * keep the logic the same as it was.)
         */
        if (shared->page_status[slotno] == SLRU_PAGE_VALID)
            SlruInternalWritePage(ctl, slotno, NULL);
        else
            SimpleLruWaitIO(ctl, slotno);
        goto restart;
    }

    LWLockRelease(shared->ControlLock);

    /* Now we can remove the old segment(s) */
    (void) SlruScanDirectory(ctl, SlruScanDirCbDeleteCutoff, &cutoffPage);
}
```
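SimpleLruTruncate rounds the cutoff down to a segment boundary, refuses to proceed when the latest page already precedes that boundary (apparent wraparound), and otherwise discards resident pages that precede it before deleting old segment files. The sketch below isolates the rounding, the wraparound check, and the per-page discard decision. `SLRU_PAGES_PER_SEGMENT = 32` matches PostgreSQL's `slru.h`, but the plain `<` comparison standing in for `ctl->PagePrecedes` is an assumption (real SLRUs compare in a wraparound-aware way), and `plan_truncate` is hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

#define SLRU_PAGES_PER_SEGMENT 32   /* value from PostgreSQL's slru.h */

/* Assumed stand-in for ctl->PagePrecedes: no wraparound handling. */
static bool
page_precedes(int page1, int page2)
{
    return page1 < page2;
}

/*
 * Hypothetical helper. Returns false (refuse) on apparent wraparound.
 * Otherwise stores the segment-aligned cutoff, sets discard[i] for each
 * resident page that precedes it, and returns true.
 */
bool
plan_truncate(int latest_page, int cutoff_page,
              const int *resident, int nresident, bool *discard,
              int *aligned_cutoff)
{
    cutoff_page -= cutoff_page % SLRU_PAGES_PER_SEGMENT;

    if (page_precedes(latest_page, cutoff_page))
        return false;           /* would risk removing the current segment */

    for (int i = 0; i < nresident; i++)
        discard[i] = page_precedes(resident[i], cutoff_page);
    *aligned_cutoff = cutoff_page;
    return true;
}
```

Rounding down means a cutoff of 70 only removes pages before 64, so the segment holding page 70 survives intact.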

`void SimpleLruWritePage(SlruCtl ctl, int slotno)`

Definition at line 561 of file slru.c.

References NULL, and SlruInternalWritePage().

Referenced by AsyncShmemInit(), BootStrapCLOG(), BootStrapMultiXact(), BootStrapSUBTRANS(), clog_redo(), and multixact_redo().

```c
{
    SlruInternalWritePage(ctl, slotno, NULL);
}
```

`int SimpleLruZeroPage(SlruCtl ctl, int pageno)`

Definition at line 246 of file slru.c.

References Assert, SlruSharedData::latest_page_number, MemSet, SlruSharedData::page_buffer, SlruSharedData::page_dirty, SlruSharedData::page_number, SlruSharedData::page_status, SlruCtlData::shared, SimpleLruZeroLSNs(), SLRU_PAGE_EMPTY, SLRU_PAGE_VALID, SlruRecentlyUsed, and SlruSelectLRUPage().

Referenced by asyncQueueAddEntries(), AsyncShmemInit(), OldSerXidAdd(), ZeroCLOGPage(), ZeroMultiXactMemberPage(), ZeroMultiXactOffsetPage(), and ZeroSUBTRANSPage().

```c
{
    SlruShared  shared = ctl->shared;
    int         slotno;

    /* Find a suitable buffer slot for the page */
    slotno = SlruSelectLRUPage(ctl, pageno);
    Assert(shared->page_status[slotno] == SLRU_PAGE_EMPTY ||
           (shared->page_status[slotno] == SLRU_PAGE_VALID &&
            !shared->page_dirty[slotno]) ||
           shared->page_number[slotno] == pageno);

    /* Mark the slot as containing this page */
    shared->page_number[slotno] = pageno;
    shared->page_status[slotno] = SLRU_PAGE_VALID;
    shared->page_dirty[slotno] = true;
    SlruRecentlyUsed(shared, slotno);

    /* Set the buffer to zeroes */
    MemSet(shared->page_buffer[slotno], 0, BLCKSZ);

    /* Set the LSNs for this new page to zero */
    SimpleLruZeroLSNs(ctl, slotno);

    /* Assume this page is now the latest active page */
    shared->latest_page_number = pageno;

    return slotno;
}
```
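SimpleLruZeroPage never reads from disk: it claims a slot, zeroes the buffer, marks the slot valid and dirty so that a later write creates the page on disk, and records the page as the latest one. A minimal sketch over a single-slot, hypothetical `OneSlotSlru` follows, with no slot selection, LSN handling, or locking, and with `BLCKSZ` assumed to be 8192.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define BLCKSZ 8192   /* assumed page size */

typedef enum { PAGE_EMPTY, PAGE_VALID } Status;  /* subset of SlruPageStatus */

/* Hypothetical single-slot SLRU. */
typedef struct
{
    Status status;
    bool   dirty;
    int    page_number;
    int    latest_page_number;
    char   buffer[BLCKSZ];
} OneSlotSlru;

void
zero_page(OneSlotSlru *s, int pageno)
{
    /* Same precondition as the Assert above: the slot is reusable. */
    assert(s->status == PAGE_EMPTY || !s->dirty || s->page_number == pageno);

    s->page_number = pageno;
    s->status = PAGE_VALID;
    s->dirty = true;                  /* page so far exists only in memory */
    memset(s->buffer, 0, BLCKSZ);
    s->latest_page_number = pageno;   /* assume this is the newest page */
}
```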

Definition at line 1213 of file slru.c.

References DEBUG2, SlruCtlData::Dir, ereport, errmsg(), MAXPGPATH, snprintf(), and unlink().

Referenced by AsyncShmemInit().

Definition at line 1175 of file slru.c.

References SlruCtlData::PagePrecedes.

Referenced by TruncateCLOG().

```c
{
    int         cutoffPage = *(int *) data;

    cutoffPage -= cutoffPage % SLRU_PAGES_PER_SEGMENT;

    if (ctl->PagePrecedes(segpage, cutoffPage))
        return true;            /* found one; don't iterate any more */

    return false;               /* keep going */
}
```
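This callback ends the directory scan as soon as it sees a segment that precedes the segment containing the cutoff page, so a caller can cheaply check whether truncation would remove anything. The sketch below reproduces that decision over an in-memory list of segment numbers rather than a directory. `report_presence`, `scan_segments`, the simplified `SegmentCallback` type, and the plain `<` in place of `ctl->PagePrecedes` are assumptions; `SLRU_PAGES_PER_SEGMENT` is 32, as in `slru.h`.

```c
#include <assert.h>
#include <stdbool.h>

#define SLRU_PAGES_PER_SEGMENT 32

/* Hypothetical callback type, simplified from SlruScanCallback. */
typedef bool (*SegmentCallback)(int segpage, void *data);

/* Same decision as the callback above, with '<' standing in for PagePrecedes. */
bool
report_presence(int segpage, void *data)
{
    int cutoff_page = *(int *) data;

    cutoff_page -= cutoff_page % SLRU_PAGES_PER_SEGMENT;
    return segpage < cutoff_page;   /* true stops the scan */
}

/* Hypothetical driver: visit segments until a callback returns true. */
bool
scan_segments(const int *segnos, int nsegs, SegmentCallback cb, void *data)
{
    for (int i = 0; i < nsegs; i++)
        if (cb(segnos[i] * SLRU_PAGES_PER_SEGMENT, data))
            return true;
    return false;
}
```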

`bool SlruScanDirectory(SlruCtl ctl, SlruScanCallback callback, void *data)`

Definition at line 1236 of file slru.c.

References AllocateDir(), callback(), dirent::d_name, DEBUG2, SlruCtlData::Dir, elog, FreeDir(), NULL, and ReadDir().

Referenced by AsyncShmemInit(), SimpleLruTruncate(), TruncateCLOG(), and TruncateMultiXact().

```c
{
    bool        retval = false;
    DIR        *cldir;
    struct dirent *clde;
    int         segno;
    int         segpage;

    cldir = AllocateDir(ctl->Dir);
    while ((clde = ReadDir(cldir, ctl->Dir)) != NULL)
    {
        if (strlen(clde->d_name) == 4 &&
            strspn(clde->d_name, "0123456789ABCDEF") == 4)
        {
            segno = (int) strtol(clde->d_name, NULL, 16);
            segpage = segno * SLRU_PAGES_PER_SEGMENT;

            elog(DEBUG2, "SlruScanDirectory invoking callback on %s/%s",
                 ctl->Dir, clde->d_name);
            retval = callback(ctl, clde->d_name, segpage, data);
            if (retval)
                break;
        }
    }
    FreeDir(cldir);

    return retval;
}
```

Generated by Doxygen 1.7.1.
