As we mentioned on page 755, instantiating a servant for each Ice object on server start‑up is a viable design, provided that you can afford the amount of memory required by the servants, as well as the delay in start‑up of the server. However, Ice supports more flexible mappings between Ice objects and servants; these alternate mappings allow you to precisely control the trade-off between memory consumption, scalability, and performance. We outline a few of the more common implementation techniques in this section.

If you use a servant locator, the servant returned by locate is used only for the current request, that is, the Ice run time does not add the servant to the active servant map. Of course, this means that if another request comes in for the same Ice object,

locate must again retrieve the object state and instantiate a servant. A common implementation technique is to add each servant to the ASM as part of

locate. This means that only the first request for each Ice object triggers a call to

locate; thereafter, the servant for the corresponding Ice object can be found in the ASM and the Ice run time can immediately dispatch another incoming request for the same Ice object without having to call the servant locator.

An implementation of locate to do this would look something like the following:

Ice::ObjectPtr

MyServantLocator::locate(const Ice::Current& c,

Ice::LocalObjectPtr& cookie)

{

// Get the object identity. (We use the name member

// as the database key.)

//

std::string name = c.id.name;

// Use the identity to retrieve the state from the database.

//

ServantDetails d;

try {

d = DB_lookup(name);

} catch (const DB_error&)

return 0;

}

// We have the state, instantiate a servant.

//

Ice::ObjectPtr servant = new PhoneEntryI(d);

// Add the servant to the ASM.

//

c.adapter‑>add(servant, c.id); // NOTE: Incorrect!

return servant;

}

This is almost identical to the implementation on page 763—the only difference is that we also add the servant to the ASM by calling

ObjectAdapter::add. Unfortunately, this implementation is wrong because it suffers from a race condition. Consider the situation where we do not have a servant for a particular Ice object in the ASM, and two clients more or less simultaneously send a request for the same Ice object. It is entirely possible for the thread scheduler to schedule the two incoming requests such that the Ice run time completes the lookup in the ASM for both requests and, for each request, concludes that no servant is in memory. The net effect is that

locate will be called twice for the same Ice object, and our servant locator will instantiate two servants instead of a single servant. Because the second call to

ObjectAdapter::add will raise an

AlreadyRegisteredException, only one of the two servants will be added to the ASM.

Of course, this is hardly the behavior we expect. To avoid the race condition, our implementation of

locate must check whether a concurrent invocation has already instantiated a servant for the incoming request and, if so, return that servant instead of instantiating a new one. The Ice run time provides the

ObjectAdapter::find operation to allow us to test whether an entry for a specific identity already exists in the ASM:

module Ice {

local interface ObjectAdapter {

// ...

Object find(Identity id);

// ...

};

};

find returns the servant if it exists in the ASM and null, otherwise. Using this lookup function, together with a mutex, allows us to correctly implement

locate. The class definition of our servant locator now has a private mutex so we can establish a critical region inside

locate:

class MyServantLocator : public virtual Ice::ServantLocator {

public:

virtual Ice::ObjectPtr locate(const Ice::Current& c,

Ice::LocalObjectPtr&);

// Declaration of finished() and deactivate() here...

private:

IceUtil::Mutex _m;

};

The locate member function locks the mutex and tests whether a servant is already in the ASM: if so, it returns that servant; otherwise, it instantiates a new servant and adds it to the ASM as before:

Ice::ObjectPtr

MyServantLocator::locate(const Ice::Current& c,

Ice::LocalObjectPtr&)

{

IceUtil::Mutex::Lock lock(_m);

// Check if we have instantiated a servant already.

//

Ice::ObjectPtr servant = c.adapter.find(c.id);

if (!servant) { // We don't have a servant already

// Instantiate a servant.

//

ServantDetails d;

try {

d = DB_lookup(c.id.name);

} catch (const DB_error&) {

return 0;

}

servant = new PhoneEntryI(d);

// Add the servant to the ASM.

//

c.adapter‑>add(servant, c.id);

}

return servant;

}

The Java version of this locator is almost identical, but we use the synchronized qualifier instead of a mutex to make

locate a critical region:

1

synchronized public Ice.Object

locate(Ice.Current c, Ice.LocalObjectHolder cookie)

{

// Check if we have instantiated a servant already.

//

Ice.Object servant = c.adapter.find(c.id);

if (servant == null) { // We don't have a servant already

// Instantiate a servant

//

ServantDetails d;

try {

d = DB.lookup(c.id.name);

} catch (DB.error&) {

return null;

}

servant = new PhoneEntryI(d);

// Add the servant to the ASM.

//

c.adapter.add(servant, c.id);

}

return servant;

}

In general, incremental initialization is beneficial if instantiating servants during start‑up is too slow. The memory savings can be worthwhile as well but, as a rule, are realized only for comparatively short-lived servers: for long-running servers, chances are that, sooner or later, every Ice object will be accessed by some client or another; in that case, there are no memory savings because we end up with an instantiated servant for every Ice object regardless.

A common problem with object-oriented middleware is scalability: servers frequently are used as front ends to large databases that are accessed remotely by clients. The servers’ job is to present an object-oriented view to clients of a very large number of records in the database. Typically, the number of records is far too large to instantiate servants for even a fraction of the database records.

A common technique for solving this problem is to use default servants. A default servant is a servant that, for each request, takes on the persona of a different Ice object. In other words, the servant changes its behavior according to the object identity that is accessed by a request, on a per-request basis. In this way, it is possible to allow clients access to an unlimited number of Ice objects with only a single servant in memory.

Default servant implementations are attractive not only because of the memory savings they offer, but also because of the simplicity of implementation: in essence, a default servant is a facade

[2] to the persistent state of an object in the database. This means that the programming required to implement a default servant is typically minimal: it simply consists of the code required to read and write the corresponding database records.

To create a default servant implementation, we need as many locators as there are non-abstract interfaces in the system. For example, for our file system application, we require two locators, one for directories and one for files. In addition, the object identities we create use the

category member of the object identity to encode the type of interface of the corresponding Ice object. The value of the category field can be anything that identifies the interface, such as the ‘

d’ and ‘

f’ convention we used on

page 768. Alternatively, you could use

"Directory" and

"File", or use the type ID of the corresponding interface, such as

"::Filesystem::Directory" and

"::Filesystem::File". The

name member of the object identity must be set to whatever identifier we can use to retrieve the persistent state of each directory and file from secondary storage. (For our file system application, we used a UUID as a unique identifier.)

class DirectoryLocator : public virtual Ice::ServantLocator {

public:

DirectoryLocator() : _servant(new DirectoryI)

{

}

virtual Ice::ObjectPtr locate(const Ice::Current&,

Ice::LocalObjectPtr&)

{

return _servant;

}

virtual void finished(const Ice::Current&,

const Ice::ObjectPtr&,

const Ice::LocalObjectPtr&)

{

}

virtual void deactivate(const std::string&)

{

}

private:

Ice::ObjectPtr _servant;

};

Note that constructor of the locator instantiates a servant and returns that same servant from every call to

locate. The implementation of the file locator is analogous to the one for directories. Registration of the servant locators proceeds as usual:

_adapter‑>addServantLocator(new DirectoryLocator, "d");

_adapter‑>addServantLocator(new FileLocator, "f");

1. Use the passed Current object to get the identity for the current request.

2.

Use the name member of the identity to locate the persistent state of the servant on secondary storage. If no record can be found for the identity, throw an

ObjectNotExistException.

Filesystem::NodeSeq

Filesystem::DirectoryI::list(const Ice::Current& c) const

{

// Use the identity of the directory to retrieve

// its contents.

DirectoryContents dc;

try {

dc = DB_getDirectory(c.id.name);

} catch(const DB_error&) {

throw Ice::ObjectNotExistException(__FILE__, __LINE__);

}

// Use the records retrieved from the database to

// initialize return value.

//

FileSystem::NodeSeq ns;

// ...

return ns;

}

Note that the servant implementation is completely stateless: the only state it operates on is the identity of the Ice object for the current request (and that identity is passed as part of the

Current parameter). The price we have to pay for the unlimited scalability and reduced memory footprint is performance: default servants make a database access for every invoked operation which is obviously slower than caching state in memory, as part of a servant that has been added to the ASM. However, this does not mean that default servants carry an unacceptable performance penalty: databases often provide sophisticated caching, so even though the operation implementations read and write the database, as long as they access cached state, performance may be entirely acceptable.

One issue you need to be aware of with default servants is the need to override ice_ping: the default implementation of

ice_ping that the servant inherits from its skeleton class always succeeds. For servants that are registered with the ASM, this is exactly what we want; however, for default servants,

ice_ping must fail if a client uses a proxy to a no‑longer existent Ice object. To avoid getting successful

ice_ping invocations for non-existent Ice objects, you must override

ice_ping in the default servant. The implementation must check whether the object identity for the request denotes a still-existing Ice object and, if not, throw

ObjectNotExistException:

void

Filesystem::DirectoryI::ice_ping(const Ice::Current& c) const

{

try {

d = DB_lookup(c.id.name);

} catch (const DB_error&) {

throw Ice::ObjectNotExistException(__FILE__, __LINE__);

}

}

It is good practice to override ice_ping if you are using default servants.

Depending on the nature of your application, you may be able to steer a middle path that provides better performance while keeping memory requirements low: if your application has a number of frequently-accessed objects that are performance-critical, you can add servants for those objects to the ASM. If you store the state of these objects in data members inside the servants, you effectively have a cache of these objects.

The remaining, less-frequently accessed objects can be implemented with a default servant. For example, in our file system implementation, we could choose to instantiate directory servants permanently, but to have file objects implemented with a default servant. This provides efficient navigation through the directory tree and incurs slower performance only for the (presumably less frequent) file accesses.

This technique could be augmented with a cache of recently-accessed files, along similar lines to the buffer pool used by the Unix kernel

[10]. The point is that you can combine use of the ASM with servant locators and default servants to precisely control the trade-offs among scalability, memory consumption, and performance to suit the needs of your application.

A variation on the previous theme and particularly interesting use of a servant locator is as an

evictor [4]. An evictor is a servant locator that maintains a cache of servants:

•

Whenever a request arrives (that is, locate is called by the Ice run time), the evictor checks to see whether it can find a servant for the request in its cache. If so, it returns the servant that is already instantiated in the cache; otherwise, it instantiates a servant and adds it to the cache.

•

The cache is a queue that is maintained in least-recently used (LRU) order: the least-recently used servant is at the tail of the queue, and the most-recently used servant is at the head of the queue. Whenever a servant is returned from or added to the cache, it is moved from its current queue position to the head of the queue, that is, the "newest" servant is always at the head, and the "oldest" servant is always at the tail.

•

The queue has a configurable length that corresponds to how many servants will be held in the cache; if a request arrives for an Ice object that does not have a servant in memory and the cache is full, the evictor removes the least-recently used servant at the tail of the queue from the cache in order to make room for the servant about to be instantiated at the head of the queue.

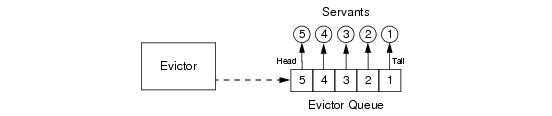

Figure 28.2 illustrates an evictor with a cache size of five after five invocations have been made, for object identities 1 to 5, in that order.

At this point, the evictor has instantiated five servants, and has placed each servant onto the evictor queue. Because requests were sent by the client for object identities 1 to 5 (in that order), servant 5 ends up at the head of the queue (at the most-recently used position), and servant 1 ends up at the tail of the queue (at the least-recently used position).

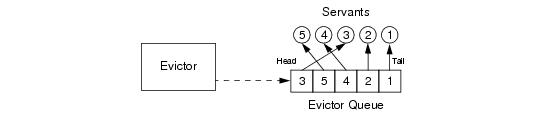

Assume that the client now sends a request for servant 3. In this case, the servant is found on the evictor queue and moved to the head position. The resulting ordering is shown in

Figure 28.3.

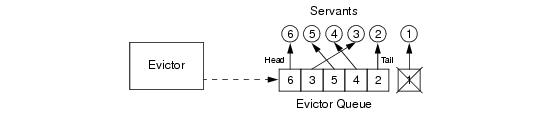

Assume that the next client request is for object identity 6. The evictor queue is fully populated, so the evictor creates a servant for object identity 6, places that servant at the head of the queue, and evicts the servant with identity 1 (the least-recently used servant) at the tail of the queue, as shown in

Figure 28.4.

The evictor pattern combines the advantages of the ASM with the advantages of a default servant: provided that the cache size is sufficient to hold the working set of servants in memory, most requests are served by an already instantiated servant, without incurring the overhead of creating a servant and accessing the database to initialize servant state. By setting the cache size, you can control the trade-off between performance and memory consumption as appropriate for your application.

The following sections show how to implement an evictor in both C++ and Java. (You can also find the source code for the evictor with the code examples for this book in the Ice distribution.)

The evictor we show here is designed as an abstract base class: in order to use it, you derive an object from the

EvictorBase base class and implement two methods that are called by the evictor when it needs to add or evict a servant. This leads to a class definitions as follows:

class EvictorBase : public Ice::ServantLocator {

public:

EvictorBase(int size = 1000);

virtual Ice::ObjectPtr locate(const Ice::Current& c,

Ice::LocalObjectPtr& cookie);

virtual void finished(const Ice::Current& c,

const Ice::ObjectPtr&,

const Ice::LocalObjectPtr& cookie);

virtual void deactivate(const std::string&);

protected:

virtual Ice::ObjectPtr add(const Ice::Current&,

Ice::LocalObjectPtr&) = 0;

virtual void evict(const Ice::ObjectPtr&,

const Ice::LocalObjectPtr&) = 0;

private:

// ...

};

typedef IceUtil::Handle<EvictorBase> EvictorBasePtr;

The locate,

finished, and

deactivate functions are inherited from the

ServantLocator base class; these functions implement the logic to maintain the queue in LRU order and to add and evict servants as needed.

The add and

evict functions are called by the evictor when it needs to add a new servant to the queue and when it evicts a servant from the queue. Note that these functions are pure virtual, so they must be implemented in a derived class. The job of

add is to instantiate and initialize a servant for use by the evictor. The

evict function is called by the evictor when it evicts a servant. This allows

evict to perform any cleanup. Note that

add can return a cookie that the evictor passes to

evict, so you can move context information from

add to

evict.

The need for the use count deserves some extra explanation: suppose a client invokes a long-running operation on an Ice object with identity

I. In response, the evictor adds a servant for

I to the evictor queue. While the original invocation is still executing, other clients invoke operations on various Ice objects, which leads to more servants for other object identities being added to the queue. As a result, the servant for identity

I gradually migrates toward the tail of the queue. If enough client requests for other Ice objects arrive while the operation on object

I is still executing, the servant for

I could be evicted while it is still executing the original request.

By itself, this will not do any harm. However, if the servant is evicted and a client then invokes another request on object

I, the evictor would have no idea that a servant for

I is still around and would add a second servant for

I. However, having two servants for the same Ice object in memory is likely to cause problems, especially if the servant’s operation implementations write to a database.

The use count allows us to avoid this problem: we keep track of how many requests are currently executing inside each servant and, while a servant is busy, avoid evicting that servant. As a result, the queue size is not a hard upper limit: long-running operations can temporarily cause more servants than the limit to appear in the queue. However, as soon as excess servants become idle, they are evicted as usual.

The evictor queue does not store the identity of the servant. Instead, the entries on the queue are iterators into the evictor map. This is useful when the time comes to evict a servant: instead of having to search the map for the identity of the servant to be evicted, we can simply delete the map entry that is pointed at by the iterator at the tail of the queue. We can get away with storing an iterator into the evictor queue as part of the map, and storing an iterator into the evictor map as part of the queue because both

std::list and

std::map do not invalidate forward iterators when we add or delete entries

2 (except for invalidating iterators that point at a deleted entry, of course).

Finally, our locate and

finished implementations will need to exchange a cookie that contains a smart pointer to the entry in the evictor map. This is necessary so that

finished can decrement the servant’s use count.

class EvictorBase : public Ice::ServantLocator {

// ...

private:

struct EvictorEntry;

typedef IceUtil::Handle<EvictorEntry> EvictorEntryPtr;

typedef std::map<Ice::Identity, EvictorEntryPtr> EvictorMap;

typedef std::list<EvictorMap::iterator> EvictorQueue;

struct EvictorEntry : public Ice::LocalObject

{

Ice::ObjectPtr servant;

Ice::LocalObjectPtr userCookie;

EvictorQueue::iterator queuePos;

int useCount;

};

EvictorMap _map;

EvictorQueue _queue;

Ice::Int _size;

IceUtil::Mutex _mutex;

void evictServants();

};

Note that the evictor stores the evictor map, queue, and the queue size in the private data members

_map,

_queue, and

_size. In addition, we use a private

_mutex data member so we can correctly serialize access to the evictor’s data structures.

The evictServants member function takes care of evicting servants when the queue length exceeds its limit—we will discuss this function in more detail shortly.

The EvictorEntry structure serves as the cookie that we pass from

locate to

finished; it stores the servant, the servant’s position in the evictor queue, the servant’s use count, and the cookie that we pass from

add to

evict.

Ice::ObjectPtr

EvictorBase::locate(const Ice::Current& c,

Ice::LocalObjectPtr& cookie)

{

IceUtil::Mutex::Lock lock(_mutex);

//

// Check if we have a servant in the map already.

//

EvictorEntryPtr entry;

EvictorMap::iterator i = _map.find(c.id);

if (i != _map.end()) {

//

// Got an entry already, dequeue the entry from

// its current position.

//

entry = i‑>second;

_queue.erase(entry‑>queuePos);

} else {

//

// We do not have an entry. Ask the derived class to

// instantiate a servant and add a new entry to the map.

//

entry = new EvictorEntry;

entry‑>servant = add(c, entry‑>userCookie); // Down‑call

if (!entry‑>servant) {

return 0;

}

entry‑>useCount = 0;

i = _map.insert(std::make_pair(c.id, entry)).first;

}

//

// Increment the use count of the servant and enqueue

// the entry at the front, so we get LRU order.

//

++(entry‑>useCount);

entry‑>queuePos = _queue.insert(_queue.begin(), i);

cookie = entry;

return entry‑>servant;

}

The first step in locate is to lock the

_mutex data member. This protects the evictor’s data structures from concurrent access. The next step is to instantiate a smart pointer to an

EvictorEntry. That smart pointer acts as the cookie that is returned from

locate and will be passed by the Ice run time to the corresponding call to

finished. That same smart pointer is also the value type of our map entries, so we do not store two copies of the same information redundantly—instead, smart pointers ensure that a single copy of each

EvictorEntry structure is shared by both the cookie and the map.

The next step is to look in the evictor map to see whether we already have an entry for this object identity. If so, we remove the entry from its current queue position.

Otherwise, we do not have an entry for this object identity yet, so we have to create one. The code creates a new evictor entry, and then calls

add to get a new servant. This is a down-call to the concrete class that will be derived from

EvictorBase. The implementation of

add must attempt to locate the object state for the Ice object with the identity passed inside the

Current object and either return a servant as usual, or return null or throw an exception to indicate failure. If

add returns null, we return zero to let the Ice run time know that no servant could be found for the current request. If

add succeeds, we initialize the entry’s use count to zero and insert the entry into the evictor map.

The last few lines of locate add the entry for the current request to the head of the evictor queue to maintain its LRU property, increment the use count of the entry, set the cookie that is returned from locate to point at the

EvictorEntry, and finally return the servant to the Ice run time.

The implementation of finished is comparatively simple. It decrements the use count of the entry and then calls

evictServants to get rid of any servants that might need to be evicted:

void

EvictorBase::finished(const Ice::Current&,

const Ice::ObjectPtr&,

const Ice::LocalObjectPtr& cookie)

{

IceUtil::Mutex::Lock lock(_mutex);

EvictorCookiePtr ec = EvictorCookiePtr::dynamicCast(cookie);

// Decrement use count and check if

// there is something to evict.

//

‑‑(ec‑>entry‑>useCount);

evictServants();

}

In turn, evictServants examines the evictor queue: if the queue length exceeds the evictor’s size, the excess entries are scanned. Any entries with a zero use count are then evicted:

void

EvictorBase::evictServants()

{

//

// If the evictor queue has grown larger than the limit,

// look at the excess elements to see whether any of them

// can be evicted.

//

EvictorQueue::reverse_iterator p = _queue.rbegin();

int excessEntries = static_cast<int>(_map.size() ‑ _size);

for (int i = 0; i < excessEntries; ++i) {

EvictorMap::iterator mapPos = *p;

if (mapPos‑>second‑>useCount == 0) {

evict(mapPos‑>second‑>servant,

mapPos‑>second‑>userCookie);

p = EvictorQueue::reverse_iterator(

_queue.erase(mapPos‑>second‑>queuePos));

_map.erase(mapPos);

} else

++p;

}

}

The code scans the excess entries, starting at the tail of the evictor queue. If an entry has a zero use count, it is evicted: after calling the

evict member function in the derived class, the code removes the evicted entry from both the map and the queue.

Finally, the implementation of deactivate sets the evictor size to zero and then calls

evictServants. This results in eviction of all servants. The Ice run time guarantees to call

deactivate only once no more requests are executing in an object adapter; as a result, it is guaranteed that all entries in the evictor will be idle and therefore will be evicted.

void

EvictorBase::deactivate(const std::string& category)

{

IceUtil::Mutex::Lock lock(_mutex);

_size = 0;

evictServants();

}

Note that, with this implementation of evictServants, we only scan the tail section of the evictor queue for servants to evict. If we have long-running operations, this allows the number of servants in the queue to remain above the evictor size if the servants in the tail section have a non-zero use count. This means that, even immediately after calling

evictServants, the queue length can still exceed the evictor size.

We can adopt a more aggressive strategy for eviction: instead of scanning only the excess entries in the queue, if, after looking in the tail section of the queue, we still have more servants in the queue than the queue size, we keep scanning for servants with a zero use count until the queue size drops below the limit. This alternative version of

evictServants looks as follows:

void

EvictorBase::evictServants()

{

//

// If the evictor queue has grown larger than the limit,

// try to evict servants until the length drops

// below the limit.

//

EvictorQueue::reverse_iterator p = _queue.rbegin();

int numEntries = static_cast<int>_map.size();

for (int i = 0; i < numEntries && _map.size() > _size; ++i) {

EvictorMap::iterator mapPos = *p;

if (mapPos‑>second‑>useCount == 0) {

evict(mapPos‑>second‑>servant,

mapPos‑>second‑>userCookie);

p = EvictorQueue::reverse_iterator(

_queue.erase(mapPos‑>second‑>queuePos));

_map.erase(mapPos);

} else

++p;

}

}

The only difference in this version is that terminating condition for the for-loop has changed: instead of scanning only the excess entries for servants with a use count, this version keeps scanning until the evictor size drops below the limit.

Which version is more appropriate depends on your application: if locating and evicting servants is expensive, and memory is not at a premium, the first version (which only scans the tail section) is more appropriate; if you want to keep memory consumption to a minimum, the second version in more appropriate. Also keep in mind that the difference between the two versions is significant only if you have long-running operations and many concurrent invocations from clients; otherwise, there is no point in more aggressively scanning for servants to remove because they are going be become idle again very quickly and get evicted as soon as the next request arrives.

The evictor we show here is designed as an abstract base class: in order to use it, you derive an object from the

Evictor.EvictorBase base class and implement two methods that are called by the evictor when it needs to add or evict a servant. This leads to a class definitions as follows:

package Evictor;

public abstract class EvictorBase implements Ice.ServantLocator

{

public

EvictorBase()

{

_size = 1000;

}

public

EvictorBase(int size)

{

_size = size < 0 ? 1000 : size;

}

public abstract Ice.Object

add(Ice.Current c, Ice.LocalObjectHolder cookie);

public abstract void

evict(Ice.Object servant, java.lang.Object cookie);

synchronized public final Ice.Object

locate(Ice.Current c, Ice.LocalObjectHolder cookie)

{

// ...

}

synchronized public final void

finished(Ice.Current c, Ice.Object o, java.lang.Object cookie)

{

// ...

}

synchronized public final void

deactivate(String category)

{

// ...

}

// ...

private int _size;

}

The locate,

finished, and

deactivate methods are inherited from the

ServantLocator base class; these methods implement the logic to maintain the queue in LRU order and to add and evict servants as needed. The methods are synchronized, so the evictor’s internal data structures are protected from concurrent access.

The add and

evict methods are called by the evictor when it needs to add a new servant to the queue and when it evicts a servant from the queue. Note that these functions are abstract, so they must be implemented in a derived class. The job of

add is to instantiate and initialize a servant for use by the evictor. The

evict function is called by the evictor when it evicts a servant. This allows

evict to perform any cleanup. Note that

add can return a cookie that the evictor passes to

evict, so you can move context information from

add to

evict.

•

The evictor queue must be maintained in least-recently used order, that is, every time an invocation arrives and we find an entry for the identity in the evictor map, we also must locate the servant’s identity on the evictor queue and move it to the front of the queue. However, scanning for that entry is inefficient because it requires

O(

n) time. To get around this, we store an iterator in the evictor map that marks the corresponding entry’s position in the evictor queue. This allows us to dequeue the entry from its current position and enqueue it at the head of the queue in

O(1) time.

Unfortunately, the various lists provided by java.util do not allow us to keep an iterator to a list position without invalidating that iterator as the list is updated. To deal with this, we use a special-purpose linked list implementation,

Evictor.LinkedList, that does not have this limitation.

LinkedList has an interface similar to

java.util.LinkedList but does not invalidate iterators other than iterators that point at an element that is removed. For brevity, we do not show the implementation of this list here—you can find the implementation in the code examples for this book in the Ice distribution.

•

We maintain a use count as part of the map in order to avoid incorrect eviction of servants. Suppose a client invokes a long-running operation on an Ice object with identity

I. In response, the evictor adds a servant for

I to the evictor queue. While the original invocation is still executing, other clients invoke operations on various Ice objects, which leads to more servants for other object identities being added to the queue. As a result, the servant for identity

I gradually migrates toward the tail of the queue. If enough client requests for other Ice objects arrive while the operation on object

I is still executing, the servant for

I could be evicted while it is still executing the original request.

By itself, this will not do any harm. However, if the servant is evicted and a client then invokes another request on object

I, the evictor would have no idea that a servant for

I is still around and would add a second servant for

I. However, having two servants for the same Ice object in memory is likely to cause problems, especially if the servant’s operation implementations write to a database.

The use count allows us to avoid this problem: we keep track of how many requests are currently executing inside each servant and, while a servant is busy, avoid evicting that servant. As a result, the queue size is not a hard upper limit: long-running operations can temporarily cause more servants than the limit to appear in the queue. However, as soon as excess servants become idle, they are evicted as usual.

Finally, our locate and

finished implementations will need to exchange a cookie that contains a smart pointer to the entry in the evictor map. This is necessary so that

finished can decrement the servant’s use count.

package Evictor;

public abstract class EvictorBase implements Ice.ServantLocator

{

// ...

private class EvictorEntry

{

Ice.Object servant;

java.lang.Object userCookie;

java.util.Iterator<Ice.Identity> queuePos;

int useCount;

}

private void evictServants()

{

// ...

}

private java.util.Map<Ice.Identity, EvictorEntry> _map =

new java.util.HashMap<Ice.Identity, EvictorEntry>();

private Evictor.LinkedList<Ice.Identity> _queue =

new Evictor.LinkedList<Ice.Identity>();

private int _size;

}

Note that the evictor stores the evictor map, queue, and the queue size in the private data members

_map,

_queue, and

_size. The map key is the identity of the Ice object, and the lookup value is of type

EvictorEntry. The queue simply stores identities, of type

Ice::Identity.

The evictServants member function takes care of evicting servants when the queue length exceeds its limit—we will discuss this function in more detail shortly.

synchronized public final Ice.Object

locate(Ice.Current c, Ice.LocalObjectHolder cookie)

{

//

// Check if we have a servant in the map already.

//

EvictorEntry entry = _map.get(c.id);

if (entry != null) {

//

// Got an entry already, dequeue the entry from

// its current position.

//

entry.queuePos.remove();

} else {

//

// We do not have entry. Ask the derived class to

// instantiate a servant and add a new entry to the map.

//

entry = new EvictorEntry();

Ice.LocalObjectHolder cookieHolder =

new Ice.LocalObjectHolder();

entry.servant = add(c, cookieHolder); // Down‑call

if (entry.servant == null) {

return null;

}

entry.userCookie = cookieHolder.value;

entry.useCount = 0;

_map.put(c.id, entry);

}

//

// Increment the use count of the servant and enqueue

// the entry at the front, so we get LRU order.

//

++(entry.useCount);

_queue.addFirst(c.id);

entry.queuePos = _queue.iterator();

entry.queuePos.next(); // Position iterator on the element.

cookie.value = entry;

return entry.servant;

}

The code uses an EvictorEntry as the cookie that is returned from

locate and will be passed by the Ice run time to the corresponding call to

finished.

We first look for an existing entry in the evictor map, using the object identity as the key. If we have an entry in the map already, we dequeue the corresponding identity from the evictor queue. (The

queuePos member of

EvictorEntry is an iterator that marks that entry’s position in the evictor queue.)

Otherwise, we do not have an entry in the map, so we create a new one and call the

add method. This is a down-call to the concrete class that will be derived from

EvictorBase. The implementation of

add must attempt to locate the object state for the Ice object with the identity passed inside the

Current object and either return a servant as usual, or return null or throw an exception to indicate failure. If

add returns null, we return null to let the Ice run time know that no servant could be found for the current request. If

add succeeds, we initialize the entry’s use count to zero and insert the entry into the evictor map.

The final few lines of code increment the entry’s use count, add the entry at the head of the evictor queue, store the entry’s position in the queue, and assign the entry to the cookie that is returned from

locate, before returning the servant to the Ice run time.

The implementation of finished is comparatively simple. It decrements the use count of the entry and then calls

evictServants to get rid of any servants that might need to be evicted:

synchronized public final void

finished(Ice.Current c, Ice.Object o, java.lang.Object cookie)

{

EvictorEntry entry = (EvictorEntry)cookie;

// Decrement use count and check if

// there is something to evict.

//

‑‑(entry.useCount);

evictServants();

}

In turn, evictServants examines the evictor queue: if the queue length exceeds the evictor’s size, the excess entries are scanned. Any entries with a zero use count are then evicted:

private void evictServants()

{

//

// If the evictor queue has grown larger than the limit,

// look at the excess elements to see whether any of them

// can be evicted.

//

java.util.Iterator<Ice.Identity> p = _queue.riterator();

int excessEntries = _map.size() ‑ _size;

for (int i = 0; i < excessEntries; ++i) {

Ice.Identity id = p.next();

EvictorEntry e = _map.get(id);

if (e.useCount == 0) {

evict(e.servant, e.userCookie); // Down‑call

e.queuePos.remove();

_map.remove(id);

}

}

}

The code scans the excess entries, starting at the tail of the evictor queue. If an entry has a zero use count, it is evicted: after calling the

evict member function in the derived class, the code removes the evicted entry from both the map and the queue.

Finally, the implementation of deactivate sets the evictor size to zero and then calls

evictServants. This results in eviction of all servants. The Ice run time guarantees to call

deactivate only once no more requests are executing in an object adapter; as a result, it is guaranteed that all entries in the evictor will be idle and therefore will be evicted.

synchronized public final void

deactivate(String category)

{

_size = 0;

evictServants();

}

Note that, with this implementation of evictServants, we only scan the tail section of the evictor queue for servants to evict. If we have long-running operations, this allows the number of servants in the queue to remain above the evictor size if the servants in the tail section have a non-zero use count. This means that, even immediately after calling

evictServants, the queue length can still exceed the evictor size.

We can adopt a more aggressive strategy for eviction: instead of scanning only the excess entries in the queue, if, after looking in the tail section of the queue, we still have more servants in the queue than the queue size, we keep scanning for servants with a zero use count until the queue size drops below the limit. This alternative version of

evictServants looks as follows:

private void evictServants()

{

//

// If the evictor queue has grown larger than the limit,

// look at the excess elements to see whether any of them

// can be evicted.

//

java.util.Iterator<Ice.Identity> p = _queue.riterator();

int numEntries = _map.size();

for (int i = 0; i < excessEntries && _map.size() > _size;

++i) {

Ice.Identity id = p.next();

EvictorEntry e = _map.get(id);

if (e.useCount == 0) {

evict(e.servant, e.userCookie); // Down‑call

e.queuePos.remove();

_map.remove(id);

}

}

}

The only difference in this version is that terminating condition for the for-loop has changed: instead of scanning only the excess entries for servants with a use count, this version keeps scanning until the evictor size drops below the limit.

Which version is more appropriate depends on your application: if locating and evicting servants is expensive, and memory is not at a premium, the first version (which only scans the tail section) is more appropriate; if you want to keep memory consumption to a minimum, the second version in more appropriate. Also keep in mind that the difference between the two versions is significant only if you have long-running operations and many concurrent invocations from clients; otherwise, there is no point in more aggressively scanning for servants to remove because they are going be become idle again very quickly and get evicted as soon as the next request arrives.

The System.Collections classes do not provide a container that does not invalidate iterators when we modify the contents of the container but, to efficiently implement an evictor, we need such a container. To deal with this, we use a special-purpose linked list implementation,

Evictor.LinkedList, that does not invalidate iterators when we delete or add an element. For brevity, we only show the interface of

LinkedList here—you can find the implementation in the code examples for this book in the Ice for .NET distribution.

namespace Evictor

{

public class LinkedList<T> : ICollection<T>, ICollection,

ICloneable

{

public LinkedList();

public int Count { get; }

public void Add(T value);

public void AddFirst(T value);

public void Clear();

public bool Contains(T value);

public bool Remove(T value);

public IEnumerator GetEnumerator();

public class Enumerator : IEnumerator<T>, IEnumerator,

IDisposable

{

public void Reset();

public T Current { get; }

public bool MoveNext();

public bool MovePrev();

public void Remove();

public void Dispose();

}

public void CopyTo(T[] array, int index);

public void CopyTo(Array array, int index);

public object Clone();

public bool IsReadOnly { get; }

public bool IsSynchronized { get; }

public object SyncRoot { get; }

}

}

The Add method appends an element to the list, and the

AddFirst method prepends an element to the list.

GetEnumerator returns an enumerator for the list elements; immediately after calling

GetEnumerator, the enumerator does not point at any element until you call either

MoveNext or

MovePrev, which position the enumerator at the first and last element, respectively.

Current returns the element at the enumerator position, and

Remove deletes the element at the current position and leaves the enumerator pointing at no element. Calling

MoveNext or

MovePrev after calling

Remove positions the enumerator at the element following or preceding the deleted element, respectively.

MoveNext and

MovePrev return true if they have positioned the enumerator on an element; otherwise, they return false and leave the enumerator position on the last and first element, respectively.

Given this LinkedList, we can implement the evictor. The evictor we show here is designed as an abstract base class: in order to use it, you derive an object from the

Evictor.EvictorBase base class and implement two methods that are called by the evictor when it needs to add or evict a servant. This leads to a class definitions as follows:

namespace Evictor

{

public abstract class EvictorBase

: Ice.ServantLocator

{

public EvictorBase()

{

_size = 1000;

}

public EvictorBase(int size)

{

_size = size < 0 ? 1000 : size;

}

protected abstract Ice.Object add(Ice.Current c,

out object cookie);

protected abstract void evict(Ice.Object servant,

object cookie);

public Ice.Object locate(Ice.Current c,

out object cookie)

{

lock(this)

{

// ...

}

}

public void finished(Ice.Current c, Ice.Object o,

object cookie)

{

lock(this)

{

// ...

}

}

public void deactivate(string category)

{

lock(this)

{

// ...

}

}

private int _size;

}

}

The locate,

finished, and

deactivate methods are inherited from the

ServantLocator base class; these methods implement the logic to maintain the queue in LRU order and to add and evict servants as needed. The methods use a

lock(this) statement for their body, so the evictor’s internal data structures are protected from concurrent access.

The add and

evict methods are called by the evictor when it needs to add a new servant to the queue and when it evicts a servant from the queue. Note that these functions are abstract, so they must be implemented in a derived class. The job of

add is to instantiate and initialize a servant for use by the evictor. The

evict function is called by the evictor when it evicts a servant. This allows

evict to perform any cleanup. Note that

add can return a cookie that the evictor passes to

evict, so you can move context information from

add to

evict.

•

The evictor queue must be maintained in least-recently used order, that is, every time an invocation arrives and we find an entry for the identity in the evictor map, we also must locate the servant’s identity on the evictor queue and move it to the front of the queue. However, scanning for that entry is inefficient because it requires

O(

n) time. To get around this, we store an iterator in the evictor map that marks the corresponding entry’s position in the evictor queue. This allows us to dequeue the entry from its current position and enqueue it at the head of the queue in

O(1) time, using the

Evictor.LinkedList implementation we saw on

page 796.

•

We maintain a use count as part of the map in order to avoid incorrect eviction of servants. Suppose a client invokes a long-running operation on an Ice object with identity

I. In response, the evictor adds a servant for

I to the evictor queue. While the original invocation is still executing, other clients invoke operations on various Ice objects, which leads to more servants for other object identities being added to the queue. As a result, the servant for identity

I gradually migrates toward the tail of the queue. If enough client requests for other Ice objects arrive while the operation on object

I is still executing, the servant for

I could be evicted while it is still executing the original request.

By itself, this will not do any harm. However, if the servant is evicted and a client then invokes another request on object

I, the evictor would have no idea that a servant for

I is still around and would add a second servant for

I. However, having two servants for the same Ice object in memory is likely to cause problems, especially if the servant’s operation implementations write to a database.

The use count allows us to avoid this problem: we keep track of how many requests are currently executing inside each servant and, while a servant is busy, avoid evicting that servant. As a result, the queue size is not a hard upper limit: long-running operations can temporarily cause more servants than the limit to appear in the queue. However, as soon as excess servants become idle, they are evicted as usual.

Finally, our locate and

finished implementations will need to exchange a cookie that contains a smart pointer to the entry in the evictor map. This is necessary so that

finished can decrement the servant’s use count.

namespace Evictor

{

using System.Collections.Generic;

public abstract class EvictorBase

: Ice.ServantLocator

{

// ...

private class EvictorEntry

{

internal Ice.Object servant;

internal object userCookie;

internal LinkedList<Ice.Identity>.Enumerator queuePos;

internal int useCount;

}

private void evictServants()

{

// ...

}

private Dictionary<Ice.Identity, EvictorEntry> _map

= new Dictionary<Ice.Identity, EvictorEntry>();

private LinkedList<Ice.Identity> _queue =

new LinkedList<Ice.Identity>();

private int _size;

}

}

Note that the evictor stores the evictor map, queue, and the queue size in the private data members

_map,

_queue, and

_size. The map key is the identity of the Ice object, and the lookup value is of type

EvictorEntry. The queue simply stores identities, of type

Ice.Identity.

The evictServants member function takes care of evicting servants when the queue length exceeds its limit—we will discuss this function in more detail shortly.

public Ice.Object locate(Ice.Current c,

out object cookie)

{

lock(this)

{

//

// Check if we a servant in the map already.

//

EvictorEntry entry = _map[c.id];

if (entry != null) {

//

// Got an entry already, dequeue the entry from

// its current position.

//

entry.queuePos.Remove();

} else {

//

// We do not have an entry. Ask the derived class to

// instantiate a servant and add an entry to the map.

//

entry = new EvictorEntry();

entry.servant = add(c, out entry.userCookie);

if (entry.servant == null) {

cookie = null;

return null;

}

entry.useCount = 0;

_map[c.id] = entry;

}

//

// Increment the use count of the servant and enqueue

// the entry at the front, so we get LRU order.

//

++(entry.useCount);

_queue.AddFirst(c.id);

entry.queuePos = (LinkedList<Ice.Identity>.Enumerator)

_queue.GetEnumerator();

entry.queuePos.MoveNext();

cookie = entry;

return entry.servant;

}

}

The code uses an EvictorEntry as the cookie that is returned from

locate and will be passed by the Ice run time to the corresponding call to

finished.

We first look for an existing entry in the evictor map, using the object identity as the key. If we have an entry in the map already, we dequeue the corresponding identity from the evictor queue. (The

queuePos member of

EvictorEntry is an iterator that marks that entry’s position in the evictor queue.)

Otherwise, we do not have an entry in the map, so we create a new one and call the

add method. This is a down-call to the concrete class that will be derived from

EvictorBase. The implementation of

add must attempt to locate the object state for the Ice object with the identity passed inside the

Current object and either return a servant as usual, or return null or throw an exception to indicate failure. If

add returns null, we return null to let the Ice run time know that no servant could be found for the current request. If

add succeeds, we initialize the entry’s use count to zero and insert the entry into the evictor map.

The final few lines of code increment the entry’s use count, add the entry at the head of the evictor queue, store the entry’s position in the queue, and initialize the cookie that is returned from

locate, before returning the servant to the Ice run time.

The implementation of finished is comparatively simple. It decrements the use count of the entry and then calls

evictServants to get rid of any servants that might need to be evicted:

public void finished(Ice.Current c, Ice.Object o,

object cookie)

{

lock(this)

{

EvictorEntry entry = (EvictorEntry)cookie;

//

// Decrement use count and check if

// there is something to evict.

//

‑‑(entry.useCount);

evictServants();

}

}

In turn, evictServants examines the evictor queue: if the queue length exceeds the evictor’s size, the excess entries are scanned. Any entries with a zero use count are then evicted:

private void evictServants()

{

//

// If the evictor queue has grown larger than the limit,

// look at the excess elements to see whether any of them

// can be evicted.

//

LinkedList<Ice.Identity>.Enumerator p =

(LinkedList<Ice.Identity>.Enumerator)

_queue.GetEnumerator();

int excessEntries = _map.Count ‑ _size;

for (int i = 0; i < excessEntries; ++i) {

p.MovePrev();

Ice.Identity id = p.Current;

EvictorEntry e = _map[id];

if (e.useCount == 0) {

evict(e.servant, e.userCookie); // Down‑call

p.Remove();

_map.Remove(id);

}

}

}

The code scans the excess entries, starting at the tail of the evictor queue. If an entry has a zero use count, it is evicted: after calling the

evict member function in the derived class, the code removes the evicted entry from both the map and the queue.

Finally, the implementation of deactivate sets the evictor size to zero and then calls

evictServants. This results in eviction of all servants. The Ice run time guarantees to call

deactivate only once no more requests are executing in an object adapter; as a result, it is guaranteed that all entries in the evictor will be idle and therefore will be evicted.

public void deactivate(string category)

{

lock(this)

{

_size = 0;

evictServants();

}

}

Note that, with this implementation of evictServants, we only scan the tail section of the evictor queue for servants to evict. If we have long-running operations, this allows the number of servants in the queue to remain above the evictor size if the servants in the tail section have a non-zero use count. This means that, even immediately after calling

evictServants, the queue length can still exceed the evictor size.

We can adopt a more aggressive strategy for eviction: instead of scanning only the excess entries in the queue, if, after looking in the tail section of the queue, we still have more servants in the queue than the queue size, we keep scanning for servants with a zero use count until the queue size drops below the limit. This alternative version of

evictServants looks as follows:

private void evictServants()

{

//

// If the evictor queue has grown larger than the limit,

// look at the excess elements to see whether any of them

// can be evicted.

//

LinkedList<Ice.Identity>.Enumerator p =

(LinkedList<Ice.Identity>.Enumerator)

_queue.GetEnumerator();

int numEntries = _map.Count;

for (int i = 0; i < numEntries && _map.Count > _size; ++i) {

p.MovePrev();

Ice.Identity id = p.Current;

EvictorEntry e = _map[id];

if (e.useCount == 0) {

evict(e.servant, e.userCookie); // Down‑call

p.Remove();

_map.Remove(id);

}

}

}

The only difference in this version is that terminating condition for the for-loop has changed: instead of scanning only the excess entries for servants with a use count, this version keeps scanning until the evictor size drops below the limit.

Which version is more appropriate depends on your application: if locating and evicting servants is expensive, and memory is not at a premium, the first version (which only scans the tail section) is more appropriate; if you want to keep memory consumption to a minimum, the second version in more appropriate. Also keep in mind that the difference between the two versions is significant only if you have long-running operations and many concurrent invocations from clients; otherwise, there is no point in more aggressively scanning for servants to remove because they are going be become idle again very quickly and get evicted as soon as the next request arrives.

Using a servant evictor is simply a matter of deriving a class from EvictorBase and implementing the

add and

evict methods. You can turn a servant locator into an evictor by simply taking the code that you wrote for

locate and placing it into

add—

EvictorBase then takes care of maintaining the cache in least-recently used order and evicting servants as necessary. Unless you have clean‑up requirements for your servants (such as closing network connections or database handles), the implementation of

evict can be left empty.

One of the nice aspects of evictors is that you do not need to change anything in your servant implementation: the servants are ignorant of the fact that an evictor is in use. This makes it very easy to add an evictor to an already existing code base with little disturbance of the source code.

Evictors can provide substantial performance improvements over default servants: especially if initialization of servants is expensive (for example, because servant state must be initialized by reading from a network), an evictor performs much better than a default servant, while keeping memory requirements low.