Ice is inherently a multi-threaded platform. There is no such thing as a single-threaded server in Ice. As a result, you must concern yourself with concurrency issues: if a thread reads a data structure while another thread updates the same data structure, havoc will ensue unless you protect the data structure with appropriate locks. In order to build Ice applications that behave correctly, it is important that you understand the threading semantics of the Ice run time. This section discusses Ice’s thread pool concurrency model and provides guidelines for writing thread-safe Ice applications.

Each communicator uses two thread pools:

•

The client thread pool services outgoing connections, which primarily involves handling the replies to outgoing requests and includes notifying AMI callback objects (see Section 29.3). If a connection is used in bidirectional mode (see Section 33.7), the client thread pool also dispatches incoming callback requests.

•

The server thread pool services incoming connections. It dispatches incoming requests and, for bidirectional connections, processes replies to outgoing requests.

By default, these two thread pools are shared by all of the communicator’s object adapters. If necessary, you can configure individual object adapters to use a private thread pool instead.

If a thread pool is exhausted because all threads are currently dispatching a request, additional incoming requests are transparently delayed until a request completes and relinquishes its thread; that thread is then used to dispatch the next pending request. Ice minimizes thread context switches in a thread pool by using a leader-follower implementation (see [17]).
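The queueing behavior can be approximated in plain Java with a fixed-size executor standing in for an Ice thread pool. This is only an analogy under that assumption: it does not use the Ice API and models neither the leader-follower scheme nor dynamic growth. The sketch records the peak number of simulated dispatches running at once, which never exceeds the pool size, while surplus requests wait their turn.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class PoolExhaustionDemo {
    // Submits `requests` simulated dispatches to a fixed pool of `poolSize`
    // threads and returns the peak number that were running at the same time.
    static int peakConcurrency(int poolSize, int requests) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        AtomicInteger active = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        for (int i = 0; i < requests; i++) {
            pool.execute(() -> {
                int now = active.incrementAndGet();
                peak.accumulateAndGet(now, Math::max);
                try {
                    Thread.sleep(20); // simulated servant work
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    active.decrementAndGet();
                }
            });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return peak.get();
    }

    public static void main(String[] args) {
        // Surplus requests wait in the queue, so this never prints more than 2.
        System.out.println(peakConcurrency(2, 10));
    }
}
```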

A thread pool is dynamic when name.SizeMax exceeds name.Size, where name is the name of the thread pool (see below). As the demand for threads increases, the Ice run time adds more threads to the pool, up to the maximum size. Threads are terminated automatically when they have been idle for a while, but a thread pool always contains at least the minimum number of threads.

Another property sets a high water mark: when the number of threads in a pool reaches this value, the Ice run time logs a warning message. If you see this warning frequently, it could indicate that you need to increase the value of name.SizeMax. If not defined, the high water mark defaults to 80% of the value of name.SizeMax. Set this property to zero to disable the warning.

A further property controls serialization: setting it to a value greater than zero forces the thread pool to serialize all messages received over a connection. It is unnecessary to enable serialization for a thread pool whose maximum size is one because such a thread pool is already limited to processing one message at a time. For thread pools with more than one thread, serialization has a negative impact on latency and throughput. If not defined, the default value is zero.

For configuration purposes, the names of the client and server thread pools are Ice.ThreadPool.Client and Ice.ThreadPool.Server, respectively. As an example, the following properties establish minimum and maximum sizes for these thread pools:
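Assuming, purely for illustration, a minimum of one thread and a maximum of ten for each pool, the settings could be written as:

```properties
# Illustrative values only; tune them for your own application.
Ice.ThreadPool.Client.Size=1
Ice.ThreadPool.Client.SizeMax=10
Ice.ThreadPool.Server.Size=1
Ice.ThreadPool.Server.SizeMax=10
```

With these values both pools are dynamic, because SizeMax exceeds Size.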

The default behavior of an object adapter is to share the thread pools of its communicator and, for many applications, this behavior is entirely sufficient. However, the ability to configure an object adapter with its own thread pool is useful in certain situations:

In a server with multiple object adapters, the configuration of the communicator’s client and server thread pools may be a good match for some object adapters, but others may have different requirements. For example, the servants hosted by one object adapter may not support concurrent access, in which case limiting that object adapter to a single-threaded pool eliminates the need for synchronization in those servants. On the other hand, another object adapter might need a multi-threaded pool for better performance.

An object adapter’s thread pool supports all of the properties described in Section 28.9.2. For configuration purposes, the name of an adapter’s thread pool is adapter.ThreadPool, where adapter is the name of the adapter.

The minimum and maximum size properties of an adapter’s thread pool have the same semantics as those described earlier, except that both have a default value of zero, meaning that an adapter uses the communicator’s thread pools by default.
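As a sketch, a server whose servants in one adapter do not support concurrent access might give that adapter its own single-threaded pool. The adapter name MyAdapter below is hypothetical:

```properties
# Private, single-threaded pool for the hypothetical adapter "MyAdapter".
MyAdapter.ThreadPool.Size=1
MyAdapter.ThreadPool.SizeMax=1
```

Because these values are non-zero, the adapter would no longer use the communicator’s thread pools, while other adapters in the same server continue to share them.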

Improper configuration of a thread pool can have a serious impact on the performance of your application. This section discusses some issues that you should consider when designing and configuring your applications.

It is important to remember that a communicator’s client and server thread pools have a default maximum size of one thread; therefore, the limitations of a single-threaded pool also apply to any object adapter that shares the communicator’s thread pools.

Configuring a thread pool to support multiple threads implies that the application is prepared for the Ice run time to dispatch operation invocations or AMI callbacks concurrently. Although greater effort is required to design a thread-safe application, you are rewarded with the ability to improve the application’s scalability and throughput.

Choosing appropriate minimum and maximum sizes for a thread pool requires careful analysis of your application. For example, in compute-bound applications it is best to limit the number of threads to the number of physical processors in the host machine; adding any more threads only increases context switches and reduces performance. Increasing the size of the pool beyond the number of processors can improve responsiveness when threads can become blocked while waiting for the operating system to complete a task, such as a network or file operation. On the other hand, a thread pool configured with too many threads can have the opposite effect and negatively impact performance. Testing your application in a realistic environment is the recommended way of determining the optimum size for a thread pool.

If your application uses nested invocations, it is very important that you evaluate whether it is possible for thread starvation to cause a deadlock. Increasing the size of a thread pool can lessen the chance of a deadlock, but other design solutions are usually preferred. Section 28.9.5 discusses nested invocations in more detail.

When using a multi-threaded pool, the nondeterministic nature of thread scheduling means that requests from the same connection may not be dispatched in the order they were received. Some applications cannot tolerate this behavior, such as a transaction processing server that must guarantee that requests are executed in order. There are two ways of satisfying this requirement: use a single-threaded pool, or use a multi-threaded pool with serialization enabled.

At first glance these two options may seem equivalent, but there is a significant difference: a single-threaded pool can only dispatch one request at a time and therefore serializes requests from all connections, whereas a multi-threaded pool configured for serialization can dispatch requests from different connections concurrently while serializing requests from the same connection.
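The difference can be illustrated with a plain-Java sketch; it is an analogy, not the Ice implementation, and Ice enables serialization through a property rather than code. A multi-threaded executor dispatches requests, but each hypothetical connection ID has its own chain of CompletableFuture stages, so requests on one connection run in arrival order while different connections proceed in parallel.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class SerializingDispatcher {
    private final ExecutorService pool;
    // Tail of the pending-request chain for each (hypothetical) connection ID.
    private final Map<String, CompletableFuture<Void>> tails = new HashMap<>();

    SerializingDispatcher(int threads) {
        pool = Executors.newFixedThreadPool(threads);
    }

    // Queues `request` behind any earlier request from the same connection.
    // exceptionally() keeps the chain going if an earlier request failed.
    synchronized CompletableFuture<Void> dispatch(String connection, Runnable request) {
        CompletableFuture<Void> tail =
            tails.getOrDefault(connection, CompletableFuture.completedFuture(null));
        CompletableFuture<Void> next = tail.exceptionally(e -> null).thenRunAsync(request, pool);
        tails.put(connection, next);
        return next;
    }

    void shutdown() {
        pool.shutdown();
    }

    // Sends `count` requests on each of two connections and returns the
    // order in which the requests on connection "c1" were executed.
    static List<Integer> demo(int count) {
        SerializingDispatcher d = new SerializingDispatcher(4);
        List<Integer> c1Order = Collections.synchronizedList(new ArrayList<>());
        List<CompletableFuture<Void>> all = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            final int n = i;
            all.add(d.dispatch("c1", () -> c1Order.add(n)));
            all.add(d.dispatch("c2", () -> { /* unrelated work on another connection */ }));
        }
        CompletableFuture.allOf(all.toArray(new CompletableFuture[0])).join();
        d.shutdown();
        return new ArrayList<>(c1Order);
    }

    public static void main(String[] args) {
        System.out.println(demo(5)); // prints [0, 1, 2, 3, 4]
    }
}
```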

You can obtain the same behavior from a multi-threaded pool without enabling serialization, but only if you design the clients so that they do not send requests from multiple threads, do not send requests over more than one connection, and only use synchronous twoway invocations. In general, however, it is better to avoid such tight coupling between the implementations of the client and server.

Enabling serialization can improve responsiveness and performance compared to a single-threaded pool, but there is an associated cost. The extra synchronization that the pool must perform to serialize requests adds significant overhead and results in higher latency and reduced throughput.

As you can see, thread pool serialization is not a feature that you should enable without analyzing whether the benefits are worthwhile. For example, it might be an inappropriate choice for a server with long-running operations when the client needs the ability to have several operations in progress simultaneously. If serialization were enabled in this situation, the client would be forced to work around it by opening several connections to the server (see Section 33.3), which again tightly couples the client and server implementations. If the server must keep track of the order of client requests, a better solution would be to use serialization in conjunction with asynchronous dispatch (see Section 29.4) to queue the incoming requests for execution by other threads.

A nested invocation is one that is made within the context of another Ice operation. For instance, the implementation of an operation in a servant might need to make a nested invocation on some other object, or an AMI callback object might invoke an operation in the course of processing a reply to an asynchronous request. It is also possible for one of these invocations to result in a nested callback to the originating process. The maximum depth of such invocations is determined by the size of the thread pools used by the communicating parties.

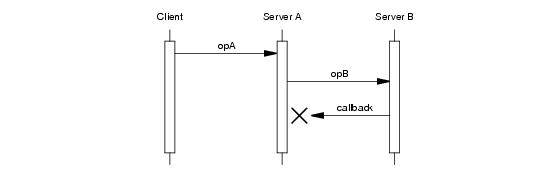

Applications that use nested invocations must be carefully designed to avoid the potential for deadlock, which can easily occur when invocations take a circular path. For example, Figure 28.5 presents a deadlock scenario when using the default thread pool configuration.

In this diagram, the implementation of opA makes a nested twoway invocation of opB, but the implementation of opB causes a deadlock when it tries to make a nested callback. As mentioned in Section 28.9.1, the communicator’s thread pools have a maximum size of one thread unless explicitly configured otherwise. In Server A, the only thread in the server thread pool is busy waiting for its invocation of opB to complete, and therefore no threads remain to handle the callback from Server B. The client is now blocked because Server A is blocked, and they remain blocked indefinitely unless timeouts are used.
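The thread starvation behind Figure 28.5 can be reproduced with a plain-Java analogy that involves no Ice API: fixed-size executors stand in for the server thread pools of Server A and Server B. The task modeling opA blocks on opB, which in turn blocks on a callback that needs Server A’s pool. A timeout, as the text notes, is what turns the hang into a detectable failure; with two threads in Server A’s pool the same call completes.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class NestedDeadlockDemo {
    // Returns true if opA completed, false if its wait for opB timed out.
    static boolean callOpA(int serverAThreads) {
        ExecutorService serverA = Executors.newFixedThreadPool(serverAThreads);
        ExecutorService serverB = Executors.newFixedThreadPool(1);
        try {
            Future<String> opA = serverA.submit(() -> {
                // opA makes a nested twoway call to opB on Server B and waits.
                Future<String> opB = serverB.submit(() -> {
                    // opB calls back into Server A and waits for the reply.
                    Future<String> callback = serverA.submit(() -> "callback done");
                    return callback.get();
                });
                return opB.get(1, TimeUnit.SECONDS); // timeout breaks the deadlock
            });
            opA.get(2, TimeUnit.SECONDS);
            return true;
        } catch (ExecutionException | TimeoutException e) {
            return false; // Server A had no free thread for the callback
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        } finally {
            serverA.shutdownNow(); // also interrupts opB, still blocked on the callback
            serverB.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println(callOpA(1)); // false: deadlock resolved only by the timeout
        System.out.println(callOpA(2)); // true: a second thread handles the callback
    }
}
```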

Configuring the server thread pool in Server A to support more than one thread allows the nested callback to proceed. This is the simplest solution, but it requires that you know in advance how deeply the invocations may be nested, or that you set the maximum size to a value large enough that exhausting the pool becomes unlikely. For example, setting the maximum size to two avoids a deadlock when a single client is involved, but a deadlock could easily occur again if multiple clients invoke opA simultaneously. Furthermore, setting the maximum size too large can cause its own set of problems (see Section 28.9.4).

If Server A called opB using a oneway invocation, it would no longer need to wait for a response and therefore opA could complete, making a thread available to handle the callback from Server B. However, we have made a significant change in the semantics of opA because now there is no guarantee that opB has completed before opA returns, and it is still possible for the oneway invocation of opB to block (see Section 28.13).

•

Implement opA using asynchronous dispatch and invocation.

By declaring opA as an AMD operation (see Section 29.4) and invoking opB using AMI, Server A can avoid blocking the thread pool’s thread while it waits for opB to complete. This technique, known as asynchronous request chaining, is used extensively in Ice services such as IceGrid and Glacier2 to eliminate the possibility of deadlocks.
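The idea behind asynchronous request chaining can be sketched in plain Java, using CompletableFuture as an assumed stand-in for Ice’s AMD and AMI APIs (no Ice code is involved). Both simulated servers keep a single thread, as in the default configuration, yet nothing deadlocks: opA attaches a continuation to opB’s future instead of waiting, so Server A’s only thread is free to dispatch the callback.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

class RequestChainingDemo {
    // opB runs on Server B and makes an ordinary blocking callback into Server A.
    static CompletableFuture<String> opB(ExecutorService serverA, ExecutorService serverB) {
        return CompletableFuture.supplyAsync(
            () -> CompletableFuture.supplyAsync(() -> "callback done", serverA).join(),
            serverB);
    }

    // opA is dispatched on Server A but does not wait for opB: it returns a
    // future that completes when opB's reply arrives (an AMD + AMI analogue).
    static CompletableFuture<String> opA(ExecutorService serverA, ExecutorService serverB) {
        return CompletableFuture
            .supplyAsync(() -> opB(serverA, serverB), serverA) // start opB, release the thread
            .thenCompose(pendingReply -> pendingReply)         // continue when opB replies
            .thenApply(result -> "opA finished after " + result);
    }

    // Both "servers" have a single-threaded pool, as in the default configuration.
    static String callOpA() {
        ExecutorService serverA = Executors.newSingleThreadExecutor();
        ExecutorService serverB = Executors.newSingleThreadExecutor();
        try {
            return opA(serverA, serverB).get(2, TimeUnit.SECONDS);
        } catch (ExecutionException | TimeoutException e) {
            return "failed: " + e;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return "interrupted";
        } finally {
            serverA.shutdownNow();
            serverB.shutdownNow();
        }
    }

    public static void main(String[] args) {
        System.out.println(callOpA()); // prints "opA finished after callback done"
    }
}
```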

As another example, consider a client that makes a nested invocation from an AMI callback object using the default thread pool configuration. The (one and only) thread in the client thread pool receives the reply to the asynchronous request and invokes its callback object. If the callback object in turn makes a nested twoway invocation, a deadlock occurs because no more threads are available in the client thread pool to process the reply to the nested invocation. The solutions are similar to some of those presented for Figure 28.5: increase the maximum size of the client thread pool, use a oneway invocation, or make the nested invocation using AMI.

•

The thread pool configurations in use by all communicating parties have a significant impact on an application’s ability to use nested invocations. While analyzing the path of circular invocations, you must pay careful attention to the threads involved to determine whether sufficient threads are available to avoid deadlock. This includes not just the threads that dispatch requests, but also the threads that make the requests and process the replies.

As you can imagine, tracing the call flow of a distributed application to ensure there is no possibility of deadlock can quickly become a complex and tedious process. In general, it is best to avoid circular invocations if at all possible.

The Ice run time itself is fully thread safe, meaning multiple application threads can safely call methods on objects such as communicators, object adapters, and proxies without synchronization problems. As a developer, you must also be concerned with thread safety because the Ice run time can dispatch multiple invocations concurrently in a server. In fact, it is possible for multiple requests to proceed in parallel within the same servant and within the same operation on that servant. It follows that, if the operation implementation manipulates non-stack storage (such as member variables of the servant or global or static data), you must interlock access to this data to avoid data corruption.
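A minimal plain-Java sketch of what interlocking means in practice: a hypothetical servant-like class whose operation updates a member variable, guarded with synchronized so that concurrent dispatches of the same operation on the same object cannot lose updates. Ice itself is not used here; ordinary threads stand in for concurrently dispatched requests.

```java
import java.util.ArrayList;
import java.util.List;

class CounterServant {
    private long count; // non-stack state shared by all dispatches

    // Without `synchronized`, two threads could read the same value of
    // `count` and one of the increments would be lost.
    synchronized void increment() {
        count++;
    }

    synchronized long get() {
        return count;
    }

    // Simulates `threads` concurrent dispatches, each calling increment() `calls` times.
    static long run(int threads, int calls) {
        CounterServant servant = new CounterServant();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < calls; i++) {
                    servant.increment();
                }
            });
            workers.add(w);
            w.start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return servant.get();
    }

    public static void main(String[] args) {
        System.out.println(run(8, 10_000)); // prints 80000
    }
}
```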

The need for thread safety in an application depends on its configuration. Using the default thread pool configuration typically makes synchronization unnecessary because at most one operation can be dispatched at a time. Thread safety becomes an issue once you increase the maximum size of a thread pool.

Ice uses the native synchronization and threading primitives of each platform. For C++ users, Ice provides a collection of convenient and portable wrapper classes for use by Ice applications (see Chapter 27).

The marshaling semantics of the Ice run time present a subtle thread safety issue that arises when an operation returns data by reference. In C++, the only relevant case is returning an instance of a Slice class, either directly or nested as a member of another type. In Java, .NET, and Python, Slice structures, sequences, and dictionaries are also affected.

The potential for corruption occurs whenever a servant returns data by reference, yet continues to hold a reference to that data. For example, consider the following Java implementation:

public class GridI extends _GridDisp
{
    GridI()
    {
        _grid = // ...
    }

    public int[][]
    getGrid(Ice.Current curr)
    {
        return _grid;
    }

    public void
    setValue(int x, int y, int val, Ice.Current curr)
    {
        _grid[x][y] = val;
    }

    private int[][] _grid;
}

Suppose that a client invoked the getGrid operation. While the Ice run time marshals the returned array in preparation to send a reply message, it is possible for another thread to dispatch the setValue operation on the same servant. This race condition can result in several unexpected outcomes, including a failure during marshaling or inconsistent data in the reply to getGrid. Synchronizing the getGrid and setValue operations would not fix the race condition because the Ice run time performs its marshaling outside of this synchronization.

One solution is to implement accessor operations, such as getGrid, so that they return copies of any data that might change. There are several drawbacks to this approach:

Another solution is to make copies of the affected data only when it is modified. In the revised code shown below, setValue replaces _grid with a copy that contains the new element, leaving the previous contents of _grid unchanged:

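public class GridI extends _GridDisp
{
    ...

    public synchronized int[][]
    getGrid(Ice.Current curr)
    {
        return _grid;
    }

    public synchronized void
    setValue(int x, int y, int val, Ice.Current curr)
    {
        int[][] newGrid = _grid.clone();  // shallow copy: rows are still shared with _grid
        newGrid[x] = _grid[x].clone();    // copy only the row being modified
        newGrid[x][y] = val;              // the array returned earlier is not touched
        _grid = newGrid;
    }

    ...
}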

This allows the Ice run time to safely marshal the return value of getGrid because the array is never modified again. For applications where data is read more often than it is written, this solution is more efficient than the previous one because accessor operations do not need to make copies. Furthermore, intelligent use of shallow copying can minimize the overhead in mutating operations.
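Because the servant code above depends on the generated _GridDisp base class, the following is a plain-Java sketch of the same copy-on-write idea with the Ice types removed (the class name CowGrid is hypothetical). It checks that an array returned by getGrid is never modified afterwards, and that setValue copies only the outer array and the single row it changes.

```java
class CowGrid {
    private int[][] grid;

    CowGrid(int rows, int cols) {
        grid = new int[rows][cols];
    }

    // Returns the current version; a reader (such as a marshaler) may use it
    // without holding the lock because it is never mutated after publication.
    synchronized int[][] getGrid() {
        return grid;
    }

    synchronized void setValue(int x, int y, int val) {
        int[][] newGrid = grid.clone(); // shallow copy: rows still shared
        newGrid[x] = grid[x].clone();   // private copy of the row being changed
        newGrid[x][y] = val;
        grid = newGrid;                 // publish the new version
    }

    public static void main(String[] args) {
        CowGrid g = new CowGrid(2, 2);
        int[][] snapshot = g.getGrid();
        g.setValue(0, 1, 42);
        System.out.println(snapshot[0][1]);                // 0: old version untouched
        System.out.println(g.getGrid()[0][1]);             // 42
        System.out.println(snapshot[1] == g.getGrid()[1]); // true: unchanged rows shared
    }
}
```

Only the modified row is duplicated, so the cost of a mutation grows with the row length rather than with the size of the whole grid.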

Finally, a third approach changes accessor operations to use AMD (see Section 29.4) in order to regain control over marshaling. After annotating the getGrid operation with amd metadata, we can revise the servant as follows:

public class GridI extends _GridDisp
{
    ...

    public synchronized void
    getGrid_async(AMD_Grid_getGrid cb, Ice.Current curr)
    {
        cb.ice_response(_grid);
    }

    public synchronized void
    setValue(int x, int y, int val, Ice.Current curr)
    {
        _grid[x][y] = val;
    }

    ...
}

Normally, AMD is used in situations where the servant needs to delay its response to the client without blocking the calling thread. For getGrid, that is not the goal; instead, as a side effect, AMD provides the desired marshaling behavior. Specifically, the Ice run time marshals the reply to an asynchronous request at the time the servant invokes ice_response on the AMD callback object. Because getGrid and setValue are synchronized, this guarantees that the data remains in a consistent state during marshaling.
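The effect of marshaling inside ice_response can be imitated without Ice by a callback that encodes its argument as soon as it is invoked. In the sketch below, GridResponse is a hypothetical stand-in for the generated AMD_Grid_getGrid callback and Arrays.deepToString stands in for marshaling; because the servant invokes it while holding its lock, setValue cannot interleave with the encoding.

```java
import java.util.Arrays;
import java.util.function.Consumer;

// Hypothetical stand-in for an AMD callback: "marshals" the reply immediately.
class GridResponse {
    private final Consumer<String> transport;

    GridResponse(Consumer<String> transport) {
        this.transport = transport;
    }

    void respond(int[][] grid) {
        transport.accept(Arrays.deepToString(grid)); // encoded at call time
    }
}

class AmdGridServant {
    private final int[][] grid = new int[2][2];

    // Like getGrid_async: the reply is encoded while the lock is held.
    synchronized void getGridAsync(GridResponse cb) {
        cb.respond(grid);
    }

    // In-place update is safe: no reply can be partially encoded at this point.
    synchronized void setValue(int x, int y, int val) {
        grid[x][y] = val;
    }

    static String demo() {
        AmdGridServant servant = new AmdGridServant();
        StringBuilder wire = new StringBuilder();
        servant.getGridAsync(new GridResponse(wire::append));
        servant.setValue(0, 0, 7); // happens after the reply was already encoded
        return wire.toString();
    }

    public static void main(String[] args) {
        System.out.println(demo()); // prints [[0, 0], [0, 0]]
    }
}
```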

On occasion, it is necessary to intercept the creation and destruction of threads created by the Ice run time, for example, to interoperate with libraries that require applications to make thread-specific initialization and finalization calls (such as COM’s CoInitializeEx and CoUninitialize). Ice provides callbacks to inform an application when each run-time thread is created and destroyed. For C++, the callback class looks as follows:


class ThreadNotification : public IceUtil::Shared {
public:
    virtual void start() = 0;
    virtual void stop() = 0;
};

typedef IceUtil::Handle<ThreadNotification> ThreadNotificationPtr;

To receive notification of thread creation and destruction, you must derive a class from ThreadNotification and implement the start and stop member functions. These functions are called by the Ice run time on each thread as soon as it is created, and just before it exits. You must install your callback class in the Ice run time when you create a communicator by setting the threadHook member of the InitializationData structure (see Section 28.3).

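#include <Ice/Ice.h>
#include <IceUtil/Thread.h>
#include <iostream>

class MyHook : public virtual Ice::ThreadNotification {
public:
    // Called on each new Ice thread before it does any work.
    void start()
    {
        std::cout << "start: id = " << IceUtil::ThreadControl().id() << std::endl;
    }

    // Called on each Ice thread just before it exits.
    void stop()
    {
        std::cout << "stop: id = " << IceUtil::ThreadControl().id() << std::endl;
    }
};

int
main(int argc, char* argv[])
{
    // ...
    Ice::InitializationData id;
    id.threadHook = new MyHook;
    Ice::CommunicatorPtr communicator = Ice::initialize(argc, argv, id);
    // ...
}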

The implementation of your start and stop methods can make whatever thread-specific calls are required by your application.

For Java and C#, Ice.ThreadNotification is an interface:

public interface ThreadNotification {
    void start();
    void stop();
}

To receive the thread creation and destruction callbacks, you must write a class that implements this interface’s start and stop methods, and register an instance of that class when you create the communicator. (The code to do this is analogous to the C++ version.)
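To show where such callbacks run, the sketch below wires the same two-method interface into a standard java.util.concurrent.ThreadFactory, so that start is called on each new thread before it does any work and stop on that thread just before it exits. This is only an analogy for what the Ice run time does with a registered hook, not Ice code: the interface is redeclared locally so the example compiles without Ice, and the class names CountingHook and HookedThreadFactory are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Local copy of the callback shape shown above, so the sketch compiles without Ice.
interface ThreadNotification {
    void start();
    void stop();
}

// Hypothetical hook that just counts calls; real code would perform
// per-thread library initialization and cleanup here.
class CountingHook implements ThreadNotification {
    final AtomicInteger started = new AtomicInteger();
    final AtomicInteger stopped = new AtomicInteger();

    public void start() { started.incrementAndGet(); }
    public void stop() { stopped.incrementAndGet(); }
}

class HookedThreadFactory implements ThreadFactory {
    private final ThreadNotification hook;
    private final List<Thread> created = Collections.synchronizedList(new ArrayList<>());

    HookedThreadFactory(ThreadNotification hook) {
        this.hook = hook;
    }

    @Override
    public Thread newThread(Runnable work) {
        Thread t = new Thread(() -> {
            hook.start();    // runs on the new thread before any work
            try {
                work.run();
            } finally {
                hook.stop(); // runs on the same thread just before it exits
            }
        });
        created.add(t);
        return t;
    }

    // Runs some tasks on a hooked pool of `threads` threads, waits for every
    // thread to exit, and returns {start calls, stop calls}.
    static int[] demo(int threads) {
        CountingHook hook = new CountingHook();
        HookedThreadFactory factory = new HookedThreadFactory(hook);
        ExecutorService pool = Executors.newFixedThreadPool(threads, factory);
        for (int i = 0; i < threads * 2; i++) {
            pool.execute(() -> { });
        }
        pool.shutdown();
        try {
            pool.awaitTermination(5, TimeUnit.SECONDS);
            List<Thread> snapshot;
            synchronized (factory.created) {
                snapshot = new ArrayList<>(factory.created);
            }
            for (Thread t : snapshot) {
                t.join(); // stop() may still be running after the pool terminates
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return new int[] { hook.started.get(), hook.stopped.get() };
    }
}
```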