To track an object, we need a video and the object's position in the first frame.

To run the code, you have to specify the input and output video paths and the object's bounding box.

#include <opencv2/opencv.hpp>

#include <vector>

#include <iostream>

#include <iomanip>

#include "stats.h"

#include "utils.h"

using namespace std;

using namespace cv;

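const double akaze_thresh = 3e-4; // AKAZE detection threshold

const double ransac_thresh = 2.5f; // RANSAC inlier threshold

const double nn_match_ratio = 0.8f; // Nearest-neighbour matching ratio

const int bb_min_inliers = 100; // Minimal number of inliers to draw the bounding box

const int stats_update_period = 10; // On-screen statistics are updated every 10 frames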

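class Tracker

{

public:

Tracker(Ptr<Feature2D> _detector, Ptr<DescriptorMatcher> _matcher) :

detector(_detector),

matcher(_matcher)

{}

void setFirstFrame(

const Mat frame, vector<Point2f> bb,

string title, Stats& stats);

Mat process(

const Mat frame, Stats& stats);

Ptr<Feature2D> getDetector() {

return detector;

}

protected:

Ptr<Feature2D> detector;

Ptr<DescriptorMatcher> matcher;

Mat first_frame, first_desc;

vector<KeyPoint> first_kp;

vector<Point2f> object_bb;

};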

void Tracker::setFirstFrame(

const Mat frame, vector<Point2f> bb,

string title, Stats& stats)

{

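first_frame = frame.clone();

// Detect keypoints and compute descriptors for the reference frame.
detector->detectAndCompute(first_frame, noArray(), first_kp, first_desc);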

stats.keypoints = (int)first_kp.size();

drawBoundingBox(first_frame, bb);

object_bb = bb;

}

Mat Tracker::process(

const Mat frame, Stats& stats)

{

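vector<KeyPoint> kp;

Mat desc;

// Detect keypoints and compute descriptors for the current frame.
detector->detectAndCompute(frame, noArray(), kp, desc);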

stats.keypoints = (int)kp.size();

vector< vector<DMatch> > matches;

vector<KeyPoint> matched1, matched2;

matcher->knnMatch(first_desc, desc, matches, 2);

for(unsigned i = 0; i < matches.size(); i++) {

if(matches[i][0].distance < nn_match_ratio * matches[i][1].distance) {

matched1.push_back(first_kp[matches[i][0].queryIdx]);

matched2.push_back( kp[matches[i][0].trainIdx]);

}

}

stats.matches = (int)matched1.size();

Mat inlier_mask, homography;

vector<KeyPoint> inliers1, inliers2;

vector<DMatch> inlier_matches;

if(matched1.size() >= 4) {

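// Points() is assumed to be the KeyPoint-to-Point2f helper declared in utils.h.
homography = findHomography(Points(matched1), Points(matched2),

RANSAC, ransac_thresh, inlier_mask);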

}

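if(matched1.size() < 4 || homography.empty()) {

// No reliable homography: return the two frames side by side.
Mat res;

hconcat(first_frame, frame, res);

stats.inliers = 0;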

stats.ratio = 0;

return res;

}

for(unsigned i = 0; i < matched1.size(); i++) {

// Keep only the matches that RANSAC marked as inliers.
if(inlier_mask.at<uchar>(i)) {

int new_i = static_cast<int>(inliers1.size());

inliers1.push_back(matched1[i]);

inliers2.push_back(matched2[i]);

inlier_matches.push_back(

DMatch(new_i, new_i, 0));

}

}

stats.inliers = (int)inliers1.size();

stats.ratio = stats.inliers * 1.0 / stats.matches;

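vector<Point2f> new_bb;

// Map the reference bounding box into the current frame.
perspectiveTransform(object_bb, new_bb, homography);

Mat frame_with_bb = frame.clone();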

if(stats.inliers >= bb_min_inliers) {

drawBoundingBox(frame_with_bb, new_bb);

}

Mat res;

drawMatches(first_frame, inliers1, frame_with_bb, inliers2,

inlier_matches, res);

return res;

}

int main(int argc, char **argv)

{

if(argc < 4) {

cerr << "Usage: " << endl <<

"akaze_track input_path output_path bounding_box" << endl;

return 1;

}

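VideoCapture video_in(argv[1]);

// Each output frame stacks two side-by-side match images, hence twice the width and height.
VideoWriter video_out(argv[2],

(int)video_in.get(CAP_PROP_FOURCC),

video_in.get(CAP_PROP_FPS),

Size(2 * (int)video_in.get(CAP_PROP_FRAME_WIDTH),

2 * (int)video_in.get(CAP_PROP_FRAME_HEIGHT)));

if(!video_in.isOpened()) {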

cerr << "Couldn't open " << argv[1] << endl;

return 1;

}

if(!video_out.isOpened()) {

cerr << "Couldn't open " << argv[2] << endl;

return 1;

}

vector<Point2f> bb;

FileStorage fs(argv[3], FileStorage::READ);

if(fs["bounding_box"].empty()) {

cerr << "Couldn't read bounding_box from " << argv[3] << endl;

return 1;

}

fs["bounding_box"] >> bb;

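Stats stats, akaze_stats, orb_stats;

Ptr<AKAZE> akaze = AKAZE::create();

akaze->setThreshold(akaze_thresh);

Ptr<ORB> orb = ORB::create();

// AKAZE and ORB both produce binary descriptors by default, so Hamming distance is used.
Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create("BruteForce-Hamming");

Tracker akaze_tracker(akaze, matcher);

Tracker orb_tracker(orb, matcher);

Mat frame;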

video_in >> frame;

akaze_tracker.setFirstFrame(frame, bb, "AKAZE", stats);

orb_tracker.setFirstFrame(frame, bb, "ORB", stats);

Stats akaze_draw_stats, orb_draw_stats;

int frame_count = (int)video_in.get(CAP_PROP_FRAME_COUNT);

Mat akaze_res, orb_res, res_frame;

for(int i = 1; i < frame_count; i++) {

bool update_stats = (i % stats_update_period == 0);

video_in >> frame;

akaze_res = akaze_tracker.process(frame, stats);

akaze_stats += stats;

if(update_stats) {

akaze_draw_stats = stats;

}

orb_res = orb_tracker.process(frame, stats);

orb_stats += stats;

if(update_stats) {

orb_draw_stats = stats;

}

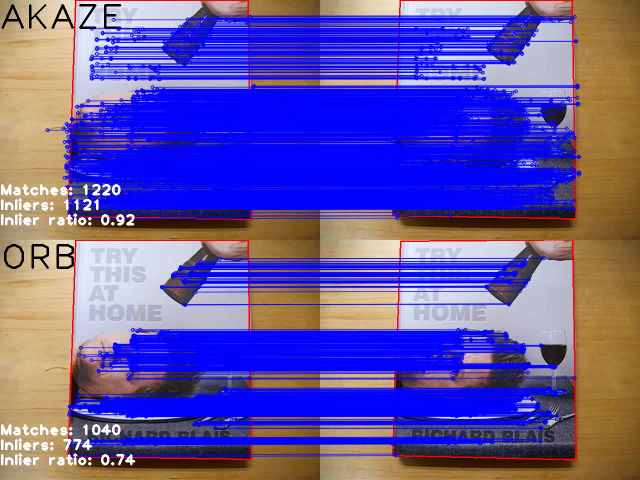

drawStatistics(akaze_res, akaze_draw_stats);

drawStatistics(orb_res, orb_draw_stats);

vconcat(akaze_res, orb_res, res_frame);

video_out << res_frame;

cout << i << "/" << frame_count - 1 << endl;

}

akaze_stats /= frame_count - 1;

orb_stats /= frame_count - 1;

printStatistics("AKAZE", akaze_stats);

printStatistics("ORB", orb_stats);

return 0;

}

This class implements the algorithm described above using the given feature detector and descriptor matcher.
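
As a rough illustration of what the class does per frame, the sketch below reproduces three of its steps in plain C++ without OpenCV, so it can be compiled on its own: the nearest-neighbour ratio test, the projection of the bounding box through a homography, and the inlier ratio stored in the statistics. The names `Pt`, `KnnPair`, `ratioTest`, `applyHomography`, `projectBox` and `inlierRatio` are hypothetical and are not part of the tutorial code or of OpenCV. The homography is assumed to be a row-major 3x3 matrix that has already been estimated.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins for cv::Point2f and for one 2-nearest-neighbour match.
struct Pt { double x, y; };
struct KnnPair { double best, second; };  // distances to the two closest descriptors

// Ratio test: keep a match only when its best distance is clearly smaller
// than the second-best one. Returns the indices of the kept pairs.
std::vector<std::size_t> ratioTest(const std::vector<KnnPair>& knn, double ratio) {
    std::vector<std::size_t> kept;
    for (std::size_t i = 0; i < knn.size(); ++i) {
        if (knn[i].best < ratio * knn[i].second) kept.push_back(i);
    }
    return kept;
}

// Apply a row-major 3x3 homography to one point using homogeneous coordinates.
Pt applyHomography(const std::array<double, 9>& h, Pt p) {
    const double w = h[6] * p.x + h[7] * p.y + h[8];
    return { (h[0] * p.x + h[1] * p.y + h[2]) / w,
             (h[3] * p.x + h[4] * p.y + h[5]) / w };
}

// Project every corner of the reference bounding box into the new frame.
std::vector<Pt> projectBox(const std::array<double, 9>& h, const std::vector<Pt>& bb) {
    std::vector<Pt> out;
    out.reserve(bb.size());
    for (const Pt& p : bb) out.push_back(applyHomography(h, p));
    return out;
}

// Fraction of ratio-test matches kept as inliers (0 when there are no matches).
double inlierRatio(int inliers, int matches) {
    return matches > 0 ? static_cast<double>(inliers) / matches : 0.0;
}
```

With a ratio of 0.8, a pair with distances (10, 20) passes the test while (18, 20) is rejected, because 18 is not below 0.8 * 20 = 16. In OpenCV itself, the projection step is available as `cv::perspectiveTransform`.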
