Chapter 1. Deployment Architecture

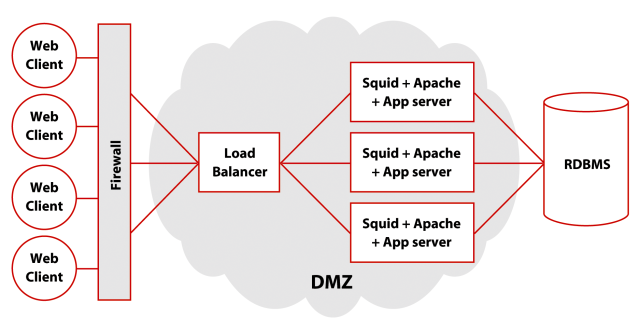

Red Hat recommends using a load-balancer, multiple front-end Web servers, and a database server for a typical CMS deployment. Figure 1-1 illustrates this configuration:

For this guide, we use the terms application server and Web server to mean the same server. In some instances they can be two faces of the same application. For example, Tomcat and Resin are application servers running the Java code that provides functionality and generates content for the Web server, and they also include full HTTP request handling. There can be more than one of these front-end servers for scalability and reliability. Here are some specific configuration recommendations:

- Load Balancer

A load balancer receives all of a website's incoming traffic and distributes it among the website's front-end Web servers. Red Hat uses load balancers to help ensure that no individual Web server becomes overwhelmed, by partitioning work among the available Web servers. The load balancer also provides a level of fault tolerance: it frequently monitors the status of the Web servers and removes any that are slow or non-responsive from the available server pool.

For a basic setup, Red Hat recommends at least a single-CPU 1 GHz PIII (or faster) machine with at least 1 GB of RAM running Red Hat Enterprise Linux AS and Red Hat Cluster Suite (http://www.redhat.com/software/rha/cluster/piranha/). Alternatively, a hardware load-balancing appliance, such as F5's BIG-IP, can be used. An optional backup load balancer provides additional fault tolerance.
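The pool behavior described above can be sketched in Python. This is a minimal illustration, not how Red Hat Cluster Suite or a BIG-IP appliance is implemented: it assumes simple round-robin distribution, and the `Backend` and `RoundRobinBalancer` names and the 500 ms slowness threshold are hypothetical. Servers that fail a health check or respond too slowly are left out of the pool, so traffic keeps flowing to the remaining Web servers.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Backend:
    """One front-end Web server as seen by the balancer (hypothetical type)."""
    name: str
    healthy: bool = True       # result of the last health check
    response_ms: float = 0.0   # latency measured by the last health check


class RoundRobinBalancer:
    """Round-robin distribution over the servers that passed their checks."""

    def __init__(self, backends, max_response_ms=500.0):
        self.backends = list(backends)
        self.max_response_ms = max_response_ms  # hypothetical "too slow" threshold
        self._counter = itertools.count()

    def pool(self):
        # Drop servers that are non-responsive or slower than the threshold.
        return [b for b in self.backends
                if b.healthy and b.response_ms <= self.max_response_ms]

    def pick(self):
        available = self.pool()
        if not available:
            raise RuntimeError("no healthy Web servers in the pool")
        return available[next(self._counter) % len(available)]


lb = RoundRobinBalancer([
    Backend("web1", response_ms=40),
    Backend("web2", response_ms=900),    # too slow: removed from the pool
    Backend("web3", healthy=False),      # failed check: removed from the pool
    Backend("web4", response_ms=60),
])
print([lb.pick().name for _ in range(4)])  # ['web1', 'web4', 'web1', 'web4']
```

Real balancers usually offer weighted or least-connections scheduling as well; round-robin is used here only because it is the simplest to follow.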

- Supplemental Balancing and Caching

Another combination that Red Hat recommends for all CMS installations is Squid and Apache, as detailed in Section 1.1 Deploying Squid and Apache for Caching and Acceleration. This configuration can be used alongside a load-balancing solution and is implemented directly on the Web servers. Object caching greatly improves performance when building database-intensive pages. The exception is installations that make extensive use of p2fs (publish-to-filesystem): there, objects are served from disk rather than from the database, so Squid caching does not normally improve performance.

- Web Server

Red Hat recommends running Web servers and application code on a number of small, relatively inexpensive Red Hat Enterprise Linux machines. These front-end servers each run a copy of Squid, which caches content; Red Hat Stronghold/Apache; an application server, such as Resin or Tomcat; the CMS application itself; and any additional custom software.

By distributing the load across multiple machines, you gain inexpensive hardware scalability as well as reliability. Because the CMS's bottleneck is Web server CPU, you can add Web servers to grow a website's capacity as needed.

Red Hat recommends using the equivalent of at least a dual-PIII 1.4 GHz server equipped with 1 GB of RAM and SCSI drives in a RAID 1 configuration for each Web server.
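Because capacity grows with the number of Web servers, a rough sizing estimate is a ceiling division plus spare machines that the load balancer can fall back on when it removes a failed server from the pool. The helper below is hypothetical and the request rates are placeholders; measure per-server throughput for your own CMS pages before relying on such a figure.

```python
import math


def web_servers_needed(peak_rps: float, per_server_rps: float, spare: int = 1) -> int:
    """Front-end servers needed to carry peak_rps with `spare` extra machines.

    The spare servers keep capacity intact when the load balancer removes
    a failed server from the pool. All inputs are site-specific estimates.
    """
    if per_server_rps <= 0:
        raise ValueError("per_server_rps must be positive")
    return math.ceil(peak_rps / per_server_rps) + spare


# Hypothetical figures: 120 requests/s at peak, 35 requests/s per Web server.
print(web_servers_needed(peak_rps=120, per_server_rps=35))  # 5
```

Four servers cover the peak (120 / 35 rounds up to 4), and the fifth keeps the site at full capacity if one drops out.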

- Database Server

Red Hat CMS stores all of its persistent information using an RDBMS. CMS 6.1 supports Oracle8i®, Oracle9i®, and PostgreSQL 7.3.x. PostgreSQL 7.2.x is only supported in CMS versions prior to 6.0.

Red Hat recommends using the equivalent of at least a dual-PIII 1.4 GHz server equipped with 4 GB of RAM and SCSI drives in a RAID 1 configuration for the database server. Both Oracle Database and PostgreSQL support a large number of configuration options for optimizing performance, backup, and recovery. Consult with a DBA for more information regarding RDBMS configuration.

- Network

All machines in the deployment architecture should communicate on the same physical 100 Mbit or Gigabit Ethernet network to minimize latency and maximize throughput.

1.1. Deploying Squid and Apache for Caching and Acceleration

This section provides a configuration guide for setting up a system where you have two or more application servers being load balanced by a caching web server running Squid and Apache. This generally improves performance of public areas of CMS websites by serving repeat requests for the same URL directly from the Squid cache.

For example, the web/cache server might cache a portal home page, the category navigation pages, and content items. Even when using p2fs (publish-to-filesystem), there are typically benefits to using Squid.
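The cache-hit behavior can be sketched as a URL-keyed store in front of the application server. This is a simplified model under stated assumptions: `UrlCache` and `fake_app_server` are hypothetical, the paths are made up, and unlike Squid the sketch ignores expiry times and cache-control headers. Repeat requests for the same URL are answered from the store without calling the application server again.

```python
from typing import Callable, Dict


class UrlCache:
    """Minimal URL-keyed cache in front of an origin fetch function."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]):
        self._fetch = fetch_from_origin
        self._store: Dict[str, bytes] = {}
        self.hits = 0
        self.misses = 0

    def get(self, url: str) -> bytes:
        if url in self._store:
            self.hits += 1          # served from cache, app server untouched
            return self._store[url]
        self.misses += 1            # first request: ask the app server
        body = self._fetch(url)
        self._store[url] = body
        return body


origin_calls = []


def fake_app_server(url: str) -> bytes:
    # Hypothetical stand-in for the CMS application server.
    origin_calls.append(url)
    return f"<html>{url}</html>".encode()


cache = UrlCache(fake_app_server)
for url in ["/portal/", "/portal/", "/navigation/", "/portal/"]:
    cache.get(url)
print(cache.hits, cache.misses, len(origin_calls))  # 2 2 2
```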

The Squid/Apache combination achieves HTTP acceleration through its lower per-request memory and processor overhead compared to an application server, which allows the web/cache server to handle far more concurrent connections. Because Squid sits on the same high-speed LAN as the application server, it can read the response from the app server very quickly, freeing the app server to handle the next request. Squid then does all the interacting with the relatively slow client.
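A back-of-the-envelope calculation shows why handing the response to Squid frees the application server sooner. The page size and link speeds below are assumptions chosen for illustration, and the formula ignores latency and TCP overhead.

```python
def transfer_seconds(size_bytes: int, link_bits_per_second: float) -> float:
    """Time to push a response over a link, ignoring latency and protocol overhead."""
    return size_bytes * 8 / link_bits_per_second


page = 50 * 1024          # hypothetical 50 KB page
modem = 56_000            # slow dial-up client, 56 kbit/s
lan = 100_000_000         # 100 Mbit Ethernet between Squid and the app server

# Without Squid the app server is tied up for the whole client transfer;
# with Squid it is only tied up for the LAN transfer.
print(f"direct to client: {transfer_seconds(page, modem):.2f} s")
print(f"to Squid on LAN:  {transfer_seconds(page, lan) * 1000:.2f} ms")
```

With these figures the application server would be busy for about 7.3 s serving a dial-up client directly, but only about 4 ms handing the same page to Squid over the LAN.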

You can read more about Squid at http://www.squid-cache.org/.

One of the main challenges of this deployment is running both services without breaking virtual hosting. Virtual-hosting problems occur most often with Microsoft Internet Explorer. To overcome this, deploy Apache and Squid on the same machine, using the same port number: Apache is configured to bind only to 127.0.0.1, and Squid binds only to the host's public IP address.

In /etc/httpd/conf/httpd.conf, uncomment and change:

BindAddress *

to bind to localhost:

BindAddress 127.0.0.1

Next, uncomment and change:

Listen 80

to include the IP address:

Listen 127.0.0.1:80

Then go down to the <IfDefine HAVE_SSL> block containing the two Listen statements. Add this statement:

Listen 127.0.0.1:443

Next, begin configuring /etc/squid/squid.conf by changing:

# http_port 3128

to read the following, substituting your host's public IP address for 192.168.0.100:

http_port 192.168.0.100:80
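Once both files are edited, a short script can confirm the address split described earlier: Apache on loopback only, Squid on a non-loopback address, both on the same port. This is a hypothetical helper, not a Red Hat or Squid tool. It only understands the simple `Listen [addr:]port` and `http_port [addr:]port` forms used in this section, and it treats a bare port number as binding every address.

```python
import ipaddress
import re


def _bindings(conf_text: str, directive: str):
    """(address, port) pairs for active 'directive [addr:]port' lines."""
    pattern = re.compile(rf"^\s*{directive}\s+(?:([\d.]+):)?(\d+)\s*$")
    pairs = []
    for line in conf_text.splitlines():
        m = pattern.match(line)
        if m:
            # A bare port with no address binds every interface.
            pairs.append((m.group(1) or "0.0.0.0", int(m.group(2))))
    return pairs


def check_split(httpd_conf: str, squid_conf: str) -> list:
    """List problems with the Apache-on-loopback / Squid-on-public-IP split."""
    problems = []
    apache = _bindings(httpd_conf, "Listen")
    squid = _bindings(squid_conf, "http_port")
    for addr, port in apache:
        if not ipaddress.ip_address(addr).is_loopback:
            problems.append(f"Apache binds {addr}:{port}, expected 127.0.0.1")
    apache_ports = {port for _, port in apache}
    for addr, port in squid:
        ip = ipaddress.ip_address(addr)
        if ip.is_loopback or ip.is_unspecified:
            problems.append(f"Squid binds {addr}:{port}, expected the public IP")
        if port not in apache_ports:
            problems.append(f"Squid port {port} has no matching Apache Listen")
    return problems


httpd_conf = "BindAddress 127.0.0.1\nListen 127.0.0.1:80\nListen 127.0.0.1:443\n"
squid_conf = "# http_port 3128\nhttp_port 192.168.0.100:80\n"
print(check_split(httpd_conf, squid_conf))  # []
```

On a live system, pass the text of /etc/httpd/conf/httpd.conf and /etc/squid/squid.conf instead of the sample strings. The sketch reads `Listen` lines inside `<IfDefine>` blocks unconditionally, so any bare `Listen 80` left there is reported.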

The default Squid configuration does not cache URLs that contain query parameters. This exists only for compatibility with badly written CGI scripts that do not send cache-control headers. Because the CMS code handles this properly, this behavior should be changed. In squid.conf, comment out the following lines:

#hierarchy_stoplist cgi-bin ?
#acl QUERY urlpath_regex cgi-bin \?
#no_cache deny QUERY
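To see which requests the default rules keep out of the cache, the `acl QUERY urlpath_regex cgi-bin \?` line can be mimicked with Python regular expressions. The sketch assumes, as Squid does for multiple values on one acl line, that a URL path matching either pattern matches the acl; `matches_query_acl` and the sample paths are hypothetical. Commenting out `no_cache deny QUERY` is what allows the query-string URLs in this list to be cached.

```python
import re

# Patterns from the default line: acl QUERY urlpath_regex cgi-bin \?
# A path matching any one of them matches the acl.
QUERY_PATTERNS = [re.compile(r"cgi-bin"), re.compile(r"\?")]


def matches_query_acl(urlpath: str) -> bool:
    """True if 'no_cache deny QUERY' would keep this URL path out of the cache."""
    return any(p.search(urlpath) for p in QUERY_PATTERNS)


for path in ["/portal/", "/content/item?id=42", "/cgi-bin/test.cgi"]:
    verdict = "not cached by default" if matches_query_acl(path) else "cacheable"
    print(f"{path:22} {verdict}")
```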

Turn on referrer and user-agent logging so that log analysis is useful:

useragent_log /var/log/squid/agent.log
referer_log /var/log/squid/referer.log

Tip: You may need to compile Squid with the --enable-referer-log and --enable-agent-log options.

Add access control rules to allow public users to access the cache. Add the following lines near the other block of acl definitions, substituting your host's public-facing IP address for 192.168.0.100:

acl accel_host dst 127.0.0.1/255.255.255.255
acl accel_host dst 192.168.0.100/255.255.255.255
acl accel_port port 80

If the firewall in front of the Squid server has a different IP address from the network interface, you need to add that address as well; replace 123.45.67.89 with your firewall's external address:

acl accel_host dst 123.45.67.89/255.255.255.255

Then allow access to requests that match these ACLs. Comment out:

#http_access deny all

and add:

http_access deny !accel_host
http_access deny !accel_port
http_access allow all
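Squid evaluates `http_access` lines top to bottom, and the first line that matches decides the request. The Python model below reproduces that order for the `accel_host` and `accel_port` acls defined earlier, using the example address 192.168.0.100; the function names are hypothetical and the optional firewall address is left out. The effect is that the cache only serves requests addressed to this host on port 80.

```python
import ipaddress

# acl accel_host dst ...: the loopback address and the example public IP.
ACCEL_HOSTS = [
    ipaddress.ip_network("127.0.0.1/255.255.255.255"),
    ipaddress.ip_network("192.168.0.100/255.255.255.255"),
]
# acl accel_port port 80
ACCEL_PORTS = {80}


def accel_host(dst_ip: str) -> bool:
    addr = ipaddress.ip_address(dst_ip)
    return any(addr in net for net in ACCEL_HOSTS)


def accel_port(dst_port: int) -> bool:
    return dst_port in ACCEL_PORTS


# http_access lines in file order: (action, predicate on destination ip/port).
RULES = [
    ("deny", lambda ip, port: not accel_host(ip)),    # http_access deny !accel_host
    ("deny", lambda ip, port: not accel_port(port)),  # http_access deny !accel_port
    ("allow", lambda ip, port: True),                 # http_access allow all
]


def http_access(dst_ip: str, dst_port: int) -> str:
    """Return the action of the first matching rule, as Squid does."""
    for action, matches in RULES:
        if matches(dst_ip, dst_port):
            return action
    # Squid's fallback when nothing matches: the opposite of the last rule.
    return "deny" if RULES[-1][0] == "allow" else "allow"


for ip, port in [("192.168.0.100", 80), ("10.1.2.3", 80), ("192.168.0.100", 8080)]:
    print(ip, port, http_access(ip, port))
```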

Set the main hostname, replacing www.example.com with your hostname:

visible_hostname www.example.com

By default, Squid limits HTTP file uploads to 1 MB. Increase this to at least 20 MB; otherwise CMS file storage is of limited use:

request_body_max_size 20 MB

Tell Squid to act as an accelerator for the Apache server:

httpd_accel_host 127.0.0.1
httpd_accel_port 80

Finally, for virtual hosting to work, set:

httpd_accel_single_host on
httpd_accel_uses_host_header on
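These two directives work together: `httpd_accel_single_host on` sends every request to the one back end at 127.0.0.1:80, while `httpd_accel_uses_host_header on` builds the requested URL, and therefore the cache key, from the client's `Host:` header. The function below is a simplified, hypothetical model of that behavior, not Squid's own code (Squid's URL rewriting also handles ports and other details). It shows that without the Host header, two virtual hosts would share a single cache entry for `/`.

```python
from typing import Optional, Tuple


def accelerate(path: str, host_header: Optional[str],
               accel_host: str = "127.0.0.1", accel_port: int = 80,
               uses_host_header: bool = True) -> Tuple[str, Tuple[str, int]]:
    """Return (url_used_as_cache_key, backend_address) for one request."""
    if uses_host_header and host_header:
        # httpd_accel_uses_host_header on: the site name comes from Host:.
        url = f"http://{host_header}{path}"
    else:
        # Otherwise every site collapses onto the fixed accelerator host.
        url = f"http://{accel_host}:{accel_port}{path}"
    # httpd_accel_single_host on: always forward to the one Apache instance.
    backend = (accel_host, accel_port)
    return url, backend


sites = ["www.example.com", "www.example.org"]
with_host = {accelerate("/", h)[0] for h in sites}
without_host = {accelerate("/", h, uses_host_header=False)[0] for h in sites}
print(len(with_host), len(without_host))  # 2 1
```

With the Host header ignored, the second site would be served the first site's cached home page, which is the virtual-hosting breakage this configuration avoids.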