Clustering and High Availability in ServiceMix

ServiceMix supports both high availability (HA) and clustering of containers. Because you can configure HA without clustering, it is helpful to treat them as two separate tasks.

High Availability in ServiceMix

Within the scope of this document, high availability (HA) is defined as two distinct ServiceMix container instances configured in a master/slave configuration. In ALL cases, the master is ACTIVE and the slave is in STANDBY mode, waiting for a failover event to trigger it to take over.

As ServiceMix leverages ActiveMQ to provide HA functionality, there are a few choices for configuring master/slave via ActiveMQ, including the shared filesystem master/slave and JDBC master/slave approaches discussed later in this document.

(See An Introduction to ActiveMQ Master/Slave for more information.)

Clustering in ServiceMix

Within the scope of this document, a cluster is defined as two or more ACTIVE ServiceMix container instances that are networked together. A cluster provides the following benefits:

Example: Configuring HA

When learning about ServiceMix HA configuration, a good starting point is to configure HA on a single host. In this scenario, let's assume we have container1_host1 (master) and container2_host1 (slave). To configure our example using shared filesystem master/slave, the steps are as follows:

1) Install two ServiceMix instances (servicemix1 and servicemix2).

2) Ensure <servicemix2> is an exact replica of <servicemix1>. The exact same files should be installed in the <servicemix2>/hotdeploy and <servicemix2>/conf directories.

3) Edit the <servicemix1>/conf/activemq.xml file and configure the ActiveMQ persistence adapter:

    <!-- We use 0.0.0.0 per AMQ-2094 -->
    <amq:transportConnectors>
      <amq:transportConnector uri="tcp://0.0.0.0:61616"/>
    </amq:transportConnectors>
    <amq:persistenceAdapter>
      <amq:amqPersistenceAdapter directory="file:<shared>/data/amq"/>
    </amq:persistenceAdapter>

4) Edit <servicemix2>/conf/activemq.xml and verify that the ActiveMQ persistence adapter configuration matches:

    <amq:transportConnectors>
      <amq:transportConnector uri="tcp://0.0.0.0:61616"/>
    </amq:transportConnectors>
    <amq:persistenceAdapter>
      <amq:amqPersistenceAdapter directory="file:<shared>/data/amq"/>
    </amq:persistenceAdapter>

5) When using the same host, you MAY need to change the JMX ports to avoid port conflicts. Edit the <servicemix2>/conf/servicemix.properties file and change rmi.port:

    rmi.port = 1098

How to Test the Configuration

Follow these steps to test the configuration:

1) Start the first ServiceMix instance, which becomes the master, by running ServiceMix from the root of its install directory:

    servicemix1_home $ ./bin/servicemix

2) Start the slave container:

    servicemix2_home $ ./bin/servicemix

3) Press Ctrl-C in the master instance. You should see the slave fail over.

This configuration has some advantages and some disadvantages:

Advantages:

Disadvantages:

Extending the HA Example to Separate Hosts

This example can be extended so that the master and slave run on separate hosts. Both the shared filesystem master/slave and the JDBC master/slave approaches still require that both instances access the SAME persistent store. With shared filesystem master/slave, this can be provided via a SAN; with JDBC master/slave, both instances need to be able to access the same database instance.

You pay for this flexibility with additional configuration overhead. Specifically, you may need to introduce failover URLs into your deployed component configurations. Example:

    failover://(tcp://host_servicemix1:61616,tcp://host_servicemix2:61616)?initialReconnectDelay=100

You may also need to provide your clients with both HA host addresses (or insulate clients from this detail via a hardware load balancer). Clients need to be aware that only one instance will be ACTIVE at a time. See the ActiveMQ Failover Transport Reference for more details on failover URLs.

Clustering Configuration

To create a network of ServiceMix containers, you need to establish network connections between each of the containers in the network. Suppose we extend the HA example above and add two more container processes, so that we have smix1_host1 (master) and smix2_host1 (slave) on one host and a second master/slave pair on host2 whose active container is smix1_host2. We then need to establish a network connection between the two active containers (namely smix1_host1 and smix1_host2).
As ServiceMix leverages ActiveMQ to provide clustering functionality, you have a few choices in terms of how you configure the NetworkConnectors:

1) Static Network Connectors

With a static configuration, each NetworkConnector in the cluster is wired up explicitly by listing the remote brokers in its uri. Here's an example of a static discovery configuration:

    <!-- We are setting our brokerName to be unique for this container -->
    <amq:broker id="broker" brokerName="host1_broker1" depends-on="jmxServer">
      ....
      <networkConnectors>
        <networkConnector name="host1_to_host2" uri="static://(tcp://host2:61616)"/>
        <!-- Here's what it would look like for a three-container network -->
        <!-- (Note it's not necessary to list our own hostname in the uri list) -->
        <!-- networkConnector name="host1_to_host2_host3" uri="static://(tcp://host2:61616,tcp://host3:61616)"/ -->
      </networkConnectors>
    </amq:broker>

2) Multicast

If multicast is enabled on your network and configured in <servicemix>/conf/activemq.xml, then when the two containers are started they should detect each other and transparently connect to one another. Here's an example of a multicast discovery configuration:

    <networkConnectors>
      <!-- by default just auto discover the other brokers -->
      <networkConnector name="default-nc" uri="multicast://default"/>
    </networkConnectors>
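For the static option, the example above shows only the host1 side. ActiveMQ network connectors forward messages in one direction by default, so the container on host2 would normally need a matching connector that points back at host1. The fragment below is a minimal sketch of what that counterpart might look like. The brokerName host2_broker1 and the connector name host2_to_host1 are illustrative assumptions, not values taken from a real installation, and the rest of the broker configuration is assumed to mirror the host1 example.

```xml
<!-- Hypothetical counterpart for the container on host2 (names are illustrative) -->
<!-- The brokerName must again be unique for this container -->
<amq:broker id="broker" brokerName="host2_broker1" depends-on="jmxServer">
  <!-- remaining broker settings assumed to match the host1 example -->
  <networkConnectors>
    <!-- Points back at host1 so that messages can also flow from host2 to host1 -->
    <networkConnector name="host2_to_host1" uri="static://(tcp://host1:61616)"/>
  </networkConnectors>
</amq:broker>
```

With multicast discovery this per-host wiring is not needed, since each broker finds its peers automatically.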

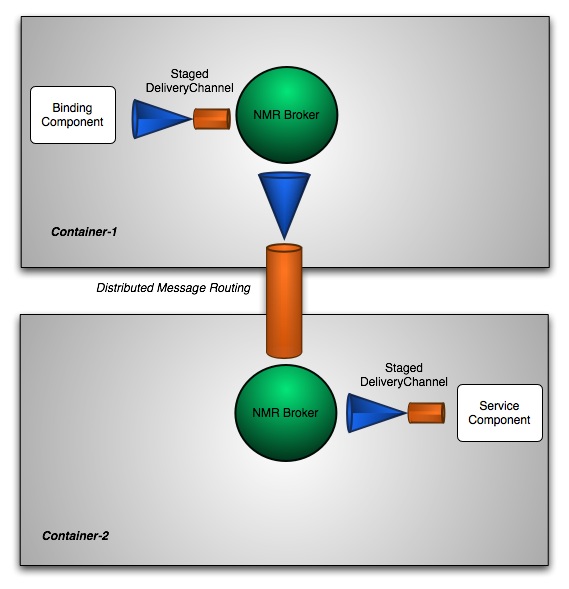

Diagram

Below is an example of a remote flow in a clustered configuration.

JMS Flow
