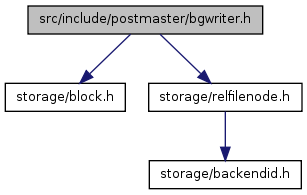

#include "storage/block.h"
#include "storage/relfilenode.h"


Functions

| Return type | Function |
| --- | --- |
| void | `BackgroundWriterMain (void) __attribute__((noreturn))` |
| void | `CheckpointerMain (void) __attribute__((noreturn))` |
| void | `RequestCheckpoint (int flags)` |
| void | `CheckpointWriteDelay (int flags, double progress)` |
| bool | `ForwardFsyncRequest (RelFileNode rnode, ForkNumber forknum, BlockNumber segno)` |
| void | `AbsorbFsyncRequests (void)` |
| Size | `CheckpointerShmemSize (void)` |
| void | `CheckpointerShmemInit (void)` |
| bool | `FirstCallSinceLastCheckpoint (void)` |

Variables

| Type | Variable |
| --- | --- |
| int | `BgWriterDelay` |
| int | `CheckPointTimeout` |
| int | `CheckPointWarning` |
| double | `CheckPointCompletionTarget` |

| void AbsorbFsyncRequests | ( | void | ) |

Definition at line 1299 of file checkpointer.c.

References AmCheckpointerProcess, BgWriterStats, CheckpointerCommLock, END_CRIT_SECTION, CheckpointerRequest::forknum, LW_EXCLUSIVE, LWLockAcquire(), LWLockRelease(), PgStat_MsgBgWriter::m_buf_fsync_backend, PgStat_MsgBgWriter::m_buf_written_backend, CheckpointerShmemStruct::num_backend_fsync, CheckpointerShmemStruct::num_backend_writes, CheckpointerShmemStruct::num_requests, palloc(), pfree(), RememberFsyncRequest(), CheckpointerShmemStruct::requests, CheckpointerRequest::rnode, CheckpointerRequest::segno, and START_CRIT_SECTION.

Referenced by CheckpointerMain(), CheckpointWriteDelay(), mdpostckpt(), and mdsync().

{

CheckpointerRequest *requests = NULL;

CheckpointerRequest *request;

int n;

if (!AmCheckpointerProcess())

return;

/*

* We have to PANIC if we fail to absorb all the pending requests (eg,

* because our hashtable runs out of memory). This is because the system

* cannot run safely if we are unable to fsync what we have been told to

* fsync. Fortunately, the hashtable is so small that the problem is

* quite unlikely to arise in practice.

*/

START_CRIT_SECTION();

/*

* We try to avoid holding the lock for a long time by copying the request

* array.

*/

LWLockAcquire(CheckpointerCommLock, LW_EXCLUSIVE);

/* Transfer stats counts into pending pgstats message */

BgWriterStats.m_buf_written_backend += CheckpointerShmem->num_backend_writes;

BgWriterStats.m_buf_fsync_backend += CheckpointerShmem->num_backend_fsync;

CheckpointerShmem->num_backend_writes = 0;

CheckpointerShmem->num_backend_fsync = 0;

n = CheckpointerShmem->num_requests;

if (n > 0)

{

requests = (CheckpointerRequest *) palloc(n * sizeof(CheckpointerRequest));

memcpy(requests, CheckpointerShmem->requests, n * sizeof(CheckpointerRequest));

}

CheckpointerShmem->num_requests = 0;

LWLockRelease(CheckpointerCommLock);

for (request = requests; n > 0; request++, n--)

RememberFsyncRequest(request->rnode, request->forknum, request->segno);

if (requests)

pfree(requests);

END_CRIT_SECTION();

}
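
The function above copies the shared request array while it holds the lock and only processes the private copy after releasing it. A minimal sketch of that pattern outside PostgreSQL follows. It assumes a POSIX mutex in place of the LWLock and `malloc` in place of `palloc`; `SharedQueue`, `QUEUE_CAPACITY`, and `drain_queue` are hypothetical names used only for illustration.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>
#include <string.h>

#define QUEUE_CAPACITY 8        /* hypothetical fixed capacity */

typedef struct
{
    pthread_mutex_t lock;
    int             num_requests;
    int             requests[QUEUE_CAPACITY];
} SharedQueue;

/*
 * Copy all pending requests into a private array while holding the lock,
 * reset the shared queue, and release the lock before returning.
 * Returns the number of copied requests, or -1 if allocation failed (in
 * which case the shared queue is left untouched). The caller processes
 * and frees *out without holding the lock.
 */
static int
drain_queue(SharedQueue *q, int **out)
{
    int  n;
    int *copy = NULL;

    pthread_mutex_lock(&q->lock);

    n = q->num_requests;
    assert(n >= 0 && n <= QUEUE_CAPACITY);

    if (n > 0)
    {
        copy = malloc((size_t) n * sizeof(int));
        if (copy == NULL)
        {
            pthread_mutex_unlock(&q->lock);
            return -1;
        }
        memcpy(copy, q->requests, (size_t) n * sizeof(int));
    }
    q->num_requests = 0;

    pthread_mutex_unlock(&q->lock);

    *out = copy;
    return n;
}
```

Unlike this sketch, the real function runs inside a critical section, so an allocation failure there escalates to PANIC instead of returning an error code.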

| void BackgroundWriterMain | ( | void | ) |

Definition at line 94 of file bgwriter.c.

References AbortBufferIO(), ALLOCSET_DEFAULT_INITSIZE, ALLOCSET_DEFAULT_MAXSIZE, ALLOCSET_DEFAULT_MINSIZE, AllocSetContextCreate(), AtEOXact_Buffers(), AtEOXact_Files(), AtEOXact_HashTables(), AtEOXact_SMgr(), bg_quickdie(), BgBufferSync(), BgSigHupHandler(), bgwriter_sigusr1_handler(), BgWriterDelay, BlockSig, CurrentResourceOwner, elog, EmitErrorReport(), error_context_stack, ExitOnAnyError, FATAL, FirstCallSinceLastCheckpoint(), FlushErrorState(), got_SIGHUP, HIBERNATE_FACTOR, HOLD_INTERRUPTS, LWLockReleaseAll(), MemoryContextResetAndDeleteChildren(), MemoryContextSwitchTo(), MyProc, NULL, PG_exception_stack, PG_SETMASK, pg_usleep(), PGC_SIGHUP, pgstat_send_bgwriter(), pqsignal(), proc_exit(), ProcessConfigFile(), PGPROC::procLatch, ReqShutdownHandler(), ResetLatch(), RESOURCE_RELEASE_BEFORE_LOCKS, ResourceOwnerCreate(), ResourceOwnerRelease(), RESUME_INTERRUPTS, shutdown_requested, SIG_DFL, SIG_IGN, SIGALRM, SIGCHLD, SIGCONT, sigdelset, SIGHUP, sigjmp_buf, SIGPIPE, SIGQUIT, sigsetjmp, SIGTTIN, SIGTTOU, SIGUSR1, SIGUSR2, SIGWINCH, smgrcloseall(), StrategyNotifyBgWriter(), TopMemoryContext, UnBlockSig, UnlockBuffers(), WaitLatch(), WL_LATCH_SET, WL_POSTMASTER_DEATH, and WL_TIMEOUT.

Referenced by AuxiliaryProcessMain().

{

sigjmp_buf local_sigjmp_buf;

MemoryContext bgwriter_context;

bool prev_hibernate;

/*

* If possible, make this process a group leader, so that the postmaster

* can signal any child processes too. (bgwriter probably never has any

* child processes, but for consistency we make all postmaster child

* processes do this.)

*/

#ifdef HAVE_SETSID

if (setsid() < 0)

elog(FATAL, "setsid() failed: %m");

#endif

/*

* Properly accept or ignore signals the postmaster might send us.

*

* bgwriter doesn't participate in ProcSignal signalling, but a SIGUSR1

* handler is still needed for latch wakeups.

*/

pqsignal(SIGHUP, BgSigHupHandler); /* set flag to read config file */

pqsignal(SIGINT, SIG_IGN);

pqsignal(SIGTERM, ReqShutdownHandler); /* shutdown */

pqsignal(SIGQUIT, bg_quickdie); /* hard crash time */

pqsignal(SIGALRM, SIG_IGN);

pqsignal(SIGPIPE, SIG_IGN);

pqsignal(SIGUSR1, bgwriter_sigusr1_handler);

pqsignal(SIGUSR2, SIG_IGN);

/*

* Reset some signals that are accepted by postmaster but not here

*/

pqsignal(SIGCHLD, SIG_DFL);

pqsignal(SIGTTIN, SIG_DFL);

pqsignal(SIGTTOU, SIG_DFL);

pqsignal(SIGCONT, SIG_DFL);

pqsignal(SIGWINCH, SIG_DFL);

/* We allow SIGQUIT (quickdie) at all times */

sigdelset(&BlockSig, SIGQUIT);

/*

* Create a resource owner to keep track of our resources (currently only

* buffer pins).

*/

CurrentResourceOwner = ResourceOwnerCreate(NULL, "Background Writer");

/*

* Create a memory context that we will do all our work in. We do this so

* that we can reset the context during error recovery and thereby avoid

* possible memory leaks. Formerly this code just ran in

* TopMemoryContext, but resetting that would be a really bad idea.

*/

bgwriter_context = AllocSetContextCreate(TopMemoryContext,

"Background Writer",

ALLOCSET_DEFAULT_MINSIZE,

ALLOCSET_DEFAULT_INITSIZE,

ALLOCSET_DEFAULT_MAXSIZE);

MemoryContextSwitchTo(bgwriter_context);

/*

* If an exception is encountered, processing resumes here.

*

* See notes in postgres.c about the design of this coding.

*/

if (sigsetjmp(local_sigjmp_buf, 1) != 0)

{

/* Since not using PG_TRY, must reset error stack by hand */

error_context_stack = NULL;

/* Prevent interrupts while cleaning up */

HOLD_INTERRUPTS();

/* Report the error to the server log */

EmitErrorReport();

/*

* These operations are really just a minimal subset of

* AbortTransaction(). We don't have very many resources to worry

* about in bgwriter, but we do have LWLocks, buffers, and temp files.

*/

LWLockReleaseAll();

AbortBufferIO();

UnlockBuffers();

/* buffer pins are released here: */

ResourceOwnerRelease(CurrentResourceOwner,

RESOURCE_RELEASE_BEFORE_LOCKS,

false, true);

/* we needn't bother with the other ResourceOwnerRelease phases */

AtEOXact_Buffers(false);

AtEOXact_SMgr();

AtEOXact_Files();

AtEOXact_HashTables(false);

/*

* Now return to normal top-level context and clear ErrorContext for

* next time.

*/

MemoryContextSwitchTo(bgwriter_context);

FlushErrorState();

/* Flush any leaked data in the top-level context */

MemoryContextResetAndDeleteChildren(bgwriter_context);

/* Now we can allow interrupts again */

RESUME_INTERRUPTS();

/*

* Sleep at least 1 second after any error. A write error is likely

* to be repeated, and we don't want to be filling the error logs as

* fast as we can.

*/

pg_usleep(1000000L);

/*

* Close all open files after any error. This is helpful on Windows,

* where holding deleted files open causes various strange errors.

* It's not clear we need it elsewhere, but shouldn't hurt.

*/

smgrcloseall();

}

/* We can now handle ereport(ERROR) */

PG_exception_stack = &local_sigjmp_buf;

/*

* Unblock signals (they were blocked when the postmaster forked us)

*/

PG_SETMASK(&UnBlockSig);

/*

* Reset hibernation state after any error.

*/

prev_hibernate = false;

/*

* Loop forever

*/

for (;;)

{

bool can_hibernate;

int rc;

/* Clear any already-pending wakeups */

ResetLatch(&MyProc->procLatch);

if (got_SIGHUP)

{

got_SIGHUP = false;

ProcessConfigFile(PGC_SIGHUP);

}

if (shutdown_requested)

{

/*

* From here on, elog(ERROR) should end with exit(1), not send

* control back to the sigsetjmp block above

*/

ExitOnAnyError = true;

/* Normal exit from the bgwriter is here */

proc_exit(0); /* done */

}

/*

* Do one cycle of dirty-buffer writing.

*/

can_hibernate = BgBufferSync();

/*

* Send off activity statistics to the stats collector

*/

pgstat_send_bgwriter();

if (FirstCallSinceLastCheckpoint())

{

/*

* After any checkpoint, close all smgr files. This is so we

* won't hang onto smgr references to deleted files indefinitely.

*/

smgrcloseall();

}

/*

* Sleep until we are signaled or BgWriterDelay has elapsed.

*

* Note: the feedback control loop in BgBufferSync() expects that we

* will call it every BgWriterDelay msec. While it's not critical for

* correctness that that be exact, the feedback loop might misbehave

* if we stray too far from that. Hence, avoid loading this process

* down with latch events that are likely to happen frequently during

* normal operation.

*/

rc = WaitLatch(&MyProc->procLatch,

WL_LATCH_SET | WL_TIMEOUT | WL_POSTMASTER_DEATH,

BgWriterDelay /* ms */ );

/*

* If no latch event and BgBufferSync says nothing's happening, extend

* the sleep in "hibernation" mode, where we sleep for much longer

* than bgwriter_delay says. Fewer wakeups save electricity. When a

* backend starts using buffers again, it will wake us up by setting

* our latch. Because the extra sleep will persist only as long as no

* buffer allocations happen, this should not distort the behavior of

* BgBufferSync's control loop too badly; essentially, it will think

* that the system-wide idle interval didn't exist.

*

* There is a race condition here, in that a backend might allocate a

* buffer between the time BgBufferSync saw the alloc count as zero

* and the time we call StrategyNotifyBgWriter. While it's not

* critical that we not hibernate anyway, we try to reduce the odds of

* that by only hibernating when BgBufferSync says nothing's happening

* for two consecutive cycles. Also, we mitigate any possible

* consequences of a missed wakeup by not hibernating forever.

*/

if (rc == WL_TIMEOUT && can_hibernate && prev_hibernate)

{

/* Ask for notification at next buffer allocation */

StrategyNotifyBgWriter(&MyProc->procLatch);

/* Sleep ... */

rc = WaitLatch(&MyProc->procLatch,

WL_LATCH_SET | WL_TIMEOUT | WL_POSTMASTER_DEATH,

BgWriterDelay * HIBERNATE_FACTOR);

/* Reset the notification request in case we timed out */

StrategyNotifyBgWriter(NULL);

}

/*

* Emergency bailout if postmaster has died. This is to avoid the

* necessity for manual cleanup of all postmaster children.

*/

if (rc & WL_POSTMASTER_DEATH)

exit(1);

prev_hibernate = can_hibernate;

}

}
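
The hibernation rule in the loop above can be isolated as a small pure function: the extended sleep happens only when the normal wait timed out and `BgBufferSync()` reported no activity for two consecutive cycles. The sketch below is illustrative only; `hibernate_sleep_ms` is a hypothetical helper, and `SKETCH_HIBERNATE_FACTOR` is an assumed value, since the definition of `HIBERNATE_FACTOR` does not appear on this page.

```c
#include <assert.h>
#include <stdbool.h>

#define SKETCH_HIBERNATE_FACTOR 50  /* assumed value, for illustration only */

/*
 * Return the length of the extra "hibernation" sleep in milliseconds,
 * or 0 if the caller should go straight to the next cycle.
 *
 * timed_out      - the regular wait ended by timeout, not by a latch event
 * can_hibernate  - this cycle saw no buffer activity
 * prev_hibernate - the previous cycle saw no buffer activity either
 */
static long
hibernate_sleep_ms(bool timed_out, bool can_hibernate, bool prev_hibernate,
                   int delay_ms)
{
    assert(delay_ms > 0);

    if (timed_out && can_hibernate && prev_hibernate)
        return (long) delay_ms * SKETCH_HIBERNATE_FACTOR;

    return 0;
}
```

Requiring two idle cycles in a row is what narrows the race described in the source comment: a single idle reading is not enough to enter the long sleep.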

| void CheckpointerMain | ( | void | ) |

Definition at line 192 of file checkpointer.c.

References AbortBufferIO(), AbsorbFsyncRequests(), ALLOCSET_DEFAULT_INITSIZE, ALLOCSET_DEFAULT_MAXSIZE, ALLOCSET_DEFAULT_MINSIZE, AllocSetContextCreate(), AtEOXact_Buffers(), AtEOXact_Files(), AtEOXact_HashTables(), AtEOXact_SMgr(), BgWriterStats, BlockSig, CheckArchiveTimeout(), CHECKPOINT_CAUSE_XLOG, CHECKPOINT_END_OF_RECOVERY, checkpoint_requested, CheckpointerShmemStruct::checkpointer_pid, PROC_HDR::checkpointerLatch, CheckPointTimeout, CheckPointWarning, chkpt_quickdie(), chkpt_sigusr1_handler(), ChkptSigHupHandler(), ckpt_active, ckpt_cached_elapsed, CheckpointerShmemStruct::ckpt_done, CheckpointerShmemStruct::ckpt_failed, CheckpointerShmemStruct::ckpt_flags, CheckpointerShmemStruct::ckpt_lck, ckpt_start_recptr, ckpt_start_time, CheckpointerShmemStruct::ckpt_started, CreateCheckPoint(), CreateRestartPoint(), CurrentResourceOwner, elog, EmitErrorReport(), ereport, errhint(), errmsg_plural(), error_context_stack, ExitOnAnyError, FATAL, FlushErrorState(), GetInsertRecPtr(), got_SIGHUP, HOLD_INTERRUPTS, last_checkpoint_time, last_xlog_switch_time, LOG, LWLockReleaseAll(), PgStat_MsgBgWriter::m_requested_checkpoints, PgStat_MsgBgWriter::m_timed_checkpoints, MemoryContextResetAndDeleteChildren(), MemoryContextSwitchTo(), Min, MyProc, MyProcPid, NULL, PG_exception_stack, PG_SETMASK, pg_usleep(), PGC_SIGHUP, pgstat_send_bgwriter(), pqsignal(), proc_exit(), ProcessConfigFile(), ProcGlobal, PGPROC::procLatch, RecoveryInProgress(), ReqCheckpointHandler(), ReqShutdownHandler(), ResetLatch(), RESOURCE_RELEASE_BEFORE_LOCKS, ResourceOwnerCreate(), ResourceOwnerRelease(), RESUME_INTERRUPTS, shutdown_requested, ShutdownXLOG(), SIG_DFL, SIG_IGN, SIGALRM, SIGCHLD, SIGCONT, sigdelset, SIGHUP, sigjmp_buf, SIGPIPE, SIGQUIT, sigsetjmp, SIGTTIN, SIGTTOU, SIGUSR1, SIGUSR2, SIGWINCH, smgrcloseall(), SpinLockAcquire, SpinLockRelease, TopMemoryContext, UnBlockSig, UnlockBuffers(), UpdateSharedMemoryConfig(), WaitLatch(), WL_LATCH_SET, WL_POSTMASTER_DEATH, WL_TIMEOUT, and 
XLogArchiveTimeout.

Referenced by AuxiliaryProcessMain().

{

sigjmp_buf local_sigjmp_buf;

MemoryContext checkpointer_context;

CheckpointerShmem->checkpointer_pid = MyProcPid;

/*

* If possible, make this process a group leader, so that the postmaster

* can signal any child processes too. (checkpointer probably never has

* any child processes, but for consistency we make all postmaster child

* processes do this.)

*/

#ifdef HAVE_SETSID

if (setsid() < 0)

elog(FATAL, "setsid() failed: %m");

#endif

/*

* Properly accept or ignore signals the postmaster might send us

*

* Note: we deliberately ignore SIGTERM, because during a standard Unix

* system shutdown cycle, init will SIGTERM all processes at once. We

* want to wait for the backends to exit, whereupon the postmaster will

* tell us it's okay to shut down (via SIGUSR2).

*/

pqsignal(SIGHUP, ChkptSigHupHandler); /* set flag to read config

* file */

pqsignal(SIGINT, ReqCheckpointHandler); /* request checkpoint */

pqsignal(SIGTERM, SIG_IGN); /* ignore SIGTERM */

pqsignal(SIGQUIT, chkpt_quickdie); /* hard crash time */

pqsignal(SIGALRM, SIG_IGN);

pqsignal(SIGPIPE, SIG_IGN);

pqsignal(SIGUSR1, chkpt_sigusr1_handler);

pqsignal(SIGUSR2, ReqShutdownHandler); /* request shutdown */

/*

* Reset some signals that are accepted by postmaster but not here

*/

pqsignal(SIGCHLD, SIG_DFL);

pqsignal(SIGTTIN, SIG_DFL);

pqsignal(SIGTTOU, SIG_DFL);

pqsignal(SIGCONT, SIG_DFL);

pqsignal(SIGWINCH, SIG_DFL);

/* We allow SIGQUIT (quickdie) at all times */

sigdelset(&BlockSig, SIGQUIT);

/*

* Initialize so that first time-driven event happens at the correct time.

*/

last_checkpoint_time = last_xlog_switch_time = (pg_time_t) time(NULL);

/*

* Create a resource owner to keep track of our resources (currently only

* buffer pins).

*/

CurrentResourceOwner = ResourceOwnerCreate(NULL, "Checkpointer");

/*

* Create a memory context that we will do all our work in. We do this so

* that we can reset the context during error recovery and thereby avoid

* possible memory leaks. Formerly this code just ran in

* TopMemoryContext, but resetting that would be a really bad idea.

*/

checkpointer_context = AllocSetContextCreate(TopMemoryContext,

"Checkpointer",

ALLOCSET_DEFAULT_MINSIZE,

ALLOCSET_DEFAULT_INITSIZE,

ALLOCSET_DEFAULT_MAXSIZE);

MemoryContextSwitchTo(checkpointer_context);

/*

* If an exception is encountered, processing resumes here.

*

* See notes in postgres.c about the design of this coding.

*/

if (sigsetjmp(local_sigjmp_buf, 1) != 0)

{

/* Since not using PG_TRY, must reset error stack by hand */

error_context_stack = NULL;

/* Prevent interrupts while cleaning up */

HOLD_INTERRUPTS();

/* Report the error to the server log */

EmitErrorReport();

/*

* These operations are really just a minimal subset of

* AbortTransaction(). We don't have very many resources to worry

* about in checkpointer, but we do have LWLocks, buffers, and temp

* files.

*/

LWLockReleaseAll();

AbortBufferIO();

UnlockBuffers();

/* buffer pins are released here: */

ResourceOwnerRelease(CurrentResourceOwner,

RESOURCE_RELEASE_BEFORE_LOCKS,

false, true);

/* we needn't bother with the other ResourceOwnerRelease phases */

AtEOXact_Buffers(false);

AtEOXact_SMgr();

AtEOXact_Files();

AtEOXact_HashTables(false);

/* Warn any waiting backends that the checkpoint failed. */

if (ckpt_active)

{

/* use volatile pointer to prevent code rearrangement */

volatile CheckpointerShmemStruct *cps = CheckpointerShmem;

SpinLockAcquire(&cps->ckpt_lck);

cps->ckpt_failed++;

cps->ckpt_done = cps->ckpt_started;

SpinLockRelease(&cps->ckpt_lck);

ckpt_active = false;

}

/*

* Now return to normal top-level context and clear ErrorContext for

* next time.

*/

MemoryContextSwitchTo(checkpointer_context);

FlushErrorState();

/* Flush any leaked data in the top-level context */

MemoryContextResetAndDeleteChildren(checkpointer_context);

/* Now we can allow interrupts again */

RESUME_INTERRUPTS();

/*

* Sleep at least 1 second after any error. A write error is likely

* to be repeated, and we don't want to be filling the error logs as

* fast as we can.

*/

pg_usleep(1000000L);

/*

* Close all open files after any error. This is helpful on Windows,

* where holding deleted files open causes various strange errors.

* It's not clear we need it elsewhere, but shouldn't hurt.

*/

smgrcloseall();

}

/* We can now handle ereport(ERROR) */

PG_exception_stack = &local_sigjmp_buf;

/*

* Unblock signals (they were blocked when the postmaster forked us)

*/

PG_SETMASK(&UnBlockSig);

/*

* Ensure all shared memory values are set correctly for the config. Doing

* this here ensures no race conditions from other concurrent updaters.

*/

UpdateSharedMemoryConfig();

/*

* Advertise our latch that backends can use to wake us up while we're

* sleeping.

*/

ProcGlobal->checkpointerLatch = &MyProc->procLatch;

/*

* Loop forever

*/

for (;;)

{

bool do_checkpoint = false;

int flags = 0;

pg_time_t now;

int elapsed_secs;

int cur_timeout;

int rc;

/* Clear any already-pending wakeups */

ResetLatch(&MyProc->procLatch);

/*

* Process any requests or signals received recently.

*/

AbsorbFsyncRequests();

if (got_SIGHUP)

{

got_SIGHUP = false;

ProcessConfigFile(PGC_SIGHUP);

/*

* Checkpointer is the last process to shut down, so we ask it to

* hold the keys for a range of other tasks required most of which

* have nothing to do with checkpointing at all.

*

* For various reasons, some config values can change dynamically

* so the primary copy of them is held in shared memory to make

* sure all backends see the same value. We make Checkpointer

* responsible for updating the shared memory copy if the

* parameter setting changes because of SIGHUP.

*/

UpdateSharedMemoryConfig();

}

if (checkpoint_requested)

{

checkpoint_requested = false;

do_checkpoint = true;

BgWriterStats.m_requested_checkpoints++;

}

if (shutdown_requested)

{

/*

* From here on, elog(ERROR) should end with exit(1), not send

* control back to the sigsetjmp block above

*/

ExitOnAnyError = true;

/* Close down the database */

ShutdownXLOG(0, 0);

/* Normal exit from the checkpointer is here */

proc_exit(0); /* done */

}

/*

* Force a checkpoint if too much time has elapsed since the last one.

* Note that we count a timed checkpoint in stats only when this

* occurs without an external request, but we set the CAUSE_TIME flag

* bit even if there is also an external request.

*/

now = (pg_time_t) time(NULL);

elapsed_secs = now - last_checkpoint_time;

if (elapsed_secs >= CheckPointTimeout)

{

if (!do_checkpoint)

BgWriterStats.m_timed_checkpoints++;

do_checkpoint = true;

flags |= CHECKPOINT_CAUSE_TIME;

}

/*

* Do a checkpoint if requested.

*/

if (do_checkpoint)

{

bool ckpt_performed = false;

bool do_restartpoint;

/* use volatile pointer to prevent code rearrangement */

volatile CheckpointerShmemStruct *cps = CheckpointerShmem;

/*

* Check if we should perform a checkpoint or a restartpoint. As a

* side-effect, RecoveryInProgress() initializes TimeLineID if

* it's not set yet.

*/

do_restartpoint = RecoveryInProgress();

/*

* Atomically fetch the request flags to figure out what kind of a

* checkpoint we should perform, and increase the started-counter

* to acknowledge that we've started a new checkpoint.

*/

SpinLockAcquire(&cps->ckpt_lck);

flags |= cps->ckpt_flags;

cps->ckpt_flags = 0;

cps->ckpt_started++;

SpinLockRelease(&cps->ckpt_lck);

/*

* The end-of-recovery checkpoint is a real checkpoint that's

* performed while we're still in recovery.

*/

if (flags & CHECKPOINT_END_OF_RECOVERY)

do_restartpoint = false;

/*

* We will warn if (a) too soon since last checkpoint (whatever

* caused it) and (b) somebody set the CHECKPOINT_CAUSE_XLOG flag

* since the last checkpoint start. Note in particular that this

* implementation will not generate warnings caused by

* CheckPointTimeout < CheckPointWarning.

*/

if (!do_restartpoint &&

(flags & CHECKPOINT_CAUSE_XLOG) &&

elapsed_secs < CheckPointWarning)

ereport(LOG,

(errmsg_plural("checkpoints are occurring too frequently (%d second apart)",

"checkpoints are occurring too frequently (%d seconds apart)",

elapsed_secs,

elapsed_secs),

errhint("Consider increasing the configuration parameter \"checkpoint_segments\".")));

/*

* Initialize checkpointer-private variables used during

* checkpoint

*/

ckpt_active = true;

if (!do_restartpoint)

ckpt_start_recptr = GetInsertRecPtr();

ckpt_start_time = now;

ckpt_cached_elapsed = 0;

/*

* Do the checkpoint.

*/

if (!do_restartpoint)

{

CreateCheckPoint(flags);

ckpt_performed = true;

}

else

ckpt_performed = CreateRestartPoint(flags);

/*

* After any checkpoint, close all smgr files. This is so we

* won't hang onto smgr references to deleted files indefinitely.

*/

smgrcloseall();

/*

* Indicate checkpoint completion to any waiting backends.

*/

SpinLockAcquire(&cps->ckpt_lck);

cps->ckpt_done = cps->ckpt_started;

SpinLockRelease(&cps->ckpt_lck);

if (ckpt_performed)

{

/*

* Note we record the checkpoint start time not end time as

* last_checkpoint_time. This is so that time-driven

* checkpoints happen at a predictable spacing.

*/

last_checkpoint_time = now;

}

else

{

/*

* We were not able to perform the restartpoint (checkpoints

* throw an ERROR in case of error). Most likely because we

* have not received any new checkpoint WAL records since the

* last restartpoint. Try again in 15 s.

*/

last_checkpoint_time = now - CheckPointTimeout + 15;

}

ckpt_active = false;

}

/* Check for archive_timeout and switch xlog files if necessary. */

CheckArchiveTimeout();

/*

* Send off activity statistics to the stats collector. (The reason

* why we re-use bgwriter-related code for this is that the bgwriter

* and checkpointer used to be just one process. It's probably not

* worth the trouble to split the stats support into two independent

* stats message types.)

*/

pgstat_send_bgwriter();

/*

* Sleep until we are signaled or it's time for another checkpoint or

* xlog file switch.

*/

now = (pg_time_t) time(NULL);

elapsed_secs = now - last_checkpoint_time;

if (elapsed_secs >= CheckPointTimeout)

continue; /* no sleep for us ... */

cur_timeout = CheckPointTimeout - elapsed_secs;

if (XLogArchiveTimeout > 0 && !RecoveryInProgress())

{

elapsed_secs = now - last_xlog_switch_time;

if (elapsed_secs >= XLogArchiveTimeout)

continue; /* no sleep for us ... */

cur_timeout = Min(cur_timeout, XLogArchiveTimeout - elapsed_secs);

}

rc = WaitLatch(&MyProc->procLatch,

WL_LATCH_SET | WL_TIMEOUT | WL_POSTMASTER_DEATH,

cur_timeout * 1000L /* convert to ms */ );

/*

* Emergency bailout if postmaster has died. This is to avoid the

* necessity for manual cleanup of all postmaster children.

*/

if (rc & WL_POSTMASTER_DEATH)

exit(1);

}

}
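
The sleep computation at the end of the loop above picks the shorter of two deadlines: the next time-driven checkpoint and, when archiving timeouts are enabled, the next forced xlog switch. A rough standalone sketch is shown below. `next_sleep_secs` is a hypothetical helper, times are plain `long` seconds instead of `pg_time_t`, and the `RecoveryInProgress()` condition is deliberately left out.

```c
#include <assert.h>

/*
 * Return how many seconds to sleep before the next time-driven event,
 * or 0 if an event is already due and the caller should not sleep.
 *
 * archive_timeout <= 0 disables the xlog-switch deadline.
 */
static int
next_sleep_secs(long now, long last_checkpoint, int checkpoint_timeout,
                long last_switch, int archive_timeout)
{
    long elapsed;
    long timeout;

    assert(checkpoint_timeout > 0);

    elapsed = now - last_checkpoint;
    if (elapsed >= checkpoint_timeout)
        return 0;               /* checkpoint is due now */
    timeout = checkpoint_timeout - elapsed;

    if (archive_timeout > 0)
    {
        elapsed = now - last_switch;
        if (elapsed >= archive_timeout)
            return 0;           /* xlog switch is due now */
        if (archive_timeout - elapsed < timeout)
            timeout = archive_timeout - elapsed;
    }

    return (int) timeout;
}
```

The same arithmetic explains the retry after a skipped restartpoint: setting `last_checkpoint_time` to `now - CheckPointTimeout + 15` leaves exactly 15 seconds until the timeout check fires again.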

| void CheckpointerShmemInit | ( | void | ) |

Definition at line 919 of file checkpointer.c.

References CheckpointerShmemSize(), CheckpointerShmemStruct::ckpt_lck, CheckpointerShmemStruct::max_requests, MemSet, NBuffers, ShmemInitStruct(), and SpinLockInit.

Referenced by CreateSharedMemoryAndSemaphores().

{

Size size = CheckpointerShmemSize();

bool found;

CheckpointerShmem = (CheckpointerShmemStruct *)

ShmemInitStruct("Checkpointer Data",

size,

&found);

if (!found)

{

/*

* First time through, so initialize. Note that we zero the whole

* requests array; this is so that CompactCheckpointerRequestQueue

* can assume that any pad bytes in the request structs are zeroes.

*/

MemSet(CheckpointerShmem, 0, size);

SpinLockInit(&CheckpointerShmem->ckpt_lck);

CheckpointerShmem->max_requests = NBuffers;

}

}

| Size CheckpointerShmemSize | ( | void | ) |

Definition at line 900 of file checkpointer.c.

References add_size(), mul_size(), NBuffers, and offsetof.

Referenced by CheckpointerShmemInit(), and CreateSharedMemoryAndSemaphores().

{

Size size;

/*

* Currently, the size of the requests[] array is arbitrarily set equal to

* NBuffers. This may prove too large or small ...

*/

size = offsetof(CheckpointerShmemStruct, requests);

size = add_size(size, mul_size(NBuffers, sizeof(CheckpointerRequest)));

return size;

}
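
The size calculation above is the usual "header plus trailing array" idiom: take the offset of the array member, then add the element size times the element count, with overflow checks on both steps. The following sketch shows the idiom in plain C. `SketchRequest`, `SketchShmem`, `checked_add`, `checked_mul`, and `sketch_shmem_size` are hypothetical stand-ins; in particular, the helpers here report overflow through a return value, which is an assumption made for the sketch and not a description of `add_size()` or `mul_size()`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct
{
    int rnode;
    int forknum;
    int segno;
} SketchRequest;

typedef struct
{
    int           num_requests;
    int           max_requests;
    SketchRequest requests[];   /* flexible array member */
} SketchShmem;

/* Multiply two sizes; return false instead of wrapping around. */
static bool
checked_mul(size_t a, size_t b, size_t *result)
{
    if (a != 0 && b > SIZE_MAX / a)
        return false;
    *result = a * b;
    return true;
}

/* Add two sizes; return false instead of wrapping around. */
static bool
checked_add(size_t a, size_t b, size_t *result)
{
    if (b > SIZE_MAX - a)
        return false;
    *result = a + b;
    return true;
}

/* Compute the bytes needed for a header plus nrequests array slots. */
static bool
sketch_shmem_size(size_t nrequests, size_t *size)
{
    size_t array_bytes;

    assert(size != NULL);

    if (!checked_mul(nrequests, sizeof(SketchRequest), &array_bytes))
        return false;
    return checked_add(offsetof(SketchShmem, requests), array_bytes, size);
}
```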

| void CheckpointWriteDelay | ( | int flags, double progress | ) |

Definition at line 677 of file checkpointer.c.

References AbsorbFsyncRequests(), AmCheckpointerProcess, CheckArchiveTimeout(), CHECKPOINT_IMMEDIATE, got_SIGHUP, ImmediateCheckpointRequested(), IsCheckpointOnSchedule(), pg_usleep(), PGC_SIGHUP, pgstat_send_bgwriter(), ProcessConfigFile(), shutdown_requested, and UpdateSharedMemoryConfig().

Referenced by BufferSync().

{

static int absorb_counter = WRITES_PER_ABSORB;

/* Do nothing if checkpoint is being executed by non-checkpointer process */

if (!AmCheckpointerProcess())

return;

/*

* Perform the usual duties and take a nap, unless we're behind schedule,

* in which case we just try to catch up as quickly as possible.

*/

if (!(flags & CHECKPOINT_IMMEDIATE) &&

!shutdown_requested &&

!ImmediateCheckpointRequested() &&

IsCheckpointOnSchedule(progress))

{

if (got_SIGHUP)

{

got_SIGHUP = false;

ProcessConfigFile(PGC_SIGHUP);

/* update shmem copies of config variables */

UpdateSharedMemoryConfig();

}

AbsorbFsyncRequests();

absorb_counter = WRITES_PER_ABSORB;

CheckArchiveTimeout();

/*

* Report interim activity statistics to the stats collector.

*/

pgstat_send_bgwriter();

/*

* This sleep used to be connected to bgwriter_delay, typically 200ms.

* That resulted in more frequent wakeups if not much work to do.

* Checkpointer and bgwriter are no longer related so take the Big

* Sleep.

*/

pg_usleep(100000L);

}

else if (--absorb_counter <= 0)

{

/*

* Absorb pending fsync requests after each WRITES_PER_ABSORB write

* operations even when we don't sleep, to prevent overflow of the

* fsync request queue.

*/

AbsorbFsyncRequests();

absorb_counter = WRITES_PER_ABSORB;

}

}
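
The countdown in the `else if` branch above guarantees that fsync requests are absorbed at least once every `WRITES_PER_ABSORB` calls, even when the checkpoint is behind schedule and never naps. A reduced sketch of just that counter logic is given below. All names are hypothetical, the counter limit is an arbitrary small value chosen for the example, and the nap and statistics reporting are omitted.

```c
#include <assert.h>
#include <stdbool.h>

#define SKETCH_WRITES_PER_ABSORB 4  /* arbitrary small value for the sketch */

static int sketch_absorb_counter = SKETCH_WRITES_PER_ABSORB;
static int sketch_absorb_calls = 0;

/* Stand-in for AbsorbFsyncRequests(): only counts how often it runs. */
static void
sketch_absorb(void)
{
    sketch_absorb_calls++;
}

/*
 * Called once per buffer write. When on schedule, absorb immediately
 * (the real code would also nap here). When behind schedule, absorb only
 * on every SKETCH_WRITES_PER_ABSORB-th call.
 */
static void
sketch_write_delay(bool on_schedule)
{
    assert(sketch_absorb_counter > 0);

    if (on_schedule)
    {
        sketch_absorb();
        sketch_absorb_counter = SKETCH_WRITES_PER_ABSORB;
    }
    else if (--sketch_absorb_counter <= 0)
    {
        sketch_absorb();
        sketch_absorb_counter = SKETCH_WRITES_PER_ABSORB;
    }
}
```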

| bool FirstCallSinceLastCheckpoint | ( | void | ) |

Definition at line 1372 of file checkpointer.c.

References CheckpointerShmemStruct::ckpt_done, CheckpointerShmemStruct::ckpt_lck, SpinLockAcquire, and SpinLockRelease.

Referenced by BackgroundWriterMain().

{

/* use volatile pointer to prevent code rearrangement */

volatile CheckpointerShmemStruct *cps = CheckpointerShmem;

static int ckpt_done = 0;

int new_done;

bool FirstCall = false;

SpinLockAcquire(&cps->ckpt_lck);

new_done = cps->ckpt_done;

SpinLockRelease(&cps->ckpt_lck);

if (new_done != ckpt_done)

FirstCall = true;

ckpt_done = new_done;

return FirstCall;

}
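
The function above detects a completed checkpoint by remembering the last counter value it saw in a function-local `static` variable. The same change-detection idiom, stripped of the spinlock and shared memory, might look like the sketch below; `changed_since_last_call` is a hypothetical name.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/*
 * Return true if *shared_counter differs from the value observed on the
 * previous call. The remembered value starts at 0, so the first call
 * reports a change only if the counter has already moved.
 */
static bool
changed_since_last_call(const int *shared_counter)
{
    static int last_seen = 0;
    int        current;
    bool       changed;

    assert(shared_counter != NULL);

    current = *shared_counter;
    changed = (current != last_seen);
    last_seen = current;

    return changed;
}
```

Because the remembered value is private to the calling process, several counter increments between two calls still yield a single `true`.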

| bool ForwardFsyncRequest | ( | RelFileNode rnode, ForkNumber forknum, BlockNumber segno | ) |

Definition at line 1118 of file checkpointer.c.

References AmBackgroundWriterProcess, AmCheckpointerProcess, CheckpointerShmemStruct::checkpointer_pid, CheckpointerCommLock, PROC_HDR::checkpointerLatch, CompactCheckpointerRequestQueue(), elog, ERROR, CheckpointerRequest::forknum, IsUnderPostmaster, LW_EXCLUSIVE, LWLockAcquire(), LWLockRelease(), CheckpointerShmemStruct::max_requests, CheckpointerShmemStruct::num_backend_fsync, CheckpointerShmemStruct::num_backend_writes, CheckpointerShmemStruct::num_requests, ProcGlobal, CheckpointerShmemStruct::requests, CheckpointerRequest::rnode, CheckpointerRequest::segno, and SetLatch().

Referenced by ForgetDatabaseFsyncRequests(), ForgetRelationFsyncRequests(), register_dirty_segment(), and register_unlink().

{

CheckpointerRequest *request;

bool too_full;

if (!IsUnderPostmaster)

return false; /* probably shouldn't even get here */

if (AmCheckpointerProcess())

elog(ERROR, "ForwardFsyncRequest must not be called in checkpointer");

LWLockAcquire(CheckpointerCommLock, LW_EXCLUSIVE);

/* Count all backend writes regardless of if they fit in the queue */

if (!AmBackgroundWriterProcess())

CheckpointerShmem->num_backend_writes++;

/*

* If the checkpointer isn't running or the request queue is full, the

* backend will have to perform its own fsync request. But before forcing

* that to happen, we can try to compact the request queue.

*/

if (CheckpointerShmem->checkpointer_pid == 0 ||

(CheckpointerShmem->num_requests >= CheckpointerShmem->max_requests &&

!CompactCheckpointerRequestQueue()))

{

/*

* Count the subset of writes where backends have to do their own

* fsync

*/

if (!AmBackgroundWriterProcess())

CheckpointerShmem->num_backend_fsync++;

LWLockRelease(CheckpointerCommLock);

return false;

}

/* OK, insert request */

request = &CheckpointerShmem->requests[CheckpointerShmem->num_requests++];

request->rnode = rnode;

request->forknum = forknum;

request->segno = segno;

/* If queue is more than half full, nudge the checkpointer to empty it */

too_full = (CheckpointerShmem->num_requests >=

CheckpointerShmem->max_requests / 2);

LWLockRelease(CheckpointerCommLock);

/* ... but not till after we release the lock */

if (too_full && ProcGlobal->checkpointerLatch)

SetLatch(ProcGlobal->checkpointerLatch);

return true;

}
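
The queueing policy above has three outcomes: the request is rejected because the queue is full, it is queued quietly, or it is queued and the consumer is nudged because the queue is at least half full. A simplified sketch of that decision follows. It leaves out locking, queue compaction, and the statistics counters, and `SketchQueue`, `EnqueueResult`, and `sketch_enqueue` are hypothetical names.

```c
#include <assert.h>

#define SKETCH_QUEUE_SLOTS 8    /* hypothetical storage size */

typedef struct
{
    int num_requests;
    int max_requests;           /* must not exceed SKETCH_QUEUE_SLOTS */
    int requests[SKETCH_QUEUE_SLOTS];
} SketchQueue;

typedef enum
{
    ENQUEUE_REJECTED,           /* queue full: caller must do the work itself */
    ENQUEUE_OK,                 /* queued, no wakeup needed */
    ENQUEUE_OK_NUDGE            /* queued, consumer should be woken up */
} EnqueueResult;

static EnqueueResult
sketch_enqueue(SketchQueue *q, int request)
{
    assert(q->max_requests <= SKETCH_QUEUE_SLOTS);

    if (q->num_requests >= q->max_requests)
        return ENQUEUE_REJECTED;

    q->requests[q->num_requests++] = request;

    /* Nudge the consumer once the queue is at least half full. */
    if (q->num_requests >= q->max_requests / 2)
        return ENQUEUE_OK_NUDGE;

    return ENQUEUE_OK;
}
```

In the real function the wakeup is sent only after the lock has been released, which is why the "too full" decision is captured in a local variable first.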

| void RequestCheckpoint | ( | int flags | ) |

Definition at line 960 of file checkpointer.c.

References CHECK_FOR_INTERRUPTS, CHECKPOINT_IMMEDIATE, CHECKPOINT_WAIT, CheckpointerShmemStruct::checkpointer_pid, CheckpointerShmemStruct::ckpt_done, CheckpointerShmemStruct::ckpt_failed, CheckpointerShmemStruct::ckpt_flags, CheckpointerShmemStruct::ckpt_lck, CheckpointerShmemStruct::ckpt_started, CreateCheckPoint(), elog, ereport, errhint(), errmsg(), ERROR, IsPostmasterEnvironment, LOG, pg_usleep(), smgrcloseall(), SpinLockAcquire, and SpinLockRelease.

Referenced by createdb(), do_pg_start_backup(), dropdb(), DropTableSpace(), movedb(), standard_ProcessUtility(), StartupXLOG(), XLogPageRead(), and XLogWrite().

{

/* use volatile pointer to prevent code rearrangement */

volatile CheckpointerShmemStruct *cps = CheckpointerShmem;

int ntries;

int old_failed,

old_started;

/*

* If in a standalone backend, just do it ourselves.

*/

if (!IsPostmasterEnvironment)

{

/*

* There's no point in doing slow checkpoints in a standalone backend,

* because there's no other backends the checkpoint could disrupt.

*/

CreateCheckPoint(flags | CHECKPOINT_IMMEDIATE);

/*

* After any checkpoint, close all smgr files. This is so we won't

* hang onto smgr references to deleted files indefinitely.

*/

smgrcloseall();

return;

}

/*

* Atomically set the request flags, and take a snapshot of the counters.

* When we see ckpt_started > old_started, we know the flags we set here

* have been seen by checkpointer.

*

* Note that we OR the flags with any existing flags, to avoid overriding

* a "stronger" request by another backend. The flag senses must be

* chosen to make this work!

*/

SpinLockAcquire(&cps->ckpt_lck);

old_failed = cps->ckpt_failed;

old_started = cps->ckpt_started;

cps->ckpt_flags |= flags;

SpinLockRelease(&cps->ckpt_lck);

/*

* Send signal to request checkpoint. It's possible that the checkpointer

* hasn't started yet, or is in process of restarting, so we will retry a

* few times if needed. Also, if not told to wait for the checkpoint to

* occur, we consider failure to send the signal to be nonfatal and merely

* LOG it.

*/

for (ntries = 0;; ntries++)

{

if (CheckpointerShmem->checkpointer_pid == 0)

{

if (ntries >= 20) /* max wait 2.0 sec */

{

elog((flags & CHECKPOINT_WAIT) ? ERROR : LOG,

"could not request checkpoint because checkpointer not running");

break;

}

}

else if (kill(CheckpointerShmem->checkpointer_pid, SIGINT) != 0)

{

if (ntries >= 20) /* max wait 2.0 sec */

{

elog((flags & CHECKPOINT_WAIT) ? ERROR : LOG,

"could not signal for checkpoint: %m");

break;

}

}

else

break; /* signal sent successfully */

CHECK_FOR_INTERRUPTS();

pg_usleep(100000L); /* wait 0.1 sec, then retry */

}

/*

* If requested, wait for completion. We detect completion according to

* the algorithm given above.

*/

if (flags & CHECKPOINT_WAIT)

{

int new_started,

new_failed;

/* Wait for a new checkpoint to start. */

for (;;)

{

SpinLockAcquire(&cps->ckpt_lck);

new_started = cps->ckpt_started;

SpinLockRelease(&cps->ckpt_lck);

if (new_started != old_started)

break;

CHECK_FOR_INTERRUPTS();

pg_usleep(100000L);

}

/*

* We are waiting for ckpt_done >= new_started, in a modulo sense.

*/

for (;;)

{

int new_done;

SpinLockAcquire(&cps->ckpt_lck);

new_done = cps->ckpt_done;

new_failed = cps->ckpt_failed;

SpinLockRelease(&cps->ckpt_lck);

if (new_done - new_started >= 0)

break;

CHECK_FOR_INTERRUPTS();

pg_usleep(100000L);

}

if (new_failed != old_failed)

ereport(ERROR,

(errmsg("checkpoint request failed"),

errhint("Consult recent messages in the server log for details.")));

}

}
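
The wait loop above compares counters "in a modulo sense" with `new_done - new_started >= 0`, so the test keeps working after the counters wrap around. The source does this with plain `int` subtraction. The sketch below swaps in unsigned 32-bit arithmetic, which makes the wraparound well defined in standard C; that substitution and the name `counter_reached` are choices made for this example, not part of the original code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/*
 * Return true if "done" has reached or passed "target", treating both as
 * positions on a 32-bit wrapping counter. The unsigned difference is
 * reinterpreted as signed (two's complement on mainstream compilers), so
 * the result is correct as long as the two values are less than 2^31 apart.
 */
static bool
counter_reached(uint32_t done, uint32_t target)
{
    return (int32_t) (uint32_t) (done - target) >= 0;
}
```

For example, `counter_reached(2, UINT32_MAX)` is true because the counter has wrapped past the target, while `counter_reached(UINT32_MAX, 2)` is false.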

| int BgWriterDelay |

Definition at line 65 of file bgwriter.c.

Referenced by BackgroundWriterMain(), and BgBufferSync().

| double CheckPointCompletionTarget |

Definition at line 146 of file checkpointer.c.

Referenced by IsCheckpointOnSchedule().

| int CheckPointTimeout |

Definition at line 144 of file checkpointer.c.

Referenced by CheckpointerMain(), and IsCheckpointOnSchedule().

| int CheckPointWarning |

Definition at line 145 of file checkpointer.c.

Referenced by CheckpointerMain().
