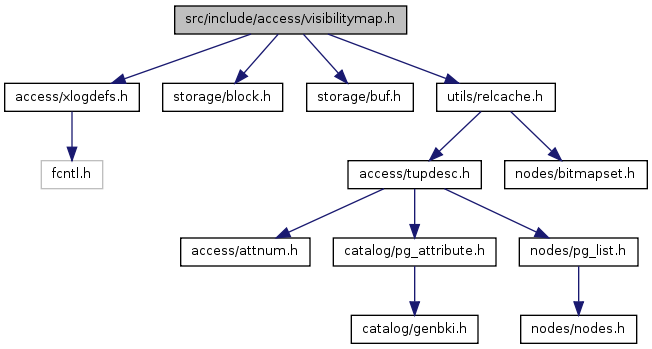

#include "access/xlogdefs.h"#include "storage/block.h"#include "storage/buf.h"#include "utils/relcache.h"

Go to the source code of this file.

Functions | |

| void | visibilitymap_clear (Relation rel, BlockNumber heapBlk, Buffer vmbuf) |

| void | visibilitymap_pin (Relation rel, BlockNumber heapBlk, Buffer *vmbuf) |

| bool | visibilitymap_pin_ok (BlockNumber heapBlk, Buffer vmbuf) |

| void | visibilitymap_set (Relation rel, BlockNumber heapBlk, Buffer heapBuf, XLogRecPtr recptr, Buffer vmBuf, TransactionId cutoff_xid) |

| bool | visibilitymap_test (Relation rel, BlockNumber heapBlk, Buffer *vmbuf) |

| BlockNumber | visibilitymap_count (Relation rel) |

| void | visibilitymap_truncate (Relation rel, BlockNumber nheapblocks) |

| void visibilitymap_clear | ( | Relation | rel, | |

| BlockNumber | heapBlk, | |||

| Buffer | vmbuf | |||

| ) |

Definition at line 150 of file visibilitymap.c.

References BUFFER_LOCK_EXCLUSIVE, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferGetPage, BufferIsValid, DEBUG1, elog, ERROR, HEAPBLK_TO_MAPBIT, HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, LockBuffer(), MarkBufferDirty(), PageGetContents, and RelationGetRelationName.

Referenced by heap_delete(), heap_insert(), heap_multi_insert(), heap_update(), heap_xlog_delete(), heap_xlog_insert(), heap_xlog_multi_insert(), heap_xlog_update(), and lazy_scan_heap().

{

BlockNumber mapBlock = HEAPBLK_TO_MAPBLOCK(heapBlk);

int mapByte = HEAPBLK_TO_MAPBYTE(heapBlk);

int mapBit = HEAPBLK_TO_MAPBIT(heapBlk);

uint8 mask = 1 << mapBit;

char *map;

#ifdef TRACE_VISIBILITYMAP

elog(DEBUG1, "vm_clear %s %d", RelationGetRelationName(rel), heapBlk);

#endif

if (!BufferIsValid(buf) || BufferGetBlockNumber(buf) != mapBlock)

elog(ERROR, "wrong buffer passed to visibilitymap_clear");

LockBuffer(buf, BUFFER_LOCK_EXCLUSIVE);

map = PageGetContents(BufferGetPage(buf));

if (map[mapByte] & mask)

{

map[mapByte] &= ~mask;

MarkBufferDirty(buf);

}

LockBuffer(buf, BUFFER_LOCK_UNLOCK);

}

| BlockNumber visibilitymap_count | ( | Relation | rel | ) |

Definition at line 379 of file visibilitymap.c.

References BufferGetPage, BufferIsValid, i, number_of_ones, PageGetContents, ReleaseBuffer(), and vm_readbuf().

Referenced by do_analyze_rel(), index_update_stats(), and lazy_vacuum_rel().

{

BlockNumber result = 0;

BlockNumber mapBlock;

for (mapBlock = 0;; mapBlock++)

{

Buffer mapBuffer;

unsigned char *map;

int i;

/*

* Read till we fall off the end of the map. We assume that any extra

* bytes in the last page are zeroed, so we don't bother excluding

* them from the count.

*/

mapBuffer = vm_readbuf(rel, mapBlock, false);

if (!BufferIsValid(mapBuffer))

break;

/*

* We choose not to lock the page, since the result is going to be

* immediately stale anyway if anyone is concurrently setting or

* clearing bits, and we only really need an approximate value.

*/

map = (unsigned char *) PageGetContents(BufferGetPage(mapBuffer));

for (i = 0; i < MAPSIZE; i++)

{

result += number_of_ones[map[i]];

}

ReleaseBuffer(mapBuffer);

}

return result;

}

| void visibilitymap_pin | ( | Relation | rel, | |

| BlockNumber | heapBlk, | |||

| Buffer * | vmbuf | |||

| ) |

Definition at line 195 of file visibilitymap.c.

References BufferGetBlockNumber(), BufferIsValid, HEAPBLK_TO_MAPBLOCK, ReleaseBuffer(), and vm_readbuf().

Referenced by GetVisibilityMapPins(), heap_delete(), heap_update(), heap_xlog_delete(), heap_xlog_insert(), heap_xlog_multi_insert(), heap_xlog_update(), heap_xlog_visible(), lazy_scan_heap(), and RelationGetBufferForTuple().

{

BlockNumber mapBlock = HEAPBLK_TO_MAPBLOCK(heapBlk);

/* Reuse the old pinned buffer if possible */

if (BufferIsValid(*buf))

{

if (BufferGetBlockNumber(*buf) == mapBlock)

return;

ReleaseBuffer(*buf);

}

*buf = vm_readbuf(rel, mapBlock, true);

}

| bool visibilitymap_pin_ok | ( | BlockNumber | heapBlk, | |

| Buffer | vmbuf | |||

| ) |

Definition at line 219 of file visibilitymap.c.

References BufferGetBlockNumber(), BufferIsValid, and HEAPBLK_TO_MAPBLOCK.

Referenced by GetVisibilityMapPins().

{

BlockNumber mapBlock = HEAPBLK_TO_MAPBLOCK(heapBlk);

return BufferIsValid(buf) && BufferGetBlockNumber(buf) == mapBlock;

}

| void visibilitymap_set | ( | Relation | rel, | |

| BlockNumber | heapBlk, | |||

| Buffer | heapBuf, | |||

| XLogRecPtr | recptr, | |||

| Buffer | vmBuf, | |||

| TransactionId | cutoff_xid | |||

| ) |

Definition at line 246 of file visibilitymap.c.

References Assert, BUFFER_LOCK_EXCLUSIVE, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferGetPage, BufferIsValid, DataChecksumsEnabled(), DEBUG1, elog, END_CRIT_SECTION, ERROR, HEAPBLK_TO_MAPBIT, HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, InRecovery, LockBuffer(), log_heap_visible(), MarkBufferDirty(), PageGetContents, PageIsAllVisible, PageSetLSN, RelationData::rd_node, RelationGetRelationName, RelationNeedsWAL, START_CRIT_SECTION, and XLogRecPtrIsInvalid.

Referenced by heap_xlog_visible(), lazy_scan_heap(), and lazy_vacuum_page().

{

BlockNumber mapBlock = HEAPBLK_TO_MAPBLOCK(heapBlk);

uint32 mapByte = HEAPBLK_TO_MAPBYTE(heapBlk);

uint8 mapBit = HEAPBLK_TO_MAPBIT(heapBlk);

Page page;

char *map;

#ifdef TRACE_VISIBILITYMAP

elog(DEBUG1, "vm_set %s %d", RelationGetRelationName(rel), heapBlk);

#endif

Assert(InRecovery || XLogRecPtrIsInvalid(recptr));

Assert(InRecovery || BufferIsValid(heapBuf));

/* Check that we have the right heap page pinned, if present */

if (BufferIsValid(heapBuf) && BufferGetBlockNumber(heapBuf) != heapBlk)

elog(ERROR, "wrong heap buffer passed to visibilitymap_set");

/* Check that we have the right VM page pinned */

if (!BufferIsValid(vmBuf) || BufferGetBlockNumber(vmBuf) != mapBlock)

elog(ERROR, "wrong VM buffer passed to visibilitymap_set");

page = BufferGetPage(vmBuf);

map = PageGetContents(page);

LockBuffer(vmBuf, BUFFER_LOCK_EXCLUSIVE);

if (!(map[mapByte] & (1 << mapBit)))

{

START_CRIT_SECTION();

map[mapByte] |= (1 << mapBit);

MarkBufferDirty(vmBuf);

if (RelationNeedsWAL(rel))

{

if (XLogRecPtrIsInvalid(recptr))

{

Assert(!InRecovery);

recptr = log_heap_visible(rel->rd_node, heapBuf, vmBuf,

cutoff_xid);

/*

* If data checksums are enabled, we need to protect the heap

* page from being torn.

*/

if (DataChecksumsEnabled())

{

Page heapPage = BufferGetPage(heapBuf);

/* caller is expected to set PD_ALL_VISIBLE first */

Assert(PageIsAllVisible(heapPage));

PageSetLSN(heapPage, recptr);

}

}

PageSetLSN(page, recptr);

}

END_CRIT_SECTION();

}

LockBuffer(vmBuf, BUFFER_LOCK_UNLOCK);

}

| bool visibilitymap_test | ( | Relation | rel, | |

| BlockNumber | heapBlk, | |||

| Buffer * | vmbuf | |||

| ) |

Definition at line 330 of file visibilitymap.c.

References BufferGetBlockNumber(), BufferGetPage, BufferIsValid, DEBUG1, elog, HEAPBLK_TO_MAPBIT, HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, PageGetContents, RelationGetRelationName, ReleaseBuffer(), and vm_readbuf().

Referenced by IndexOnlyNext(), lazy_scan_heap(), and lazy_vacuum_page().

{

BlockNumber mapBlock = HEAPBLK_TO_MAPBLOCK(heapBlk);

uint32 mapByte = HEAPBLK_TO_MAPBYTE(heapBlk);

uint8 mapBit = HEAPBLK_TO_MAPBIT(heapBlk);

bool result;

char *map;

#ifdef TRACE_VISIBILITYMAP

elog(DEBUG1, "vm_test %s %d", RelationGetRelationName(rel), heapBlk);

#endif

/* Reuse the old pinned buffer if possible */

if (BufferIsValid(*buf))

{

if (BufferGetBlockNumber(*buf) != mapBlock)

{

ReleaseBuffer(*buf);

*buf = InvalidBuffer;

}

}

if (!BufferIsValid(*buf))

{

*buf = vm_readbuf(rel, mapBlock, false);

if (!BufferIsValid(*buf))

return false;

}

map = PageGetContents(BufferGetPage(*buf));

/*

* A single-bit read is atomic. There could be memory-ordering effects

* here, but for performance reasons we make it the caller's job to worry

* about that.

*/

result = (map[mapByte] & (1 << mapBit)) ? true : false;

return result;

}

| void visibilitymap_truncate | ( | Relation | rel, | |

| BlockNumber | nheapblocks | |||

| ) |

Definition at line 427 of file visibilitymap.c.

References BUFFER_LOCK_EXCLUSIVE, BufferGetPage, BufferIsValid, DEBUG1, elog, HEAPBLK_TO_MAPBIT, HEAPBLK_TO_MAPBLOCK, HEAPBLK_TO_MAPBYTE, LockBuffer(), MAPSIZE, MarkBufferDirty(), MemSet, PageGetContents, RelationData::rd_smgr, RelationGetRelationName, RelationOpenSmgr, SMgrRelationData::smgr_vm_nblocks, smgrexists(), smgrnblocks(), smgrtruncate(), UnlockReleaseBuffer(), VISIBILITYMAP_FORKNUM, and vm_readbuf().

Referenced by RelationTruncate(), and smgr_redo().

{

BlockNumber newnblocks;

/* last remaining block, byte, and bit */

BlockNumber truncBlock = HEAPBLK_TO_MAPBLOCK(nheapblocks);

uint32 truncByte = HEAPBLK_TO_MAPBYTE(nheapblocks);

uint8 truncBit = HEAPBLK_TO_MAPBIT(nheapblocks);

#ifdef TRACE_VISIBILITYMAP

elog(DEBUG1, "vm_truncate %s %d", RelationGetRelationName(rel), nheapblocks);

#endif

RelationOpenSmgr(rel);

/*

* If no visibility map has been created yet for this relation, there's

* nothing to truncate.

*/

if (!smgrexists(rel->rd_smgr, VISIBILITYMAP_FORKNUM))

return;

/*

* Unless the new size is exactly at a visibility map page boundary, the

* tail bits in the last remaining map page, representing truncated heap

* blocks, need to be cleared. This is not only tidy, but also necessary

* because we don't get a chance to clear the bits if the heap is extended

* again.

*/

if (truncByte != 0 || truncBit != 0)

{

Buffer mapBuffer;

Page page;

char *map;

newnblocks = truncBlock + 1;

mapBuffer = vm_readbuf(rel, truncBlock, false);

if (!BufferIsValid(mapBuffer))

{

/* nothing to do, the file was already smaller */

return;

}

page = BufferGetPage(mapBuffer);

map = PageGetContents(page);

LockBuffer(mapBuffer, BUFFER_LOCK_EXCLUSIVE);

/* Clear out the unwanted bytes. */

MemSet(&map[truncByte + 1], 0, MAPSIZE - (truncByte + 1));

/*

* Mask out the unwanted bits of the last remaining byte.

*

* ((1 << 0) - 1) = 00000000 ((1 << 1) - 1) = 00000001 ... ((1 << 6) -

* 1) = 00111111 ((1 << 7) - 1) = 01111111

*/

map[truncByte] &= (1 << truncBit) - 1;

MarkBufferDirty(mapBuffer);

UnlockReleaseBuffer(mapBuffer);

}

else

newnblocks = truncBlock;

if (smgrnblocks(rel->rd_smgr, VISIBILITYMAP_FORKNUM) <= newnblocks)

{

/* nothing to do, the file was already smaller than requested size */

return;

}

/* Truncate the unused VM pages, and send smgr inval message */

smgrtruncate(rel->rd_smgr, VISIBILITYMAP_FORKNUM, newnblocks);

/*

* We might as well update the local smgr_vm_nblocks setting. smgrtruncate

* sent an smgr cache inval message, which will cause other backends to

* invalidate their copy of smgr_vm_nblocks, and this one too at the next

* command boundary. But this ensures it isn't outright wrong until then.

*/

if (rel->rd_smgr)

rel->rd_smgr->smgr_vm_nblocks = newnblocks;

}

1.7.1

1.7.1