To understand the possibilities OpenStack offers, it's best to start with basic architectures that are tried and true and have been tested in production environments. We offer two such examples, which differ mainly in the base operating system (Ubuntu and Red Hat Enterprise Linux) and the networking architecture. There are other differences between the two examples, but for each you will find the considerations behind the choices made, as well as a rationale for why they worked well in a given environment.

Because OpenStack is highly configurable, with many different back-ends and network configuration options, it is difficult to write documentation that covers all possible OpenStack deployments. Therefore, this guide defines example architectures to simplify the task of documenting, as well as to provide the scope for this guide. Both of the offered architecture examples are currently running in production and serving users.

Tip: As always, refer to the Glossary if you are unclear about any of the terminology mentioned in these architectures.

This particular example architecture has been upgraded from Grizzly to Havana and tested in production environments where many public IP addresses are available for assignment to multiple instances. You can find a second example architecture that uses OpenStack Networking (neutron) after this section. Each example offers high availability, meaning that if a particular node goes down, another node with the same configuration can take over the tasks so that service continues to be available.

The simplest architecture you can build upon for Compute has a single cloud controller and multiple compute nodes. The simplest architecture for Object Storage has five nodes: one for identifying users and proxying requests to the API, then four for storage itself to provide enough replication for eventual consistency. This example architecture does not dictate a particular number of nodes, but shows the thinking and considerations that went into choosing this architecture including the features offered.

| Component | Details |
|---|---|
| OpenStack release | Havana |
| Host operating system | Ubuntu 12.04 LTS or Red Hat Enterprise Linux 6.5 including derivatives such as CentOS and Scientific Linux |
| OpenStack package repository | Ubuntu Cloud Archive (https://wiki.ubuntu.com/ServerTeam/CloudArchive) or RDO (http://openstack.redhat.com/Frequently_Asked_Questions) * |
| Hypervisor | KVM |
| Database | MySQL* |
| Message queue | RabbitMQ for Ubuntu, Qpid for Red Hat Enterprise Linux and derivatives |
| Networking service | nova-network |
| Network manager | FlatDHCP |
| Single nova-network or multi-host? | multi-host* |
| Image Service (glance) back-end | file |
| Identity Service (keystone) driver | SQL |
| Block Storage Service (cinder) back-end | LVM/iSCSI |
| Live Migration back-end | shared storage using NFS * |
| Object storage | OpenStack Object Storage (swift) |

An asterisk (*) indicates when the example architecture deviates from the settings of a default installation. We'll offer explanations for those deviations next.

Note: The following features of OpenStack are supported by the example architecture documented in this guide, but are optional:

This example architecture has been selected based on the current default feature set of OpenStack Havana, with an emphasis on stability. We believe that many clouds that currently run OpenStack in production have made similar choices.

You must first choose the operating system that runs on all of the physical nodes. While OpenStack is supported on several distributions of Linux, we used Ubuntu 12.04 LTS (Long Term Support), which is used by the majority of the development community, has feature completeness compared with other distributions, and has clear future support plans.

We recommend that you do not use the default Ubuntu OpenStack install packages and instead use the Ubuntu Cloud Archive (https://wiki.ubuntu.com/ServerTeam/CloudArchive). The Cloud Archive is a package repository supported by Canonical that allows you to upgrade to future OpenStack releases while remaining on Ubuntu 12.04.

KVM as a hypervisor complements the choice of Ubuntu: the two are a matched pair in terms of support, and KVM garners a significant degree of attention from the OpenStack development community (including the authors, who mostly use KVM). It is also feature complete and free from licensing charges and restrictions.

MySQL follows a similar trend. Despite its recent change of ownership, this database is the most tested for use with OpenStack and is heavily documented. We deviate from the default database, SQLite, because SQLite is not an appropriate database for production usage.

We chose RabbitMQ over other AMQP-compatible options that are gaining support in OpenStack, such as ZeroMQ and Qpid, because of its ease of use and significant testing in production. It is also the only option that supports features such as Compute cells. We recommend clustering with RabbitMQ, because the message queue is an integral component of the system and clustering is built in and fairly simple to implement.

As discussed in previous chapters, there are several options for networking in OpenStack Compute. We recommend FlatDHCP in multi-host networking mode for high availability, running one nova-network daemon per OpenStack Compute host. This provides a robust mechanism for ensuring that network interruptions are isolated to individual compute hosts, and allows for the direct use of hardware network gateways.
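
The multi-host FlatDHCP recommendation can be sketched as a small `nova.conf` fragment. The following is a minimal sketch, not the authors' actual configuration: `network_manager` and `multi_host` are nova-network options, while the interface and bridge values (`eth0`, `eth1`, `br100`) and the helper name `render_nova_network_conf` are assumptions chosen only for illustration.

```python
import configparser
import io


def render_nova_network_conf(flat_interface="eth1",
                             public_interface="eth0",
                             bridge="br100"):
    """Render a nova.conf [DEFAULT] fragment for FlatDHCP in multi-host mode.

    The interface and bridge names are illustrative assumptions; adjust
    them to match the hardware on each compute node.
    """
    conf = configparser.ConfigParser()
    conf["DEFAULT"] = {
        # Use the FlatDHCP network manager.
        "network_manager": "nova.network.manager.FlatDHCPManager",
        # Run one nova-network daemon per compute host.
        "multi_host": "True",
        "flat_network_bridge": bridge,
        "flat_interface": flat_interface,
        "public_interface": public_interface,
    }
    buf = io.StringIO()
    conf.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    print(render_nova_network_conf())
```

In a multi-host deployment the same fragment would be applied on every compute node, because each node runs its own nova-network daemon.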

Live Migration is supported by way of shared storage, with NFS as the distributed file system.

Acknowledging that many small-scale deployments see running Object Storage just for the storage of virtual machine images as too costly, we opted for the file back-end in the OpenStack Image Service (glance). If your cloud will include Object Storage, you can easily add it as a back-end.

We chose the SQL back-end for Identity Service (keystone) over others, such as LDAP. This back-end is simple to install and is robust. The authors acknowledge that many installations want to bind with existing directory services, and advise a careful understanding of the array of options available (http://docs.openstack.org/havana/config-reference/content/ch_configuring-openstack-identity.html#configuring-keystone-for-ldap-backend).

Block Storage (cinder) is installed natively on external storage nodes and uses the LVM/iSCSI plugin. Most Block Storage Service plugins are tied to particular vendor products and implementations, limiting their use to consumers of those hardware platforms, but LVM/iSCSI is robust and stable on commodity hardware.

While the cloud can be run without the OpenStack Dashboard, we consider it to be indispensable, not just for user interaction with the cloud, but also as a tool for operators. Additionally, the dashboard's use of Django makes it a flexible framework for extension.

This example architecture does not use the OpenStack Network Service (neutron), because it does not yet support multi-host networking and our organizations (university, government) have access to a large range of publicly-accessible IPv4 addresses.

In a default OpenStack deployment, there is a single nova-network service that runs within the cloud (usually on the cloud controller) that provides services such as network address translation (NAT), DHCP, and DNS to the guest instances. If the single node that runs the nova-network service goes down, you cannot access your instances and the instances cannot access the Internet. The single node that runs the nova-network service can become a bottleneck if excessive network traffic comes in and goes out of the cloud.

Tip: Multi-host (http://docs.openstack.org/havana/install-guide/install/apt/content/nova-network.html) is a high-availability option for the network configuration where the nova-network service is run on every compute node instead of running on only a single node.
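
To make the difference between a single nova-network service and multi-host mode concrete, the sketch below models which instances lose network access when one node fails. It is a deliberately simplified model under stated assumptions: the node names, instance names, and the function `instances_losing_network` are hypothetical, and the model ignores everything except where nova-network runs.

```python
def instances_losing_network(placement, failed_node, multi_host,
                             network_node="controller"):
    """Return the instances that lose network access when failed_node fails.

    placement maps a compute node name to the instances running on it.
    network_node is the (assumed) node that runs the single nova-network
    service when multi_host is False.
    """
    if not multi_host and failed_node == network_node:
        # Single nova-network: every instance depends on that one node.
        return sorted(vm for vms in placement.values() for vm in vms)
    # Otherwise only the instances hosted on the failed node are affected.
    # In multi-host mode each compute node runs its own nova-network.
    return sorted(placement.get(failed_node, []))


placement = {
    "compute1": ["vm-a", "vm-b"],
    "compute2": ["vm-c"],
}

# Single nova-network on the controller: the whole cloud is cut off.
print(instances_losing_network(placement, "controller", multi_host=False))
# Multi-host: a controller failure leaves instance networking intact.
print(instances_losing_network(placement, "controller", multi_host=True))
# Multi-host: a compute node failure is isolated to its own instances.
print(instances_losing_network(placement, "compute1", multi_host=True))
```

The design point is that multi-host mode turns a cloud-wide single point of failure into a failure limited to one compute host.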

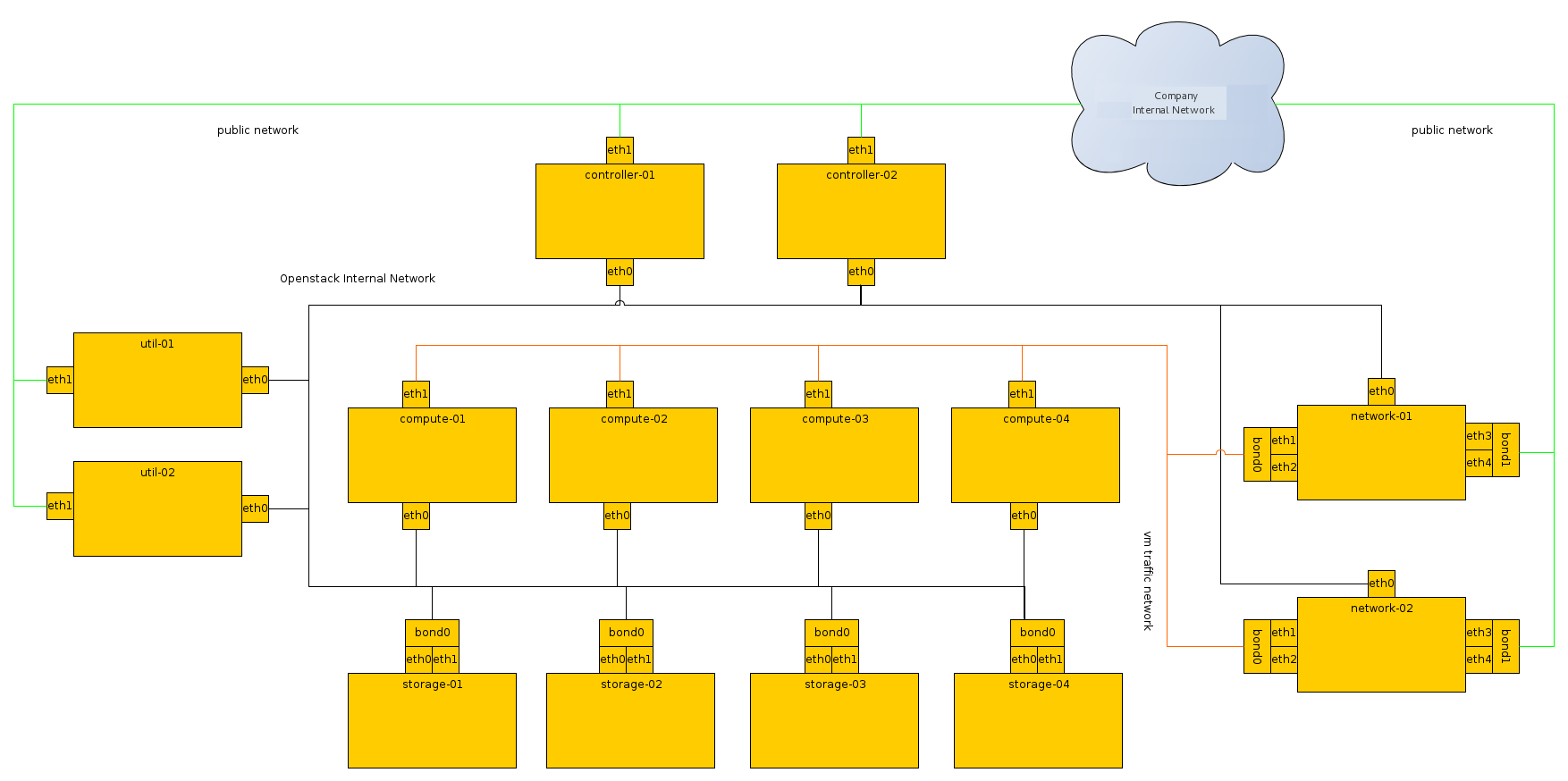

The reference architecture consists of multiple compute nodes, a cloud controller, an external NFS storage server for instance storage and an OpenStack Block Storage server for volume storage. A network time service (Network Time Protocol, NTP) synchronizes time on all the nodes. FlatDHCPManager in multi-host mode is used for the networking. A logical diagram for this example architecture shows which services are running on each node:

The cloud controller runs: the dashboard, the API services, the database (MySQL), a message queue server (RabbitMQ), the scheduler for choosing compute resources (nova-scheduler), Identity services (keystone, nova-consoleauth), Image services (glance-api, glance-registry), services for console access of guests, and Block Storage services including the scheduler for storage resources (cinder-api and cinder-scheduler).

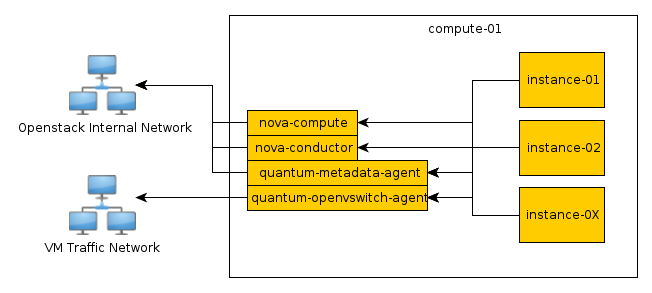

Compute nodes are where the computing resources are held, and in our example architecture they run the hypervisor (KVM), libvirt (the driver for the hypervisor, which enables live migration from node to node), nova-compute, nova-api-metadata (generally only used when running in multi-host mode; it retrieves instance-specific metadata), nova-vncproxy, and nova-network.

The network consists of two switches, one for the management or private traffic, and one that covers public access, including floating IPs. To support this, the cloud controller and the compute nodes have two network cards. The OpenStack Block Storage and NFS storage servers only need to access the private network and therefore only need one network card, but multiple cards running in a bonded configuration are recommended if possible. Floating IP access is direct to the internet, whereas flat IP access goes through a NAT. To envision the network traffic, use this diagram:

You can extend this reference architecture as follows:

Add additional cloud controllers (see Chapter 11, Maintenance, Failures, and Debugging).

Add an OpenStack Storage service (see the Object Storage chapter in the OpenStack Installation Guide for your distribution).

Add additional OpenStack Block Storage hosts (see Chapter 11, Maintenance, Failures, and Debugging).

This chapter provides an example architecture using OpenStack Networking, also known as the Neutron project, in a highly available environment.

A highly-available environment can be put into place if you require an environment that can scale horizontally, or want your cloud to continue to be operational in case of node failure. This example architecture has been written based on the current default feature set of OpenStack Havana, with an emphasis on high availability.

| Component | Details |
|---|---|
| OpenStack release | Havana |
| Host operating system | Red Hat Enterprise Linux 6.5 |
| OpenStack package repository | Red Hat Distributed OpenStack (RDO) (http://repos.fedorapeople.org/repos/openstack/openstack-havana/rdo-release-havana-7.noarch.rpm) |
| Hypervisor | KVM |
| Database | MySQL |
| Message queue | Qpid |
| Networking service | OpenStack Networking |
| Tenant Network Separation | VLAN |
| Image Service (glance) back-end | GlusterFS |
| Identity Service (keystone) driver | SQL |
| Block Storage Service (cinder) back-end | GlusterFS |

This example architecture has been selected based on the current default feature set of OpenStack Havana, with an emphasis on high availability. This architecture is currently being deployed in an internal Red Hat OpenStack cloud, and used to run hosted and shared services which by their nature must be highly available.

This architecture's components have been selected for the following reasons:

Red Hat Enterprise Linux - You must choose an operating system that can run on all of the physical nodes. This example architecture is based on Red Hat Enterprise Linux, which offers reliability, long-term support, certified testing, and hardening. Enterprise customers, now moving into OpenStack usage, typically require these advantages.

RDO - The Red Hat Distributed OpenStack package offers an easy way to download the most current OpenStack release that is built for the Red Hat Enterprise Linux platform.

KVM - KVM is the supported hypervisor of choice for Red Hat Enterprise Linux (and included in distribution). It is feature complete, and free from licensing charges and restrictions.

MySQL - MySQL is used as the database backend for all databases in the OpenStack environment. MySQL is the supported database of choice for Red Hat Enterprise Linux (and included in distribution); the database is open source, scalable, and handles memory well.

Qpid - Apache Qpid offers 100 percent compatibility with the Advanced Message Queuing Protocol Standard, and its broker is available for both C++ and Java.

OpenStack Networking - OpenStack Networking offers sophisticated networking functionality, including Layer 2 (L2) network segregation and provider networks.

VLAN - Using a virtual local area network offers broadcast control, security, and physical layer transparency. If needed, use VXLAN to extend your address space.

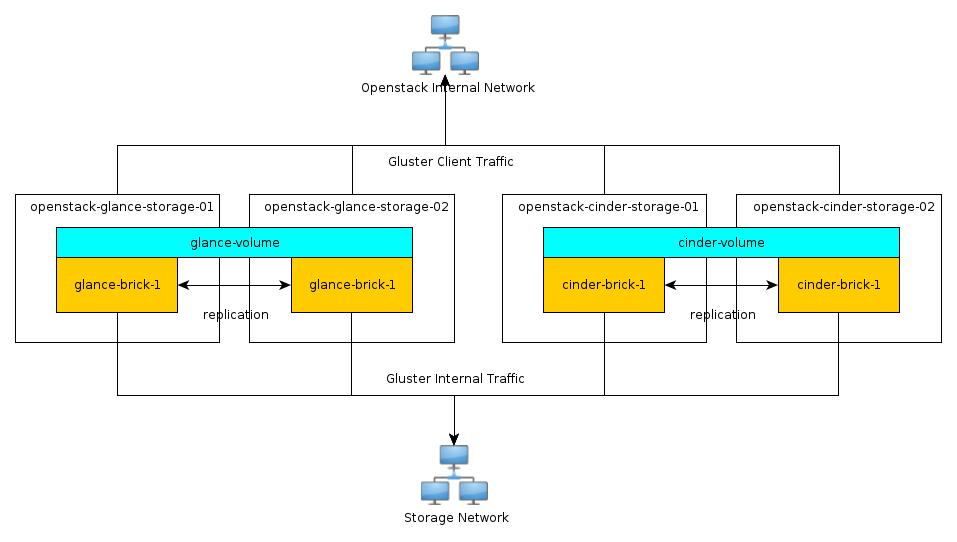

GlusterFS - GlusterFS offers scalable storage. As your environment grows, you can continue to add more storage nodes (instead of being restricted, for example, by an expensive storage array).
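
The VLAN item in the list mentions VXLAN as a way to extend your address space. As a worked example, and assuming that "address space" here refers to the number of distinct tenant network segments, the arithmetic below compares the 12-bit VLAN ID field of IEEE 802.1Q with the 24-bit VXLAN network identifier. The function names are illustrative only.

```python
VLAN_ID_BITS = 12    # width of the IEEE 802.1Q VLAN ID field
VXLAN_VNI_BITS = 24  # width of the VXLAN network identifier (VNI)


def usable_vlan_ids():
    """Number of usable VLAN IDs: IDs 0 and 4095 are reserved."""
    return 2 ** VLAN_ID_BITS - 2


def vxlan_segments():
    """Number of distinct VXLAN network identifiers."""
    return 2 ** VXLAN_VNI_BITS


print(usable_vlan_ids())  # 4094
print(vxlan_segments())   # 16777216
```

With VLAN separation, the number of tenant networks is therefore bounded by roughly four thousand segments, which is usually ample for a deployment of this size.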

This section gives you a breakdown of the different nodes that make up the OpenStack environment. A node is a physical machine that is provisioned with an operating system, and running a defined software stack on top of it. The following table provides node descriptions and specifications.

| Type | Description | Example Hardware |
|---|---|---|
| Controller | Controller nodes are responsible for running the management software services needed for the OpenStack environment to function. These nodes: | Model: Dell R620; CPU: 2 x Intel® Xeon® CPU E5-2620 0 @ 2.00GHz; Memory: 32GB; Disk: 2 x 300GB 10000 RPM SAS Disks; Network: 2 x 10G network ports |
| Compute | Compute nodes run the virtual machine instances in OpenStack. They: | Model: Dell R620; CPU: 2 x Intel® Xeon® CPU E5-2650 0 @ 2.00GHz; Memory: 128GB; Disk: 2 x 600GB 10000 RPM SAS Disks; Network: 4 x 10G network ports (for future-proofing expansion) |
| Storage | Storage nodes store all the data required for the environment, including disk images in the Image Service library, and the persistent storage volumes created by the Block Storage service. Storage nodes use GlusterFS technology to keep the data highly available and scalable. | Model: Dell R720xd; CPU: 2 x Intel® Xeon® CPU E5-2620 0 @ 2.00GHz; Memory: 64GB; Disk: 2 x 500GB 7200 RPM SAS Disks + 24 x 600GB 10000 RPM SAS Disks; Raid Controller: PERC H710P Integrated RAID Controller, 1GB NV Cache; Network: 2 x 10G network ports |
| Network | Network nodes are responsible for doing all the virtual networking needed for people to create public or private networks, and uplink their virtual machines into external networks. Network nodes: | Model: Dell R620; CPU: 1 x Intel® Xeon® CPU E5-2620 0 @ 2.00GHz; Memory: 32GB; Disk: 2 x 300GB 10000 RPM SAS Disks; Network: 5 x 10G network ports |
| Utility | Utility nodes are used by internal administration staff only to provide a number of basic system administration functions needed to get the environment up and running, and to maintain the hardware, OS, and software on which it runs. These nodes run services such as provisioning, configuration management, monitoring, or GlusterFS management software. They are not required to scale although these machines are usually backed up. | Model: Dell R620; CPU: 2 x Intel® Xeon® CPU E5-2620 0 @ 2.00GHz; Memory: 32GB; Disk: 2 x 500GB 7200 RPM SAS Disks; Network: 2 x 10G network ports |

The network contains all the management devices for all hardware in the environment (for example, by including Dell iDrac7 devices for the hardware nodes, and management interfaces for network switches). The network is accessed by internal staff only when diagnosing or recovering a hardware issue.

This network is used for OpenStack management functions and traffic, including services needed for the provisioning of physical nodes (pxe, tftp, kickstart), traffic between various OpenStack node types using OpenStack APIs and messages (for example, nova-compute talking to keystone or cinder-volume talking to nova-api), and all traffic for storage data to the storage layer underneath by the Gluster protocol. All physical nodes have at least one network interface (typically eth0) in this network. This network is only accessible from other VLANs on port 22 (for ssh access to manage machines).

This network is a combination of:

IP addresses for public-facing interfaces on the controller nodes (through which end users access the OpenStack services)

A range of publicly routable IPv4 network addresses to be used by OpenStack Networking for floating IPs. You may be restricted in your access to IPv4 addresses; a large range of IPv4 addresses is not necessary.

Routers for private networks created within OpenStack.

This network is connected to the controller nodes so users can access the OpenStack interfaces, and connected to the network nodes to provide VMs with publicly routable traffic functionality. The network is also connected to the utility machines so that any utility services that need to be made public (such as system monitoring) can be accessed.

This is a closed network that is not publicly routable and is simply used as a private, internal network for traffic between virtual machines in OpenStack, and between the virtual machines and the network nodes that provide L3 routes out to the public network (and floating IPs for connections back in to the VMs). Because this is a closed network, we are using a different address space from the others to clearly define the separation. Only Compute and OpenStack Networking nodes need to be connected to this network.
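
Because the separation between these networks relies on each using its own address space, it can be useful to check a planned layout for overlaps before deploying. The sketch below does this with Python's standard `ipaddress` module. The network names and CIDR ranges are assumptions for illustration (203.0.113.0/24 is a documentation range), not the addresses used in this architecture.

```python
import ipaddress
from itertools import combinations


def overlapping_pairs(networks):
    """Return pairs of network names whose address ranges overlap.

    networks maps a descriptive name to a CIDR string.
    """
    parsed = {name: ipaddress.ip_network(cidr)
              for name, cidr in networks.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(parsed.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical address plan with clearly separated ranges.
plan = {
    "management": "10.0.0.0/24",
    "public": "203.0.113.0/24",
    "vm_traffic": "192.168.0.0/16",
}
print(overlapping_pairs(plan))  # []

# A plan where the VM traffic range collides with the management range.
bad_plan = {
    "management": "10.0.0.0/16",
    "vm_traffic": "10.0.5.0/24",
}
print(overlapping_pairs(bad_plan))  # [('management', 'vm_traffic')]
```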

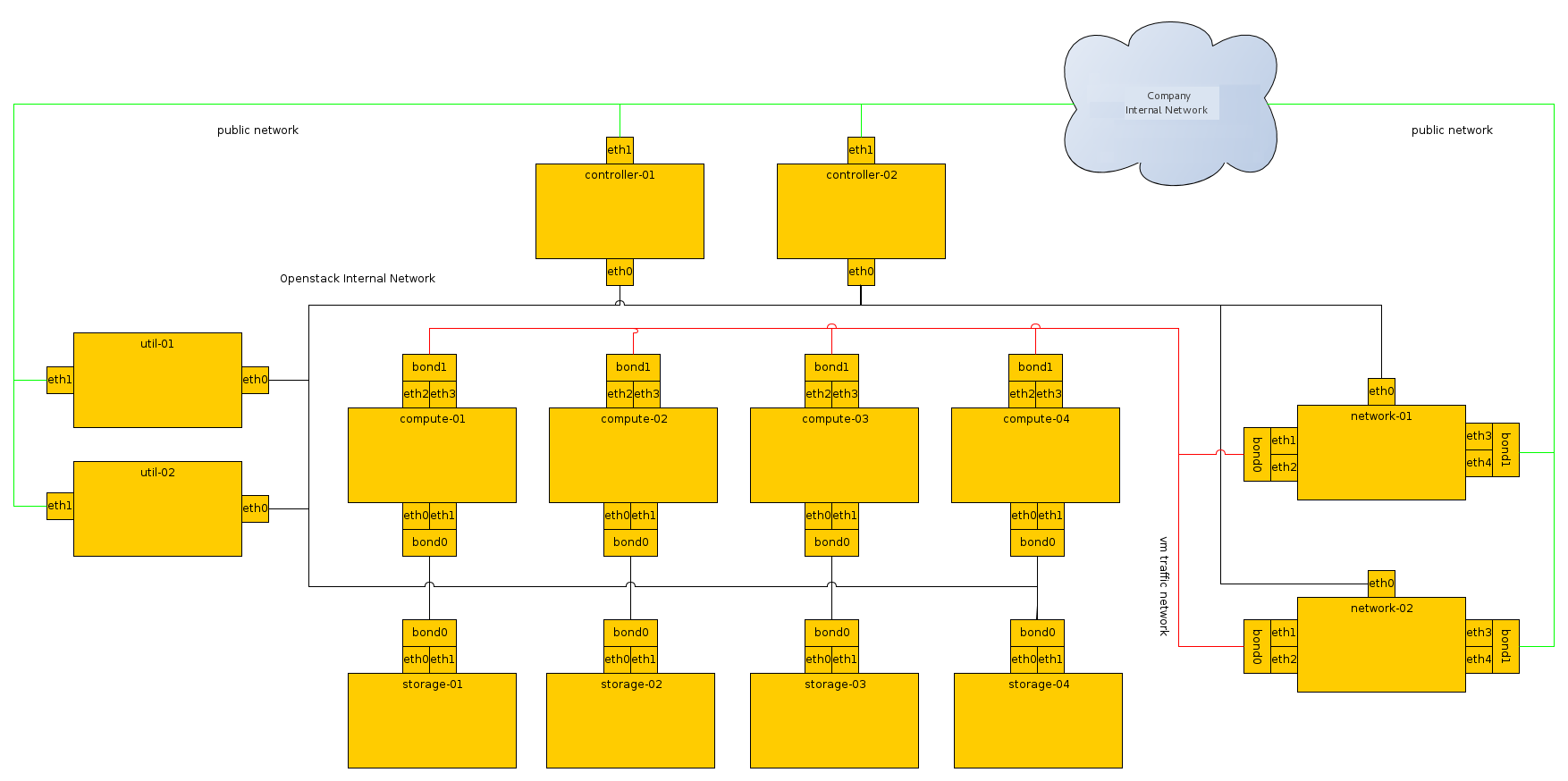

The following section details how the nodes are connected to the different networks (see the section called “Networking Layout”), and what other considerations need to take place (for example, bonding) when connecting nodes to the networks.

Initially, the connection setup should revolve around keeping the connectivity simple and straightforward, in order to minimise deployment complexity and time to deploy. The following deployment aims to have 1x10G connectivity available to all Compute nodes, while still leveraging bonding on appropriate nodes for maximum performance.

If the networking performance of the basic layout is not enough, you can move to the following layout which provides 2x10G network links to all instances in the environment, as well as providing more network bandwidth to the storage layer.

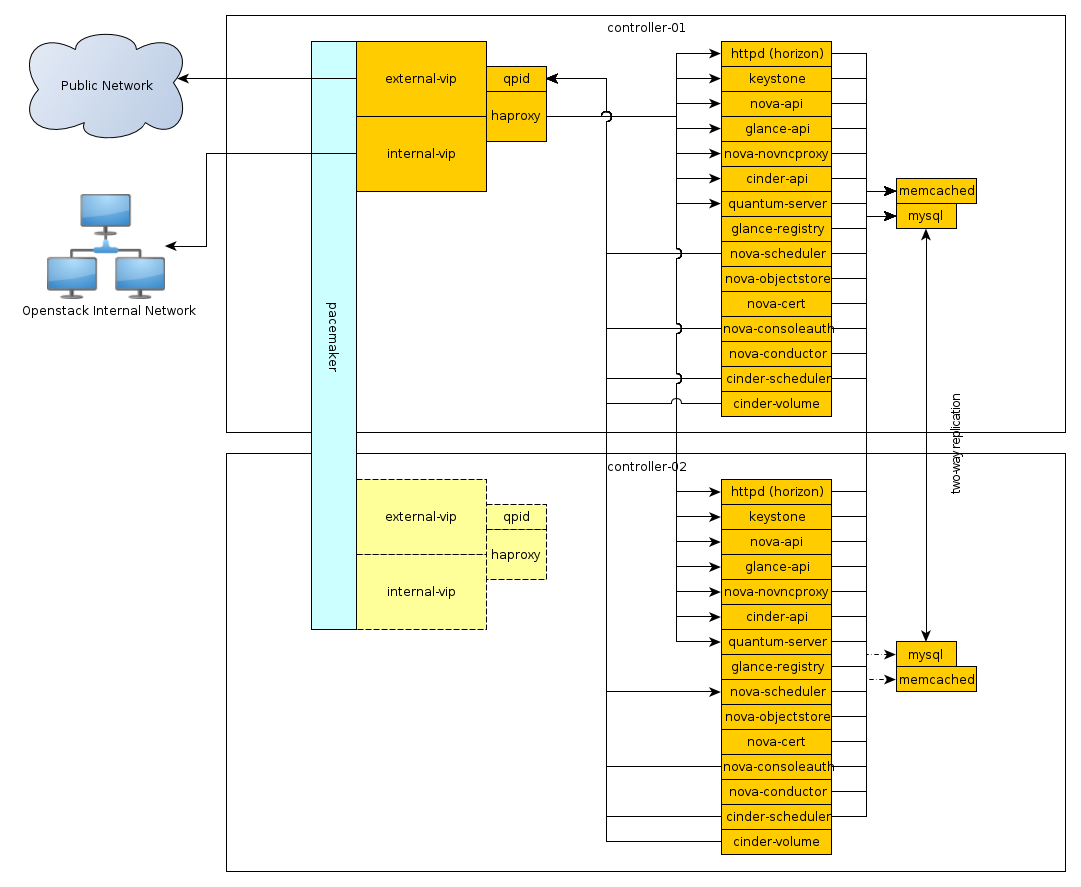

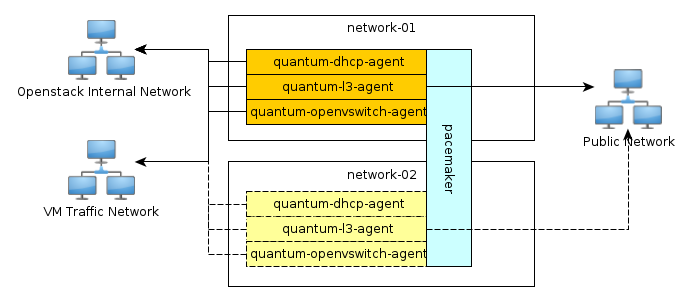

The following diagrams include logical information about the different types of nodes, indicating what services will be running on top of them, and how they interact with each other. The diagrams also illustrate how the availability and scalability of services are achieved.

The following tables include example configuration and considerations for both third-party and OpenStack components:

| Component | Tuning | Availability | Scalability |
|---|---|---|---|
| MySQL | binlog-format = row | Master-Master replication. However, only one node is used at a time. Replication keeps all nodes as close to being up to date as possible (although the asynchronous nature of the replication means a fully consistent state is not possible). Connections to the database only happen through a Pacemaker virtual IP, ensuring that most problems that occur with master-master replication can be avoided. | Not heavily considered. Once load on the MySQL server increases enough that scalability needs to be considered, multiple masters or a master/slave setup can be used. |
| Qpid | max-connections=1000 worker-threads=20 connection-backlog=10, sasl security enabled with SASL-BASIC authentication | Qpid is added as a resource to the Pacemaker software that runs on Controller nodes where Qpid is situated. This ensures only one Qpid instance is running at one time, and the node with the Pacemaker virtual IP will always be the node running Qpid. | Not heavily considered. However, Qpid can be changed to run on all controller nodes for scalability and availability purposes, and removed from Pacemaker. |
| HAProxy | maxconn 3000 | HAProxy is a software layer-7 load balancer used to front all clustered OpenStack API components and do SSL termination. HAProxy can be added as a resource to the Pacemaker software that runs on the Controller nodes where HAProxy is situated. This ensures that only one HAProxy instance is running at one time, and the node with the Pacemaker virtual IP will always be the node running HAProxy. | Not considered. HAProxy has small enough performance overheads that a single instance should scale enough for this level of workload. If extra scalability is needed, keepalived or other Layer-4 load balancing can be introduced to be placed in front of multiple copies of HAProxy. |
| Memcached | MAXCONN="8192" CACHESIZE="30457" | Memcached is a fast in-memory key/value cache software that is used by OpenStack components for caching data and increasing performance. Memcached runs on all controller nodes, ensuring that should one go down, another instance of memcached is available. | Not considered. A single instance of memcached should be able to scale to the desired workloads. If scalability is desired, HAProxy can be placed in front of Memcached (in raw tcp mode) to utilise multiple memcached instances for scalability. However, this might cause cache consistency issues. |
| Pacemaker | Configured to use corosync and cman as a cluster communication stack/quorum manager, and as a two-node cluster. | Pacemaker is the clustering software used to ensure the availability of services running on the controller and network nodes: | If more nodes need to be made cluster aware, Pacemaker can scale to 64 nodes. |
| GlusterFS | glusterfs performance profile "virt" enabled on all volumes. Volumes are set up in 2-node replication. | GlusterFS is a clustered file system that is run on the storage nodes to provide persistent scalable data storage in the environment. Because all connections to gluster use the gluster native mount points, the gluster instances themselves provide availability and failover functionality. | The scalability of GlusterFS storage can be achieved by adding in more storage volumes. |
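
As a rough illustration of how HAProxy can front an API service that runs on every controller node, the sketch below renders a `listen` section with one `server` line per controller. The `maxconn 3000` value comes from the table; the service name, virtual IP, controller addresses, and the helper `render_listen_block` are hypothetical, and port 5000 is assumed here as an Identity API port. The sketch omits SSL termination and layer-7 settings, so treat it as a starting point rather than the configuration used in this architecture.

```python
def render_listen_block(name, vip, port, backends, maxconn=3000):
    """Render an HAProxy listen section that balances across controllers.

    backends maps a server label to the address of a controller node.
    All names and addresses passed in are illustrative assumptions.
    """
    lines = [
        f"listen {name}",
        f"    bind {vip}:{port}",
        f"    maxconn {maxconn}",
        "    balance roundrobin",
    ]
    for label, address in backends.items():
        # "check" enables health checks so failed instances are skipped.
        lines.append(f"    server {label} {address}:{port} check")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_listen_block(
        name="keystone-public",
        vip="192.0.2.10",            # hypothetical Pacemaker virtual IP
        port=5000,
        backends={
            "controller1": "192.0.2.11",
            "controller2": "192.0.2.12",
        },
    ))
```

Binding to the virtual IP managed by Pacemaker matches the availability model described in the table: whichever controller holds the virtual IP is the one running HAProxy.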

| Component | Node type | Tuning | Availability | Scalability |
|---|---|---|---|---|
| Dashboard (horizon) | Controller | Configured to use memcached as a session store, neutron support is enabled, can_set_mount_point = False | The Dashboard is run on all controller nodes, ensuring at least one instance will be available in case of node failure. It also sits behind HAProxy, which detects when the software fails and routes requests around the failing instance. | The Dashboard is run on all controller nodes, so scalability can be achieved with additional controller nodes. HAProxy allows scalability for the Dashboard as more nodes are added. |
| Identity (keystone) | Controller | Configured to use memcached for caching, and use PKI for tokens. | Identity is run on all controller nodes, ensuring at least one instance will be available in case of node failure. Identity also sits behind HAProxy, which detects when the software fails and routes requests around the failing instance. | Identity is run on all controller nodes, so scalability can be achieved with additional controller nodes. HAProxy allows scalability for Identity as more nodes are added. |
| Image Service (glance) | Controller | /var/lib/glance/images is a GlusterFS native mount to a Gluster volume off the storage layer. | The Image Service is run on all controller nodes, ensuring at least one instance will be available in case of node failure. It also sits behind HAProxy, which detects when the software fails and routes requests around the failing instance. | The Image Service is run on all controller nodes, so scalability can be achieved with additional controller nodes. HAProxy allows scalability for the Image Service as more nodes are added. |
| Compute (nova) | Controller, Compute | Configured to use Qpid. Configured | The nova API, scheduler, objectstore, cert, consoleauth, conductor, and vncproxy services are run on all controller nodes, ensuring at least one instance will be available in case of node failure. Compute is also behind HAProxy, which detects when the software fails and routes requests around the failing instance. Compute's compute and conductor services, which run on the compute nodes, are only needed to run services on that node, so availability of those services is coupled tightly to the nodes that are available. As long as a compute node is up, it will have the needed services running on top of it. | The nova API, scheduler, objectstore, cert, consoleauth, conductor, and vncproxy services are run on all controller nodes, so scalability can be achieved with additional controller nodes. HAProxy allows scalability for Compute as more nodes are added. The scalability of services running on the compute nodes (compute, conductor) is achieved linearly by adding in more compute nodes. |
| Block Storage (cinder) | Controller | Configured to use Qpid, qpid_heartbeat = 10, configured to use a Gluster volume from the storage layer as the backend for Block Storage, using the Gluster native client. | Block Storage API, scheduler, and volume services are run on all controller nodes, ensuring at least one instance will be available in case of node failure. Block Storage also sits behind HAProxy, which detects if the software fails and routes requests around the failing instance. | Block Storage API, scheduler, and volume services are run on all controller nodes, so scalability can be achieved with additional controller nodes. HAProxy allows scalability for Block Storage as more nodes are added. |
| OpenStack Networking (neutron) | Controller, Compute, Network | Configured to use Qpid, qpid_heartbeat = 10, kernel namespace support enabled, tenant_network_type = vlan, allow_overlapping_ips = true, bridge_uplinks = br-ex:em2, bridge_mappings = physnet1:br-ex | The OpenStack Networking service is run on all controller nodes, ensuring at least one instance will be available in case of node failure. It also sits behind HAProxy, which detects if the software fails and routes requests around the failing instance. OpenStack Networking's | The OpenStack Networking server service is run on all controller nodes, so scalability can be achieved with additional controller nodes. HAProxy allows scalability for OpenStack Networking as more nodes are added. Scalability of services running on the network nodes is not currently supported by OpenStack Networking, so it is not considered. One copy of the services should be sufficient to handle the workload. Scalability of the ovs-agent running on compute nodes is achieved by adding in more compute nodes as necessary. |
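
The `bridge_mappings = physnet1:br-ex` setting in the OpenStack Networking row pairs a physical network name with an Open vSwitch bridge. The sketch below shows one way to read such a value as a comma-separated list of `physical_network:bridge` pairs. The function `parse_bridge_mappings` is a hypothetical helper written for illustration and is not the parser that OpenStack Networking itself uses.

```python
def parse_bridge_mappings(value):
    """Parse 'physnet:bridge[,physnet:bridge...]' into a dictionary.

    Raises ValueError for malformed pairs or a repeated physical network.
    """
    mappings = {}
    for pair in value.split(","):
        pair = pair.strip()
        if not pair:
            continue  # tolerate stray commas and surrounding whitespace
        physnet, sep, bridge = pair.partition(":")
        physnet, bridge = physnet.strip(), bridge.strip()
        if not sep or not physnet or not bridge:
            raise ValueError(f"malformed mapping: {pair!r}")
        if physnet in mappings:
            raise ValueError(f"duplicate physical network: {physnet!r}")
        mappings[physnet] = bridge
    return mappings


# The value from the tuning column of the table.
print(parse_bridge_mappings("physnet1:br-ex"))  # {'physnet1': 'br-ex'}
```

A check like this can catch typos in a configuration management template before the value reaches the network and compute nodes.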

With so many considerations and options available, our hope is to provide a few clearly marked and tested paths for your OpenStack exploration. If you're looking for additional ideas, check out the Use Cases appendix, the OpenStack Installation Guides, or the OpenStack User Stories page.