An OpenStack cloud does not have much value without users. This chapter covers managing users, projects, and quotas. It describes users and projects as defined by version 2 of the OpenStack Identity API.

Warning: While version 3 of the Identity API is available, the client tools do not yet implement those calls, and most OpenStack clouds are still implementing Identity API v2.0.

In OpenStack user interfaces and documentation, a group of users is referred to as a project or tenant. These terms are interchangeable.

The initial implementation of the OpenStack Compute Service (nova) had its own authentication system and used the term project. When authentication moved into the OpenStack Identity Service (keystone) project, it used the term tenant to refer to a group of users. Because of this legacy, some of the OpenStack tools refer to projects and some refer to tenants.

![[Tip]](../common/images/admon/tip.png) | Tip |

|---|---|

This guide uses the term |

Users must be associated with at least one project, though they may belong to many. Therefore, you should add at least one project before adding users.

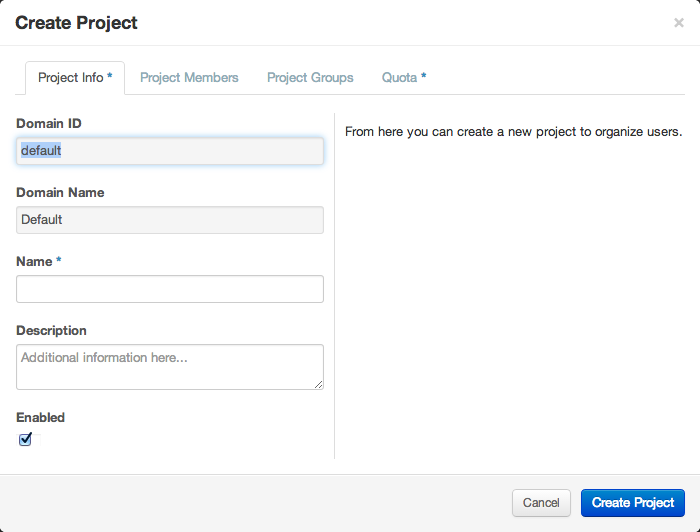

To create a project through the OpenStack dashboard:

Log in as an administrative user.

Select the Admin tab in the left navigation bar.

Under Identity Panel, click Projects.

Click the button to create a new project.

You are prompted for a project name and an optional, but recommended, description. Select the check box at the bottom of the form to enable this project. By default, it is enabled, which is shown in Figure 9.1, “Dashboard's Create Project form”.

It is also possible to add project members and adjust the project quotas. We'll discuss those actions later, but in practice it can be quite convenient to deal with all these operations at one time.

To add a project through the command line, you must use the keystone utility, which uses "tenant" in place of "project":

# keystone tenant-create --name=demo

This command creates a project named "demo." Optionally, you can add a description string by appending `--description tenant-description`, which can be very useful. You can also create a project in a disabled state by appending `--enabled false` to the command. By default, projects are created in an enabled state.

To prevent system capacities from being exhausted without notification, you can set up quotas. Quotas are operational limits. For example, the number of gigabytes allowed per tenant can be controlled to ensure that a single tenant cannot consume all of the disk space. Quotas are currently enforced at the tenant (or project) level, rather than by user.

Warning: Because a single tenant could otherwise use up all the available resources, OpenStack ships with default quotas. You should pay attention to which quota settings make sense for your hardware capabilities.

Using the command-line interface, you can manage quotas for the OpenStack Compute Service and the Block Storage Service.

Typically, default values are changed because a tenant requires more than the OpenStack default of 10 volumes per tenant, or more than the OpenStack default of 1 TB of disk space on a compute node.

Note: To view all tenants, run:

    $ keystone tenant-list
    +----------------------------------+----------+---------+
    | id                               | name     | enabled |
    +----------------------------------+----------+---------+
    | a981642d22c94e159a4a6540f70f9f8d | admin    | True    |
    | 934b662357674c7b9f5e4ec6ded4d0e7 | tenant01 | True    |
    | 7bc1dbfd7d284ec4a856ea1eb82dca80 | tenant02 | True    |
    | 9c554aaef7804ba49e1b21cbd97d218a | services | True    |
    +----------------------------------+----------+---------+

OpenStack Havana introduced a basic quota feature for the Image service, so you can now restrict a project's image storage by total number of bytes. Currently, this quota is applied cloud-wide: if you set an Image quota limit of 5 GB, every project in your cloud can store only 5 GB of images and snapshots.

To enable this feature, edit the /etc/glance/glance-api.conf file, and under the [DEFAULT] section, add:

user_storage_quota = <bytes>

For example, to restrict a project's image storage to 5 GB, do this:

user_storage_quota = 5368709120
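Because the option takes a raw byte count, it can be easier to compute the value than to type it by hand. The following is a minimal sketch, assuming binary gigabytes (1 GB = 1024^3 bytes), which is the convention the 5 GB example above follows. The helper name `gb_to_bytes` is hypothetical and not part of any OpenStack tool.

```python
def gb_to_bytes(gigabytes):
    """Convert binary gigabytes (1 GB = 1024**3 bytes) to a byte count."""
    return int(gigabytes * 1024 ** 3)


# Print a line that could be pasted under [DEFAULT] in glance-api.conf.
print("user_storage_quota = %d" % gb_to_bytes(5))
```

The same conversion applies to any other quota in this chapter that is expressed in bytes.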

![[Note]](../common/images/admon/note.png) | Note |

|---|---|

In the Icehouse release, there is a configuration option in

|

As an administrative user, you can update the Compute Service quotas for an existing tenant, as well as update the quota defaults for a new tenant.

| Quota | Description | Property Name |
|---|---|---|
| Fixed IPs | Number of fixed IP addresses allowed per tenant. This number must be equal to or greater than the number of allowed instances. | fixed_ips |
| Floating IPs | Number of floating IP addresses allowed per tenant. | floating_ips |
| Injected File Content Bytes | Number of content bytes allowed per injected file. | injected_file_content_bytes |
| Injected File Path Bytes | Number of bytes allowed per injected file path. | injected_file_path_bytes |
| Injected Files | Number of injected files allowed per tenant. | injected_files |
| Instances | Number of instances allowed per tenant. | instances |
| Key Pairs | Number of key pairs allowed per user. | key_pairs |
| Metadata Items | Number of metadata items allowed per instance. | metadata_items |
| RAM | Megabytes of instance RAM allowed per tenant. | ram |
| Security Group Rules | Number of rules per security group. | security_group_rules |
| Security Groups | Number of security groups per tenant. | security_groups |
| VCPUs | Number of instance cores allowed per tenant. | cores |
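The constraint on fixed IPs in the table above can be checked mechanically before you apply a new set of values. The following is a small sketch, not part of any OpenStack tool: the function name is hypothetical, the dictionary keys follow the property names printed by the nova quota commands in this chapter, and it assumes that a value of -1 means "unlimited."

```python
def fixed_ip_quota_ok(quotas):
    """Return True if the fixed IP quota can cover the instance quota.

    Assumption: -1 means "unlimited" for either property.
    """
    fixed_ips = quotas["fixed_ips"]
    instances = quotas["instances"]
    if fixed_ips == -1:
        # Unlimited fixed IPs always cover the instance quota.
        return True
    if instances == -1:
        # A finite number of fixed IPs cannot cover unlimited instances.
        return False
    return fixed_ips >= instances


print(fixed_ip_quota_ok({"fixed_ips": -1, "instances": 10}))
print(fixed_ip_quota_ok({"fixed_ips": 5, "instances": 10}))
```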

As an administrative user, you can use the nova quota-* commands, which are provided by the python-novaclient package, to view and update tenant quotas.

To view and update default quota values

List all default quotas for all tenants, as follows:

$ nova quota-defaults

For example:

    $ nova quota-defaults
    +-----------------------------+-------+
    | Property                    | Value |
    +-----------------------------+-------+
    | metadata_items              | 128   |
    | injected_file_content_bytes | 10240 |
    | ram                         | 51200 |
    | floating_ips                | 10    |
    | key_pairs                   | 100   |
    | instances                   | 10    |
    | security_group_rules        | 20    |
    | injected_files              | 5     |
    | cores                       | 20    |
    | fixed_ips                   | -1    |
    | injected_file_path_bytes    | 255   |
    | security_groups             | 10    |
    +-----------------------------+-------+

Update a default value for a new tenant, as follows:

$ nova quota-class-update --key value default

For example:

$ nova quota-class-update --instances 15 default

To view quota values for a tenant (project)

Place the tenant ID in a usable variable, as follows:

$ tenant=$(keystone tenant-list | awk '/tenantName/ {print $2}')

List the currently set quota values for a tenant, as follows:

$ nova quota-show --tenant $tenant

For example:

    $ nova quota-show --tenant $tenant
    +-----------------------------+-------+
    | Property                    | Value |
    +-----------------------------+-------+
    | metadata_items              | 128   |
    | injected_file_content_bytes | 10240 |
    | ram                         | 51200 |
    | floating_ips                | 12    |
    | key_pairs                   | 100   |
    | instances                   | 10    |
    | security_group_rules        | 20    |
    | injected_files              | 5     |
    | cores                       | 20    |
    | fixed_ips                   | -1    |
    | injected_file_path_bytes    | 255   |
    | security_groups             | 10    |
    +-----------------------------+-------+
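The awk pattern used to capture the tenant ID matches any line that contains the tenant name, so a name that is a substring of another name can return more than one ID. The following sketch shows one way to look up an ID by exact name from the table that keystone tenant-list prints. It is an illustration only: the function name is hypothetical, and it assumes the three-column layout shown earlier in this chapter.

```python
SAMPLE_TENANT_LIST = """\
+----------------------------------+----------+---------+
| id                               | name     | enabled |
+----------------------------------+----------+---------+
| a981642d22c94e159a4a6540f70f9f8d | admin    | True    |
| 934b662357674c7b9f5e4ec6ded4d0e7 | tenant01 | True    |
| 7bc1dbfd7d284ec4a856ea1eb82dca80 | tenant02 | True    |
| 9c554aaef7804ba49e1b21cbd97d218a | services | True    |
+----------------------------------+----------+---------+
"""


def tenant_id_by_name(table_text, name):
    """Return the ID of the tenant whose name column equals `name`."""
    for line in table_text.splitlines():
        if not line.startswith("|"):
            continue  # skip the +----+ border lines
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        # cells[0] is the id column, cells[1] is the name column.
        if len(cells) >= 2 and cells[1] == name:
            return cells[0]
    return None


print(tenant_id_by_name(SAMPLE_TENANT_LIST, "tenant01"))
```

A partial name such as "tenant" returns None here instead of matching both tenant01 and tenant02.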

To update quota values for a tenant (project)

Obtain the tenant ID, as follows:

$ tenant=$(keystone tenant-list | awk '/tenantName/ {print $2}')

Update a particular quota value, as follows:

# nova quota-update --quotaName quotaValue tenantID

For example:

    # nova quota-update --floating-ips 20 $tenant
    # nova quota-show --tenant $tenant
    +-----------------------------+-------+
    | Property                    | Value |
    +-----------------------------+-------+
    | metadata_items              | 128   |
    | injected_file_content_bytes | 10240 |
    | ram                         | 51200 |
    | floating_ips                | 20    |
    | key_pairs                   | 100   |
    | instances                   | 10    |
    | security_group_rules        | 20    |
    | injected_files              | 5     |
    | cores                       | 20    |
    | fixed_ips                   | -1    |
    | injected_file_path_bytes    | 255   |
    | security_groups             | 10    |
    +-----------------------------+-------+
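When several values change at once, it can help to generate the command line from a reviewed set of values rather than typing each flag. The sketch below only builds the argument list and does not run anything. It assumes that each property name maps to a flag by replacing underscores with hyphens, as floating_ips maps to --floating-ips above; confirm the available flags with nova help quota-update. The function name is hypothetical.

```python
def quota_update_args(tenant_id, **quotas):
    """Build the argument list for one nova quota-update call."""
    args = ["nova", "quota-update"]
    for prop, value in sorted(quotas.items()):
        # Assumption: floating_ips -> --floating-ips, and so on.
        args += ["--" + prop.replace("_", "-"), str(value)]
    args.append(tenant_id)
    return args


# Print the command so that it can be reviewed before it is run.
print(" ".join(quota_update_args("$tenant", floating_ips=20, instances=15)))
```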

Note: To view a list of options for the quota-update command, run:

$ nova help quota-update

Object Storage quotas were introduced in Swift 1.8 (OpenStack Grizzly). There are currently two categories of quotas for Object Storage:

Container quotas: Limit the total size (in bytes) or number of objects that can be stored in a single container.

Account quotas: Limit the total size (in bytes) that a user has available in the Object Storage service.

To take advantage of either container quotas or account quotas, your Object Storage proxy server must have container_quotas or account_quotas (or both) added to the [pipeline:main] pipeline. Each quota type also requires its own section in the proxy-server.conf file:

    [pipeline:main]
    pipeline = healthcheck [...] container_quotas account_quotas proxy-server

    [filter:account_quotas]
    use = egg:swift#account_quotas

    [filter:container_quotas]
    use = egg:swift#container_quotas
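Both requirements (an entry in the pipeline and a matching filter section) are easy to get half right. The following sketch uses Python's standard configparser module to report which pieces are missing from a proxy-server.conf. It is an illustration under stated assumptions: the function name is hypothetical, the sample text is a cut-down configuration rather than a complete file, and the check does not validate the order of the pipeline.

```python
import configparser

SAMPLE_CONF = """\
[pipeline:main]
pipeline = healthcheck container_quotas account_quotas proxy-server

[filter:account_quotas]
use = egg:swift#account_quotas

[filter:container_quotas]
use = egg:swift#container_quotas
"""


def missing_quota_settings(conf_text, wanted=("container_quotas", "account_quotas")):
    """Return a list of problems found for the requested quota filters."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    pipeline = parser.get("pipeline:main", "pipeline").split()
    problems = []
    for name in wanted:
        if name not in pipeline:
            problems.append("%s is not in the pipeline" % name)
        if not parser.has_section("filter:" + name):
            problems.append("[filter:%s] section is missing" % name)
    return problems


print(missing_quota_settings(SAMPLE_CONF))
```

To check a real file, read its contents and pass the text to the function.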

To view and update Object Storage quotas, use the swift command provided by the python-swiftclient package. Any user included in the project can view the quotas placed on their project. To update Object Storage quotas on a project, you must have the role of ResellerAdmin in the project that the quota is being applied to.

To view account quotas placed on a project:

    $ swift stat
    Account: AUTH_b36ed2d326034beba0a9dd1fb19b70f9
    Containers: 0
    Objects: 0
    Bytes: 0
    Meta Quota-Bytes: 214748364800
    X-Timestamp: 1351050521.29419
    Content-Type: text/plain; charset=utf-8
    Accept-Ranges: bytes

To apply or update account quotas on a project:

$ swift post -m quota-bytes:<bytes>

For example, to place a 5 GB quota on an account:

$ swift post -m quota-bytes:5368709120

To verify the quota, run the swift stat command again:

    $ swift stat
    Account: AUTH_b36ed2d326034beba0a9dd1fb19b70f9
    Containers: 0
    Objects: 0
    Bytes: 0
    Meta Quota-Bytes: 5368709120
    X-Timestamp: 1351541410.38328
    Content-Type: text/plain; charset=utf-8
    Accept-Ranges: bytes

As an administrative user, you can update the Block Storage Service quotas for a tenant, as well as update the quota defaults for a new tenant.

| Property Name | Description |
|---|---|
| gigabytes | Number of volume gigabytes allowed per tenant. |
| snapshots | Number of Block Storage snapshots allowed per tenant. |
| volumes | Number of Block Storage volumes allowed per tenant. |

As an administrative user, you can use the cinder quota-* commands, which are provided by the python-cinderclient package, to view and update tenant quotas.

To view and update default Block Storage quota values

List all default quotas for all tenants, as follows:

$ cinder quota-defaults

For example:

    $ cinder quota-defaults
    +-----------+-------+
    | Property  | Value |
    +-----------+-------+
    | gigabytes | 1000  |
    | snapshots | 10    |
    | volumes   | 10    |
    +-----------+-------+

To update a default value for a new tenant, update the property in the /etc/cinder/cinder.conf file.

To view Block Storage quotas for a tenant

View quotas for the tenant, as follows:

# cinder quota-show tenantName

For example:

    # cinder quota-show tenant01
    +-----------+-------+
    | Property  | Value |
    +-----------+-------+
    | gigabytes | 1000  |
    | snapshots | 10    |
    | volumes   | 10    |
    +-----------+-------+

To update Block Storage service quotas

Place the tenant ID in a usable variable, as follows:

$ tenant=$(keystone tenant-list | awk '/tenantName/ {print $2}')

Update a particular quota value, as follows:

# cinder quota-update --quotaName NewValue tenantID

For example:

    # cinder quota-update --volumes 15 $tenant
    # cinder quota-show tenant01
    +-----------+-------+
    | Property  | Value |
    +-----------+-------+
    | gigabytes | 1000  |
    | snapshots | 10    |
    | volumes   | 15    |
    +-----------+-------+

The command-line tools for managing users are inconvenient to use directly. They require issuing multiple commands to complete a single task, and they use UUIDs rather than symbolic names for many items. In practice, humans typically do not use these tools directly. Fortunately, the OpenStack dashboard provides a reasonable interface for these tasks. In addition, many sites write custom tools to enforce local policies and to provide levels of self-service to users that aren't currently available with packaged tools.

To create a user, you need the following information:

Username

Email address

Password

Primary project

Role

Username and email address are self-explanatory, though your site may have local conventions you should observe. Setting and changing passwords in the Identity service requires administrative privileges. As of the Folsom release, users cannot change their own passwords. This is a large driver for creating local custom tools, and must be kept in mind when assigning and distributing passwords. The primary project is simply the first project the user is associated with and must exist prior to creating the user. Role is almost always going to be "member." Out of the box, OpenStack comes with two roles defined:

member: a typical user.

admin: an administrative super user, which has full permissions across all projects and should be used with great care.

It is possible to define other roles, but doing so is uncommon.

Once you've gathered this information, creating the user in the dashboard is just another web form similar to what we've seen before and can be found by clicking the Users link in the Admin navigation bar and then clicking the Create User button at the top right.

Modifying users is also done from this Users page. If you have a large number of users, this page can get quite crowded. The Filter search box at the top of the page can be used to limit the users listing. A form very similar to the user creation dialog can be pulled up by selecting Edit from the actions dropdown menu at the end of the line for the user you are modifying.

Many sites associate each user with only one project. This is a more conservative and simpler choice both for administration and for users. For administrators, if a user reports a problem with an instance or quota, it is obvious which project is involved. Users needn't worry about which project they are acting in if they are in only one project. However, note that, by default, any user can affect the resources of any other user within their project. It is also possible to associate users with multiple projects if that makes sense for your organization.

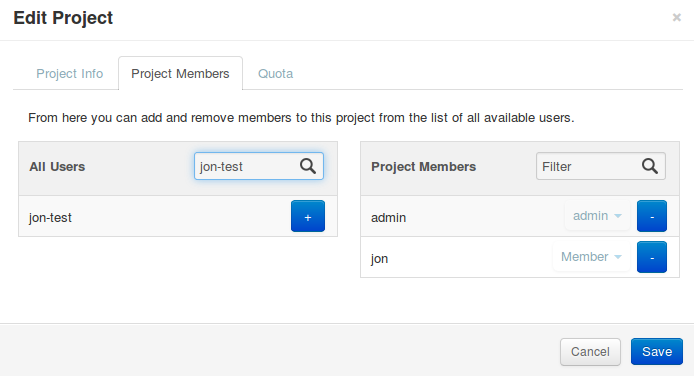

Associating existing users with an additional project or removing them from an older project is done from the Projects page of the dashboard by selecting Modify Users from the Actions column, as shown in Figure 9.2, “Edit Project Members tab”:

From this view you can do a number of useful and a few dangerous things.

The first column of this form, named All Users, includes a list of all the users in your cloud who are not already associated with this project. The second column shows all the users who are. These lists can be quite long, but they can be limited by typing a substring of the user name you are looking for in the filter field at the top of the column.

From here, click the + icon to add users to the project. Click the - to remove them.

The dangerous possibility comes with the ability to change member roles. This is the dropdown list below the user name in the Project Members list. In virtually all cases this value should be set to Member. This example purposefully shows an administrative user where this value is admin.

Warning: The admin role is global, not per project, so granting a user the admin role in any project gives the user administrative rights across the whole cloud.

Typical use is to only create administrative users in a single project, by convention the admin project, which is created by default during cloud setup. If your administrative users also use the cloud to launch and manage instances, it is strongly recommended that you use separate user accounts for administrative access and normal operations and that they be in distinct projects.

The default authorization settings allow only administrative users to create resources on behalf of a different project. OpenStack handles two kinds of authorization policies:

Operation-based: Policies specify access criteria for specific operations, possibly with fine-grained control over specific attributes.

Resource-based: Policies specify whether access to a specific resource is granted, according to the permissions configured for the resource (currently available only for the network resource).

The actual authorization policies enforced in an OpenStack service vary from deployment to deployment.

The policy engine reads entries from the policy.json file. The actual location of this file might vary from distribution to distribution: for nova, it is typically in /etc/nova/policy.json. You can update entries while the system is running, and you do not have to restart services. Currently, the only way to update such policies is to edit the policy file.

The OpenStack service's policy engine matches a policy directly. A rule indicates evaluation of the elements of such policies. For instance, in a `"compute:create": [["rule:admin_or_owner"]]` statement, the policy is compute:create, and the rule is admin_or_owner.

Policies are triggered by an OpenStack policy engine whenever one of them matches an OpenStack API operation or a specific attribute being used in a given operation. For instance, the engine tests the compute:create policy every time a user sends a POST /v2/{tenant_id}/servers request to the OpenStack Compute API server. Policies can also be related to specific API extensions. For instance, if a user needs an extension like compute_extension:rescue, the attributes defined by the provider extensions trigger the rule test for that operation.

An authorization policy can be composed of one or more rules. If multiple rules are specified, the policy evaluates successfully if any of the rules evaluates successfully. If an API operation matches multiple policies, all of the policies must evaluate successfully. Authorization rules are also recursive: once a rule is matched, it can be resolved to another rule, until a terminal rule is reached. The following rule types are defined:

Role-based rules: Evaluate successfully if the user submitting the request has the specified role. For instance, `"role:admin"` is successful if the user submitting the request is an administrator.

Field-based rules: Evaluate successfully if a field of the resource specified in the current request matches a specific value. For instance, `"field:networks:shared=True"` is successful if the attribute shared of the network resource is set to true.

Generic rules: Compare an attribute in the resource with an attribute extracted from the user's security credentials and evaluate successfully if the comparison is successful. For instance, `"tenant_id:%(tenant_id)s"` is successful if the tenant identifier in the resource is equal to the tenant identifier of the user submitting the request.

Here are snippets of the default nova policy.json file:

    {
        "context_is_admin": [["role:admin"]],
        "admin_or_owner": [["is_admin:True"], ["project_id:%(project_id)s"]], [1]
        "default": [["rule:admin_or_owner"]], [2]
        "compute:create": [ ],
        "compute:create:attach_network": [ ],
        "compute:create:attach_volume": [ ],
        "compute:get_all": [ ],
        "admin_api": [["is_admin:True"]],
        "compute_extension:accounts": [["rule:admin_api"]],
        "compute_extension:admin_actions": [["rule:admin_api"]],
        "compute_extension:admin_actions:pause": [["rule:admin_or_owner"]],
        "compute_extension:admin_actions:unpause": [["rule:admin_or_owner"]],
        ...
        "compute_extension:admin_actions:migrate": [["rule:admin_api"]],
        "compute_extension:aggregates": [["rule:admin_api"]],
        "compute_extension:certificates": [ ],
        ...
        "compute_extension:flavorextraspecs": [ ],
        "compute_extension:flavormanage": [["rule:admin_api"]], [3]
    }

[1] Shows a rule that evaluates successfully if the current user is an administrator or the owner of the resource specified in the request (tenant identifier is equal).

[2] Shows the default policy, which is always evaluated if an API operation does not match any of the policies in policy.json.

[3] Shows a policy restricting the ability to manipulate flavors to administrators using the Admin API only.
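To make the evaluation order concrete, the following sketch applies the behavior described above to a few of these entries. It is a simplified illustration and not the policy engine that OpenStack services use. It assumes that the outer list holds alternatives (any one may pass), that each inner list holds checks that must all pass, and that an empty list places no restriction on the operation. Field-based rules are omitted. The names `evaluate`, `_check`, `RULES`, `member`, `admin`, and `server` are hypothetical.

```python
RULES = {
    "admin_or_owner": [["is_admin:True"], ["project_id:%(project_id)s"]],
    "default": [["rule:admin_or_owner"]],
    "compute:create": [],
    "admin_api": [["is_admin:True"]],
    "compute_extension:admin_actions:pause": [["rule:admin_or_owner"]],
    "compute_extension:flavormanage": [["rule:admin_api"]],
}


def evaluate(policy, rules, target, creds):
    """Return True if `creds` may perform `policy` on `target`."""
    # Operations with no matching entry fall back to the "default" policy.
    alternatives = rules[policy] if policy in rules else rules["default"]
    if not alternatives:
        # Assumption: an empty list places no restriction on the operation.
        return True
    # Outer list: any group may pass. Inner list: every check must pass.
    return any(
        all(_check(item, rules, target, creds) for item in group)
        for group in alternatives
    )


def _check(item, rules, target, creds):
    kind, _, match = item.partition(":")
    if kind == "rule":
        # Recurse into the named rule until a terminal check is reached.
        return evaluate(match, rules, target, creds)
    if kind == "role":
        return match.lower() in [role.lower() for role in creds.get("roles", [])]
    # Generic check: fill the right-hand side from the target resource and
    # compare it with the credential attribute named on the left.
    try:
        expected = match % target
    except KeyError:
        return False
    return kind in creds and str(creds[kind]) == expected


member = {"roles": ["member"], "is_admin": False, "project_id": "p1"}
admin = {"roles": ["admin"], "is_admin": True, "project_id": "admin-project"}
server = {"project_id": "p1"}

print(evaluate("compute_extension:admin_actions:pause", RULES, server, member))
print(evaluate("compute_extension:flavormanage", RULES, {}, member))
print(evaluate("compute_extension:flavormanage", RULES, {}, admin))
```

In this sketch, the member can pause a server in its own project because the second alternative of admin_or_owner matches, but only the administrator passes the flavormanage policy.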

In some cases, some operations should be restricted to administrators only. Therefore, as a further example, let us consider how this sample policy file could be modified in a scenario where we enable users to create their own flavors:

"compute_extension:flavormanage": [ ],

Users on your cloud can disrupt other users, sometimes intentionally and maliciously and other times by accident. Understanding the situation allows you to make a better decision on how to handle the disruption.

For example, a group of users have instances that are utilizing a large amount of compute resources for very compute-intensive tasks. This is driving the load up on compute nodes and affecting other users. In this situation, review your user use cases. You may find that high compute scenarios are common, and should then plan for proper segregation in your cloud, such as host aggregation or regions.

Another example is a user consuming a very large amount of bandwidth. Again, the key is to understand what the user is doing. If they naturally need a high amount of bandwidth, you might have to limit their transmission rate so as not to affect other users, or move the user to an area with more bandwidth available. On the other hand, maybe the user's instance has been hacked and is part of a botnet launching DDoS attacks. Resolve this issue as you would if any other server on your network had been hacked. Contact the user and give them time to respond. If they don't respond, shut down the instance.

A final example is if a user is hammering cloud resources repeatedly. Contact the user and learn what they are trying to do. Maybe they don't understand that what they're doing is inappropriate or maybe there is an issue with the resource they are trying to access that is causing their requests to queue or lag.

One key element of systems administration that is often overlooked is that end users are the reason systems administrators exist. Don't go the BOFH route and terminate every user who causes an alert to go off. Work with them to understand what they're trying to accomplish and see how your environment can better assist them in achieving their goals. Meet your users' needs by organizing your users into projects, applying policies, managing quotas, and working with them.