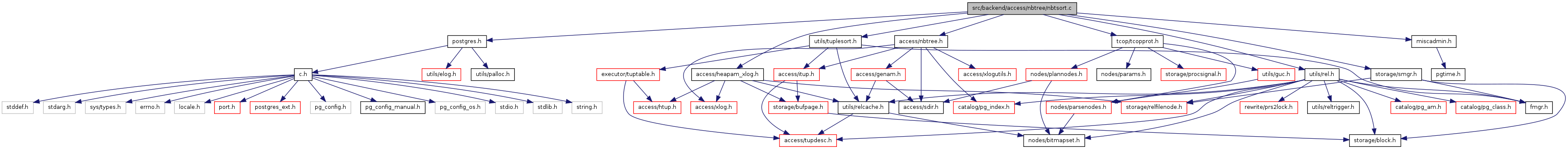

#include "postgres.h"#include "access/heapam_xlog.h"#include "access/nbtree.h"#include "miscadmin.h"#include "storage/smgr.h"#include "tcop/tcopprot.h"#include "utils/rel.h"#include "utils/tuplesort.h"

Go to the source code of this file.

Data Structures | |

| struct | BTSpool |

| struct | BTPageState |

| struct | BTWriteState |

Typedefs | |

| typedef struct BTPageState | BTPageState |

| typedef struct BTWriteState | BTWriteState |

Functions | |

| static Page | _bt_blnewpage (uint32 level) |

| static BTPageState * | _bt_pagestate (BTWriteState *wstate, uint32 level) |

| static void | _bt_slideleft (Page page) |

| static void | _bt_sortaddtup (Page page, Size itemsize, IndexTuple itup, OffsetNumber itup_off) |

| static void | _bt_buildadd (BTWriteState *wstate, BTPageState *state, IndexTuple itup) |

| static void | _bt_uppershutdown (BTWriteState *wstate, BTPageState *state) |

| static void | _bt_load (BTWriteState *wstate, BTSpool *btspool, BTSpool *btspool2) |

| BTSpool * | _bt_spoolinit (Relation heap, Relation index, bool isunique, bool isdead) |

| void | _bt_spooldestroy (BTSpool *btspool) |

| void | _bt_spool (IndexTuple itup, BTSpool *btspool) |

| void | _bt_leafbuild (BTSpool *btspool, BTSpool *btspool2) |

| static void | _bt_blwritepage (BTWriteState *wstate, Page page, BlockNumber blkno) |

| typedef struct BTPageState BTPageState |

| typedef struct BTWriteState BTWriteState |

Definition at line 241 of file nbtsort.c.

References _bt_pageinit(), BTP_LEAF, BTPageOpaqueData::btpo, BTPageOpaqueData::btpo_cycleid, BTPageOpaqueData::btpo_flags, BTPageOpaqueData::btpo_next, BTPageOpaqueData::btpo_prev, BTPageOpaqueData::level, PageGetSpecialPointer, and palloc().

Referenced by _bt_buildadd(), and _bt_pagestate().

{

Page page;

BTPageOpaque opaque;

page = (Page) palloc(BLCKSZ);

/* Zero the page and set up standard page header info */

_bt_pageinit(page, BLCKSZ);

/* Initialize BT opaque state */

opaque = (BTPageOpaque) PageGetSpecialPointer(page);

opaque->btpo_prev = opaque->btpo_next = P_NONE;

opaque->btpo.level = level;

opaque->btpo_flags = (level > 0) ? 0 : BTP_LEAF;

opaque->btpo_cycleid = 0;

/* Make the P_HIKEY line pointer appear allocated */

((PageHeader) page)->pd_lower += sizeof(ItemIdData);

return page;

}

| static void _bt_blwritepage | ( | BTWriteState * | wstate, | |

| Page | page, | |||

| BlockNumber | blkno | |||

| ) | [static] |

Definition at line 268 of file nbtsort.c.

References BTWriteState::btws_pages_written, BTWriteState::btws_use_wal, BTWriteState::btws_zeropage, BTWriteState::index, log_newpage(), MAIN_FORKNUM, PageSetChecksumInplace(), palloc0(), pfree(), RelationData::rd_node, RelationData::rd_smgr, RelationOpenSmgr, smgrextend(), and smgrwrite().

Referenced by _bt_buildadd(), and _bt_uppershutdown().

{

/* Ensure rd_smgr is open (could have been closed by relcache flush!) */

RelationOpenSmgr(wstate->index);

/* XLOG stuff */

if (wstate->btws_use_wal)

{

/* We use the heap NEWPAGE record type for this */

log_newpage(&wstate->index->rd_node, MAIN_FORKNUM, blkno, page);

}

/*

* If we have to write pages nonsequentially, fill in the space with

* zeroes until we come back and overwrite. This is not logically

* necessary on standard Unix filesystems (unwritten space will read as

* zeroes anyway), but it should help to avoid fragmentation. The dummy

* pages aren't WAL-logged though.

*/

while (blkno > wstate->btws_pages_written)

{

if (!wstate->btws_zeropage)

wstate->btws_zeropage = (Page) palloc0(BLCKSZ);

/* don't set checksum for all-zero page */

smgrextend(wstate->index->rd_smgr, MAIN_FORKNUM,

wstate->btws_pages_written++,

(char *) wstate->btws_zeropage,

true);

}

PageSetChecksumInplace(page, blkno);

/*

* Now write the page. There's no need for smgr to schedule an fsync for

* this write; we'll do it ourselves before ending the build.

*/

if (blkno == wstate->btws_pages_written)

{

/* extending the file... */

smgrextend(wstate->index->rd_smgr, MAIN_FORKNUM, blkno,

(char *) page, true);

wstate->btws_pages_written++;

}

else

{

/* overwriting a block we zero-filled before */

smgrwrite(wstate->index->rd_smgr, MAIN_FORKNUM, blkno,

(char *) page, true);

}

pfree(page);

}

| static void _bt_buildadd | ( | BTWriteState * | wstate, | |

| BTPageState * | state, | |||

| IndexTuple | itup | |||

| ) | [static] |

Definition at line 449 of file nbtsort.c.

References _bt_blnewpage(), _bt_blwritepage(), _bt_pagestate(), _bt_sortaddtup(), Assert, BTMaxItemSize, BTPageOpaqueData::btpo_next, BTPageOpaqueData::btpo_prev, BTPageState::btps_blkno, BTPageState::btps_lastoff, BTPageState::btps_level, BTPageState::btps_minkey, BTPageState::btps_next, BTPageState::btps_page, BTWriteState::btws_pages_alloced, CHECK_FOR_INTERRUPTS, CopyIndexTuple(), ereport, errcode(), errhint(), errmsg(), ERROR, errtableconstraint(), BTWriteState::heap, BTWriteState::index, IndexTupleDSize, ItemIdGetLength, ItemIdSetUnused, ItemPointerSet, MAXALIGN, NULL, OffsetNumberNext, P_FIRSTKEY, P_HIKEY, PageGetFreeSpace(), PageGetItem, PageGetItemId, PageGetSpecialPointer, pfree(), RelationGetRelationName, and IndexTupleData::t_tid.

Referenced by _bt_load(), and _bt_uppershutdown().

{

Page npage;

BlockNumber nblkno;

OffsetNumber last_off;

Size pgspc;

Size itupsz;

/*

* This is a handy place to check for cancel interrupts during the btree

* load phase of index creation.

*/

CHECK_FOR_INTERRUPTS();

npage = state->btps_page;

nblkno = state->btps_blkno;

last_off = state->btps_lastoff;

pgspc = PageGetFreeSpace(npage);

itupsz = IndexTupleDSize(*itup);

itupsz = MAXALIGN(itupsz);

/*

* Check whether the item can fit on a btree page at all. (Eventually, we

* ought to try to apply TOAST methods if not.) We actually need to be

* able to fit three items on every page, so restrict any one item to 1/3

* the per-page available space. Note that at this point, itupsz doesn't

* include the ItemId.

*

* NOTE: similar code appears in _bt_insertonpg() to defend against

* oversize items being inserted into an already-existing index. But

* during creation of an index, we don't go through there.

*/

if (itupsz > BTMaxItemSize(npage))

ereport(ERROR,

(errcode(ERRCODE_PROGRAM_LIMIT_EXCEEDED),

errmsg("index row size %lu exceeds maximum %lu for index \"%s\"",

(unsigned long) itupsz,

(unsigned long) BTMaxItemSize(npage),

RelationGetRelationName(wstate->index)),

errhint("Values larger than 1/3 of a buffer page cannot be indexed.\n"

"Consider a function index of an MD5 hash of the value, "

"or use full text indexing."),

errtableconstraint(wstate->heap,

RelationGetRelationName(wstate->index))));

/*

* Check to see if page is "full". It's definitely full if the item won't

* fit. Otherwise, compare to the target freespace derived from the

* fillfactor. However, we must put at least two items on each page, so

* disregard fillfactor if we don't have that many.

*/

if (pgspc < itupsz || (pgspc < state->btps_full && last_off > P_FIRSTKEY))

{

/*

* Finish off the page and write it out.

*/

Page opage = npage;

BlockNumber oblkno = nblkno;

ItemId ii;

ItemId hii;

IndexTuple oitup;

/* Create new page of same level */

npage = _bt_blnewpage(state->btps_level);

/* and assign it a page position */

nblkno = wstate->btws_pages_alloced++;

/*

* We copy the last item on the page into the new page, and then

* rearrange the old page so that the 'last item' becomes its high key

* rather than a true data item. There had better be at least two

* items on the page already, else the page would be empty of useful

* data.

*/

Assert(last_off > P_FIRSTKEY);

ii = PageGetItemId(opage, last_off);

oitup = (IndexTuple) PageGetItem(opage, ii);

_bt_sortaddtup(npage, ItemIdGetLength(ii), oitup, P_FIRSTKEY);

/*

* Move 'last' into the high key position on opage

*/

hii = PageGetItemId(opage, P_HIKEY);

*hii = *ii;

ItemIdSetUnused(ii); /* redundant */

((PageHeader) opage)->pd_lower -= sizeof(ItemIdData);

/*

* Link the old page into its parent, using its minimum key. If we

* don't have a parent, we have to create one; this adds a new btree

* level.

*/

if (state->btps_next == NULL)

state->btps_next = _bt_pagestate(wstate, state->btps_level + 1);

Assert(state->btps_minkey != NULL);

ItemPointerSet(&(state->btps_minkey->t_tid), oblkno, P_HIKEY);

_bt_buildadd(wstate, state->btps_next, state->btps_minkey);

pfree(state->btps_minkey);

/*

* Save a copy of the minimum key for the new page. We have to copy

* it off the old page, not the new one, in case we are not at leaf

* level.

*/

state->btps_minkey = CopyIndexTuple(oitup);

/*

* Set the sibling links for both pages.

*/

{

BTPageOpaque oopaque = (BTPageOpaque) PageGetSpecialPointer(opage);

BTPageOpaque nopaque = (BTPageOpaque) PageGetSpecialPointer(npage);

oopaque->btpo_next = nblkno;

nopaque->btpo_prev = oblkno;

nopaque->btpo_next = P_NONE; /* redundant */

}

/*

* Write out the old page. We never need to touch it again, so we can

* free the opage workspace too.

*/

_bt_blwritepage(wstate, opage, oblkno);

/*

* Reset last_off to point to new page

*/

last_off = P_FIRSTKEY;

}

/*

* If the new item is the first for its page, stash a copy for later. Note

* this will only happen for the first item on a level; on later pages,

* the first item for a page is copied from the prior page in the code

* above.

*/

if (last_off == P_HIKEY)

{

Assert(state->btps_minkey == NULL);

state->btps_minkey = CopyIndexTuple(itup);

}

/*

* Add the new item into the current page.

*/

last_off = OffsetNumberNext(last_off);

_bt_sortaddtup(npage, itupsz, itup, last_off);

state->btps_page = npage;

state->btps_blkno = nblkno;

state->btps_lastoff = last_off;

}

Definition at line 198 of file nbtsort.c.

References _bt_load(), BTREE_METAPAGE, BTWriteState::btws_pages_alloced, BTWriteState::btws_pages_written, BTWriteState::btws_use_wal, BTWriteState::btws_zeropage, BTSpool::heap, BTWriteState::heap, BTSpool::index, BTWriteState::index, log_btree_build_stats, RelationNeedsWAL, ResetUsage(), ShowUsage(), BTSpool::sortstate, tuplesort_performsort(), and XLogIsNeeded.

Referenced by btbuild().

{

BTWriteState wstate;

#ifdef BTREE_BUILD_STATS

if (log_btree_build_stats)

{

ShowUsage("BTREE BUILD (Spool) STATISTICS");

ResetUsage();

}

#endif /* BTREE_BUILD_STATS */

tuplesort_performsort(btspool->sortstate);

if (btspool2)

tuplesort_performsort(btspool2->sortstate);

wstate.heap = btspool->heap;

wstate.index = btspool->index;

/*

* We need to log index creation in WAL iff WAL archiving/streaming is

* enabled UNLESS the index isn't WAL-logged anyway.

*/

wstate.btws_use_wal = XLogIsNeeded() && RelationNeedsWAL(wstate.index);

/* reserve the metapage */

wstate.btws_pages_alloced = BTREE_METAPAGE + 1;

wstate.btws_pages_written = 0;

wstate.btws_zeropage = NULL; /* until needed */

_bt_load(&wstate, btspool, btspool2);

}

| static void _bt_load | ( | BTWriteState * | wstate, | |

| BTSpool * | btspool, | |||

| BTSpool * | btspool2 | |||

| ) | [static] |

Definition at line 675 of file nbtsort.c.

References _bt_buildadd(), _bt_freeskey(), _bt_mkscankey_nodata(), _bt_pagestate(), _bt_uppershutdown(), DatumGetInt32, FunctionCall2Coll(), i, BTWriteState::index, index_getattr, MAIN_FORKNUM, NULL, pfree(), RelationData::rd_smgr, RelationGetDescr, RelationGetNumberOfAttributes, RelationNeedsWAL, RelationOpenSmgr, SK_BT_DESC, SK_BT_NULLS_FIRST, ScanKeyData::sk_collation, ScanKeyData::sk_flags, ScanKeyData::sk_func, smgrimmedsync(), BTSpool::sortstate, and tuplesort_getindextuple().

Referenced by _bt_leafbuild().

{

BTPageState *state = NULL;

bool merge = (btspool2 != NULL);

IndexTuple itup,

itup2 = NULL;

bool should_free,

should_free2,

load1;

TupleDesc tupdes = RelationGetDescr(wstate->index);

int i,

keysz = RelationGetNumberOfAttributes(wstate->index);

ScanKey indexScanKey = NULL;

if (merge)

{

/*

* Another BTSpool for dead tuples exists. Now we have to merge

* btspool and btspool2.

*/

/* the preparation of merge */

itup = tuplesort_getindextuple(btspool->sortstate,

true, &should_free);

itup2 = tuplesort_getindextuple(btspool2->sortstate,

true, &should_free2);

indexScanKey = _bt_mkscankey_nodata(wstate->index);

for (;;)

{

load1 = true; /* load BTSpool next ? */

if (itup2 == NULL)

{

if (itup == NULL)

break;

}

else if (itup != NULL)

{

for (i = 1; i <= keysz; i++)

{

ScanKey entry;

Datum attrDatum1,

attrDatum2;

bool isNull1,

isNull2;

int32 compare;

entry = indexScanKey + i - 1;

attrDatum1 = index_getattr(itup, i, tupdes, &isNull1);

attrDatum2 = index_getattr(itup2, i, tupdes, &isNull2);

if (isNull1)

{

if (isNull2)

compare = 0; /* NULL "=" NULL */

else if (entry->sk_flags & SK_BT_NULLS_FIRST)

compare = -1; /* NULL "<" NOT_NULL */

else

compare = 1; /* NULL ">" NOT_NULL */

}

else if (isNull2)

{

if (entry->sk_flags & SK_BT_NULLS_FIRST)

compare = 1; /* NOT_NULL ">" NULL */

else

compare = -1; /* NOT_NULL "<" NULL */

}

else

{

compare =

DatumGetInt32(FunctionCall2Coll(&entry->sk_func,

entry->sk_collation,

attrDatum1,

attrDatum2));

if (entry->sk_flags & SK_BT_DESC)

compare = -compare;

}

if (compare > 0)

{

load1 = false;

break;

}

else if (compare < 0)

break;

}

}

else

load1 = false;

/* When we see first tuple, create first index page */

if (state == NULL)

state = _bt_pagestate(wstate, 0);

if (load1)

{

_bt_buildadd(wstate, state, itup);

if (should_free)

pfree(itup);

itup = tuplesort_getindextuple(btspool->sortstate,

true, &should_free);

}

else

{

_bt_buildadd(wstate, state, itup2);

if (should_free2)

pfree(itup2);

itup2 = tuplesort_getindextuple(btspool2->sortstate,

true, &should_free2);

}

}

_bt_freeskey(indexScanKey);

}

else

{

/* merge is unnecessary */

while ((itup = tuplesort_getindextuple(btspool->sortstate,

true, &should_free)) != NULL)

{

/* When we see first tuple, create first index page */

if (state == NULL)

state = _bt_pagestate(wstate, 0);

_bt_buildadd(wstate, state, itup);

if (should_free)

pfree(itup);

}

}

/* Close down final pages and write the metapage */

_bt_uppershutdown(wstate, state);

/*

* If the index is WAL-logged, we must fsync it down to disk before it's

* safe to commit the transaction. (For a non-WAL-logged index we don't

* care since the index will be uninteresting after a crash anyway.)

*

* It's obvious that we must do this when not WAL-logging the build. It's

* less obvious that we have to do it even if we did WAL-log the index

* pages. The reason is that since we're building outside shared buffers,

* a CHECKPOINT occurring during the build has no way to flush the

* previously written data to disk (indeed it won't know the index even

* exists). A crash later on would replay WAL from the checkpoint,

* therefore it wouldn't replay our earlier WAL entries. If we do not

* fsync those pages here, they might still not be on disk when the crash

* occurs.

*/

if (RelationNeedsWAL(wstate->index))

{

RelationOpenSmgr(wstate->index);

smgrimmedsync(wstate->index->rd_smgr, MAIN_FORKNUM);

}

}

| static BTPageState * _bt_pagestate | ( | BTWriteState * | wstate, | |

| uint32 | level | |||

| ) | [static] |

Definition at line 326 of file nbtsort.c.

References _bt_blnewpage(), BTPageState::btps_blkno, BTPageState::btps_full, BTPageState::btps_lastoff, BTPageState::btps_level, BTPageState::btps_minkey, BTPageState::btps_next, BTPageState::btps_page, BTREE_DEFAULT_FILLFACTOR, BTWriteState::btws_pages_alloced, BTWriteState::index, palloc0(), and RelationGetTargetPageFreeSpace.

Referenced by _bt_buildadd(), and _bt_load().

{

BTPageState *state = (BTPageState *) palloc0(sizeof(BTPageState));

/* create initial page for level */

state->btps_page = _bt_blnewpage(level);

/* and assign it a page position */

state->btps_blkno = wstate->btws_pages_alloced++;

state->btps_minkey = NULL;

/* initialize lastoff so first item goes into P_FIRSTKEY */

state->btps_lastoff = P_HIKEY;

state->btps_level = level;

/* set "full" threshold based on level. See notes at head of file. */

if (level > 0)

state->btps_full = (BLCKSZ * (100 - BTREE_NONLEAF_FILLFACTOR) / 100);

else

state->btps_full = RelationGetTargetPageFreeSpace(wstate->index,

BTREE_DEFAULT_FILLFACTOR);

/* no parent level, yet */

state->btps_next = NULL;

return state;

}

| static void _bt_slideleft | ( | Page | page | ) | [static] |

Definition at line 359 of file nbtsort.c.

References OffsetNumberNext, P_FIRSTKEY, P_HIKEY, PageGetItemId, PageGetMaxOffsetNumber, and PageIsEmpty.

Referenced by _bt_uppershutdown().

{

OffsetNumber off;

OffsetNumber maxoff;

ItemId previi;

ItemId thisii;

if (!PageIsEmpty(page))

{

maxoff = PageGetMaxOffsetNumber(page);

previi = PageGetItemId(page, P_HIKEY);

for (off = P_FIRSTKEY; off <= maxoff; off = OffsetNumberNext(off))

{

thisii = PageGetItemId(page, off);

*previi = *thisii;

previi = thisii;

}

((PageHeader) page)->pd_lower -= sizeof(ItemIdData);

}

}

| static void _bt_sortaddtup | ( | Page | page, | |

| Size | itemsize, | |||

| IndexTuple | itup, | |||

| OffsetNumber | itup_off | |||

| ) | [static] |

Definition at line 394 of file nbtsort.c.

References elog, ERROR, InvalidOffsetNumber, P_ISLEAF, PageAddItem(), PageGetSpecialPointer, and IndexTupleData::t_info.

Referenced by _bt_buildadd().

{

BTPageOpaque opaque = (BTPageOpaque) PageGetSpecialPointer(page);

IndexTupleData trunctuple;

if (!P_ISLEAF(opaque) && itup_off == P_FIRSTKEY)

{

trunctuple = *itup;

trunctuple.t_info = sizeof(IndexTupleData);

itup = &trunctuple;

itemsize = sizeof(IndexTupleData);

}

if (PageAddItem(page, (Item) itup, itemsize, itup_off,

false, false) == InvalidOffsetNumber)

elog(ERROR, "failed to add item to the index page");

}

| void _bt_spool | ( | IndexTuple | itup, | |

| BTSpool * | btspool | |||

| ) |

Definition at line 188 of file nbtsort.c.

References BTSpool::sortstate, and tuplesort_putindextuple().

Referenced by btbuildCallback().

{

tuplesort_putindextuple(btspool->sortstate, itup);

}

| void _bt_spooldestroy | ( | BTSpool * | btspool | ) |

Definition at line 178 of file nbtsort.c.

References pfree(), BTSpool::sortstate, and tuplesort_end().

Referenced by btbuild().

{

tuplesort_end(btspool->sortstate);

pfree(btspool);

}

Definition at line 150 of file nbtsort.c.

References BTSpool::heap, BTSpool::index, BTSpool::isunique, maintenance_work_mem, palloc0(), BTSpool::sortstate, tuplesort_begin_index_btree(), and work_mem.

Referenced by btbuild().

{

BTSpool *btspool = (BTSpool *) palloc0(sizeof(BTSpool));

int btKbytes;

btspool->heap = heap;

btspool->index = index;

btspool->isunique = isunique;

/*

* We size the sort area as maintenance_work_mem rather than work_mem to

* speed index creation. This should be OK since a single backend can't

* run multiple index creations in parallel. Note that creation of a

* unique index actually requires two BTSpool objects. We expect that the

* second one (for dead tuples) won't get very full, so we give it only

* work_mem.

*/

btKbytes = isdead ? work_mem : maintenance_work_mem;

btspool->sortstate = tuplesort_begin_index_btree(heap, index, isunique,

btKbytes, false);

return btspool;

}

| static void _bt_uppershutdown | ( | BTWriteState * | wstate, | |

| BTPageState * | state | |||

| ) | [static] |

Definition at line 609 of file nbtsort.c.

References _bt_blwritepage(), _bt_buildadd(), _bt_initmetapage(), _bt_slideleft(), Assert, BTPageOpaqueData::btpo_flags, BTPageState::btps_blkno, BTPageState::btps_level, BTPageState::btps_minkey, BTPageState::btps_next, BTPageState::btps_page, BTREE_METAPAGE, ItemPointerSet, NULL, P_HIKEY, PageGetSpecialPointer, palloc(), pfree(), and IndexTupleData::t_tid.

Referenced by _bt_load().

{

BTPageState *s;

BlockNumber rootblkno = P_NONE;

uint32 rootlevel = 0;

Page metapage;

/*

* Each iteration of this loop completes one more level of the tree.

*/

for (s = state; s != NULL; s = s->btps_next)

{

BlockNumber blkno;

BTPageOpaque opaque;

blkno = s->btps_blkno;

opaque = (BTPageOpaque) PageGetSpecialPointer(s->btps_page);

/*

* We have to link the last page on this level to somewhere.

*

* If we're at the top, it's the root, so attach it to the metapage.

* Otherwise, add an entry for it to its parent using its minimum key.

* This may cause the last page of the parent level to split, but

* that's not a problem -- we haven't gotten to it yet.

*/

if (s->btps_next == NULL)

{

opaque->btpo_flags |= BTP_ROOT;

rootblkno = blkno;

rootlevel = s->btps_level;

}

else

{

Assert(s->btps_minkey != NULL);

ItemPointerSet(&(s->btps_minkey->t_tid), blkno, P_HIKEY);

_bt_buildadd(wstate, s->btps_next, s->btps_minkey);

pfree(s->btps_minkey);

s->btps_minkey = NULL;

}

/*

* This is the rightmost page, so the ItemId array needs to be slid

* back one slot. Then we can dump out the page.

*/

_bt_slideleft(s->btps_page);

_bt_blwritepage(wstate, s->btps_page, s->btps_blkno);

s->btps_page = NULL; /* writepage freed the workspace */

}

/*

* As the last step in the process, construct the metapage and make it

* point to the new root (unless we had no data at all, in which case it's

* set to point to "P_NONE"). This changes the index to the "valid" state

* by filling in a valid magic number in the metapage.

*/

metapage = (Page) palloc(BLCKSZ);

_bt_initmetapage(metapage, rootblkno, rootlevel);

_bt_blwritepage(wstate, metapage, BTREE_METAPAGE);

}

1.7.1

1.7.1