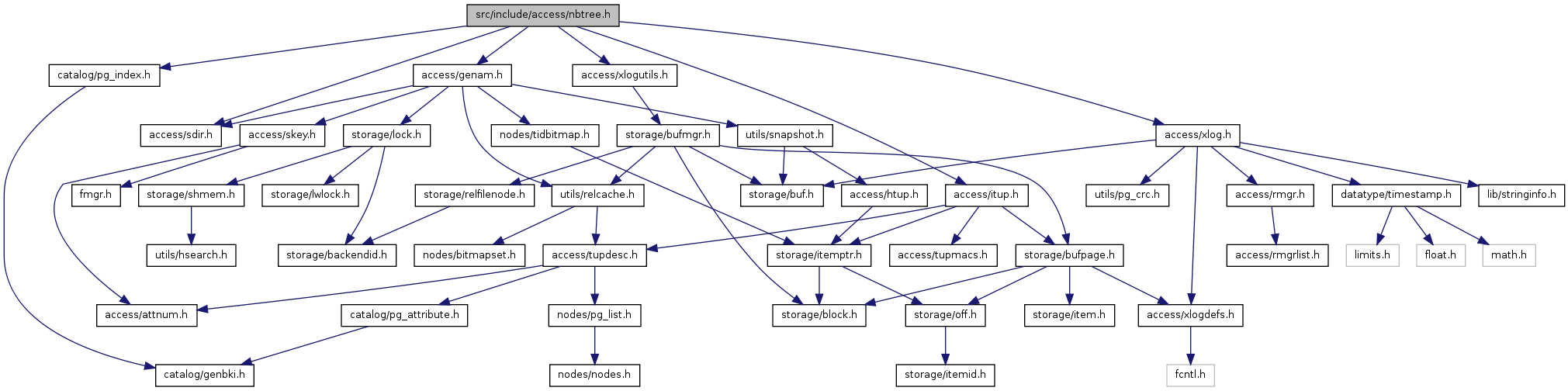

    #include "access/genam.h"
    #include "access/itup.h"
    #include "access/sdir.h"
    #include "access/xlog.h"
    #include "access/xlogutils.h"
    #include "catalog/pg_index.h"


| #define BT_READ BUFFER_LOCK_SHARE |

Definition at line 438 of file nbtree.h.

Referenced by _bt_check_unique(), _bt_first(), _bt_get_endpoint(), _bt_getroot(), _bt_getrootheight(), _bt_gettrueroot(), _bt_killitems(), _bt_next(), _bt_pagedel(), _bt_parent_deletion_safe(), _bt_search(), _bt_steppage(), _bt_walk_left(), btvacuumpage(), and pgstat_btree_page().

| #define BT_WRITE BUFFER_LOCK_EXCLUSIVE |

Definition at line 439 of file nbtree.h.

Referenced by _bt_doinsert(), _bt_findinsertloc(), _bt_getbuf(), _bt_getroot(), _bt_insert_parent(), _bt_insertonpg(), _bt_newroot(), _bt_pagedel(), and _bt_split().

| #define BTCommuteStrategyNumber | ( | strat | ) | (BTMaxStrategyNumber + 1 - (strat)) |

Definition at line 416 of file nbtree.h.

Referenced by _bt_compare_scankey_args(), and _bt_fix_scankey_strategy().
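As a minimal sketch of what the commutation does: assuming the standard B-tree strategy numbering (1 for `<`, 2 for `<=`, 3 for `=`, 4 for `>=`, 5 for `>`, so `BTMaxStrategyNumber` is 5; these values are not listed on this page), the macro maps each operator to the one that applies after its operands are swapped. The helper `commute_strategy` is hypothetical and exists only for illustration.

```c
#include <assert.h>

/* Assumed values: the standard B-tree strategy numbers, not listed on this page. */
#define BTLessStrategyNumber         1
#define BTLessEqualStrategyNumber    2
#define BTEqualStrategyNumber        3
#define BTGreaterEqualStrategyNumber 4
#define BTGreaterStrategyNumber      5
#define BTMaxStrategyNumber          5

/* Copied from the listing above. */
#define BTCommuteStrategyNumber(strat) (BTMaxStrategyNumber + 1 - (strat))

/* Hypothetical helper: strategy to use after swapping the operands of "a op b". */
static int commute_strategy(int strat)
{
    assert(strat >= 1 && strat <= BTMaxStrategyNumber);
    return BTCommuteStrategyNumber(strat);
}
```

For example, `commute_strategy(BTLessStrategyNumber)` yields `BTGreaterStrategyNumber`, and equality maps to itself.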

| #define BTEntrySame | ( | i1, i2 | ) | BTTidSame((i1)->t_tid, (i2)->t_tid) |

Definition at line 155 of file nbtree.h.

Referenced by _bt_getstackbuf().

| #define BTMaxItemSize | ( | page | ) | MAXALIGN_DOWN((PageGetPageSize(page) - MAXALIGN(SizeOfPageHeaderData + 3*sizeof(ItemIdData)) - MAXALIGN(sizeof(BTPageOpaqueData))) / 3) |

Definition at line 117 of file nbtree.h.

Referenced by _bt_buildadd(), and _bt_findinsertloc().
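The arithmetic behind `BTMaxItemSize` can be worked through with concrete numbers. The sketch below assumes an 8-byte maximum alignment, a 24-byte page header, 4-byte line pointers, and a 16-byte `BTPageOpaqueData`; none of these sizes appear on this page, so treat them as illustrative build parameters. The function name `bt_max_item_size` is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Assumed build parameters (typical values; none appear on this page). */
#define ALIGNOF_MAX        8
#define PAGE_HEADER_SIZE   24  /* stand-in for SizeOfPageHeaderData */
#define ITEM_ID_SIZE       4   /* stand-in for sizeof(ItemIdData) */
#define BT_OPAQUE_SIZE     16  /* stand-in for sizeof(BTPageOpaqueData) */

/* Round up / down to a multiple of ALIGNOF_MAX. */
static size_t maxalign(size_t len)
{
    return (len + ALIGNOF_MAX - 1) & ~(size_t) (ALIGNOF_MAX - 1);
}

static size_t maxalign_down(size_t len)
{
    return len & ~(size_t) (ALIGNOF_MAX - 1);
}

/* Same shape as the macro: a page must be able to hold three items. */
static size_t bt_max_item_size(size_t page_size)
{
    size_t overhead = maxalign(PAGE_HEADER_SIZE + 3 * ITEM_ID_SIZE)
                    + maxalign(BT_OPAQUE_SIZE);

    assert(page_size > overhead);
    return maxalign_down((page_size - overhead) / 3);
}
```

Under these assumptions an 8192-byte page gives (8192 - 40 - 16) / 3 = 2712 bytes per item.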

| #define BTORDER_PROC 1 |

Definition at line 430 of file nbtree.h.

Referenced by _bt_first(), _bt_mkscankey(), _bt_mkscankey_nodata(), _bt_sort_array_elements(), assignProcTypes(), ExecIndexBuildScanKeys(), ExecInitExpr(), get_sort_function_for_ordering_op(), load_rangetype_info(), lookup_type_cache(), and MJExamineQuals().

| #define BTP_DELETED (1 << 2) |

Definition at line 71 of file nbtree.h.

Referenced by pgstat_btree_page().

| #define BTP_HALF_DEAD (1 << 4) |

Definition at line 73 of file nbtree.h.

Referenced by pgstat_btree_page().

| #define BTP_LEAF (1 << 0) |

Definition at line 69 of file nbtree.h.

Referenced by _bt_blnewpage(), _bt_getroot(), and btree_xlog_split().

| #define BTP_META (1 << 3) |

Definition at line 72 of file nbtree.h.

Referenced by _bt_getroot(), _bt_getrootheight(), and _bt_gettrueroot().

| #define BTP_ROOT (1 << 1) |

Definition at line 70 of file nbtree.h.

Referenced by _bt_split().

| #define BTP_SPLIT_END (1 << 5) |

Definition at line 74 of file nbtree.h.

Referenced by _bt_split(), and btvacuumpage().

| #define BTPageGetMeta | ( | p | ) | ((BTMetaPageData *) PageGetContents(p)) |

Definition at line 104 of file nbtree.h.

Referenced by _bt_getroot(), _bt_getrootheight(), _bt_gettrueroot(), _bt_initmetapage(), _bt_insertonpg(), _bt_newroot(), _bt_pagedel(), _bt_restore_meta(), bt_metap(), and pgstatindex().

| #define BTREE_DEFAULT_FILLFACTOR 90 |

Definition at line 130 of file nbtree.h.

Referenced by _bt_findsplitloc(), and _bt_pagestate().

| #define BTREE_MAGIC 0x053162 |

Definition at line 108 of file nbtree.h.

Referenced by _bt_getroot(), _bt_getrootheight(), and _bt_gettrueroot().

| #define BTREE_METAPAGE 0 |

Definition at line 107 of file nbtree.h.

Referenced by _bt_getroot(), _bt_getrootheight(), _bt_gettrueroot(), _bt_insertonpg(), _bt_leafbuild(), _bt_newroot(), _bt_pagedel(), _bt_restore_meta(), _bt_uppershutdown(), btbuildempty(), btvacuumscan(), and pgstat_relation().

| #define BTREE_VERSION 2 |

Definition at line 109 of file nbtree.h.

Referenced by _bt_getroot(), _bt_getrootheight(), and _bt_gettrueroot().

| #define BTScanPosIsValid | ( | scanpos | ) | BufferIsValid((scanpos).buf) |

Definition at line 531 of file nbtree.h.

Referenced by btendscan(), btgettuple(), btmarkpos(), btrescan(), and btrestrpos().

| #define BTSORTSUPPORT_PROC 2 |

Definition at line 431 of file nbtree.h.

Referenced by assignProcTypes(), get_sort_function_for_ordering_op(), and MJExamineQuals().

| #define BTTidSame | ( | i1, i2 | ) |

| #define MAX_BT_CYCLE_ID 0xFF7F |

Definition at line 84 of file nbtree.h.

Referenced by _bt_start_vacuum().

| #define P_FIRSTDATAKEY | ( | opaque | ) | (P_RIGHTMOST(opaque) ? P_HIKEY : P_FIRSTKEY) |

Definition at line 202 of file nbtree.h.

Referenced by _bt_binsrch(), _bt_check_unique(), _bt_checkkeys(), _bt_compare(), _bt_endpoint(), _bt_findinsertloc(), _bt_findsplitloc(), _bt_get_endpoint(), _bt_getstackbuf(), _bt_insertonpg(), _bt_killitems(), _bt_pagedel(), _bt_parent_deletion_safe(), _bt_pgaddtup(), _bt_readpage(), _bt_split(), _bt_steppage(), _bt_vacuum_one_page(), btree_xlog_delete_page(), btree_xlog_split(), btvacuumpage(), and pgstat_btree_page().
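A short sketch of how `P_FIRSTDATAKEY` resolves, using the values of `P_NONE`, `P_HIKEY`, `P_FIRSTKEY` and the definition of `P_RIGHTMOST` given on this page. The struct `DemoOpaque` is a simplified stand-in for `BTPageOpaqueData` and the helper `demo_first_data_key` is hypothetical. The point it illustrates: a rightmost page has no right sibling and stores no high key, so its data items start at offset 1 rather than 2.

```c
#include <assert.h>
#include <stdint.h>

typedef uint16_t OffsetNumber;   /* stand-in for the PostgreSQL typedef */
typedef uint32_t BlockNumber;    /* stand-in for the PostgreSQL typedef */

/* Values as listed on this page. */
#define P_NONE      0
#define P_HIKEY     ((OffsetNumber) 1)
#define P_FIRSTKEY  ((OffsetNumber) 2)

/* Simplified stand-in for BTPageOpaqueData: only sibling links are modeled. */
typedef struct DemoOpaque
{
    BlockNumber btpo_prev;
    BlockNumber btpo_next;
} DemoOpaque;

#define P_RIGHTMOST(opaque)    ((opaque)->btpo_next == P_NONE)
#define P_FIRSTDATAKEY(opaque) (P_RIGHTMOST(opaque) ? P_HIKEY : P_FIRSTKEY)

/* Hypothetical helper: first data offset for a page with the given right sibling. */
static OffsetNumber demo_first_data_key(BlockNumber right_sibling)
{
    DemoOpaque opaque = { P_NONE, right_sibling };

    return P_FIRSTDATAKEY(&opaque);
}
```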

| #define P_FIRSTKEY ((OffsetNumber) 2) |

Definition at line 201 of file nbtree.h.

Referenced by _bt_buildadd(), _bt_newroot(), _bt_slideleft(), and btree_xlog_newroot().

| #define P_HAS_GARBAGE | ( | opaque | ) | ((opaque)->btpo_flags & BTP_HAS_GARBAGE) |

Definition at line 180 of file nbtree.h.

Referenced by _bt_findinsertloc().

| #define P_HIKEY ((OffsetNumber) 1) |

Definition at line 200 of file nbtree.h.

Referenced by _bt_buildadd(), _bt_check_unique(), _bt_findinsertloc(), _bt_findsplitloc(), _bt_insert_parent(), _bt_insertonpg(), _bt_moveright(), _bt_newroot(), _bt_pagedel(), _bt_parent_deletion_safe(), _bt_slideleft(), _bt_split(), _bt_uppershutdown(), btree_xlog_delete_page(), btree_xlog_newroot(), and btree_xlog_split().

| #define P_IGNORE | ( | opaque | ) | ((opaque)->btpo_flags & (BTP_DELETED|BTP_HALF_DEAD)) |

Definition at line 179 of file nbtree.h.

Referenced by _bt_check_unique(), _bt_findinsertloc(), _bt_get_endpoint(), _bt_getroot(), _bt_getstackbuf(), _bt_gettrueroot(), _bt_moveright(), _bt_steppage(), btvacuumpage(), GetBTPageStatistics(), and pgstatindex().

| #define P_ISDELETED | ( | opaque | ) | ((opaque)->btpo_flags & BTP_DELETED) |

Definition at line 177 of file nbtree.h.

Referenced by _bt_page_recyclable(), _bt_pagedel(), _bt_walk_left(), bt_page_items(), btvacuumpage(), GetBTPageStatistics(), and pgstatindex().

| #define P_ISHALFDEAD | ( | opaque | ) | ((opaque)->btpo_flags & BTP_HALF_DEAD) |

Definition at line 178 of file nbtree.h.

Referenced by _bt_pagedel(), and btvacuumpage().

| #define P_ISLEAF | ( | opaque | ) | ((opaque)->btpo_flags & BTP_LEAF) |

Definition at line 175 of file nbtree.h.

Referenced by _bt_binsrch(), _bt_compare(), _bt_endpoint(), _bt_findinsertloc(), _bt_findsplitloc(), _bt_insertonpg(), _bt_isequal(), _bt_pgaddtup(), _bt_search(), _bt_sortaddtup(), btvacuumpage(), GetBTPageStatistics(), pgstat_btree_page(), and pgstatindex().

| #define P_ISROOT | ( | opaque | ) | ((opaque)->btpo_flags & BTP_ROOT) |

Definition at line 176 of file nbtree.h.

Referenced by _bt_insertonpg(), _bt_pagedel(), _bt_parent_deletion_safe(), _bt_split(), GetBTPageStatistics(), and pgstatindex().
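The page-status macros are plain bit tests against `btpo_flags`. The following sketch copies a few flag bits and macros from this page and applies them to a simplified stand-in struct (`DemoOpaque`, which models only the flags field); the helper `demo_is_live_leaf` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Flag bits copied from the listing on this page. */
#define BTP_LEAF      (1 << 0)
#define BTP_ROOT      (1 << 1)
#define BTP_DELETED   (1 << 2)
#define BTP_HALF_DEAD (1 << 4)

/* Simplified stand-in for BTPageOpaqueData: only the flags field is modeled. */
typedef struct DemoOpaque
{
    uint16_t btpo_flags;
} DemoOpaque;

/* Macros copied from the listing on this page. */
#define P_ISLEAF(opaque)  ((opaque)->btpo_flags & BTP_LEAF)
#define P_ISROOT(opaque)  ((opaque)->btpo_flags & BTP_ROOT)
#define P_IGNORE(opaque)  ((opaque)->btpo_flags & (BTP_DELETED|BTP_HALF_DEAD))

/* Hypothetical helper: is this a leaf page that is neither deleted nor half-dead? */
static int demo_is_live_leaf(const DemoOpaque *opaque)
{
    return P_ISLEAF(opaque) && !P_IGNORE(opaque);
}
```

Note that each macro yields the masked bits rather than 1, so results should be tested for zero or nonzero, not compared against 1.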

| #define P_LEFTMOST | ( | opaque | ) | ((opaque)->btpo_prev == P_NONE) |

Definition at line 173 of file nbtree.h.

Referenced by _bt_getroot(), _bt_insertonpg(), _bt_walk_left(), and btree_xlog_cleanup().

| #define P_NONE 0 |

Definition at line 167 of file nbtree.h.

Referenced by _bt_get_endpoint(), _bt_getroot(), _bt_getrootheight(), _bt_gettrueroot(), _bt_pagedel(), _bt_steppage(), btbuildempty(), btree_xlog_delete_page(), btree_xlog_split(), btvacuumpage(), and pgstatindex().

| #define P_RIGHTMOST | ( | opaque | ) | ((opaque)->btpo_next == P_NONE) |

Definition at line 174 of file nbtree.h.

Referenced by _bt_check_unique(), _bt_endpoint(), _bt_findinsertloc(), _bt_findsplitloc(), _bt_get_endpoint(), _bt_getroot(), _bt_getstackbuf(), _bt_gettrueroot(), _bt_insertonpg(), _bt_moveright(), _bt_pagedel(), _bt_parent_deletion_safe(), _bt_split(), _bt_walk_left(), btree_xlog_cleanup(), btree_xlog_split(), and btvacuumpage().

| #define SizeOfBtreeDelete (offsetof(xl_btree_delete, nitems) + sizeof(int)) |

Definition at line 326 of file nbtree.h.

Referenced by btree_xlog_delete().
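The `SizeOf...` macros follow a common idiom: `offsetof` of the last fixed field plus that field's size, which gives the length of the fixed part of a record without any trailing struct padding. The sketch below shows the idiom on a made-up layout; `demo_record` is hypothetical and is not the real `xl_btree_delete`.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical record layout, for illustration only. */
typedef struct demo_record
{
    uint64_t target;   /* 8 bytes */
    int32_t  nitems;   /* last fixed field, at offset 8 */
    /* a variable-length payload would follow the fixed part in the log record */
} demo_record;

/* Same idiom as SizeOfBtreeDelete: end of the last fixed field. */
#define SizeOfDemoRecord (offsetof(demo_record, nitems) + sizeof(int32_t))

static size_t demo_fixed_size(void)
{
    return SizeOfDemoRecord;
}

static size_t demo_padded_size(void)
{
    return sizeof(demo_record);
}
```

Here the macro gives 12 bytes, while `sizeof(demo_record)` is typically 16 on platforms that align `uint64_t` to 8 bytes, so using `sizeof` would write padding into every record.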

| #define SizeOfBtreeDeletePage (offsetof(xl_btree_delete_page, btpo_xact) + sizeof(TransactionId)) |

Definition at line 389 of file nbtree.h.

Referenced by btree_xlog_delete_page().

| #define SizeOfBtreeInsert (offsetof(xl_btreetid, tid) + SizeOfIptrData) |

Definition at line 262 of file nbtree.h.

Referenced by btree_xlog_insert().

| #define SizeOfBtreeNewroot (offsetof(xl_btree_newroot, level) + sizeof(uint32)) |

Definition at line 406 of file nbtree.h.

Referenced by btree_xlog_newroot().

| #define SizeOfBtreeReusePage (sizeof(xl_btree_reuse_page)) |

| #define SizeOfBtreeSplit (offsetof(xl_btree_split, firstright) + sizeof(OffsetNumber)) |

Definition at line 308 of file nbtree.h.

Referenced by btree_xlog_split().

| #define SizeOfBtreeVacuum (offsetof(xl_btree_vacuum, lastBlockVacuumed) + sizeof(BlockNumber)) |

Definition at line 370 of file nbtree.h.

Referenced by btree_xlog_vacuum().

| #define SK_BT_DESC (INDOPTION_DESC << SK_BT_INDOPTION_SHIFT) |

Definition at line 593 of file nbtree.h.

Referenced by _bt_check_rowcompare(), _bt_compare(), _bt_compare_scankey_args(), _bt_first(), _bt_fix_scankey_strategy(), _bt_load(), inlineApplySortFunction(), and reversedirection_index_btree().

| #define SK_BT_INDOPTION_SHIFT 24 |

Definition at line 592 of file nbtree.h.

Referenced by _bt_mkscankey().

| #define SK_BT_NULLS_FIRST (INDOPTION_NULLS_FIRST << SK_BT_INDOPTION_SHIFT) |

Definition at line 594 of file nbtree.h.

Referenced by _bt_check_rowcompare(), _bt_checkkeys(), _bt_compare(), _bt_compare_scankey_args(), _bt_first(), _bt_fix_scankey_strategy(), _bt_load(), and inlineApplySortFunction().
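`SK_BT_DESC` and `SK_BT_NULLS_FIRST` are the per-column index option bits shifted into the upper byte of the scan key flags. A minimal sketch, assuming `INDOPTION_DESC` is `0x0001` and `INDOPTION_NULLS_FIRST` is `0x0002` (values from `catalog/pg_index.h` that are not shown on this page); the helper `demo_flags_from_indoption` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed values from catalog/pg_index.h; not listed on this page. */
#define INDOPTION_DESC         0x0001
#define INDOPTION_NULLS_FIRST  0x0002

/* Copied from the listing on this page. */
#define SK_BT_INDOPTION_SHIFT  24
#define SK_BT_DESC         (INDOPTION_DESC << SK_BT_INDOPTION_SHIFT)
#define SK_BT_NULLS_FIRST  (INDOPTION_NULLS_FIRST << SK_BT_INDOPTION_SHIFT)

/* Hypothetical helper: fold a column's indoption bits into scan key flags. */
static uint32_t demo_flags_from_indoption(uint32_t sk_flags, uint16_t indoption)
{
    return sk_flags | ((uint32_t) indoption << SK_BT_INDOPTION_SHIFT);
}
```

Because the option bits land in bits 24 and up, they do not collide with `SK_BT_REQFWD` (`0x00010000`) or `SK_BT_REQBKWD` (`0x00020000`).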

| #define SK_BT_REQBKWD 0x00020000 |

Definition at line 591 of file nbtree.h.

Referenced by _bt_check_rowcompare(), and _bt_checkkeys().

| #define SK_BT_REQFWD 0x00010000 |

Definition at line 590 of file nbtree.h.

Referenced by _bt_check_rowcompare(), _bt_checkkeys(), and _bt_mark_scankey_required().

| #define XLOG_BTREE_DELETE 0x70 |

Definition at line 217 of file nbtree.h.

Referenced by _bt_delitems_delete(), btree_desc(), and btree_redo().

| #define XLOG_BTREE_DELETE_PAGE 0x80 |

Definition at line 218 of file nbtree.h.

Referenced by btree_desc(), and btree_redo().

| #define XLOG_BTREE_DELETE_PAGE_HALF 0xB0 |

Definition at line 221 of file nbtree.h.

Referenced by btree_desc(), btree_redo(), and btree_xlog_delete_page().

| #define XLOG_BTREE_DELETE_PAGE_META 0x90 |

Definition at line 219 of file nbtree.h.

Referenced by btree_desc(), btree_redo(), and btree_xlog_delete_page().

| #define XLOG_BTREE_INSERT_LEAF 0x00 |

Definition at line 210 of file nbtree.h.

Referenced by _bt_insertonpg(), btree_desc(), and btree_redo().

| #define XLOG_BTREE_INSERT_META 0x20 |

Definition at line 212 of file nbtree.h.

Referenced by btree_desc(), and btree_redo().

| #define XLOG_BTREE_INSERT_UPPER 0x10 |

Definition at line 211 of file nbtree.h.

Referenced by btree_desc(), and btree_redo().

| #define XLOG_BTREE_NEWROOT 0xA0 |

Definition at line 220 of file nbtree.h.

Referenced by _bt_getroot(), _bt_newroot(), btree_desc(), and btree_redo().

| #define XLOG_BTREE_REUSE_PAGE 0xD0 |

Definition at line 225 of file nbtree.h.

Referenced by _bt_log_reuse_page(), btree_desc(), and btree_redo().

| #define XLOG_BTREE_SPLIT_L 0x30 |

Definition at line 213 of file nbtree.h.

Referenced by _bt_split(), btree_desc(), and btree_redo().

| #define XLOG_BTREE_SPLIT_L_ROOT 0x50 |

Definition at line 215 of file nbtree.h.

Referenced by _bt_split(), btree_desc(), and btree_redo().

| #define XLOG_BTREE_SPLIT_R 0x40 |

Definition at line 214 of file nbtree.h.

Referenced by btree_desc(), and btree_redo().

| #define XLOG_BTREE_SPLIT_R_ROOT 0x60 |

Definition at line 216 of file nbtree.h.

Referenced by btree_desc(), and btree_redo().

| #define XLOG_BTREE_VACUUM 0xC0 |

Definition at line 223 of file nbtree.h.

Referenced by _bt_delitems_vacuum(), btree_desc(), and btree_redo().

| typedef struct BTArrayKeyInfo BTArrayKeyInfo |

| typedef struct BTMetaPageData BTMetaPageData |

| typedef BTPageOpaqueData* BTPageOpaque |

| typedef struct BTPageOpaqueData BTPageOpaqueData |

| typedef BTScanOpaqueData* BTScanOpaque |

| typedef struct BTScanOpaqueData BTScanOpaqueData |

| typedef BTScanPosData* BTScanPos |

| typedef struct BTScanPosData BTScanPosData |

| typedef struct BTScanPosItem BTScanPosItem |

| typedef BTStackData* BTStack |

| typedef struct BTStackData BTStackData |

| typedef struct xl_btree_delete xl_btree_delete |

| typedef struct xl_btree_delete_page xl_btree_delete_page |

| typedef struct xl_btree_insert xl_btree_insert |

| typedef struct xl_btree_metadata xl_btree_metadata |

| typedef struct xl_btree_newroot xl_btree_newroot |

| typedef struct xl_btree_reuse_page xl_btree_reuse_page |

| typedef struct xl_btree_split xl_btree_split |

| typedef struct xl_btree_vacuum xl_btree_vacuum |

| typedef struct xl_btreetid xl_btreetid |

| bool _bt_advance_array_keys | ( | IndexScanDesc scan, ScanDirection dir | ) |

Definition at line 549 of file nbtutils.c.

References BTScanOpaqueData::arrayKeyData, BTScanOpaqueData::arrayKeys, BTArrayKeyInfo::cur_elem, BTArrayKeyInfo::elem_values, i, BTArrayKeyInfo::num_elems, BTScanOpaqueData::numArrayKeys, IndexScanDescData::opaque, BTArrayKeyInfo::scan_key, ScanDirectionIsBackward, and ScanKeyData::sk_argument.

Referenced by btgetbitmap(), and btgettuple().

    {
        BTScanOpaque so = (BTScanOpaque) scan->opaque;
        bool found = false;
        int i;

        /*
         * We must advance the last array key most quickly, since it will
         * correspond to the lowest-order index column among the available
         * qualifications. This is necessary to ensure correct ordering of output
         * when there are multiple array keys.
         */
        for (i = so->numArrayKeys - 1; i >= 0; i--)
        {
            BTArrayKeyInfo *curArrayKey = &so->arrayKeys[i];
            ScanKey skey = &so->arrayKeyData[curArrayKey->scan_key];
            int cur_elem = curArrayKey->cur_elem;
            int num_elems = curArrayKey->num_elems;

            if (ScanDirectionIsBackward(dir))
            {
                if (--cur_elem < 0)
                {
                    cur_elem = num_elems - 1;
                    found = false; /* need to advance next array key */
                }
                else
                    found = true;
            }
            else
            {
                if (++cur_elem >= num_elems)
                {
                    cur_elem = 0;
                    found = false; /* need to advance next array key */
                }
                else
                    found = true;
            }

            curArrayKey->cur_elem = cur_elem;
            skey->sk_argument = curArrayKey->elem_values[cur_elem];
            if (found)
                break;
        }

        return found;
    }
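The loop in `_bt_advance_array_keys` behaves like an odometer: the last array key advances fastest, and a wraparound carries into the key before it. Below is a stripped-down sketch of the same stepping logic. `DemoArrayKey`, `demo_advance` and `demo_count_combinations` are hypothetical names, and the sketch tracks only element cursors, not scan keys or datum values.

```c
#include <assert.h>
#include <stdbool.h>

/* Simplified stand-in for BTArrayKeyInfo: only the element cursor is modeled. */
typedef struct DemoArrayKey
{
    int cur_elem;
    int num_elems;
} DemoArrayKey;

/* Step to the next combination; returns false once all keys have wrapped. */
static bool demo_advance(DemoArrayKey *keys, int nkeys, bool backward)
{
    for (int i = nkeys - 1; i >= 0; i--)
    {
        DemoArrayKey *k = &keys[i];

        assert(k->num_elems > 0);
        if (backward)
        {
            if (--k->cur_elem >= 0)
                return true;
            k->cur_elem = k->num_elems - 1;   /* wrap, carry into the previous key */
        }
        else
        {
            if (++k->cur_elem < k->num_elems)
                return true;
            k->cur_elem = 0;                  /* wrap, carry into the previous key */
        }
    }
    return false;
}

/* Count the combinations visited by a forward pass over two array keys. */
static int demo_count_combinations(int n0, int n1)
{
    DemoArrayKey keys[2] = { {0, n0}, {0, n1} };
    int count = 1;                            /* the starting combination */

    while (demo_advance(keys, 2, false))
        count++;
    return count;
}
```

With two keys of 2 and 3 elements, a forward pass visits all 6 combinations before `demo_advance` reports that nothing is left.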

| OffsetNumber _bt_binsrch | ( | Relation rel, Buffer buf, int keysz, ScanKey scankey, bool nextkey | ) |

Definition at line 234 of file nbtsearch.c.

References _bt_compare(), Assert, BufferGetPage, OffsetNumberPrev, P_FIRSTDATAKEY, P_ISLEAF, PageGetMaxOffsetNumber, and PageGetSpecialPointer.

Referenced by _bt_doinsert(), _bt_findinsertloc(), _bt_first(), and _bt_search().

    {
        Page page;
        BTPageOpaque opaque;
        OffsetNumber low,
                     high;
        int32 result,
              cmpval;

        page = BufferGetPage(buf);
        opaque = (BTPageOpaque) PageGetSpecialPointer(page);

        low = P_FIRSTDATAKEY(opaque);
        high = PageGetMaxOffsetNumber(page);

        /*
         * If there are no keys on the page, return the first available slot. Note
         * this covers two cases: the page is really empty (no keys), or it
         * contains only a high key. The latter case is possible after vacuuming.
         * This can never happen on an internal page, however, since they are
         * never empty (an internal page must have children).
         */
        if (high < low)
            return low;

        /*
         * Binary search to find the first key on the page >= scan key, or first
         * key > scankey when nextkey is true.
         *
         * For nextkey=false (cmpval=1), the loop invariant is: all slots before
         * 'low' are < scan key, all slots at or after 'high' are >= scan key.
         *
         * For nextkey=true (cmpval=0), the loop invariant is: all slots before
         * 'low' are <= scan key, all slots at or after 'high' are > scan key.
         *
         * We can fall out when high == low.
         */
        high++; /* establish the loop invariant for high */

        cmpval = nextkey ? 0 : 1; /* select comparison value */

        while (high > low)
        {
            OffsetNumber mid = low + ((high - low) / 2);

            /* We have low <= mid < high, so mid points at a real slot */

            result = _bt_compare(rel, keysz, scankey, page, mid);

            if (result >= cmpval)
                low = mid + 1;
            else
                high = mid;
        }

        /*
         * At this point we have high == low, but be careful: they could point
         * past the last slot on the page.
         *
         * On a leaf page, we always return the first key >= scan key (resp. >
         * scan key), which could be the last slot + 1.
         */
        if (P_ISLEAF(opaque))
            return low;

        /*
         * On a non-leaf page, return the last key < scan key (resp. <= scan key).
         * There must be one if _bt_compare() is playing by the rules.
         */
        Assert(low > P_FIRSTDATAKEY(opaque));

        return OffsetNumberPrev(low);
    }
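The `cmpval` trick in `_bt_binsrch` can be tried in isolation on a sorted integer array. This is a sketch of the leaf-page case only, with 0-based indexes instead of page offsets; `demo_compare` and `demo_binsrch` are hypothetical names and no PostgreSQL types are involved.

```c
#include <assert.h>
#include <stdbool.h>

/* Three-way comparison of the search key against an array slot (key vs. item). */
static int demo_compare(int key, int item)
{
    return (key > item) - (key < item);
}

/*
 * Returns the index of the first element >= key (nextkey = false) or the
 * first element > key (nextkey = true). The result is n if no element
 * qualifies.
 */
static int demo_binsrch(const int *items, int n, int key, bool nextkey)
{
    int low = 0;
    int high = n;                    /* one past the last slot */
    int cmpval = nextkey ? 0 : 1;    /* select comparison value */

    assert(n >= 0);
    while (high > low)
    {
        int mid = low + (high - low) / 2;

        /* low <= mid < high, so mid is a valid index */
        if (demo_compare(key, items[mid]) >= cmpval)
            low = mid + 1;
        else
            high = mid;
    }
    return low;
}
```

A single loop serves both search modes: with `cmpval` set to 1, an equal item does not push `low` past it, so the search settles on the first equal item; with `cmpval` set to 0, equal items are skipped.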

| IndexTuple _bt_checkkeys | ( | IndexScanDesc scan, Page page, OffsetNumber offnum, ScanDirection dir, bool * continuescan | ) |

Definition at line 1372 of file nbtutils.c.

References _bt_check_rowcompare(), Assert, DatumGetBool, FunctionCall2Coll(), IndexScanDescData::ignore_killed_tuples, index_getattr, IndexScanDescData::indexRelation, ItemIdIsDead, BTScanOpaqueData::keyData, NULL, BTScanOpaqueData::numberOfKeys, IndexScanDescData::opaque, P_FIRSTDATAKEY, PageGetItem, PageGetItemId, PageGetMaxOffsetNumber, PageGetSpecialPointer, RelationGetDescr, ScanDirectionIsBackward, ScanDirectionIsForward, ScanKeyData::sk_argument, ScanKeyData::sk_attno, SK_BT_NULLS_FIRST, SK_BT_REQBKWD, SK_BT_REQFWD, ScanKeyData::sk_collation, ScanKeyData::sk_flags, ScanKeyData::sk_func, SK_ISNULL, SK_ROW_HEADER, SK_SEARCHNOTNULL, and SK_SEARCHNULL.

Referenced by _bt_readpage().

    {
        ItemId iid = PageGetItemId(page, offnum);
        bool tuple_alive;
        IndexTuple tuple;
        TupleDesc tupdesc;
        BTScanOpaque so;
        int keysz;
        int ikey;
        ScanKey key;

        *continuescan = true; /* default assumption */

        /*
         * If the scan specifies not to return killed tuples, then we treat a
         * killed tuple as not passing the qual. Most of the time, it's a win to
         * not bother examining the tuple's index keys, but just return
         * immediately with continuescan = true to proceed to the next tuple.
         * However, if this is the last tuple on the page, we should check the
         * index keys to prevent uselessly advancing to the next page.
         */
        if (scan->ignore_killed_tuples && ItemIdIsDead(iid))
        {
            /* return immediately if there are more tuples on the page */
            if (ScanDirectionIsForward(dir))
            {
                if (offnum < PageGetMaxOffsetNumber(page))
                    return NULL;
            }
            else
            {
                BTPageOpaque opaque = (BTPageOpaque) PageGetSpecialPointer(page);

                if (offnum > P_FIRSTDATAKEY(opaque))
                    return NULL;
            }

            /*
             * OK, we want to check the keys so we can set continuescan correctly,
             * but we'll return NULL even if the tuple passes the key tests.
             */
            tuple_alive = false;
        }
        else
            tuple_alive = true;

        tuple = (IndexTuple) PageGetItem(page, iid);

        tupdesc = RelationGetDescr(scan->indexRelation);
        so = (BTScanOpaque) scan->opaque;
        keysz = so->numberOfKeys;

        for (key = so->keyData, ikey = 0; ikey < keysz; key++, ikey++)
        {
            Datum datum;
            bool isNull;
            Datum test;

            /* row-comparison keys need special processing */
            if (key->sk_flags & SK_ROW_HEADER)
            {
                if (_bt_check_rowcompare(key, tuple, tupdesc, dir, continuescan))
                    continue;
                return NULL;
            }

            datum = index_getattr(tuple,
                                  key->sk_attno,
                                  tupdesc,
                                  &isNull);

            if (key->sk_flags & SK_ISNULL)
            {
                /* Handle IS NULL/NOT NULL tests */
                if (key->sk_flags & SK_SEARCHNULL)
                {
                    if (isNull)
                        continue; /* tuple satisfies this qual */
                }
                else
                {
                    Assert(key->sk_flags & SK_SEARCHNOTNULL);
                    if (!isNull)
                        continue; /* tuple satisfies this qual */
                }

                /*
                 * Tuple fails this qual. If it's a required qual for the current
                 * scan direction, then we can conclude no further tuples will
                 * pass, either.
                 */
                if ((key->sk_flags & SK_BT_REQFWD) &&
                    ScanDirectionIsForward(dir))
                    *continuescan = false;
                else if ((key->sk_flags & SK_BT_REQBKWD) &&
                         ScanDirectionIsBackward(dir))
                    *continuescan = false;

                /*
                 * In any case, this indextuple doesn't match the qual.
                 */
                return NULL;
            }

            if (isNull)
            {
                if (key->sk_flags & SK_BT_NULLS_FIRST)
                {
                    /*
                     * Since NULLs are sorted before non-NULLs, we know we have
                     * reached the lower limit of the range of values for this
                     * index attr. On a backward scan, we can stop if this qual
                     * is one of the "must match" subset. We can stop regardless
                     * of whether the qual is > or <, so long as it's required,
                     * because it's not possible for any future tuples to pass. On
                     * a forward scan, however, we must keep going, because we may
                     * have initially positioned to the start of the index.
                     */
                    if ((key->sk_flags & (SK_BT_REQFWD | SK_BT_REQBKWD)) &&
                        ScanDirectionIsBackward(dir))
                        *continuescan = false;
                }
                else
                {
                    /*
                     * Since NULLs are sorted after non-NULLs, we know we have
                     * reached the upper limit of the range of values for this
                     * index attr. On a forward scan, we can stop if this qual is
                     * one of the "must match" subset. We can stop regardless of
                     * whether the qual is > or <, so long as it's required,
                     * because it's not possible for any future tuples to pass. On
                     * a backward scan, however, we must keep going, because we
                     * may have initially positioned to the end of the index.
                     */
                    if ((key->sk_flags & (SK_BT_REQFWD | SK_BT_REQBKWD)) &&
                        ScanDirectionIsForward(dir))
                        *continuescan = false;
                }

                /*
                 * In any case, this indextuple doesn't match the qual.
                 */
                return NULL;
            }

            test = FunctionCall2Coll(&key->sk_func, key->sk_collation,
                                     datum, key->sk_argument);

            if (!DatumGetBool(test))
            {
                /*
                 * Tuple fails this qual. If it's a required qual for the current
                 * scan direction, then we can conclude no further tuples will
                 * pass, either.
                 *
                 * Note: because we stop the scan as soon as any required equality
                 * qual fails, it is critical that equality quals be used for the
                 * initial positioning in _bt_first() when they are available. See
                 * comments in _bt_first().
                 */
                if ((key->sk_flags & SK_BT_REQFWD) &&
                    ScanDirectionIsForward(dir))
                    *continuescan = false;
                else if ((key->sk_flags & SK_BT_REQBKWD) &&
                         ScanDirectionIsBackward(dir))
                    *continuescan = false;

                /*
                 * In any case, this indextuple doesn't match the qual.
                 */
                return NULL;
            }
        }

        /* Check for failure due to it being a killed tuple. */
        if (!tuple_alive)
            return NULL;

        /* If we get here, the tuple passes all index quals. */
        return tuple;
    }
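The role of `continuescan` can be seen in a toy forward scan over a sorted array. In this sketch the upper bound plays the part of a required qual (once it fails, no later item can pass) and an even-number test plays the part of a non-required qual (a failure only skips the current item). All names here (`demo_check`, `demo_scan`) are hypothetical and the example does not use any PostgreSQL structures.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Returns true if the item passes; clears *continuescan when the required bound fails. */
static bool demo_check(int item, int upper_bound, bool *continuescan)
{
    *continuescan = true;            /* default assumption */

    if (item > upper_bound)          /* required qual: later items cannot pass either */
    {
        *continuescan = false;
        return false;
    }
    return item % 2 == 0;            /* non-required qual: failure skips this item only */
}

/* Forward scan over sorted input; reports how many items were examined. */
static size_t demo_scan(const int *sorted, size_t n, int upper_bound, size_t *visited)
{
    size_t matches = 0;

    *visited = 0;
    for (size_t i = 0; i < n; i++)
    {
        bool continuescan;

        (*visited)++;
        if (demo_check(sorted[i], upper_bound, &continuescan))
            matches++;
        if (!continuescan)
            break;                   /* the scan can end early */
    }
    return matches;
}
```

Scanning `{1, 2, 4, 7, 8, 10}` with an upper bound of 7 examines five items, returns two matches, and never looks at the last item.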

| void _bt_checkpage | ( | Relation rel, Buffer buf | ) |

Definition at line 492 of file nbtpage.c.

References BufferGetBlockNumber(), BufferGetPage, ereport, errcode(), errhint(), errmsg(), ERROR, MAXALIGN, PageGetSpecialSize, PageIsNew, and RelationGetRelationName.

Referenced by _bt_getbuf(), _bt_relandgetbuf(), and btvacuumpage().

    {
        Page page = BufferGetPage(buf);

        /*
         * ReadBuffer verifies that every newly-read page passes
         * PageHeaderIsValid, which means it either contains a reasonably sane
         * page header or is all-zero. We have to defend against the all-zero
         * case, however.
         */
        if (PageIsNew(page))
            ereport(ERROR,
                    (errcode(ERRCODE_INDEX_CORRUPTED),
                     errmsg("index \"%s\" contains unexpected zero page at block %u",
                            RelationGetRelationName(rel),
                            BufferGetBlockNumber(buf)),
                     errhint("Please REINDEX it.")));

        /*
         * Additionally check that the special area looks sane.
         */
        if (PageGetSpecialSize(page) != MAXALIGN(sizeof(BTPageOpaqueData)))
            ereport(ERROR,
                    (errcode(ERRCODE_INDEX_CORRUPTED),
                     errmsg("index \"%s\" contains corrupted page at block %u",
                            RelationGetRelationName(rel),
                            BufferGetBlockNumber(buf)),
                     errhint("Please REINDEX it.")));
    }

| int32 _bt_compare | ( | Relation rel, int keysz, ScanKey scankey, Page page, OffsetNumber offnum | ) |

Definition at line 339 of file nbtsearch.c.

References DatumGetInt32, FunctionCall2Coll(), i, index_getattr, P_FIRSTDATAKEY, P_ISLEAF, PageGetItem, PageGetItemId, PageGetSpecialPointer, RelationGetDescr, ScanKeyData::sk_argument, ScanKeyData::sk_attno, SK_BT_DESC, SK_BT_NULLS_FIRST, ScanKeyData::sk_collation, ScanKeyData::sk_flags, ScanKeyData::sk_func, and SK_ISNULL.

Referenced by _bt_binsrch(), _bt_findinsertloc(), and _bt_moveright().

    {
        TupleDesc itupdesc = RelationGetDescr(rel);
        BTPageOpaque opaque = (BTPageOpaque) PageGetSpecialPointer(page);
        IndexTuple itup;
        int i;

        /*
         * Force result ">" if target item is first data item on an internal page
         * --- see NOTE above.
         */
        if (!P_ISLEAF(opaque) && offnum == P_FIRSTDATAKEY(opaque))
            return 1;

        itup = (IndexTuple) PageGetItem(page, PageGetItemId(page, offnum));

        /*
         * The scan key is set up with the attribute number associated with each
         * term in the key. It is important that, if the index is multi-key, the
         * scan contain the first k key attributes, and that they be in order. If
         * you think about how multi-key ordering works, you'll understand why
         * this is.
         *
         * We don't test for violation of this condition here, however. The
         * initial setup for the index scan had better have gotten it right (see
         * _bt_first).
         */

        for (i = 1; i <= keysz; i++)
        {
            Datum datum;
            bool isNull;
            int32 result;

            datum = index_getattr(itup, scankey->sk_attno, itupdesc, &isNull);

            /* see comments about NULLs handling in btbuild */
            if (scankey->sk_flags & SK_ISNULL) /* key is NULL */
            {
                if (isNull)
                    result = 0; /* NULL "=" NULL */
                else if (scankey->sk_flags & SK_BT_NULLS_FIRST)
                    result = -1; /* NULL "<" NOT_NULL */
                else
                    result = 1; /* NULL ">" NOT_NULL */
            }
            else if (isNull) /* key is NOT_NULL and item is NULL */
            {
                if (scankey->sk_flags & SK_BT_NULLS_FIRST)
                    result = 1; /* NOT_NULL ">" NULL */
                else
                    result = -1; /* NOT_NULL "<" NULL */
            }
            else
            {
                /*
                 * The sk_func needs to be passed the index value as left arg and
                 * the sk_argument as right arg (they might be of different
                 * types). Since it is convenient for callers to think of
                 * _bt_compare as comparing the scankey to the index item, we have
                 * to flip the sign of the comparison result. (Unless it's a DESC
                 * column, in which case we *don't* flip the sign.)
                 */
                result = DatumGetInt32(FunctionCall2Coll(&scankey->sk_func,
                                                         scankey->sk_collation,
                                                         datum,
                                                         scankey->sk_argument));

                if (!(scankey->sk_flags & SK_BT_DESC))
                    result = -result;
            }

            /* if the keys are unequal, return the difference */
            if (result != 0)
                return result;

            scankey++;
        }

        /* if we get here, the keys are equal */
        return 0;
    }
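The per-column rules in `_bt_compare` (NULL placement first, then the ordinary comparison, with the sign depending on DESC) can be modeled for a single integer column. This is a sketch under simplifying assumptions: `DemoValue` and `demo_compare` are hypothetical, and the result is expressed directly as "scan key versus index item" rather than by calling a support function and flipping its sign.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical nullable integer, standing in for a Datum plus its null flag. */
typedef struct DemoValue
{
    bool is_null;
    int  value;
} DemoValue;

/*
 * Compare a scan key against an index item for one column.
 * Returns <0, 0 or >0 when the key sorts before, equal to, or after the item.
 */
static int demo_compare(DemoValue key, DemoValue item, bool nulls_first, bool desc)
{
    int result;

    if (key.is_null)
    {
        if (item.is_null)
            return 0;                    /* NULL "=" NULL */
        return nulls_first ? -1 : 1;     /* NULL vs. NOT_NULL */
    }
    if (item.is_null)
        return nulls_first ? 1 : -1;     /* NOT_NULL vs. NULL */

    result = (key.value > item.value) - (key.value < item.value);
    return desc ? -result : result;      /* DESC columns sort in reverse */
}
```

NULL placement is decided by the NULLS FIRST flag alone; the DESC flag only affects the comparison of two non-NULL values, as in the listing above.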

| void _bt_delitems_delete | ( | Relation rel, Buffer buf, OffsetNumber * itemnos, int nitems, Relation heapRel | ) |

Definition at line 882 of file nbtpage.c.

References Assert, xl_btree_delete::block, BTPageOpaqueData::btpo_flags, XLogRecData::buffer, XLogRecData::buffer_std, BufferGetBlockNumber(), BufferGetPage, XLogRecData::data, END_CRIT_SECTION, xl_btree_delete::hnode, XLogRecData::len, MarkBufferDirty(), XLogRecData::next, xl_btree_delete::nitems, xl_btree_delete::node, PageGetSpecialPointer, PageIndexMultiDelete(), PageSetLSN, RelationData::rd_node, RelationNeedsWAL, START_CRIT_SECTION, XLOG_BTREE_DELETE, and XLogInsert().

Referenced by _bt_vacuum_one_page().

    {
        Page page = BufferGetPage(buf);
        BTPageOpaque opaque;

        /* Shouldn't be called unless there's something to do */
        Assert(nitems > 0);

        /* No ereport(ERROR) until changes are logged */
        START_CRIT_SECTION();

        /* Fix the page */
        PageIndexMultiDelete(page, itemnos, nitems);

        /*
         * Unlike _bt_delitems_vacuum, we *must not* clear the vacuum cycle ID,
         * because this is not called by VACUUM.
         */

        /*
         * Mark the page as not containing any LP_DEAD items. This is not
         * certainly true (there might be some that have recently been marked, but
         * weren't included in our target-item list), but it will almost always be
         * true and it doesn't seem worth an additional page scan to check it.
         * Remember that BTP_HAS_GARBAGE is only a hint anyway.
         */
        opaque = (BTPageOpaque) PageGetSpecialPointer(page);
        opaque->btpo_flags &= ~BTP_HAS_GARBAGE;

        MarkBufferDirty(buf);

        /* XLOG stuff */
        if (RelationNeedsWAL(rel))
        {
            XLogRecPtr recptr;
            XLogRecData rdata[3];
            xl_btree_delete xlrec_delete;

            xlrec_delete.node = rel->rd_node;
            xlrec_delete.hnode = heapRel->rd_node;
            xlrec_delete.block = BufferGetBlockNumber(buf);
            xlrec_delete.nitems = nitems;

            rdata[0].data = (char *) &xlrec_delete;
            rdata[0].len = SizeOfBtreeDelete;
            rdata[0].buffer = InvalidBuffer;
            rdata[0].next = &(rdata[1]);

            /*
             * We need the target-offsets array whether or not we store the whole
             * buffer, to allow us to find the latestRemovedXid on a standby
             * server.
             */
            rdata[1].data = (char *) itemnos;
            rdata[1].len = nitems * sizeof(OffsetNumber);
            rdata[1].buffer = InvalidBuffer;
            rdata[1].next = &(rdata[2]);

            rdata[2].data = NULL;
            rdata[2].len = 0;
            rdata[2].buffer = buf;
            rdata[2].buffer_std = true;
            rdata[2].next = NULL;

            recptr = XLogInsert(RM_BTREE_ID, XLOG_BTREE_DELETE, rdata);

            PageSetLSN(page, recptr);
        }

        END_CRIT_SECTION();
    }

| void _bt_delitems_vacuum | ( | Relation rel, Buffer buf, OffsetNumber * itemnos, int nitems, BlockNumber lastBlockVacuumed | ) |

Definition at line 794 of file nbtpage.c.

References xl_btree_vacuum::block, BTPageOpaqueData::btpo_cycleid, BTPageOpaqueData::btpo_flags, XLogRecData::buffer, XLogRecData::buffer_std, BufferGetBlockNumber(), BufferGetPage, XLogRecData::data, END_CRIT_SECTION, xl_btree_vacuum::lastBlockVacuumed, XLogRecData::len, MarkBufferDirty(), XLogRecData::next, xl_btree_vacuum::node, PageGetSpecialPointer, PageIndexMultiDelete(), PageSetLSN, RelationData::rd_node, RelationNeedsWAL, START_CRIT_SECTION, XLOG_BTREE_VACUUM, and XLogInsert().

Referenced by btvacuumpage(), and btvacuumscan().

    {
        Page page = BufferGetPage(buf);
        BTPageOpaque opaque;

        /* No ereport(ERROR) until changes are logged */
        START_CRIT_SECTION();

        /* Fix the page */
        if (nitems > 0)
            PageIndexMultiDelete(page, itemnos, nitems);

        /*
         * We can clear the vacuum cycle ID since this page has certainly been
         * processed by the current vacuum scan.
         */
        opaque = (BTPageOpaque) PageGetSpecialPointer(page);
        opaque->btpo_cycleid = 0;

        /*
         * Mark the page as not containing any LP_DEAD items. This is not
         * certainly true (there might be some that have recently been marked, but
         * weren't included in our target-item list), but it will almost always be
         * true and it doesn't seem worth an additional page scan to check it.
         * Remember that BTP_HAS_GARBAGE is only a hint anyway.
         */
        opaque->btpo_flags &= ~BTP_HAS_GARBAGE;

        MarkBufferDirty(buf);

        /* XLOG stuff */
        if (RelationNeedsWAL(rel))
        {
            XLogRecPtr recptr;
            XLogRecData rdata[2];
            xl_btree_vacuum xlrec_vacuum;

            xlrec_vacuum.node = rel->rd_node;
            xlrec_vacuum.block = BufferGetBlockNumber(buf);
            xlrec_vacuum.lastBlockVacuumed = lastBlockVacuumed;

            rdata[0].data = (char *) &xlrec_vacuum;
            rdata[0].len = SizeOfBtreeVacuum;
            rdata[0].buffer = InvalidBuffer;
            rdata[0].next = &(rdata[1]);

            /*
             * The target-offsets array is not in the buffer, but pretend that it
             * is. When XLogInsert stores the whole buffer, the offsets array
             * need not be stored too.
             */
            if (nitems > 0)
            {
                rdata[1].data = (char *) itemnos;
                rdata[1].len = nitems * sizeof(OffsetNumber);
            }
            else
            {
                rdata[1].data = NULL;
                rdata[1].len = 0;
            }
            rdata[1].buffer = buf;
            rdata[1].buffer_std = true;
            rdata[1].next = NULL;

            recptr = XLogInsert(RM_BTREE_ID, XLOG_BTREE_VACUUM, rdata);

            PageSetLSN(page, recptr);
        }

        END_CRIT_SECTION();
    }

| bool _bt_doinsert | ( | Relation rel, IndexTuple itup, IndexUniqueCheck checkUnique, Relation heapRel | ) |

Definition at line 103 of file nbtinsert.c.

References _bt_binsrch(), _bt_check_unique(), _bt_findinsertloc(), _bt_freeskey(), _bt_freestack(), _bt_insertonpg(), _bt_mkscankey(), _bt_moveright(), _bt_relbuf(), _bt_search(), BT_WRITE, buf, BUFFER_LOCK_UNLOCK, CheckForSerializableConflictIn(), LockBuffer(), NULL, RelationData::rd_rel, TransactionIdIsValid, UNIQUE_CHECK_EXISTING, UNIQUE_CHECK_NO, and XactLockTableWait().

Referenced by btinsert().

    {
        bool is_unique = false;
        int natts = rel->rd_rel->relnatts;
        ScanKey itup_scankey;
        BTStack stack;
        Buffer buf;
        OffsetNumber offset;

        /* we need an insertion scan key to do our search, so build one */
        itup_scankey = _bt_mkscankey(rel, itup);

    top:
        /* find the first page containing this key */
        stack = _bt_search(rel, natts, itup_scankey, false, &buf, BT_WRITE);

        offset = InvalidOffsetNumber;

        /* trade in our read lock for a write lock */
        LockBuffer(buf, BUFFER_LOCK_UNLOCK);
        LockBuffer(buf, BT_WRITE);

        /*
         * If the page was split between the time that we surrendered our read
         * lock and acquired our write lock, then this page may no longer be the
         * right place for the key we want to insert. In this case, we need to
         * move right in the tree. See Lehman and Yao for an excruciatingly
         * precise description.
         */
        buf = _bt_moveright(rel, buf, natts, itup_scankey, false, BT_WRITE);

        /*
         * If we're not allowing duplicates, make sure the key isn't already in
         * the index.
         *
         * NOTE: obviously, _bt_check_unique can only detect keys that are already
         * in the index; so it cannot defend against concurrent insertions of the
         * same key. We protect against that by means of holding a write lock on
         * the target page. Any other would-be inserter of the same key must
         * acquire a write lock on the same target page, so only one would-be
         * inserter can be making the check at one time. Furthermore, once we are
         * past the check we hold write locks continuously until we have performed
         * our insertion, so no later inserter can fail to see our insertion.
         * (This requires some care in _bt_insertonpg.)
         *
         * If we must wait for another xact, we release the lock while waiting,
         * and then must start over completely.
         *
         * For a partial uniqueness check, we don't wait for the other xact. Just
         * let the tuple in and return false for possibly non-unique, or true for
         * definitely unique.
         */
        if (checkUnique != UNIQUE_CHECK_NO)
        {
            TransactionId xwait;

            offset = _bt_binsrch(rel, buf, natts, itup_scankey, false);

xwait = _bt_check_unique(rel, itup, heapRel, buf, offset, itup_scankey,

checkUnique, &is_unique);

if (TransactionIdIsValid(xwait))

{

/* Have to wait for the other guy ... */

_bt_relbuf(rel, buf);

XactLockTableWait(xwait);

/* start over... */

_bt_freestack(stack);

goto top;

}

}

if (checkUnique != UNIQUE_CHECK_EXISTING)

{

/*

* The only conflict predicate locking cares about for indexes is when

* an index tuple insert conflicts with an existing lock. Since the

* actual location of the insert is hard to predict because of the

* random search used to prevent O(N^2) performance when there are

* many duplicate entries, we can just use the "first valid" page.

*/

CheckForSerializableConflictIn(rel, NULL, buf);

/* do the insertion */

_bt_findinsertloc(rel, &buf, &offset, natts, itup_scankey, itup, heapRel);

_bt_insertonpg(rel, buf, stack, itup, offset, false);

}

else

{

/* just release the buffer */

_bt_relbuf(rel, buf);

}

/* be tidy */

_bt_freestack(stack);

_bt_freeskey(itup_scankey);

return is_unique;

}

| void _bt_end_vacuum | ( | Relation | rel | ) |

Definition at line 1939 of file nbtutils.c.

References BtreeVacuumLock, LockRelId::dbId, i, LockInfoData::lockRelId, LW_EXCLUSIVE, LWLockAcquire(), LWLockRelease(), BTVacInfo::num_vacuums, RelationData::rd_lockInfo, LockRelId::relId, BTOneVacInfo::relid, and BTVacInfo::vacuums.

Referenced by _bt_end_vacuum_callback(), and btbulkdelete().

{

int i;

LWLockAcquire(BtreeVacuumLock, LW_EXCLUSIVE);

/* Find the array entry */

for (i = 0; i < btvacinfo->num_vacuums; i++)

{

BTOneVacInfo *vac = &btvacinfo->vacuums[i];

if (vac->relid.relId == rel->rd_lockInfo.lockRelId.relId &&

vac->relid.dbId == rel->rd_lockInfo.lockRelId.dbId)

{

/* Remove it by shifting down the last entry */

*vac = btvacinfo->vacuums[btvacinfo->num_vacuums - 1];

btvacinfo->num_vacuums--;

break;

}

}

LWLockRelease(BtreeVacuumLock);

}
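The removal step in the loop above overwrites the matching slot with the last array entry and then shrinks the count, so no other elements need to move. The following is a minimal standalone sketch of that idea. `VacEntry` and `vac_remove` are hypothetical names used only for illustration, and the sketch leaves out the `BtreeVacuumLock` acquisition that protects the real shared array.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for one active-vacuum entry (not the real BTOneVacInfo). */
typedef struct
{
    unsigned db_id;
    unsigned rel_id;
} VacEntry;

/*
 * Remove the entry matching (db_id, rel_id) by copying the last entry over
 * it and decrementing the count. Element order is not preserved.
 * Returns 1 if an entry was removed, 0 if nothing matched.
 */
int
vac_remove(VacEntry *entries, size_t *count, unsigned db_id, unsigned rel_id)
{
    for (size_t i = 0; i < *count; i++)
    {
        if (entries[i].db_id == db_id && entries[i].rel_id == rel_id)
        {
            entries[i] = entries[*count - 1];   /* harmless self-copy if i is last */
            (*count)--;
            return 1;
        }
    }
    return 0;
}
```

Because the array is treated as an unordered set, the removal itself is constant time once the entry is found, and the search is the only linear part.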

| void _bt_end_vacuum_callback | ( | int | code, | |

| Datum | arg | |||

| ) |

Definition at line 1967 of file nbtutils.c.

References _bt_end_vacuum(), and DatumGetPointer.

Referenced by btbulkdelete().

{

_bt_end_vacuum((Relation) DatumGetPointer(arg));

}

| bool _bt_first | ( | IndexScanDesc | scan, | |

| ScanDirection | dir | |||

| ) |

Definition at line 447 of file nbtsearch.c.

References _bt_binsrch(), _bt_endpoint(), _bt_freestack(), _bt_preprocess_keys(), _bt_readpage(), _bt_search(), _bt_steppage(), Assert, BT_READ, BTEqualStrategyNumber, BTGreaterEqualStrategyNumber, BTGreaterStrategyNumber, BTLessEqualStrategyNumber, BTLessStrategyNumber, BTORDER_PROC, BTScanPosData::buf, buf, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferIsValid, cur, BTScanOpaqueData::currPos, BTScanOpaqueData::currTuples, DatumGetPointer, elog, ERROR, get_opfamily_proc(), i, index_getprocinfo(), INDEX_MAX_KEYS, IndexScanDescData::indexRelation, InvalidOid, InvalidStrategy, BTScanPosData::itemIndex, BTScanPosData::items, BTScanOpaqueData::keyData, LockBuffer(), BTScanOpaqueData::markItemIndex, BTScanPosData::moreLeft, BTScanPosData::moreRight, NULL, BTScanOpaqueData::numberOfKeys, BTScanOpaqueData::numKilled, OffsetNumberPrev, IndexScanDescData::opaque, pgstat_count_index_scan, PredicateLockPage(), PredicateLockRelation(), BTScanOpaqueData::qual_ok, RelationData::rd_opcintype, RelationData::rd_opfamily, RegProcedureIsValid, RelationGetRelationName, ScanDirectionIsBackward, ScanDirectionIsForward, ScanKeyEntryInitialize(), ScanKeyEntryInitializeWithInfo(), ScanKeyData::sk_argument, ScanKeyData::sk_attno, SK_BT_DESC, SK_BT_NULLS_FIRST, ScanKeyData::sk_collation, ScanKeyData::sk_flags, SK_ISNULL, SK_ROW_END, SK_ROW_HEADER, SK_ROW_MEMBER, SK_SEARCHNOTNULL, ScanKeyData::sk_strategy, ScanKeyData::sk_subtype, HeapTupleData::t_self, IndexScanDescData::xs_ctup, IndexScanDescData::xs_itup, IndexScanDescData::xs_snapshot, and IndexScanDescData::xs_want_itup.

Referenced by btgetbitmap(), and btgettuple().

{

Relation rel = scan->indexRelation;

BTScanOpaque so = (BTScanOpaque) scan->opaque;

Buffer buf;

BTStack stack;

OffsetNumber offnum;

StrategyNumber strat;

bool nextkey;

bool goback;

ScanKey startKeys[INDEX_MAX_KEYS];

ScanKeyData scankeys[INDEX_MAX_KEYS];

ScanKeyData notnullkeys[INDEX_MAX_KEYS];

int keysCount = 0;

int i;

StrategyNumber strat_total;

BTScanPosItem *currItem;

pgstat_count_index_scan(rel);

/*

* Examine the scan keys and eliminate any redundant keys; also mark the

* keys that must be matched to continue the scan.

*/

_bt_preprocess_keys(scan);

/*

* Quit now if _bt_preprocess_keys() discovered that the scan keys can

* never be satisfied (eg, x == 1 AND x > 2).

*/

if (!so->qual_ok)

return false;

/*----------

* Examine the scan keys to discover where we need to start the scan.

*

* We want to identify the keys that can be used as starting boundaries;

* these are =, >, or >= keys for a forward scan or =, <, <= keys for

* a backwards scan. We can use keys for multiple attributes so long as

* the prior attributes had only =, >= (resp. =, <=) keys. Once we accept

* a > or < boundary or find an attribute with no boundary (which can be

* thought of as the same as "> -infinity"), we can't use keys for any

* attributes to its right, because it would break our simplistic notion

* of what initial positioning strategy to use.

*

* When the scan keys include cross-type operators, _bt_preprocess_keys

* may not be able to eliminate redundant keys; in such cases we will

* arbitrarily pick a usable one for each attribute. This is correct

* but possibly not optimal behavior. (For example, with keys like

* "x >= 4 AND x >= 5" we would elect to scan starting at x=4 when

* x=5 would be more efficient.) Since the situation only arises given

* a poorly-worded query plus an incomplete opfamily, live with it.

*

* When both equality and inequality keys appear for a single attribute

* (again, only possible when cross-type operators appear), we *must*

* select one of the equality keys for the starting point, because

* _bt_checkkeys() will stop the scan as soon as an equality qual fails.

* For example, if we have keys like "x >= 4 AND x = 10" and we elect to

* start at x=4, we will fail and stop before reaching x=10. If multiple

* equality quals survive preprocessing, however, it doesn't matter which

* one we use --- by definition, they are either redundant or

* contradictory.

*

* Any regular (not SK_SEARCHNULL) key implies a NOT NULL qualifier.

* If the index stores nulls at the end of the index we'll be starting

* from, and we have no boundary key for the column (which means the key

* we deduced NOT NULL from is an inequality key that constrains the other

* end of the index), then we cons up an explicit SK_SEARCHNOTNULL key to

* use as a boundary key. If we didn't do this, we might find ourselves

* traversing a lot of null entries at the start of the scan.

*

* In this loop, row-comparison keys are treated the same as keys on their

* first (leftmost) columns. We'll add on lower-order columns of the row

* comparison below, if possible.

*

* The selected scan keys (at most one per index column) are remembered by

* storing their addresses into the local startKeys[] array.

*----------

*/

strat_total = BTEqualStrategyNumber;

if (so->numberOfKeys > 0)

{

AttrNumber curattr;

ScanKey chosen;

ScanKey impliesNN;

ScanKey cur;

/*

* chosen is the so-far-chosen key for the current attribute, if any.

* We don't cast the decision in stone until we reach keys for the

* next attribute.

*/

curattr = 1;

chosen = NULL;

/* Also remember any scankey that implies a NOT NULL constraint */

impliesNN = NULL;

/*

* Loop iterates from 0 to numberOfKeys inclusive; we use the last

* pass to handle after-last-key processing. Actual exit from the

* loop is at one of the "break" statements below.

*/

for (cur = so->keyData, i = 0;; cur++, i++)

{

if (i >= so->numberOfKeys || cur->sk_attno != curattr)

{

/*

* Done looking at keys for curattr. If we didn't find a

* usable boundary key, see if we can deduce a NOT NULL key.

*/

if (chosen == NULL && impliesNN != NULL &&

((impliesNN->sk_flags & SK_BT_NULLS_FIRST) ?

ScanDirectionIsForward(dir) :

ScanDirectionIsBackward(dir)))

{

/* Yes, so build the key in notnullkeys[keysCount] */

chosen = &notnullkeys[keysCount];

ScanKeyEntryInitialize(chosen,

(SK_SEARCHNOTNULL | SK_ISNULL |

(impliesNN->sk_flags &

(SK_BT_DESC | SK_BT_NULLS_FIRST))),

curattr,

((impliesNN->sk_flags & SK_BT_NULLS_FIRST) ?

BTGreaterStrategyNumber :

BTLessStrategyNumber),

InvalidOid,

InvalidOid,

InvalidOid,

(Datum) 0);

}

/*

* If we still didn't find a usable boundary key, quit; else

* save the boundary key pointer in startKeys.

*/

if (chosen == NULL)

break;

startKeys[keysCount++] = chosen;

/*

* Adjust strat_total, and quit if we have stored a > or <

* key.

*/

strat = chosen->sk_strategy;

if (strat != BTEqualStrategyNumber)

{

strat_total = strat;

if (strat == BTGreaterStrategyNumber ||

strat == BTLessStrategyNumber)

break;

}

/*

* Done if that was the last attribute, or if next key is not

* in sequence (implying no boundary key is available for the

* next attribute).

*/

if (i >= so->numberOfKeys ||

cur->sk_attno != curattr + 1)

break;

/*

* Reset for next attr.

*/

curattr = cur->sk_attno;

chosen = NULL;

impliesNN = NULL;

}

/*

* Can we use this key as a starting boundary for this attr?

*

* If not, does it imply a NOT NULL constraint? (Because

* SK_SEARCHNULL keys are always assigned BTEqualStrategyNumber,

* *any* inequality key works for that; we need not test.)

*/

switch (cur->sk_strategy)

{

case BTLessStrategyNumber:

case BTLessEqualStrategyNumber:

if (chosen == NULL)

{

if (ScanDirectionIsBackward(dir))

chosen = cur;

else

impliesNN = cur;

}

break;

case BTEqualStrategyNumber:

/* override any non-equality choice */

chosen = cur;

break;

case BTGreaterEqualStrategyNumber:

case BTGreaterStrategyNumber:

if (chosen == NULL)

{

if (ScanDirectionIsForward(dir))

chosen = cur;

else

impliesNN = cur;

}

break;

}

}

}

/*

* If we found no usable boundary keys, we have to start from one end of

* the tree. Walk down that edge to the first or last key, and scan from

* there.

*/

if (keysCount == 0)

return _bt_endpoint(scan, dir);

/*

* We want to start the scan somewhere within the index. Set up an

* insertion scankey we can use to search for the boundary point we

* identified above. The insertion scankey is built in the local

* scankeys[] array, using the keys identified by startKeys[].

*/

Assert(keysCount <= INDEX_MAX_KEYS);

for (i = 0; i < keysCount; i++)

{

ScanKey cur = startKeys[i];

Assert(cur->sk_attno == i + 1);

if (cur->sk_flags & SK_ROW_HEADER)

{

/*

* Row comparison header: look to the first row member instead.

*

* The member scankeys are already in insertion format (ie, they

* have sk_func = 3-way-comparison function), but we have to watch

* out for nulls, which _bt_preprocess_keys didn't check. A null

* in the first row member makes the condition unmatchable, just

* like qual_ok = false.

*/

ScanKey subkey = (ScanKey) DatumGetPointer(cur->sk_argument);

Assert(subkey->sk_flags & SK_ROW_MEMBER);

if (subkey->sk_flags & SK_ISNULL)

return false;

memcpy(scankeys + i, subkey, sizeof(ScanKeyData));

/*

* If the row comparison is the last positioning key we accepted,

* try to add additional keys from the lower-order row members.

* (If we accepted independent conditions on additional index

* columns, we use those instead --- doesn't seem worth trying to

* determine which is more restrictive.) Note that this is OK

* even if the row comparison is of ">" or "<" type, because the

* condition applied to all but the last row member is effectively

* ">=" or "<=", and so the extra keys don't break the positioning

* scheme. But, by the same token, if we aren't able to use all

* the row members, then the part of the row comparison that we

* did use has to be treated as just a ">=" or "<=" condition, and

* so we'd better adjust strat_total accordingly.

*/

if (i == keysCount - 1)

{

bool used_all_subkeys = false;

Assert(!(subkey->sk_flags & SK_ROW_END));

for (;;)

{

subkey++;

Assert(subkey->sk_flags & SK_ROW_MEMBER);

if (subkey->sk_attno != keysCount + 1)

break; /* out-of-sequence, can't use it */

if (subkey->sk_strategy != cur->sk_strategy)

break; /* wrong direction, can't use it */

if (subkey->sk_flags & SK_ISNULL)

break; /* can't use null keys */

Assert(keysCount < INDEX_MAX_KEYS);

memcpy(scankeys + keysCount, subkey, sizeof(ScanKeyData));

keysCount++;

if (subkey->sk_flags & SK_ROW_END)

{

used_all_subkeys = true;

break;

}

}

if (!used_all_subkeys)

{

switch (strat_total)

{

case BTLessStrategyNumber:

strat_total = BTLessEqualStrategyNumber;

break;

case BTGreaterStrategyNumber:

strat_total = BTGreaterEqualStrategyNumber;

break;

}

}

break; /* done with outer loop */

}

}

else

{

/*

* Ordinary comparison key. Transform the search-style scan key

* to an insertion scan key by replacing the sk_func with the

* appropriate btree comparison function.

*

* If scankey operator is not a cross-type comparison, we can use

* the cached comparison function; otherwise gotta look it up in

* the catalogs. (That can't lead to infinite recursion, since no

* indexscan initiated by syscache lookup will use cross-data-type

* operators.)

*

* We support the convention that sk_subtype == InvalidOid means

* the opclass input type; this is a hack to simplify life for

* ScanKeyInit().

*/

if (cur->sk_subtype == rel->rd_opcintype[i] ||

cur->sk_subtype == InvalidOid)

{

FmgrInfo *procinfo;

procinfo = index_getprocinfo(rel, cur->sk_attno, BTORDER_PROC);

ScanKeyEntryInitializeWithInfo(scankeys + i,

cur->sk_flags,

cur->sk_attno,

InvalidStrategy,

cur->sk_subtype,

cur->sk_collation,

procinfo,

cur->sk_argument);

}

else

{

RegProcedure cmp_proc;

cmp_proc = get_opfamily_proc(rel->rd_opfamily[i],

rel->rd_opcintype[i],

cur->sk_subtype,

BTORDER_PROC);

if (!RegProcedureIsValid(cmp_proc))

elog(ERROR, "missing support function %d(%u,%u) for attribute %d of index \"%s\"",

BTORDER_PROC, rel->rd_opcintype[i], cur->sk_subtype,

cur->sk_attno, RelationGetRelationName(rel));

ScanKeyEntryInitialize(scankeys + i,

cur->sk_flags,

cur->sk_attno,

InvalidStrategy,

cur->sk_subtype,

cur->sk_collation,

cmp_proc,

cur->sk_argument);

}

}

}

/*----------

* Examine the selected initial-positioning strategy to determine exactly

* where we need to start the scan, and set flag variables to control the

* code below.

*

* If nextkey = false, _bt_search and _bt_binsrch will locate the first

* item >= scan key. If nextkey = true, they will locate the first

* item > scan key.

*

* If goback = true, we will then step back one item, while if

* goback = false, we will start the scan on the located item.

*----------

*/

switch (strat_total)

{

case BTLessStrategyNumber:

/*

* Find first item >= scankey, then back up one to arrive at last

* item < scankey. (Note: this positioning strategy is only used

* for a backward scan, so that is always the correct starting

* position.)

*/

nextkey = false;

goback = true;

break;

case BTLessEqualStrategyNumber:

/*

* Find first item > scankey, then back up one to arrive at last

* item <= scankey. (Note: this positioning strategy is only used

* for a backward scan, so that is always the correct starting

* position.)

*/

nextkey = true;

goback = true;

break;

case BTEqualStrategyNumber:

/*

* If a backward scan was specified, need to start with last equal

* item not first one.

*/

if (ScanDirectionIsBackward(dir))

{

/*

* This is the same as the <= strategy. We will check at the

* end whether the found item is actually =.

*/

nextkey = true;

goback = true;

}

else

{

/*

* This is the same as the >= strategy. We will check at the

* end whether the found item is actually =.

*/

nextkey = false;

goback = false;

}

break;

case BTGreaterEqualStrategyNumber:

/*

* Find first item >= scankey. (This is only used for forward

* scans.)

*/

nextkey = false;

goback = false;

break;

case BTGreaterStrategyNumber:

/*

* Find first item > scankey. (This is only used for forward

* scans.)

*/

nextkey = true;

goback = false;

break;

default:

/* can't get here, but keep compiler quiet */

elog(ERROR, "unrecognized strat_total: %d", (int) strat_total);

return false;

}

/*

* Use the manufactured insertion scan key to descend the tree and

* position ourselves on the target leaf page.

*/

stack = _bt_search(rel, keysCount, scankeys, nextkey, &buf, BT_READ);

/* don't need to keep the stack around... */

_bt_freestack(stack);

/* remember which buffer we have pinned, if any */

so->currPos.buf = buf;

if (!BufferIsValid(buf))

{

/*

* We only get here if the index is completely empty. Lock relation

* because nothing finer to lock exists.

*/

PredicateLockRelation(rel, scan->xs_snapshot);

return false;

}

else

PredicateLockPage(rel, BufferGetBlockNumber(buf),

scan->xs_snapshot);

/* initialize moreLeft/moreRight appropriately for scan direction */

if (ScanDirectionIsForward(dir))

{

so->currPos.moreLeft = false;

so->currPos.moreRight = true;

}

else

{

so->currPos.moreLeft = true;

so->currPos.moreRight = false;

}

so->numKilled = 0; /* just paranoia */

so->markItemIndex = -1; /* ditto */

/* position to the precise item on the page */

offnum = _bt_binsrch(rel, buf, keysCount, scankeys, nextkey);

/*

* If nextkey = false, we are positioned at the first item >= scan key, or

* possibly at the end of a page on which all the existing items are less

* than the scan key and we know that everything on later pages is greater

* than or equal to scan key.

*

* If nextkey = true, we are positioned at the first item > scan key, or

* possibly at the end of a page on which all the existing items are less

* than or equal to the scan key and we know that everything on later

* pages is greater than scan key.

*

* The actually desired starting point is either this item or the prior

* one, or in the end-of-page case it's the first item on the next page or

* the last item on this page. Adjust the starting offset if needed. (If

* this results in an offset before the first item or after the last one,

* _bt_readpage will report no items found, and then we'll step to the

* next page as needed.)

*/

if (goback)

offnum = OffsetNumberPrev(offnum);

/*

* Now load data from the first page of the scan.

*/

if (!_bt_readpage(scan, dir, offnum))

{

/*

* There's no actually-matching data on this page. Try to advance to

* the next page. Return false if there's no matching data at all.

*/

if (!_bt_steppage(scan, dir))

return false;

}

/* Drop the lock, but not pin, on the current page */

LockBuffer(so->currPos.buf, BUFFER_LOCK_UNLOCK);

/* OK, itemIndex says what to return */

currItem = &so->currPos.items[so->currPos.itemIndex];

scan->xs_ctup.t_self = currItem->heapTid;

if (scan->xs_want_itup)

scan->xs_itup = (IndexTuple) (so->currTuples + currItem->tupleOffset);

return true;

}
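The `nextkey` and `goback` flags chosen in the `switch (strat_total)` block above can be illustrated on a plain sorted array. This is a simplified sketch, not the nbtree code: `Strategy`, `first_at_or_after`, and `start_position` are hypothetical names, the "page" is a single `int` array, and page boundaries, multi-column keys, and NULL handling are ignored.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical strategy codes, in the same order as the btree strategies. */
typedef enum { STRAT_LT = 1, STRAT_LE, STRAT_EQ, STRAT_GE, STRAT_GT } Strategy;

/*
 * Binary search over a sorted array.
 * nextkey = false: index of the first item >= key.
 * nextkey = true:  index of the first item >  key.
 * Returns n when no such item exists.
 */
int
first_at_or_after(const int *items, int n, int key, bool nextkey)
{
    int lo = 0;
    int hi = n;

    while (lo < hi)
    {
        int  mid = lo + (hi - lo) / 2;
        bool go_right = nextkey ? (items[mid] <= key) : (items[mid] < key);

        if (go_right)
            lo = mid + 1;
        else
            hi = mid;
    }
    return lo;
}

/*
 * Pick the starting index for a scan. A result of -1 means "before the
 * first item" and n means "after the last item"; a caller would then
 * move on to a neighbouring page.
 */
int
start_position(const int *items, int n, int key, Strategy strat, bool backward)
{
    bool nextkey;
    bool goback;

    switch (strat)
    {
        case STRAT_LT: nextkey = false;    goback = true;     break;
        case STRAT_LE: nextkey = true;     goback = true;     break;
        case STRAT_EQ: nextkey = backward; goback = backward; break;
        case STRAT_GE: nextkey = false;    goback = false;    break;
        case STRAT_GT: nextkey = true;     goback = false;    break;
        default:       return -1;          /* unrecognized strategy */
    }

    int pos = first_at_or_after(items, n, key, nextkey);

    return goback ? pos - 1 : pos;
}
```

With the array `{10, 20, 20, 30}` and key 20, a forward equality scan starts at index 1 (the first 20) and a backward equality scan starts at index 2 (the last 20), which matches the "start with last equal item" comment in the source.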

| void _bt_freeskey | ( | ScanKey | skey | ) |

Definition at line 155 of file nbtutils.c.

References pfree().

Referenced by _bt_doinsert(), and _bt_load().

{

pfree(skey);

}

| void _bt_freestack | ( | BTStack | stack | ) |

Definition at line 164 of file nbtutils.c.

References BTStackData::bts_parent, NULL, and pfree().

Referenced by _bt_doinsert(), and _bt_first().

{

BTStack ostack;

while (stack != NULL)

{

ostack = stack;

stack = stack->bts_parent;

pfree(ostack);

}

}
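The loop above reads the parent link before freeing each node, which is the usual way to tear down a singly linked stack without touching freed memory. A minimal standalone sketch, using `malloc`/`free` in place of `palloc`/`pfree` and the hypothetical names `StackNode`, `stack_push`, and `stack_free`:

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for a descent-stack entry (not the real BTStackData). */
typedef struct StackNode
{
    unsigned          blkno;    /* page visited at this level */
    struct StackNode *parent;   /* entry for the level above, or NULL */
} StackNode;

/* Push a new entry on top of `parent` and return it; aborts if out of memory. */
StackNode *
stack_push(StackNode *parent, unsigned blkno)
{
    StackNode *node = malloc(sizeof *node);

    if (node == NULL)
        abort();
    node->blkno = blkno;
    node->parent = parent;
    return node;
}

/* Free every entry from the top down; returns how many entries were freed. */
size_t
stack_free(StackNode *stack)
{
    size_t freed = 0;

    while (stack != NULL)
    {
        StackNode *old = stack;

        stack = stack->parent;  /* save the link before releasing the node */
        free(old);
        freed++;
    }
    return freed;
}
```

Returning the count is only a convenience for checking the sketch; the function in the source returns nothing.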

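| Buffer _bt_get_endpoint | ( | Relation | rel, | |

| uint32 | level, | |||

| bool | rightmost | |||

| ) |

Definition at line 1433 of file nbtsearch.c.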

References _bt_getroot(), _bt_gettrueroot(), _bt_relandgetbuf(), BT_READ, BTPageOpaqueData::btpo, BTPageOpaqueData::btpo_next, buf, BufferGetPage, BufferIsValid, elog, ERROR, ItemPointerGetBlockNumber, BTPageOpaqueData::level, P_FIRSTDATAKEY, P_IGNORE, P_NONE, P_RIGHTMOST, PageGetItem, PageGetItemId, PageGetMaxOffsetNumber, PageGetSpecialPointer, RelationGetRelationName, and IndexTupleData::t_tid.

Referenced by _bt_endpoint(), _bt_insert_parent(), and _bt_pagedel().

{

Buffer buf;

Page page;

BTPageOpaque opaque;

OffsetNumber offnum;

BlockNumber blkno;

IndexTuple itup;

/*

* If we are looking for a leaf page, okay to descend from fast root;

* otherwise better descend from true root. (There is no point in being

* smarter about intermediate levels.)

*/

if (level == 0)

buf = _bt_getroot(rel, BT_READ);

else

buf = _bt_gettrueroot(rel);

if (!BufferIsValid(buf))

return InvalidBuffer;

page = BufferGetPage(buf);

opaque = (BTPageOpaque) PageGetSpecialPointer(page);

for (;;)

{

/*

* If we landed on a deleted page, step right to find a live page

* (there must be one). Also, if we want the rightmost page, step

* right if needed to get to it (this could happen if the page split

* since we obtained a pointer to it).

*/

while (P_IGNORE(opaque) ||

(rightmost && !P_RIGHTMOST(opaque)))

{

blkno = opaque->btpo_next;

if (blkno == P_NONE)

elog(ERROR, "fell off the end of index \"%s\"",

RelationGetRelationName(rel));

buf = _bt_relandgetbuf(rel, buf, blkno, BT_READ);

page = BufferGetPage(buf);

opaque = (BTPageOpaque) PageGetSpecialPointer(page);

}

/* Done? */

if (opaque->btpo.level == level)

break;

if (opaque->btpo.level < level)

elog(ERROR, "btree level %u not found in index \"%s\"",

level, RelationGetRelationName(rel));

/* Descend to leftmost or rightmost child page */

if (rightmost)

offnum = PageGetMaxOffsetNumber(page);

else

offnum = P_FIRSTDATAKEY(opaque);

itup = (IndexTuple) PageGetItem(page, PageGetItemId(page, offnum));

blkno = ItemPointerGetBlockNumber(&(itup->t_tid));

buf = _bt_relandgetbuf(rel, buf, blkno, BT_READ);

page = BufferGetPage(buf);

opaque = (BTPageOpaque) PageGetSpecialPointer(page);

}

return buf;

}
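The descent in the function above follows either the first or the last downlink on each page until it reaches the requested level. The sketch below shows only that core walk on an in-memory tree. `Node` and `get_endpoint` are hypothetical names, and the sketch deliberately omits the buffer locking and the step-right loop that the real code needs to cope with deleted pages and concurrent splits.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical in-memory tree node; level 0 is the leaf level. */
typedef struct Node
{
    unsigned      level;
    int           nchildren;
    struct Node **children;
} Node;

/*
 * Walk from the root to the leftmost or rightmost node on `level`.
 * Returns NULL if the tree has no such level.
 */
const Node *
get_endpoint(const Node *root, unsigned level, bool rightmost)
{
    const Node *node = root;

    while (node != NULL && node->level != level)
    {
        /* Already below the requested level, or nothing to descend into. */
        if (node->level < level || node->nchildren == 0)
            return NULL;

        node = rightmost ? node->children[node->nchildren - 1]
                         : node->children[0];
    }
    return node;
}
```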

| Buffer _bt_getbuf | ( | Relation | rel, | |

| BlockNumber | blkno, | |||

| int | access | |||

| ) |

Definition at line 575 of file nbtpage.c.

References _bt_checkpage(), _bt_log_reuse_page(), _bt_page_recyclable(), _bt_pageinit(), _bt_relbuf(), Assert, BT_WRITE, BTPageOpaqueData::btpo, buf, BufferGetPage, BufferGetPageSize, ConditionalLockBuffer(), DEBUG2, elog, ExclusiveLock, GetFreeIndexPage(), InvalidBlockNumber, LockBuffer(), LockRelationForExtension(), P_NEW, PageGetSpecialPointer, PageIsNew, ReadBuffer(), RELATION_IS_LOCAL, ReleaseBuffer(), UnlockRelationForExtension(), BTPageOpaqueData::xact, and XLogStandbyInfoActive.

Referenced by _bt_getroot(), _bt_getrootheight(), _bt_getstackbuf(), _bt_gettrueroot(), _bt_insertonpg(), _bt_newroot(), _bt_pagedel(), _bt_split(), _bt_steppage(), and _bt_walk_left().

{

Buffer buf;

if (blkno != P_NEW)

{

/* Read an existing block of the relation */

buf = ReadBuffer(rel, blkno);

LockBuffer(buf, access);

_bt_checkpage(rel, buf);

}

else

{

bool needLock;

Page page;

Assert(access == BT_WRITE);

/*

* First see if the FSM knows of any free pages.

*

* We can't trust the FSM's report unreservedly; we have to check that

* the page is still free. (For example, an already-free page could

* have been re-used between the time the last VACUUM scanned it and

* the time the VACUUM made its FSM updates.)

*

* In fact, it's worse than that: we can't even assume that it's safe

* to take a lock on the reported page. If somebody else has a lock

* on it, or even worse our own caller does, we could deadlock. (The

* own-caller scenario is actually not improbable. Consider an index

* on a serial or timestamp column. Nearly all splits will be at the

* rightmost page, so it's entirely likely that _bt_split will call us

* while holding a lock on the page most recently acquired from FSM. A

* VACUUM running concurrently with the previous split could well have

* placed that page back in FSM.)

*

* To get around that, we ask for only a conditional lock on the

* reported page. If we fail, then someone else is using the page,

* and we may reasonably assume it's not free. (If we happen to be

* wrong, the worst consequence is the page will be lost to use till

* the next VACUUM, which is no big problem.)

*/

for (;;)

{

blkno = GetFreeIndexPage(rel);

if (blkno == InvalidBlockNumber)

break;

buf = ReadBuffer(rel, blkno);

if (ConditionalLockBuffer(buf))

{

page = BufferGetPage(buf);

if (_bt_page_recyclable(page))

{

/*

* If we are generating WAL for Hot Standby then create a

* WAL record that will allow us to conflict with queries

* running on standby.

*/

if (XLogStandbyInfoActive())

{

BTPageOpaque opaque = (BTPageOpaque) PageGetSpecialPointer(page);

_bt_log_reuse_page(rel, blkno, opaque->btpo.xact);

}

/* Okay to use page. Re-initialize and return it */

_bt_pageinit(page, BufferGetPageSize(buf));

return buf;

}

elog(DEBUG2, "FSM returned nonrecyclable page");

_bt_relbuf(rel, buf);

}

else

{

elog(DEBUG2, "FSM returned nonlockable page");

/* couldn't get lock, so just drop pin */

ReleaseBuffer(buf);

}

}

/*

* Extend the relation by one page.

*

* We have to use a lock to ensure no one else is extending the rel at

* the same time, else we will both try to initialize the same new

* page. We can skip locking for new or temp relations, however,

* since no one else could be accessing them.

*/

needLock = !RELATION_IS_LOCAL(rel);

if (needLock)

LockRelationForExtension(rel, ExclusiveLock);

buf = ReadBuffer(rel, P_NEW);

/* Acquire buffer lock on new page */

LockBuffer(buf, BT_WRITE);

/*

* Release the file-extension lock; it's now OK for someone else to

* extend the relation some more. Note that we cannot release this

* lock before we have buffer lock on the new page, or we risk a race

* condition against btvacuumscan --- see comments therein.

*/

if (needLock)

UnlockRelationForExtension(rel, ExclusiveLock);

/* Initialize the new page before returning it */

page = BufferGetPage(buf);

Assert(PageIsNew(page));

_bt_pageinit(page, BufferGetPageSize(buf));

}

/* ref count and lock type are correct */

return buf;

}
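The free-page loop above never blocks on a page reported by the FSM: it takes a conditional lock, re-checks that the page is really recyclable, and otherwise moves on to the next candidate or extends the relation. A single-threaded sketch of that control flow follows. `PageSlot`, `try_lock`, and `acquire_free_page` are hypothetical names, a boolean flag stands in for the buffer lock, and a return value of -1 stands in for "extend the relation".

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of a page: a lock flag plus a "safe to reuse" flag. */
typedef struct
{
    bool locked;
    bool recyclable;
} PageSlot;

/* Conditional lock: succeed only if nobody holds the page; never wait. */
bool
try_lock(PageSlot *page)
{
    if (page->locked)
        return false;
    page->locked = true;
    return true;
}

/*
 * Try each candidate page number in turn. Returns the number of a page that
 * is now locked and claimed for reuse, or -1 if no candidate was usable and
 * the caller should allocate a fresh page instead.
 */
int
acquire_free_page(PageSlot *pages, const int *candidates, int ncandidates)
{
    for (int i = 0; i < ncandidates; i++)
    {
        PageSlot *page = &pages[candidates[i]];

        if (!try_lock(page))
            continue;               /* in use by someone: assume it is not free */

        if (page->recyclable)
        {
            page->recyclable = false;   /* claim it; the lock stays held */
            return candidates[i];
        }

        page->locked = false;       /* stale report: unlock and keep looking */
    }
    return -1;
}
```

Skipping a page that could not be locked trades a possibly wasted free page for freedom from deadlock, which is the same trade-off the source comment describes.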

| Buffer _bt_getroot | ( | Relation | rel, | |

| int | access | |||

| ) |

Definition at line 94 of file nbtpage.c.

References _bt_getbuf(), _bt_getroot(), _bt_relandgetbuf(), _bt_relbuf(), Assert, BT_READ, BT_WRITE, BTMetaPageData::btm_fastlevel, BTMetaPageData::btm_fastroot, BTMetaPageData::btm_level, BTMetaPageData::btm_magic, BTMetaPageData::btm_root, BTMetaPageData::btm_version, BTP_LEAF, BTP_META, BTPageGetMeta, BTPageOpaqueData::btpo, BTPageOpaqueData::btpo_cycleid, BTPageOpaqueData::btpo_flags, BTPageOpaqueData::btpo_next, BTPageOpaqueData::btpo_prev, BTREE_MAGIC, BTREE_METAPAGE, BTREE_VERSION, XLogRecData::buffer, BUFFER_LOCK_UNLOCK, BufferGetBlockNumber(), BufferGetPage, CacheInvalidateRelcache(), XLogRecData::data, elog, END_CRIT_SECTION, ereport, errcode(), errmsg(), ERROR, XLogRecData::len, xl_btree_newroot::level, BTPageOpaqueData::level, LockBuffer(), MarkBufferDirty(), MemoryContextAlloc(), XLogRecData::next, xl_btree_newroot::node, NULL, P_IGNORE, P_LEFTMOST, P_NEW, P_NONE, P_RIGHTMOST, PageGetSpecialPointer, PageSetLSN, pfree(), RelationData::rd_amcache, RelationData::rd_indexcxt, RelationData::rd_node, RelationGetRelationName, RelationNeedsWAL, xl_btree_newroot::rootblk, START_CRIT_SECTION, XLOG_BTREE_NEWROOT, and XLogInsert().

Referenced by _bt_get_endpoint(), _bt_getroot(), and _bt_search().

{

Buffer metabuf;

Page metapg;

BTPageOpaque metaopaque;

Buffer rootbuf;

Page rootpage;

BTPageOpaque rootopaque;

BlockNumber rootblkno;

uint32 rootlevel;

BTMetaPageData *metad;

/*

* Try to use previously-cached metapage data to find the root. This

* normally saves one buffer access per index search, which is a very

* helpful savings in bufmgr traffic and hence contention.

*/

if (rel->rd_amcache != NULL)

{

metad = (BTMetaPageData *) rel->rd_amcache;

/* We shouldn't have cached it if any of these fail */

Assert(metad->btm_magic == BTREE_MAGIC);

Assert(metad->btm_version == BTREE_VERSION);

Assert(metad->btm_root != P_NONE);

rootblkno = metad->btm_fastroot;

Assert(rootblkno != P_NONE);

rootlevel = metad->btm_fastlevel;

rootbuf = _bt_getbuf(rel, rootblkno, BT_READ);

rootpage = BufferGetPage(rootbuf);

rootopaque = (BTPageOpaque) PageGetSpecialPointer(rootpage);

/*

* Since the cache might be stale, we check the page more carefully

* here than normal. We *must* check that it's not deleted. If it's

* not alone on its level, then we reject too --- this may be overly

* paranoid but better safe than sorry. Note we don't check P_ISROOT,

* because that's not set in a "fast root".

*/

if (!P_IGNORE(rootopaque) &&

rootopaque->btpo.level == rootlevel &&

P_LEFTMOST(rootopaque) &&

P_RIGHTMOST(rootopaque))

{

/* OK, accept cached page as the root */

return rootbuf;

}

_bt_relbuf(rel, rootbuf);

/* Cache is stale, throw it away */

if (rel->rd_amcache)

pfree(rel->rd_amcache);

rel->rd_amcache = NULL;

}

metabuf = _bt_getbuf(rel, BTREE_METAPAGE, BT_READ);

metapg = BufferGetPage(metabuf);

metaopaque = (BTPageOpaque) PageGetSpecialPointer(metapg);

metad = BTPageGetMeta(metapg);

/* sanity-check the metapage */

if (!(metaopaque->btpo_flags & BTP_META) ||

metad->btm_magic != BTREE_MAGIC)

ereport(ERROR,

(errcode(ERRCODE_INDEX_CORRUPTED),

errmsg("index \"%s\" is not a btree",

RelationGetRelationName(rel))));

if (metad->btm_version != BTREE_VERSION)

ereport(ERROR,

(errcode(ERRCODE_INDEX_CORRUPTED),

errmsg("version mismatch in index \"%s\": file version %d, code version %d",

RelationGetRelationName(rel),

metad->btm_version, BTREE_VERSION)));

/* if no root page initialized yet, do it */

if (metad->btm_root == P_NONE)

{

/* If access = BT_READ, caller doesn't want us to create root yet */

if (access == BT_READ)

{

_bt_relbuf(rel, metabuf);

return InvalidBuffer;

}

/* trade in our read lock for a write lock */

LockBuffer(metabuf, BUFFER_LOCK_UNLOCK);

LockBuffer(metabuf, BT_WRITE);

/*

* Race condition: if someone else initialized the metadata between

* the time we released the read lock and acquired the write lock, we

* must avoid doing it again.

*/

if (metad->btm_root != P_NONE)

{

/*

* Metadata initialized by someone else. In order to guarantee no

* deadlocks, we have to release the metadata page and start all

* over again. (Is that really true? But it's hardly worth trying

* to optimize this case.)

*/

_bt_relbuf(rel, metabuf);

return _bt_getroot(rel, access);

}

/*

* Get, initialize, write, and leave a lock of the appropriate type on

* the new root page. Since this is the first page in the tree, it's

* a leaf as well as the root.

*/

rootbuf = _bt_getbuf(rel, P_NEW, BT_WRITE);

rootblkno = BufferGetBlockNumber(rootbuf);

rootpage = BufferGetPage(rootbuf);

rootopaque = (BTPageOpaque) PageGetSpecialPointer(rootpage);

rootopaque->btpo_prev = rootopaque->btpo_next = P_NONE;

rootopaque->btpo_flags = (BTP_LEAF | BTP_ROOT);

rootopaque->btpo.level = 0;

rootopaque->btpo_cycleid = 0;

/* NO ELOG(ERROR) till meta is updated */

START_CRIT_SECTION();

metad->btm_root = rootblkno;

metad->btm_level = 0;

metad->btm_fastroot = rootblkno;

metad->btm_fastlevel = 0;

MarkBufferDirty(rootbuf);

MarkBufferDirty(metabuf);

/* XLOG stuff */

if (RelationNeedsWAL(rel))

{

xl_btree_newroot xlrec;

XLogRecPtr recptr;

XLogRecData rdata;

xlrec.node = rel->rd_node;

xlrec.rootblk = rootblkno;

xlrec.level = 0;

rdata.data = (char *) &xlrec;

rdata.len = SizeOfBtreeNewroot;

rdata.buffer = InvalidBuffer;

rdata.next = NULL;

recptr = XLogInsert(RM_BTREE_ID, XLOG_BTREE_NEWROOT, &rdata);

PageSetLSN(rootpage, recptr);

PageSetLSN(metapg, recptr);

}

END_CRIT_SECTION();

/*

* Send out relcache inval for metapage change (probably unnecessary

* here, but let's be safe).

*/

CacheInvalidateRelcache(rel);

/*

* swap root write lock for read lock. There is no danger of anyone

* else accessing the new root page while it's unlocked, since no one

* else knows where it is yet.

*/

LockBuffer(rootbuf, BUFFER_LOCK_UNLOCK);

LockBuffer(rootbuf, BT_READ);

/* okay, metadata is correct, release lock on it */

_bt_relbuf(rel, metabuf);

}

else

{

rootblkno = metad->btm_fastroot;

Assert(rootblkno != P_NONE);

rootlevel = metad->btm_fastlevel;

/*

* Cache the metapage data for next time

*/

rel->rd_amcache = MemoryContextAlloc(rel->rd_indexcxt,

sizeof(BTMetaPageData));

memcpy(rel->rd_amcache, metad, sizeof(BTMetaPageData));

/*

* We are done with the metapage; arrange to release it via first

* _bt_relandgetbuf call

*/

rootbuf = metabuf;

for (;;)

{

rootbuf = _bt_relandgetbuf(rel, rootbuf, rootblkno, BT_READ);

rootpage = BufferGetPage(rootbuf);

rootopaque = (BTPageOpaque) PageGetSpecialPointer(rootpage);

if (!P_IGNORE(rootopaque))

break;

/* it's dead, Jim. step right one page */

if (P_RIGHTMOST(rootopaque))

elog(ERROR, "no live root page found in index \"%s\"",

RelationGetRelationName(rel));

rootblkno = rootopaque->btpo_next;

}

/* Note: can't check btpo.level on deleted pages */

if (rootopaque->btpo.level != rootlevel)

elog(ERROR, "root page %u of index \"%s\" has level %u, expected %u",

rootblkno, RelationGetRelationName(rel),

rootopaque->btpo.level, rootlevel);

}

/*

* By here, we have a pin and read lock on the root page, and no lock set

* on the metadata page. Return the root page's buffer.

*/

return rootbuf;

}
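The lock trade in `_bt_getroot()` above (drop the read lock, take the write lock, then re-check `btm_root` before initializing) can be sketched in isolation. This is a minimal single-threaded model, not PostgreSQL code: `ToyMeta`, `toy_lock()`, `toy_unlock()`, `toy_get_root()`, `rival_sets_root()`, and the `RaceHook` callback are all hypothetical names, and the hook only simulates another backend running during the unlocked window.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define TOY_NONE 0u /* hypothetical stand-in for P_NONE: no root yet */

typedef enum { TOY_UNLOCKED, TOY_SHARED, TOY_EXCLUSIVE } ToyLockMode;

typedef struct
{
    ToyLockMode lock; /* toy lock state; the real code uses buffer locks */
    unsigned    root; /* hypothetical stand-in for btm_root */
} ToyMeta;

/* Callback run while the lock is dropped, to simulate a rival backend. */
typedef void (*RaceHook)(ToyMeta *meta);

void toy_lock(ToyMeta *m, ToyLockMode mode)
{
    assert(m->lock == TOY_UNLOCKED);
    m->lock = mode;
}

void toy_unlock(ToyMeta *m)
{
    assert(m->lock != TOY_UNLOCKED);
    m->lock = TOY_UNLOCKED;
}

/* Example hook: a rival initializes the root in the unlocked window. */
void rival_sets_root(ToyMeta *m)
{
    m->root = 42u;
}

/*
 * Return the root, creating it as new_root if none exists.
 * *created reports whether this call did the initialization.
 */
unsigned toy_get_root(ToyMeta *m, unsigned new_root, RaceHook hook, bool *created)
{
    unsigned root;

    *created = false;
    toy_lock(m, TOY_SHARED);
    if (m->root == TOY_NONE)
    {
        /* The trade is not atomic: anything can happen between these calls. */
        toy_unlock(m);
        if (hook != NULL)
            hook(m);
        toy_lock(m, TOY_EXCLUSIVE);

        /* Re-check under the exclusive lock before initializing. */
        if (m->root != TOY_NONE)
        {
            /* Someone else got there first: release and start over. */
            toy_unlock(m);
            return toy_get_root(m, new_root, NULL, created);
        }
        m->root = new_root;
        *created = true;
    }
    root = m->root;
    toy_unlock(m);
    return root;
}
```

Calling `toy_get_root(&meta, 7, rival_sets_root, &created)` on an empty `ToyMeta` takes the restart path and returns the rival's root, leaving `created` false. Restarting from the top mirrors the listing's choice to release the metapage and call `_bt_getroot()` again instead of optimizing the rare race.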

| int _bt_getrootheight | ( | Relation | rel | ) |

Definition at line 424 of file nbtpage.c.

References _bt_getbuf(), _bt_relbuf(), Assert, BT_READ, BTMetaPageData::btm_fastlevel, BTMetaPageData::btm_fastroot, BTMetaPageData::btm_magic, BTMetaPageData::btm_root, BTMetaPageData::btm_version, BTP_META, BTPageGetMeta, BTPageOpaqueData::btpo_flags, BTREE_MAGIC, BTREE_METAPAGE, BTREE_VERSION, BufferGetPage, ereport, errcode(), errmsg(), ERROR, MemoryContextAlloc(), NULL, P_NONE, PageGetSpecialPointer, RelationData::rd_amcache, RelationData::rd_indexcxt, and RelationGetRelationName.

Referenced by get_relation_info().

{

BTMetaPageData *metad;

/*

* We can get what we need from the cached metapage data. If it's not

* cached yet, load it. Sanity checks here must match _bt_getroot().

*/

if (rel->rd_amcache == NULL)

{

Buffer metabuf;

Page metapg;

BTPageOpaque metaopaque;

metabuf = _bt_getbuf(rel, BTREE_METAPAGE, BT_READ);

metapg = BufferGetPage(metabuf);

metaopaque = (BTPageOpaque) PageGetSpecialPointer(metapg);

metad = BTPageGetMeta(metapg);

/* sanity-check the metapage */

if (!(metaopaque->btpo_flags & BTP_META) ||

metad->btm_magic != BTREE_MAGIC)

ereport(ERROR,

(errcode(ERRCODE_INDEX_CORRUPTED),

errmsg("index \"%s\" is not a btree",

RelationGetRelationName(rel))));

if (metad->btm_version != BTREE_VERSION)

ereport(ERROR,

(errcode(ERRCODE_INDEX_CORRUPTED),

errmsg("version mismatch in index \"%s\": file version %d, code version %d",

RelationGetRelationName(rel),

metad->btm_version, BTREE_VERSION)));

/*

* If there's no root page yet, _bt_getroot() doesn't expect a cache

* to be made, so just stop here and report the index height is zero.

* (XXX perhaps _bt_getroot() should be changed to allow this case.)

*/

if (metad->btm_root == P_NONE)

{

_bt_relbuf(rel, metabuf);

return 0;

}

/*

* Cache the metapage data for next time

*/

rel->rd_amcache = MemoryContextAlloc(rel->rd_indexcxt,

sizeof(BTMetaPageData));

memcpy(rel->rd_amcache, metad, sizeof(BTMetaPageData));

_bt_relbuf(rel, metabuf);

}

metad = (BTMetaPageData *) rel->rd_amcache;

/* We shouldn't have cached it if any of these fail */

Assert(metad->btm_magic == BTREE_MAGIC);

Assert(metad->btm_version == BTREE_VERSION);

Assert(metad->btm_fastroot != P_NONE);

return metad->btm_fastlevel;

}
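The cache-on-first-use logic of `_bt_getrootheight()` above can be modeled without any PostgreSQL machinery. The sketch below is an illustration under stated assumptions: `ToyMetaData`, `ToyIndex`, `toy_get_height()`, and the `TOY_*` constants are hypothetical, the magic and version values are made up, and a failed sanity check returns -1 where the real function raises an error through `ereport()`.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define TOY_MAGIC   0xB7EEu /* hypothetical value, not the real BTREE_MAGIC */
#define TOY_VERSION 2u      /* hypothetical value */
#define TOY_NONE    0u      /* hypothetical stand-in for P_NONE */

typedef struct
{
    unsigned magic;
    unsigned version;
    unsigned root;
    unsigned fastroot;
    unsigned fastlevel;
} ToyMetaData;

typedef struct
{
    ToyMetaData  on_disk; /* stands in for the metapage contents */
    ToyMetaData *cache;   /* stands in for rel->rd_amcache */
    int          reads;   /* counts simulated metapage reads */
} ToyIndex;

/* Return the tree height, 0 for an empty index, or -1 on a failed check. */
int toy_get_height(ToyIndex *idx)
{
    if (idx->cache == NULL)
    {
        const ToyMetaData *md = &idx->on_disk;

        idx->reads++; /* simulate reading the metapage */

        /* Sanity checks come first; nothing is cached if they fail. */
        if (md->magic != TOY_MAGIC || md->version != TOY_VERSION)
            return -1;

        /* No root yet: report height zero and leave the cache empty. */
        if (md->root == TOY_NONE)
            return 0;

        /* Cache a private copy of the metadata for next time. */
        idx->cache = malloc(sizeof(ToyMetaData));
        if (idx->cache == NULL)
            return -1;
        memcpy(idx->cache, md, sizeof(ToyMetaData));
    }
    return (int) idx->cache->fastlevel;
}
```

As in the listing, an empty index is deliberately not cached, so a later call re-reads the metadata once a root exists. Every call after the first successful one is answered from the copy.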

| Buffer _bt_getstackbuf | ( | Relation | rel, | BTStack | stack, | int | access | ) |

Definition at line 1724 of file nbtinsert.c.

References _bt_getbuf(), _bt_relbuf(), BTEntrySame, BTPageOpaqueData::btpo_next, BTStackData::bts_blkno, BTStackData::bts_btentry, BTStackData::bts_offset, buf, BufferGetPage, OffsetNumberNext, OffsetNumberPrev, P_FIRSTDATAKEY, P_IGNORE, P_RIGHTMOST, PageGetItem, PageGetItemId, PageGetMaxOffsetNumber, and PageGetSpecialPointer.

Referenced by _bt_insert_parent(), _bt_pagedel(), and _bt_parent_deletion_safe().

{

BlockNumber blkno;

OffsetNumber start;

blkno = stack->bts_blkno;

start = stack->bts_offset;

for (;;)

{

Buffer buf;

Page page;

BTPageOpaque opaque;

buf = _bt_getbuf(rel, blkno, access);