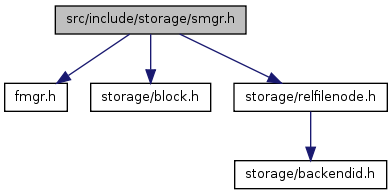

#include "fmgr.h"

#include "storage/block.h"

#include "storage/relfilenode.h"


| #define SmgrIsTemp(smgr) RelFileNodeBackendIsTemp((smgr)->smgr_rnode) |

Definition at line 76 of file smgr.h.

Referenced by mdextend(), mdtruncate(), mdwrite(), ReadBuffer_common(), and register_dirty_segment().
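SmgrIsTemp() is a thin wrapper: a relation counts as temporary when its RelFileNodeBackend carries a valid backend ID. A minimal sketch under stated assumptions: the structs are simplified stand-ins for the real ones in storage/relfilenode.h, InvalidBackendId is taken to be -1, and make_reln() is a hypothetical helper.

```c
#include <assert.h>
#include <stdbool.h>

/* Simplified stand-ins for PostgreSQL types; the layout is illustrative only. */
typedef unsigned int Oid;
typedef int BackendId;
#define InvalidBackendId (-1)

typedef struct { Oid spcNode; Oid dbNode; Oid relNode; } RelFileNode;
typedef struct { RelFileNode node; BackendId backend; } RelFileNodeBackend;
typedef struct { RelFileNodeBackend smgr_rnode; } SMgrRelationData;
typedef SMgrRelationData *SMgrRelation;

/* Temporary (backend-local) iff the relation carries a valid backend ID. */
#define RelFileNodeBackendIsTemp(rnode) ((rnode).backend != InvalidBackendId)
#define SmgrIsTemp(smgr) RelFileNodeBackendIsTemp((smgr)->smgr_rnode)

/* Hypothetical helper: build a relation owned by the given backend. */
static SMgrRelationData make_reln(BackendId backend)
{
    SMgrRelationData r = { { { 1663, 1, 16384 }, backend } };
    return r;
}
```

In md.c this test is what lets mdextend(), mdwrite() and mdtruncate() skip register_dirty_segment() for temporary relations.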

| typedef SMgrRelationData* SMgrRelation |

| typedef struct SMgrRelationData SMgrRelationData |

| void AtEOXact_SMgr(void) |

Definition at line 749 of file smgr.c.

References Assert, NULL, SMgrRelationData::smgr_owner, and smgrclose().

Referenced by AbortTransaction(), BackgroundWriterMain(), CheckpointerMain(), CommitTransaction(), PrepareTransaction(), and WalWriterMain().

{

/*

* Zap all unowned SMgrRelations. We rely on smgrclose() to remove each

* one from the list.

*/

while (first_unowned_reln != NULL)

{

Assert(first_unowned_reln->smgr_owner == NULL);

smgrclose(first_unowned_reln);

}

}
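The loop in AtEOXact_SMgr() terminates only because smgrclose() unlinks the relation from the unowned list before freeing it. A minimal sketch of that contract, using a hypothetical Reln type and reln_close() helper in place of SMgrRelationData and smgrclose():

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical, simplified relation handle kept on an "unowned" list. */
typedef struct Reln {
    void **owner;                 /* NULL when nobody holds a pointer to us */
    struct Reln *next_unowned;
} Reln;

static Reln *first_unowned = NULL;
static int n_closed = 0;

static Reln *reln_open_unowned(void)
{
    Reln *r = calloc(1, sizeof(Reln));
    assert(r != NULL);
    r->next_unowned = first_unowned;   /* push onto the unowned list */
    first_unowned = r;
    return r;
}

/* Like smgrclose(): unlink the handle from the unowned list, then free it. */
static void reln_close(Reln *r)
{
    Reln **link = &first_unowned;
    while (*link != NULL && *link != r)
        link = &(*link)->next_unowned;
    if (*link == r)
        *link = r->next_unowned;
    free(r);
    n_closed++;
}

/* End-of-transaction sweep: terminates only because reln_close() removes the head. */
static void at_eoxact_sweep(void)
{
    while (first_unowned != NULL) {
        assert(first_unowned->owner == NULL);
        reln_close(first_unowned);
    }
}
```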

| void ForgetDatabaseFsyncRequests(Oid dbid) |

Definition at line 1606 of file md.c.

References RelFileNode::dbNode, FORGET_DATABASE_FSYNC, ForwardFsyncRequest(), InvalidForkNumber, IsUnderPostmaster, pg_usleep(), RelFileNode::relNode, RememberFsyncRequest(), and RelFileNode::spcNode.

Referenced by dbase_redo(), and dropdb().

{

RelFileNode rnode;

rnode.dbNode = dbid;

rnode.spcNode = 0;

rnode.relNode = 0;

if (pendingOpsTable)

{

/* standalone backend or startup process: fsync state is local */

RememberFsyncRequest(rnode, InvalidForkNumber, FORGET_DATABASE_FSYNC);

}

else if (IsUnderPostmaster)

{

/* see notes in ForgetRelationFsyncRequests */

while (!ForwardFsyncRequest(rnode, InvalidForkNumber,

FORGET_DATABASE_FSYNC))

pg_usleep(10000L); /* 10 msec seems a good number */

}

}
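ForgetDatabaseFsyncRequests() builds an rnode in which only dbNode is meaningful and tags it FORGET_DATABASE_FSYNC. The sketch below assumes, as we understand RememberFsyncRequest() to do for that tag, that every pending entry with a matching dbNode is cancelled; PendingEntry, database_forget_key() and forget_database() are hypothetical simplifications of the pendingOpsTable machinery.

```c
#include <assert.h>
#include <stdbool.h>

typedef unsigned int Oid;
typedef struct { Oid spcNode; Oid dbNode; Oid relNode; } RelFileNode;

/* Hypothetical pending-request entry: a key plus a "still pending" flag. */
typedef struct { RelFileNode rnode; bool pending; } PendingEntry;

/* Sentinel key for a database-wide forget: only dbNode is meaningful. */
static RelFileNode database_forget_key(Oid dbid)
{
    RelFileNode key = { 0, dbid, 0 };
    return key;
}

/* Cancel every pending entry in the same database; returns the count cancelled. */
static int forget_database(PendingEntry *entries, int n, RelFileNode key)
{
    int cancelled = 0;
    for (int i = 0; i < n; i++) {
        if (entries[i].pending && entries[i].rnode.dbNode == key.dbNode) {
            entries[i].pending = false;
            cancelled++;
        }
    }
    return cancelled;
}
```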

| void ForgetRelationFsyncRequests(RelFileNode rnode, ForkNumber forknum) |

Definition at line 1573 of file md.c.

References FORGET_RELATION_FSYNC, ForwardFsyncRequest(), IsUnderPostmaster, pg_usleep(), and RememberFsyncRequest().

Referenced by mdunlink().

{

if (pendingOpsTable)

{

/* standalone backend or startup process: fsync state is local */

RememberFsyncRequest(rnode, forknum, FORGET_RELATION_FSYNC);

}

else if (IsUnderPostmaster)

{

/*

* Notify the checkpointer about it. If we fail to queue the cancel

* message, we have to sleep and try again ... ugly, but hopefully

* won't happen often.

*

* XXX should we CHECK_FOR_INTERRUPTS in this loop? Escaping with an

* error would leave the no-longer-used file still present on disk,

* which would be bad, so I'm inclined to assume that the checkpointer

* will always empty the queue soon.

*/

while (!ForwardFsyncRequest(rnode, forknum, FORGET_RELATION_FSYNC))

pg_usleep(10000L); /* 10 msec seems a good number */

/*

* Note we don't wait for the checkpointer to actually absorb the

* cancel message; see mdsync() for the implications.

*/

}

}
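Outside the checkpointer, a forget request must reach the shared queue; if the queue is full, ForgetRelationFsyncRequests() sleeps 10 ms and retries without checking for interrupts, for the reasons given in the comment above. A minimal sketch of that retry loop, assuming a hypothetical fixed-size RequestQueue in place of ForwardFsyncRequest() and a drain callback that plays the checkpointer's role:

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <time.h>

/* Hypothetical bounded queue standing in for the checkpointer's request queue. */
#define QUEUE_CAP 2
typedef struct { int items[QUEUE_CAP]; int len; } RequestQueue;

static bool try_forward(RequestQueue *q, int req)
{
    if (q->len >= QUEUE_CAP)
        return false;                  /* queue full: caller must retry */
    q->items[q->len++] = req;
    return true;
}

/* Sleep about 10 ms, mirroring pg_usleep(10000L). */
static void sleep_10ms(void)
{
    struct timespec ts = { 0, 10 * 1000 * 1000 };
    nanosleep(&ts, NULL);
}

/*
 * Retry until the request is queued. 'drain' (may be NULL) simulates the
 * checkpointer emptying the queue. Returns the number of failed attempts.
 */
static int forward_with_retry(RequestQueue *q, int req, void (*drain)(RequestQueue *))
{
    int retries = 0;
    while (!try_forward(q, req)) {
        retries++;
        sleep_10ms();
        if (drain != NULL)
            drain(q);
    }
    return retries;
}

/* Example drain callback: the "checkpointer" absorbs everything queued. */
static void drain_all(RequestQueue *q)
{
    q->len = 0;
}
```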

| void mdclose(SMgrRelation reln, ForkNumber forknum) |

Definition at line 607 of file md.c.

References FileClose(), SMgrRelationData::md_fd, _MdfdVec::mdfd_chain, _MdfdVec::mdfd_vfd, NULL, and pfree().

Referenced by mdexists().

{

MdfdVec *v = reln->md_fd[forknum];

/* No work if already closed */

if (v == NULL)

return;

reln->md_fd[forknum] = NULL; /* prevent dangling pointer after error */

while (v != NULL)

{

MdfdVec *ov = v;

/* if not closed already */

if (v->mdfd_vfd >= 0)

FileClose(v->mdfd_vfd);

/* Now free vector */

v = v->mdfd_chain;

pfree(ov);

}

}
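mdclose() clears reln->md_fd[forknum] before closing anything, so an error partway through cannot leave a pointer to freed segments, and it reads mdfd_chain before freeing each node. A minimal sketch of the same pattern, with a simplified MdfdVec and a hypothetical file_close() that only counts calls instead of fd.c's FileClose():

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Simplified MdfdVec: one open segment file plus a link to the next one. */
typedef struct MdfdVec {
    int mdfd_vfd;                      /* < 0 means "already closed" */
    struct MdfdVec *mdfd_chain;
} MdfdVec;

static int files_closed = 0;

/* Hypothetical stand-in for FileClose(); only counts calls. */
static void file_close(int vfd)
{
    (void) vfd;
    files_closed++;
}

/* Close and free a segment chain, detaching it from its owner first. */
static void close_chain(MdfdVec **head)
{
    MdfdVec *v = *head;
    if (v == NULL)
        return;                        /* already closed */
    *head = NULL;                      /* no dangling pointer if a close fails */
    while (v != NULL) {
        MdfdVec *ov = v;
        if (v->mdfd_vfd >= 0)
            file_close(v->mdfd_vfd);
        v = v->mdfd_chain;             /* read the link before freeing the node */
        free(ov);
    }
}

static MdfdVec *make_seg(int vfd, MdfdVec *next)
{
    MdfdVec *v = malloc(sizeof(MdfdVec));
    assert(v != NULL);
    v->mdfd_vfd = vfd;
    v->mdfd_chain = next;
    return v;
}
```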

| void mdcreate(SMgrRelation reln, ForkNumber forkNum, bool isRedo) |

Definition at line 273 of file md.c.

References _fdvec_alloc(), Assert, ereport, errcode_for_file_access(), errmsg(), ERROR, IsBootstrapProcessingMode, SMgrRelationData::md_fd, _MdfdVec::mdfd_chain, _MdfdVec::mdfd_segno, _MdfdVec::mdfd_vfd, NULL, PathNameOpenFile(), pfree(), PG_BINARY, relpath, and SMgrRelationData::smgr_rnode.

{

char *path;

File fd;

if (isRedo && reln->md_fd[forkNum] != NULL)

return; /* created and opened already... */

Assert(reln->md_fd[forkNum] == NULL);

path = relpath(reln->smgr_rnode, forkNum);

fd = PathNameOpenFile(path, O_RDWR | O_CREAT | O_EXCL | PG_BINARY, 0600);

if (fd < 0)

{

int save_errno = errno;

/*

* During bootstrap, there are cases where a system relation will be

* accessed (by internal backend processes) before the bootstrap

* script nominally creates it. Therefore, allow the file to exist

* already, even if isRedo is not set. (See also mdopen)

*/

if (isRedo || IsBootstrapProcessingMode())

fd = PathNameOpenFile(path, O_RDWR | PG_BINARY, 0600);

if (fd < 0)

{

/* be sure to report the error reported by create, not open */

errno = save_errno;

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not create file \"%s\": %m", path)));

}

}

pfree(path);

reln->md_fd[forkNum] = _fdvec_alloc();

reln->md_fd[forkNum]->mdfd_vfd = fd;

reln->md_fd[forkNum]->mdfd_segno = 0;

reln->md_fd[forkNum]->mdfd_chain = NULL;

}
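mdcreate() first tries an exclusive create and, during redo or bootstrap, falls back to opening an existing file, while still reporting the create error if both attempts fail. A POSIX sketch of that logic: create_or_reopen() is hypothetical, allow_existing stands in for isRedo || IsBootstrapProcessingMode(), and plain open() replaces PathNameOpenFile() (PG_BINARY is omitted because it is 0 on POSIX systems).

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdbool.h>
#include <stdlib.h>
#include <unistd.h>

/*
 * Create 'path' exclusively; if that fails and allow_existing is set, try to
 * open the existing file. On failure returns -1 with errno set to the error
 * from the *create* attempt, as mdcreate() reports it.
 */
static int create_or_reopen(const char *path, bool allow_existing)
{
    int fd = open(path, O_RDWR | O_CREAT | O_EXCL, 0600);
    if (fd >= 0)
        return fd;

    int save_errno = errno;
    if (allow_existing)
        fd = open(path, O_RDWR);
    if (fd < 0)
        errno = save_errno;            /* report the create error, not the open error */
    return fd;
}
```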

| bool mdexists(SMgrRelation reln, ForkNumber forkNum) |

Definition at line 256 of file md.c.

References EXTENSION_RETURN_NULL, mdclose(), mdopen(), and NULL.

{

/*

* Close it first, to ensure that we notice if the fork has been unlinked

* since we opened it.

*/

mdclose(reln, forkNum);

return (mdopen(reln, forkNum, EXTENSION_RETURN_NULL) != NULL);

}
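mdexists() closes the fork before reopening it because an already-open descriptor keeps working after the file is unlinked; only a fresh open by path notices the deletion. A POSIX sketch, assuming a hypothetical fork_exists() that caches a raw file descriptor rather than an MdfdVec and that treats only ENOENT as "does not exist":

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <unistd.h>

/* Returns 1 if the file exists, 0 if it is gone (ENOENT), -1 on other errors. */
static int fork_exists(int *cached_fd, const char *path)
{
    if (*cached_fd >= 0) {
        close(*cached_fd);             /* drop the possibly stale descriptor */
        *cached_fd = -1;
    }
    int fd = open(path, O_RDWR);
    if (fd < 0)
        return errno == ENOENT ? 0 : -1;
    *cached_fd = fd;                   /* cache the fresh descriptor */
    return 1;
}
```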

| void mdextend(SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum, char *buffer, bool skipFsync) |

Definition at line 474 of file md.c.

References _mdfd_getseg(), _mdnblocks(), Assert, ereport, errcode(), errcode_for_file_access(), errhint(), errmsg(), ERROR, EXTENSION_CREATE, FilePathName(), FileSeek(), FileWrite(), InvalidBlockNumber, _MdfdVec::mdfd_vfd, mdnblocks(), register_dirty_segment(), relpath, SMgrRelationData::smgr_rnode, and SmgrIsTemp.

Referenced by _mdfd_getseg().

{

off_t seekpos;

int nbytes;

MdfdVec *v;

/* This assert is too expensive to have on normally ... */

#ifdef CHECK_WRITE_VS_EXTEND

Assert(blocknum >= mdnblocks(reln, forknum));

#endif

/*

* If a relation manages to grow to 2^32-1 blocks, refuse to extend it any

* more --- we mustn't create a block whose number actually is

* InvalidBlockNumber.

*/

if (blocknum == InvalidBlockNumber)

ereport(ERROR,

(errcode(ERRCODE_PROGRAM_LIMIT_EXCEEDED),

errmsg("cannot extend file \"%s\" beyond %u blocks",

relpath(reln->smgr_rnode, forknum),

InvalidBlockNumber)));

v = _mdfd_getseg(reln, forknum, blocknum, skipFsync, EXTENSION_CREATE);

seekpos = (off_t) BLCKSZ *(blocknum % ((BlockNumber) RELSEG_SIZE));

Assert(seekpos < (off_t) BLCKSZ * RELSEG_SIZE);

/*

* Note: because caller usually obtained blocknum by calling mdnblocks,

* which did a seek(SEEK_END), this seek is often redundant and will be

* optimized away by fd.c. It's not redundant, however, if there is a

* partial page at the end of the file. In that case we want to try to

* overwrite the partial page with a full page. It's also not redundant

* if bufmgr.c had to dump another buffer of the same file to make room

* for the new page's buffer.

*/

if (FileSeek(v->mdfd_vfd, seekpos, SEEK_SET) != seekpos)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not seek to block %u in file \"%s\": %m",

blocknum, FilePathName(v->mdfd_vfd))));

if ((nbytes = FileWrite(v->mdfd_vfd, buffer, BLCKSZ)) != BLCKSZ)

{

if (nbytes < 0)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not extend file \"%s\": %m",

FilePathName(v->mdfd_vfd)),

errhint("Check free disk space.")));

/* short write: complain appropriately */

ereport(ERROR,

(errcode(ERRCODE_DISK_FULL),

errmsg("could not extend file \"%s\": wrote only %d of %d bytes at block %u",

FilePathName(v->mdfd_vfd),

nbytes, BLCKSZ, blocknum),

errhint("Check free disk space.")));

}

if (!skipFsync && !SmgrIsTemp(reln))

register_dirty_segment(reln, forknum, v);

Assert(_mdnblocks(reln, forknum, v) <= ((BlockNumber) RELSEG_SIZE));

}
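mdextend(), mdread(), mdwrite() and mdprefetch() all map a relation block number to a segment file and a byte offset inside it. A sketch of that arithmetic, assuming PostgreSQL's default build settings (BLCKSZ = 8192, RELSEG_SIZE = 131072, i.e. 1 GB segments); block_segno(), block_seekpos() and can_extend_to() are hypothetical helper names.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/types.h>

/* Default build settings: 8 kB blocks, 1 GB segments. */
#define BLCKSZ 8192
#define RELSEG_SIZE 131072             /* blocks per segment = 1 GB / BLCKSZ */
typedef uint32_t BlockNumber;
#define InvalidBlockNumber ((BlockNumber) 0xFFFFFFFF)

/* Which segment file holds the block. */
static BlockNumber block_segno(BlockNumber blocknum)
{
    return blocknum / (BlockNumber) RELSEG_SIZE;
}

/* Byte offset of the block within its segment, computed as md.c computes seekpos. */
static off_t block_seekpos(BlockNumber blocknum)
{
    return (off_t) BLCKSZ * (blocknum % (BlockNumber) RELSEG_SIZE);
}

/* mdextend() refuses to create a block numbered InvalidBlockNumber. */
static int can_extend_to(BlockNumber blocknum)
{
    return blocknum != InvalidBlockNumber;
}
```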

| void mdimmedsync(SMgrRelation reln, ForkNumber forknum) |

Definition at line 950 of file md.c.

References ereport, errcode_for_file_access(), errmsg(), ERROR, EXTENSION_FAIL, FilePathName(), FileSync(), _MdfdVec::mdfd_chain, _MdfdVec::mdfd_vfd, mdnblocks(), mdopen(), and NULL.

{

MdfdVec *v;

/*

* NOTE: mdnblocks makes sure we have opened all active segments, so that

* fsync loop will get them all!

*/

mdnblocks(reln, forknum);

v = mdopen(reln, forknum, EXTENSION_FAIL);

while (v != NULL)

{

if (FileSync(v->mdfd_vfd) < 0)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not fsync file \"%s\": %m",

FilePathName(v->mdfd_vfd))));

v = v->mdfd_chain;

}

}
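mdimmedsync() calls mdnblocks() first so that every active segment is open, then walks the chain and fsyncs each file. A POSIX sketch with a hypothetical Seg list holding raw file descriptors; where md.c raises ERROR, this sketch prints the errno message and returns -1.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

/* Hypothetical segment chain: one file descriptor per open segment. */
typedef struct Seg { int fd; struct Seg *next; } Seg;

/*
 * fsync every segment in the chain. The caller must already have opened all
 * active segments (md.c ensures this via mdnblocks()).
 * Returns 0 on success, -1 at the first failure.
 */
static int sync_chain(const Seg *v)
{
    for (; v != NULL; v = v->next) {
        if (fsync(v->fd) < 0) {
            perror("fsync");
            return -1;
        }
    }
    return 0;
}
```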

| void mdinit(void) |

Definition at line 196 of file md.c.

References ALLOCSET_DEFAULT_INITSIZE, ALLOCSET_DEFAULT_MAXSIZE, ALLOCSET_DEFAULT_MINSIZE, AllocSetContextCreate(), AmCheckpointerProcess, AmStartupProcess, HASHCTL::entrysize, HASHCTL::hash, HASH_CONTEXT, hash_create(), HASH_ELEM, HASH_FUNCTION, HASHCTL::hcxt, IsUnderPostmaster, HASHCTL::keysize, MemSet, and TopMemoryContext.

{

MdCxt = AllocSetContextCreate(TopMemoryContext,

"MdSmgr",

ALLOCSET_DEFAULT_MINSIZE,

ALLOCSET_DEFAULT_INITSIZE,

ALLOCSET_DEFAULT_MAXSIZE);

/*

* Create pending-operations hashtable if we need it. Currently, we need

* it if we are standalone (not under a postmaster) or if we are a startup

* or checkpointer auxiliary process.

*/

if (!IsUnderPostmaster || AmStartupProcess() || AmCheckpointerProcess())

{

HASHCTL hash_ctl;

MemSet(&hash_ctl, 0, sizeof(hash_ctl));

hash_ctl.keysize = sizeof(RelFileNode);

hash_ctl.entrysize = sizeof(PendingOperationEntry);

hash_ctl.hash = tag_hash;

hash_ctl.hcxt = MdCxt;

pendingOpsTable = hash_create("Pending Ops Table",

100L,

&hash_ctl,

HASH_ELEM | HASH_FUNCTION | HASH_CONTEXT);

pendingUnlinks = NIL;

}

}
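The condition in mdinit() decides which processes track fsync requests locally; every other process forwards them, which is why ForgetDatabaseFsyncRequests() and ForgetRelationFsyncRequests() test pendingOpsTable first. A sketch of the predicate, with a hypothetical ProcessRole struct standing in for IsUnderPostmaster, AmStartupProcess() and AmCheckpointerProcess():

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical summary of the process flags mdinit() consults. */
typedef struct {
    bool under_postmaster;             /* IsUnderPostmaster */
    bool is_startup;                   /* AmStartupProcess() */
    bool is_checkpointer;              /* AmCheckpointerProcess() */
} ProcessRole;

/* Only processes that absorb fsync requests locally need a pendingOpsTable. */
static bool needs_pending_ops_table(ProcessRole r)
{
    return !r.under_postmaster || r.is_startup || r.is_checkpointer;
}
```

A regular backend gets false here, so it takes the ForwardFsyncRequest() path instead.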

| BlockNumber mdnblocks(SMgrRelation reln, ForkNumber forknum) |

Definition at line 795 of file md.c.

References _mdfd_openseg(), _mdfd_segpath(), _mdnblocks(), elog, ereport, errcode_for_file_access(), errmsg(), ERROR, EXTENSION_FAIL, FATAL, _MdfdVec::mdfd_chain, mdopen(), and NULL.

Referenced by mdextend(), mdimmedsync(), mdtruncate(), and mdwrite().

{

MdfdVec *v = mdopen(reln, forknum, EXTENSION_FAIL);

BlockNumber nblocks;

BlockNumber segno = 0;

/*

* Skip through any segments that aren't the last one, to avoid redundant

* seeks on them. We have previously verified that these segments are

* exactly RELSEG_SIZE long, and it's useless to recheck that each time.

*

* NOTE: this assumption could only be wrong if another backend has

* truncated the relation. We rely on higher code levels to handle that

* scenario by closing and re-opening the md fd, which is handled via

* relcache flush. (Since the checkpointer doesn't participate in

* relcache flush, it could have segment chain entries for inactive

* segments; that's OK because the checkpointer never needs to compute

* relation size.)

*/

while (v->mdfd_chain != NULL)

{

segno++;

v = v->mdfd_chain;

}

for (;;)

{

nblocks = _mdnblocks(reln, forknum, v);

if (nblocks > ((BlockNumber) RELSEG_SIZE))

elog(FATAL, "segment too big");

if (nblocks < ((BlockNumber) RELSEG_SIZE))

return (segno * ((BlockNumber) RELSEG_SIZE)) + nblocks;

/*

* If segment is exactly RELSEG_SIZE, advance to next one.

*/

segno++;

if (v->mdfd_chain == NULL)

{

/*

* Because we pass O_CREAT, we will create the next segment (with

* zero length) immediately, if the last segment is of length

* RELSEG_SIZE. While perhaps not strictly necessary, this keeps

* the logic simple.

*/

v->mdfd_chain = _mdfd_openseg(reln, forknum, segno, O_CREAT);

if (v->mdfd_chain == NULL)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not open file \"%s\": %m",

_mdfd_segpath(reln, forknum, segno))));

}

v = v->mdfd_chain;

}

}
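mdnblocks() computes the relation size as the number of full segments times RELSEG_SIZE, plus the block count of the first segment that is not full. A sketch of that rule over an array of per-segment block counts; relation_nblocks() is hypothetical, takes the segment size as a parameter for testability, and, unlike md.c, checks every segment instead of trusting the ones already verified.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t BlockNumber;

/*
 * Relation size from per-segment block counts. The first non-full segment
 * ends the relation; if every listed segment is full, the next one is new
 * and empty (md.c creates it on the spot with O_CREAT).
 * Returns -1 for an oversized segment (md.c: elog(FATAL, "segment too big")).
 */
static int64_t relation_nblocks(const BlockNumber *seg_blocks, int nsegs, BlockNumber segsize)
{
    for (int segno = 0; segno < nsegs; segno++) {
        BlockNumber n = seg_blocks[segno];
        if (n > segsize)
            return -1;
        if (n < segsize)
            return (int64_t) segno * segsize + n;
    }
    return (int64_t) nsegs * segsize;  /* new empty segment adds 0 blocks */
}
```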

| void mdpostckpt(void) |

Definition at line 1282 of file md.c.

References AbsorbFsyncRequests(), PendingUnlinkEntry::cycle_ctr, ereport, errcode_for_file_access(), errmsg(), linitial, list_delete_first(), MAIN_FORKNUM, mdckpt_cycle_ctr, NIL, pfree(), relpathperm, PendingUnlinkEntry::rnode, unlink(), and WARNING.

{

int absorb_counter;

absorb_counter = UNLINKS_PER_ABSORB;

while (pendingUnlinks != NIL)

{

PendingUnlinkEntry *entry = (PendingUnlinkEntry *) linitial(pendingUnlinks);

char *path;

/*

* New entries are appended to the end, so if the entry is new we've

* reached the end of old entries.

*

* Note: if just the right number of consecutive checkpoints fail, we

* could be fooled here by cycle_ctr wraparound. However, the only

* consequence is that we'd delay unlinking for one more checkpoint,

* which is perfectly tolerable.

*/

if (entry->cycle_ctr == mdckpt_cycle_ctr)

break;

/* Unlink the file */

path = relpathperm(entry->rnode, MAIN_FORKNUM);

if (unlink(path) < 0)

{

/*

* There's a race condition, when the database is dropped at the

* same time that we process the pending unlink requests. If the

* DROP DATABASE deletes the file before we do, we will get ENOENT

* here. rmtree() also has to ignore ENOENT errors, to deal with

* the possibility that we delete the file first.

*/

if (errno != ENOENT)

ereport(WARNING,

(errcode_for_file_access(),

errmsg("could not remove file \"%s\": %m", path)));

}

pfree(path);

/* And remove the list entry */

pendingUnlinks = list_delete_first(pendingUnlinks);

pfree(entry);

/*

* As in mdsync, we don't want to stop absorbing fsync requests for a

* long time when there are many deletions to be done. We can safely

* call AbsorbFsyncRequests() at this point in the loop (note it might

* try to delete list entries).

*/

if (--absorb_counter <= 0)

{

AbsorbFsyncRequests();

absorb_counter = UNLINKS_PER_ABSORB;

}

}

}

| void mdpreckpt(void) |

Definition at line 1267 of file md.c.

References mdckpt_cycle_ctr.

{

/*

* Any unlink requests arriving after this point will be assigned the next

* cycle counter, and won't be unlinked until next checkpoint.

*/

mdckpt_cycle_ctr++;

}
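mdpreckpt() and mdpostckpt() cooperate through a cycle counter: each pending unlink is stamped with the counter value current when it arrives, the pre-checkpoint hook bumps the counter, and the post-checkpoint pass stops at the first entry stamped with the new value. A sketch with a hypothetical fixed-size UnlinkQueue; CycleCtr is modelled as a 16-bit unsigned counter, which we believe matches md.c, and the actual unlink() call is left as a comment.

```c
#include <assert.h>
#include <stdint.h>

typedef uint16_t CycleCtr;             /* assumption: small wrapping counter */

/* Hypothetical FIFO of pending unlinks, each stamped with its arrival cycle. */
#define MAX_UNLINKS 8
typedef struct {
    int rel[MAX_UNLINKS];
    CycleCtr ctr[MAX_UNLINKS];
    int head, tail;
} UnlinkQueue;

static CycleCtr ckpt_cycle_ctr = 0;

static void register_unlink(UnlinkQueue *q, int rel)
{
    assert(q->tail < MAX_UNLINKS);
    q->rel[q->tail] = rel;
    q->ctr[q->tail] = ckpt_cycle_ctr;  /* stamp with the current cycle */
    q->tail++;
}

/* mdpreckpt(): anything registered from now on waits for the next checkpoint. */
static void pre_checkpoint(void)
{
    ckpt_cycle_ctr++;
}

/* mdpostckpt(): process older entries; stop at the first one from this cycle. */
static int post_checkpoint(UnlinkQueue *q)
{
    int unlinked = 0;
    while (q->head < q->tail) {
        if (q->ctr[q->head] == ckpt_cycle_ctr)
            break;                     /* arrived after pre_checkpoint() */
        /* a real implementation would unlink() here, ignoring ENOENT */
        q->head++;
        unlinked++;
    }
    return unlinked;
}
```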

| void mdprefetch(SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum) |

Definition at line 634 of file md.c.

References _mdfd_getseg(), Assert, EXTENSION_FAIL, FilePrefetch(), and _MdfdVec::mdfd_vfd.

{

#ifdef USE_PREFETCH

off_t seekpos;

MdfdVec *v;

v = _mdfd_getseg(reln, forknum, blocknum, false, EXTENSION_FAIL);

seekpos = (off_t) BLCKSZ *(blocknum % ((BlockNumber) RELSEG_SIZE));

Assert(seekpos < (off_t) BLCKSZ * RELSEG_SIZE);

(void) FilePrefetch(v->mdfd_vfd, seekpos, BLCKSZ);

#endif /* USE_PREFETCH */

}
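mdprefetch() only issues an advisory hint, and its body is compiled only under USE_PREFETCH. A sketch of an equivalent request, assuming a platform that provides posix_fadvise() (for example Linux) and that FilePrefetch() relies on POSIX_FADV_WILLNEED, as we understand fd.c to do; prefetch_block() is a hypothetical name.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <fcntl.h>
#include <stdint.h>
#include <stdlib.h>
#include <sys/types.h>
#include <unistd.h>

#define BLCKSZ 8192
#define RELSEG_SIZE 131072
typedef uint32_t BlockNumber;

/*
 * Hint that one block will be read soon. Returns 0 on success or an error
 * number; posix_fadvise() returns its error rather than setting errno.
 */
static int prefetch_block(int fd, BlockNumber blocknum)
{
    off_t seekpos = (off_t) BLCKSZ * (blocknum % (BlockNumber) RELSEG_SIZE);
    return posix_fadvise(fd, seekpos, BLCKSZ, POSIX_FADV_WILLNEED);
}
```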

| void mdread(SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum, char *buffer) |

Definition at line 655 of file md.c.

References _mdfd_getseg(), Assert, RelFileNodeBackend::backend, RelFileNode::dbNode, ereport, errcode(), errcode_for_file_access(), errmsg(), ERROR, EXTENSION_FAIL, FilePathName(), FileRead(), FileSeek(), InRecovery, _MdfdVec::mdfd_vfd, MemSet, RelFileNodeBackend::node, RelFileNode::relNode, SMgrRelationData::smgr_rnode, RelFileNode::spcNode, and zero_damaged_pages.

{

off_t seekpos;

int nbytes;

MdfdVec *v;

TRACE_POSTGRESQL_SMGR_MD_READ_START(forknum, blocknum,

reln->smgr_rnode.node.spcNode,

reln->smgr_rnode.node.dbNode,

reln->smgr_rnode.node.relNode,

reln->smgr_rnode.backend);

v = _mdfd_getseg(reln, forknum, blocknum, false, EXTENSION_FAIL);

seekpos = (off_t) BLCKSZ *(blocknum % ((BlockNumber) RELSEG_SIZE));

Assert(seekpos < (off_t) BLCKSZ * RELSEG_SIZE);

if (FileSeek(v->mdfd_vfd, seekpos, SEEK_SET) != seekpos)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not seek to block %u in file \"%s\": %m",

blocknum, FilePathName(v->mdfd_vfd))));

nbytes = FileRead(v->mdfd_vfd, buffer, BLCKSZ);

TRACE_POSTGRESQL_SMGR_MD_READ_DONE(forknum, blocknum,

reln->smgr_rnode.node.spcNode,

reln->smgr_rnode.node.dbNode,

reln->smgr_rnode.node.relNode,

reln->smgr_rnode.backend,

nbytes,

BLCKSZ);

if (nbytes != BLCKSZ)

{

if (nbytes < 0)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not read block %u in file \"%s\": %m",

blocknum, FilePathName(v->mdfd_vfd))));

/*

* Short read: we are at or past EOF, or we read a partial block at

* EOF. Normally this is an error; upper levels should never try to

* read a nonexistent block. However, if zero_damaged_pages is ON or

* we are InRecovery, we should instead return zeroes without

* complaining. This allows, for example, the case of trying to

* update a block that was later truncated away.

*/

if (zero_damaged_pages || InRecovery)

MemSet(buffer, 0, BLCKSZ);

else

ereport(ERROR,

(errcode(ERRCODE_DATA_CORRUPTED),

errmsg("could not read block %u in file \"%s\": read only %d of %d bytes",

blocknum, FilePathName(v->mdfd_vfd),

nbytes, BLCKSZ)));

}

}
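mdread() treats a short read as corruption unless zero_damaged_pages is on or the server is InRecovery, in which case it returns an all-zero page. A POSIX sketch of that policy; read_block() and its result codes are hypothetical, zero_ok stands in for zero_damaged_pages || InRecovery, and a single pread() replaces the FileSeek()/FileRead() pair.

```c
#define _XOPEN_SOURCE 700
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

#define BLCKSZ 8192
#define RELSEG_SIZE 131072
typedef uint32_t BlockNumber;

enum { READ_OK = 0, READ_ZEROED = 1, READ_IO_ERROR = -1, READ_SHORT = -2 };

/* Read one block; on a short read, zero-fill if allowed, else report it. */
static int read_block(int fd, BlockNumber blocknum, char *buffer, bool zero_ok)
{
    off_t seekpos = (off_t) BLCKSZ * (blocknum % (BlockNumber) RELSEG_SIZE);
    ssize_t nbytes = pread(fd, buffer, BLCKSZ, seekpos);

    if (nbytes == BLCKSZ)
        return READ_OK;
    if (nbytes < 0)
        return READ_IO_ERROR;          /* md.c: "could not read block ..." */
    if (zero_ok) {
        memset(buffer, 0, BLCKSZ);     /* discard any partial data */
        return READ_ZEROED;
    }
    return READ_SHORT;                 /* md.c: ERRCODE_DATA_CORRUPTED */
}
```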

| void mdsync(void) |

Definition at line 977 of file md.c.

References _mdfd_getseg(), _mdfd_segpath(), AbsorbFsyncRequests(), Assert, bms_first_member(), bms_free(), PendingOperationEntry::canceled, CheckpointStats, CheckpointStatsData::ckpt_agg_sync_time, CheckpointStatsData::ckpt_longest_sync, CheckpointStatsData::ckpt_sync_rels, PendingOperationEntry::cycle_ctr, DEBUG1, elog, enableFsync, ereport, errcode_for_file_access(), errmsg(), ERROR, EXTENSION_RETURN_NULL, FILE_POSSIBLY_DELETED, FilePathName(), FileSync(), HASH_REMOVE, hash_search(), hash_seq_init(), hash_seq_search(), INSTR_TIME_GET_MICROSEC, INSTR_TIME_SET_CURRENT, INSTR_TIME_SUBTRACT, InvalidBackendId, log_checkpoints, longest(), MAX_FORKNUM, _MdfdVec::mdfd_vfd, mdsync_cycle_ctr, NULL, pfree(), PendingOperationEntry::requests, PendingOperationEntry::rnode, and smgropen().

Referenced by SetForwardFsyncRequests().

{

static bool mdsync_in_progress = false;

HASH_SEQ_STATUS hstat;

PendingOperationEntry *entry;

int absorb_counter;

/* Statistics on sync times */

int processed = 0;

instr_time sync_start,

sync_end,

sync_diff;

uint64 elapsed;

uint64 longest = 0;

uint64 total_elapsed = 0;

/*

* This is only called during checkpoints, and checkpoints should only

* occur in processes that have created a pendingOpsTable.

*/

if (!pendingOpsTable)

elog(ERROR, "cannot sync without a pendingOpsTable");

/*

* If we are in the checkpointer, the sync had better include all fsync

* requests that were queued by backends up to this point. The tightest

* race condition that could occur is that a buffer that must be written

* and fsync'd for the checkpoint could have been dumped by a backend just

* before it was visited by BufferSync(). We know the backend will have

* queued an fsync request before clearing the buffer's dirtybit, so we

* are safe as long as we do an Absorb after completing BufferSync().

*/

AbsorbFsyncRequests();

/*

* To avoid excess fsync'ing (in the worst case, maybe a never-terminating

* checkpoint), we want to ignore fsync requests that are entered into the

* hashtable after this point --- they should be processed next time,

* instead. We use mdsync_cycle_ctr to tell old entries apart from new

* ones: new ones will have cycle_ctr equal to the incremented value of

* mdsync_cycle_ctr.

*

* In normal circumstances, all entries present in the table at this point

* will have cycle_ctr exactly equal to the current (about to be old)

* value of mdsync_cycle_ctr. However, if we fail partway through the

* fsync'ing loop, then older values of cycle_ctr might remain when we

* come back here to try again. Repeated checkpoint failures would

* eventually wrap the counter around to the point where an old entry

* might appear new, causing us to skip it, possibly allowing a checkpoint

* to succeed that should not have. To forestall wraparound, any time the

* previous mdsync() failed to complete, run through the table and

* forcibly set cycle_ctr = mdsync_cycle_ctr.

*

* Think not to merge this loop with the main loop, as the problem is

* exactly that that loop may fail before having visited all the entries.

* From a performance point of view it doesn't matter anyway, as this path

* will never be taken in a system that's functioning normally.

*/

if (mdsync_in_progress)

{

/* prior try failed, so update any stale cycle_ctr values */

hash_seq_init(&hstat, pendingOpsTable);

while ((entry = (PendingOperationEntry *) hash_seq_search(&hstat)) != NULL)

{

entry->cycle_ctr = mdsync_cycle_ctr;

}

}

/* Advance counter so that new hashtable entries are distinguishable */

mdsync_cycle_ctr++;

/* Set flag to detect failure if we don't reach the end of the loop */

mdsync_in_progress = true;

/* Now scan the hashtable for fsync requests to process */

absorb_counter = FSYNCS_PER_ABSORB;

hash_seq_init(&hstat, pendingOpsTable);

while ((entry = (PendingOperationEntry *) hash_seq_search(&hstat)) != NULL)

{

ForkNumber forknum;

/*

* If the entry is new then don't process it this time; it might

* contain multiple fsync-request bits, but they are all new. Note

* "continue" bypasses the hash-remove call at the bottom of the loop.

*/

if (entry->cycle_ctr == mdsync_cycle_ctr)

continue;

/* Else assert we haven't missed it */

Assert((CycleCtr) (entry->cycle_ctr + 1) == mdsync_cycle_ctr);

/*

* Scan over the forks and segments represented by the entry.

*

* The bitmap manipulations are slightly tricky, because we can call

* AbsorbFsyncRequests() inside the loop and that could result in

* bms_add_member() modifying and even re-palloc'ing the bitmapsets.

* This is okay because we unlink each bitmapset from the hashtable

* entry before scanning it. That means that any incoming fsync

* requests will be processed now if they reach the table before we

* begin to scan their fork.

*/

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

{

Bitmapset *requests = entry->requests[forknum];

int segno;

entry->requests[forknum] = NULL;

entry->canceled[forknum] = false;

while ((segno = bms_first_member(requests)) >= 0)

{

int failures;

/*

* If fsync is off then we don't have to bother opening the

* file at all. (We delay checking until this point so that

* changing fsync on the fly behaves sensibly.)

*/

if (!enableFsync)

continue;

/*

* If in checkpointer, we want to absorb pending requests

* every so often to prevent overflow of the fsync request

* queue. It is unspecified whether newly-added entries will

* be visited by hash_seq_search, but we don't care since we

* don't need to process them anyway.

*/

if (--absorb_counter <= 0)

{

AbsorbFsyncRequests();

absorb_counter = FSYNCS_PER_ABSORB;

}

/*

* The fsync table could contain requests to fsync segments

* that have been deleted (unlinked) by the time we get to

* them. Rather than just hoping an ENOENT (or EACCES on

* Windows) error can be ignored, what we do on error is

* absorb pending requests and then retry. Since mdunlink()

* queues a "cancel" message before actually unlinking, the

* fsync request is guaranteed to be marked canceled after the

* absorb if it really was this case. DROP DATABASE likewise

* has to tell us to forget fsync requests before it starts

* deletions.

*/

for (failures = 0;; failures++) /* loop exits at "break" */

{

SMgrRelation reln;

MdfdVec *seg;

char *path;

int save_errno;

/*

* Find or create an smgr hash entry for this relation.

* This may seem a bit unclean -- md calling smgr? But

* it's really the best solution. It ensures that the

* open file reference isn't permanently leaked if we get

* an error here. (You may say "but an unreferenced

* SMgrRelation is still a leak!" Not really, because the

* only case in which a checkpoint is done by a process

* that isn't about to shut down is in the checkpointer,

* and it will periodically do smgrcloseall(). This fact

* justifies our not closing the reln in the success path

* either, which is a good thing since in non-checkpointer

* cases we couldn't safely do that.)

*/

reln = smgropen(entry->rnode, InvalidBackendId);

/* Attempt to open and fsync the target segment */

seg = _mdfd_getseg(reln, forknum,

(BlockNumber) segno * (BlockNumber) RELSEG_SIZE,

false, EXTENSION_RETURN_NULL);

INSTR_TIME_SET_CURRENT(sync_start);

if (seg != NULL &&

FileSync(seg->mdfd_vfd) >= 0)

{

/* Success; update statistics about sync timing */

INSTR_TIME_SET_CURRENT(sync_end);

sync_diff = sync_end;

INSTR_TIME_SUBTRACT(sync_diff, sync_start);

elapsed = INSTR_TIME_GET_MICROSEC(sync_diff);

if (elapsed > longest)

longest = elapsed;

total_elapsed += elapsed;

processed++;

if (log_checkpoints)

elog(DEBUG1, "checkpoint sync: number=%d file=%s time=%.3f msec",

processed,

FilePathName(seg->mdfd_vfd),

(double) elapsed / 1000);

break; /* out of retry loop */

}

/* Compute file name for use in message */

save_errno = errno;

path = _mdfd_segpath(reln, forknum, (BlockNumber) segno);

errno = save_errno;

/*

* It is possible that the relation has been dropped or

* truncated since the fsync request was entered.

* Therefore, allow ENOENT, but only if we didn't fail

* already on this file. This applies both for

* _mdfd_getseg() and for FileSync, since fd.c might have

* closed the file behind our back.

*

* XXX is there any point in allowing more than one retry?

* Don't see one at the moment, but easy to change the

* test here if so.

*/

if (!FILE_POSSIBLY_DELETED(errno) ||

failures > 0)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not fsync file \"%s\": %m",

path)));

else

ereport(DEBUG1,

(errcode_for_file_access(),

errmsg("could not fsync file \"%s\" but retrying: %m",

path)));

pfree(path);

/*

* Absorb incoming requests and check to see if a cancel

* arrived for this relation fork.

*/

AbsorbFsyncRequests();

absorb_counter = FSYNCS_PER_ABSORB; /* might as well... */

if (entry->canceled[forknum])

break;

} /* end retry loop */

}

bms_free(requests);

}

/*

* We've finished everything that was requested before we started to

* scan the entry. If no new requests have been inserted meanwhile,

* remove the entry. Otherwise, update its cycle counter, as all the

* requests now in it must have arrived during this cycle.

*/

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

{

if (entry->requests[forknum] != NULL)

break;

}

if (forknum <= MAX_FORKNUM)

entry->cycle_ctr = mdsync_cycle_ctr;

else

{

/* Okay to remove it */

if (hash_search(pendingOpsTable, &entry->rnode,

HASH_REMOVE, NULL) == NULL)

elog(ERROR, "pendingOpsTable corrupted");

}

} /* end loop over hashtable entries */

/* Return sync performance metrics for report at checkpoint end */

CheckpointStats.ckpt_sync_rels = processed;

CheckpointStats.ckpt_longest_sync = longest;

CheckpointStats.ckpt_agg_sync_time = total_elapsed;

/* Flag successful completion of mdsync */

mdsync_in_progress = false;

}
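The heart of mdsync() is the cycle-counter test: requests that arrive during a pass carry the freshly incremented counter and are deferred, and a pass that fails leaves mdsync_in_progress set so the next call first normalizes stale counters. A much simplified model under stated assumptions: a hypothetical flat PendingTable with one entry per relation replaces pendingOpsTable and its per-fork bitmapsets, there is no ENOENT retry or absorb step, and the do_fsync callback stands in for _mdfd_getseg() plus FileSync().

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

typedef uint16_t CycleCtr;

/* Hypothetical flat table standing in for pendingOpsTable. */
#define MAX_ENTRIES 16
typedef struct { bool used; int rel; CycleCtr cycle_ctr; } PendingEntry;
typedef struct {
    PendingEntry e[MAX_ENTRIES];
    CycleCtr sync_cycle_ctr;
    bool sync_in_progress;
} PendingTable;

/* RememberFsyncRequest analogue: a new entry gets the current counter. */
static void remember(PendingTable *t, int rel)
{
    int free_slot = -1;
    for (int i = 0; i < MAX_ENTRIES; i++) {
        if (t->e[i].used && t->e[i].rel == rel)
            return;                    /* merged into the existing request */
        if (!t->e[i].used && free_slot < 0)
            free_slot = i;
    }
    assert(free_slot >= 0);
    t->e[free_slot] = (PendingEntry) { true, rel, t->sync_cycle_ctr };
}

/*
 * One sync pass. Returns the number of relations synced, or -1 if do_fsync()
 * failed. Simplification: a request merged into an entry while that entry is
 * being synced is dropped; md.c avoids this with per-fork bitmapsets.
 */
static int sync_pass(PendingTable *t, bool (*do_fsync)(PendingTable *, int rel))
{
    if (t->sync_in_progress)           /* prior pass failed: fix stale counters */
        for (int i = 0; i < MAX_ENTRIES; i++)
            if (t->e[i].used)
                t->e[i].cycle_ctr = t->sync_cycle_ctr;

    t->sync_cycle_ctr++;
    t->sync_in_progress = true;

    int synced = 0;
    for (int i = 0; i < MAX_ENTRIES; i++) {
        if (!t->e[i].used || t->e[i].cycle_ctr == t->sync_cycle_ctr)
            continue;                  /* empty slot, or arrived during this pass */
        if (!do_fsync(t, t->e[i].rel))
            return -1;                 /* sync_in_progress stays true */
        t->e[i].used = false;
        synced++;
    }
    t->sync_in_progress = false;
    return synced;
}

/* Example callbacks: success, success plus a new arrival, and failure. */
static bool fsync_ok(PendingTable *t, int rel) { (void) t; (void) rel; return true; }
static bool fsync_and_new_request(PendingTable *t, int rel) { remember(t, rel + 100); return true; }
static bool fsync_fail(PendingTable *t, int rel) { (void) t; (void) rel; return false; }
```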

| void mdtruncate(SMgrRelation reln, ForkNumber forknum, BlockNumber nblocks) |

Definition at line 857 of file md.c.

References Assert, ereport, errcode_for_file_access(), errmsg(), ERROR, EXTENSION_FAIL, FilePathName(), FileTruncate(), InRecovery, SMgrRelationData::md_fd, _MdfdVec::mdfd_chain, _MdfdVec::mdfd_vfd, mdnblocks(), mdopen(), NULL, pfree(), register_dirty_segment(), relpath, SMgrRelationData::smgr_rnode, and SmgrIsTemp.

{

MdfdVec *v;

BlockNumber curnblk;

BlockNumber priorblocks;

/*

* NOTE: mdnblocks makes sure we have opened all active segments, so that

* truncation loop will get them all!

*/

curnblk = mdnblocks(reln, forknum);

if (nblocks > curnblk)

{

/* Bogus request ... but no complaint if InRecovery */

if (InRecovery)

return;

ereport(ERROR,

(errmsg("could not truncate file \"%s\" to %u blocks: it's only %u blocks now",

relpath(reln->smgr_rnode, forknum),

nblocks, curnblk)));

}

if (nblocks == curnblk)

return; /* no work */

v = mdopen(reln, forknum, EXTENSION_FAIL);

priorblocks = 0;

while (v != NULL)

{

MdfdVec *ov = v;

if (priorblocks > nblocks)

{

/*

* This segment is no longer active (and has already been unlinked

* from the mdfd_chain). We truncate the file, but do not delete

* it, for reasons explained in the header comments.

*/

if (FileTruncate(v->mdfd_vfd, 0) < 0)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not truncate file \"%s\": %m",

FilePathName(v->mdfd_vfd))));

if (!SmgrIsTemp(reln))

register_dirty_segment(reln, forknum, v);

v = v->mdfd_chain;

Assert(ov != reln->md_fd[forknum]); /* we never drop the 1st

* segment */

pfree(ov);

}

else if (priorblocks + ((BlockNumber) RELSEG_SIZE) > nblocks)

{

/*

* This is the last segment we want to keep. Truncate the file to

* the right length, and clear chain link that points to any

* remaining segments (which we shall zap). NOTE: if nblocks is

* exactly a multiple K of RELSEG_SIZE, we will truncate the K+1st

* segment to 0 length but keep it. This adheres to the invariant

* given in the header comments.

*/

BlockNumber lastsegblocks = nblocks - priorblocks;

if (FileTruncate(v->mdfd_vfd, (off_t) lastsegblocks * BLCKSZ) < 0)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not truncate file \"%s\" to %u blocks: %m",

FilePathName(v->mdfd_vfd),

nblocks)));

if (!SmgrIsTemp(reln))

register_dirty_segment(reln, forknum, v);

v = v->mdfd_chain;

ov->mdfd_chain = NULL;

}

else

{

/*

* We still need this segment and 0 or more blocks beyond it, so

* nothing to do here.

*/

v = v->mdfd_chain;

}

priorblocks += RELSEG_SIZE;

}

}
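mdtruncate() treats each segment in one of three ways depending on how many blocks precede it. A sketch that only plans those actions, assuming a hypothetical plan_truncate() helper that takes the segment size as a parameter; SEG_DROPPED marks a segment truncated to zero and detached from the chain, whereas a kept segment with target 0 is the "K+1st segment" case from the comment above.

```c
#include <assert.h>
#include <stdint.h>

typedef uint32_t BlockNumber;

#define SEG_UNTOUCHED (-1L)            /* entirely below the cut: leave alone */
#define SEG_DROPPED   (-2L)            /* truncate to 0 and detach from chain */

/* For each of 'nsegs' open segments, record the planned action in target[]. */
static void plan_truncate(BlockNumber nblocks, int nsegs, BlockNumber segsize, long *target)
{
    BlockNumber priorblocks = 0;
    for (int i = 0; i < nsegs; i++) {
        if (priorblocks > nblocks)
            target[i] = SEG_DROPPED;                    /* no longer active */
        else if (priorblocks + segsize > nblocks)
            target[i] = (long) (nblocks - priorblocks); /* last segment kept */
        else
            target[i] = SEG_UNTOUCHED;
        priorblocks += segsize;
    }
}
```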

| void mdunlink(RelFileNodeBackend rnode, ForkNumber forkNum, bool isRedo) |

Definition at line 366 of file md.c.

References ForgetRelationFsyncRequests(), InvalidForkNumber, mdunlinkfork(), RelFileNodeBackend::node, and RelFileNodeBackendIsTemp.

{

/*

* We have to clean out any pending fsync requests for the doomed

* relation, else the next mdsync() will fail. There can't be any such

* requests for a temp relation, though. We can send just one request

* even when deleting multiple forks, since the fsync queuing code accepts

* the "InvalidForkNumber = all forks" convention.

*/

if (!RelFileNodeBackendIsTemp(rnode))

ForgetRelationFsyncRequests(rnode.node, forkNum);

/* Now do the per-fork work */

if (forkNum == InvalidForkNumber)

{

for (forkNum = 0; forkNum <= MAX_FORKNUM; forkNum++)

mdunlinkfork(rnode, forkNum, isRedo);

}

else

mdunlinkfork(rnode, forkNum, isRedo);

}
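mdunlink() sends one forget request for the relation and then expands InvalidForkNumber into every fork from 0 to MAX_FORKNUM. A sketch of that dispatch, assuming the ForkNumber layout of PostgreSQL 9.x (main, FSM, visibility map, init) and a hypothetical unlink_fork() counter in place of mdunlinkfork():

```c
#include <assert.h>

/* Fork numbers as laid out in PostgreSQL 9.x (assumption for other versions). */
typedef enum ForkNumber {
    InvalidForkNumber = -1,
    MAIN_FORKNUM = 0,
    FSM_FORKNUM,
    VISIBILITYMAP_FORKNUM,
    INIT_FORKNUM
} ForkNumber;
#define MAX_FORKNUM INIT_FORKNUM

static int unlinked[MAX_FORKNUM + 1];

/* Hypothetical per-fork worker; only counts calls. */
static void unlink_fork(ForkNumber forknum)
{
    unlinked[forknum]++;
}

/* InvalidForkNumber means "all forks", as in mdunlink(). */
static void unlink_forks(ForkNumber forknum)
{
    if (forknum == InvalidForkNumber) {
        for (int f = 0; f <= MAX_FORKNUM; f++)
            unlink_fork((ForkNumber) f);
    } else
        unlink_fork(forknum);
}
```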

| void mdwrite(SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum, char *buffer, bool skipFsync) |

Definition at line 725 of file md.c.

References _mdfd_getseg(), Assert, RelFileNodeBackend::backend, RelFileNode::dbNode, ereport, errcode(), errcode_for_file_access(), errhint(), errmsg(), ERROR, EXTENSION_FAIL, FilePathName(), FileSeek(), FileWrite(), _MdfdVec::mdfd_vfd, mdnblocks(), RelFileNodeBackend::node, register_dirty_segment(), RelFileNode::relNode, SMgrRelationData::smgr_rnode, SmgrIsTemp, and RelFileNode::spcNode.

{

off_t seekpos;

int nbytes;

MdfdVec *v;

/* This assert is too expensive to have on normally ... */

#ifdef CHECK_WRITE_VS_EXTEND

Assert(blocknum < mdnblocks(reln, forknum));

#endif

TRACE_POSTGRESQL_SMGR_MD_WRITE_START(forknum, blocknum,

reln->smgr_rnode.node.spcNode,

reln->smgr_rnode.node.dbNode,

reln->smgr_rnode.node.relNode,

reln->smgr_rnode.backend);

v = _mdfd_getseg(reln, forknum, blocknum, skipFsync, EXTENSION_FAIL);

seekpos = (off_t) BLCKSZ *(blocknum % ((BlockNumber) RELSEG_SIZE));

Assert(seekpos < (off_t) BLCKSZ * RELSEG_SIZE);

if (FileSeek(v->mdfd_vfd, seekpos, SEEK_SET) != seekpos)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not seek to block %u in file \"%s\": %m",

blocknum, FilePathName(v->mdfd_vfd))));

nbytes = FileWrite(v->mdfd_vfd, buffer, BLCKSZ);

TRACE_POSTGRESQL_SMGR_MD_WRITE_DONE(forknum, blocknum,

reln->smgr_rnode.node.spcNode,

reln->smgr_rnode.node.dbNode,

reln->smgr_rnode.node.relNode,

reln->smgr_rnode.backend,

nbytes,

BLCKSZ);

if (nbytes != BLCKSZ)

{

if (nbytes < 0)

ereport(ERROR,

(errcode_for_file_access(),

errmsg("could not write block %u in file \"%s\": %m",

blocknum, FilePathName(v->mdfd_vfd))));

/* short write: complain appropriately */

ereport(ERROR,

(errcode(ERRCODE_DISK_FULL),

errmsg("could not write block %u in file \"%s\": wrote only %d of %d bytes",

blocknum,

FilePathName(v->mdfd_vfd),

nbytes, BLCKSZ),

errhint("Check free disk space.")));

}

if (!skipFsync && !SmgrIsTemp(reln))

register_dirty_segment(reln, forknum, v);

}
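mdwrite() locates a block in two steps. First, _mdfd_getseg() selects the segment file. Second, the byte offset inside that file is computed as `BLCKSZ * (blocknum % RELSEG_SIZE)`. A negative FileWrite() result is then reported as a file-access error, and any other short write is reported as disk full. The standalone sketch below covers the arithmetic and that classification. It assumes the default build values BLCKSZ = 8192 and RELSEG_SIZE = 131072 blocks (1 GB segments). Both values are compile-time settings, so a given build may differ. `segment_for_block()`, `seekpos_for_block()` and `classify_write()` are hypothetical helper names.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/types.h>

/* Default build values (assumed; both are configure-time options). */
#define BLCKSZ 8192
#define RELSEG_SIZE 131072

typedef uint32_t BlockNumber;

/* Which segment file (base, ".1", ".2", ...) holds the block. */
BlockNumber
segment_for_block(BlockNumber blocknum)
{
    return blocknum / (BlockNumber) RELSEG_SIZE;
}

/* Byte offset inside that segment, computed the same way as in mdwrite(). */
off_t
seekpos_for_block(BlockNumber blocknum)
{
    off_t       seekpos = (off_t) BLCKSZ * (blocknum % (BlockNumber) RELSEG_SIZE);

    assert(seekpos < (off_t) BLCKSZ * RELSEG_SIZE);
    return seekpos;
}

/* Hypothetical classification of FileWrite()'s return value. */
typedef enum { WRITE_OK, WRITE_IO_ERROR, WRITE_SHORT } WriteOutcome;

WriteOutcome
classify_write(int nbytes)
{
    if (nbytes == BLCKSZ)
        return WRITE_OK;
    if (nbytes < 0)
        return WRITE_IO_ERROR;  /* mdwrite(): errcode_for_file_access() */
    return WRITE_SHORT;         /* mdwrite(): ERRCODE_DISK_FULL + hint */
}
```

For example, block 131073 falls in segment 1 at offset 8192, which is the second block of the ".1" file.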

| void RememberFsyncRequest | ( | RelFileNode rnode, ForkNumber forknum, BlockNumber segno | ) |

Definition at line 1435 of file md.c.

References Assert, bms_add_member(), bms_free(), PendingOperationEntry::canceled, PendingOperationEntry::cycle_ctr, PendingUnlinkEntry::cycle_ctr, RelFileNode::dbNode, FORGET_DATABASE_FSYNC, FORGET_RELATION_FSYNC, HASH_ENTER, HASH_FIND, hash_search(), hash_seq_init(), hash_seq_search(), InvalidForkNumber, lappend(), lfirst, list_delete_cell(), list_head(), lnext, MAIN_FORKNUM, mdckpt_cycle_ctr, mdsync_cycle_ctr, MemoryContextSwitchTo(), MemSet, NULL, palloc(), pfree(), PendingOperationEntry::requests, PendingUnlinkEntry::rnode, PendingOperationEntry::rnode, and UNLINK_RELATION_REQUEST.

Referenced by AbsorbFsyncRequests(), ForgetDatabaseFsyncRequests(), ForgetRelationFsyncRequests(), register_dirty_segment(), and register_unlink().

{

Assert(pendingOpsTable);

if (segno == FORGET_RELATION_FSYNC)

{

/* Remove any pending requests for the relation (one or all forks) */

PendingOperationEntry *entry;

entry = (PendingOperationEntry *) hash_search(pendingOpsTable,

&rnode,

HASH_FIND,

NULL);

if (entry)

{

/*

* We can't just delete the entry since mdsync could have an

* active hashtable scan. Instead we delete the bitmapsets; this

* is safe because of the way mdsync is coded. We also set the

* "canceled" flags so that mdsync can tell that a cancel arrived

* for the fork(s).

*/

if (forknum == InvalidForkNumber)

{

/* remove requests for all forks */

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

{

bms_free(entry->requests[forknum]);

entry->requests[forknum] = NULL;

entry->canceled[forknum] = true;

}

}

else

{

/* remove requests for single fork */

bms_free(entry->requests[forknum]);

entry->requests[forknum] = NULL;

entry->canceled[forknum] = true;

}

}

}

else if (segno == FORGET_DATABASE_FSYNC)

{

/* Remove any pending requests for the entire database */

HASH_SEQ_STATUS hstat;

PendingOperationEntry *entry;

ListCell *cell,

*prev,

*next;

/* Remove fsync requests */

hash_seq_init(&hstat, pendingOpsTable);

while ((entry = (PendingOperationEntry *) hash_seq_search(&hstat)) != NULL)

{

if (entry->rnode.dbNode == rnode.dbNode)

{

/* remove requests for all forks */

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

{

bms_free(entry->requests[forknum]);

entry->requests[forknum] = NULL;

entry->canceled[forknum] = true;

}

}

}

/* Remove unlink requests */

prev = NULL;

for (cell = list_head(pendingUnlinks); cell; cell = next)

{

PendingUnlinkEntry *entry = (PendingUnlinkEntry *) lfirst(cell);

next = lnext(cell);

if (entry->rnode.dbNode == rnode.dbNode)

{

pendingUnlinks = list_delete_cell(pendingUnlinks, cell, prev);

pfree(entry);

}

else

prev = cell;

}

}

else if (segno == UNLINK_RELATION_REQUEST)

{

/* Unlink request: put it in the linked list */

MemoryContext oldcxt = MemoryContextSwitchTo(MdCxt);

PendingUnlinkEntry *entry;

/* PendingUnlinkEntry doesn't store forknum, since it's always MAIN */

Assert(forknum == MAIN_FORKNUM);

entry = palloc(sizeof(PendingUnlinkEntry));

entry->rnode = rnode;

entry->cycle_ctr = mdckpt_cycle_ctr;

pendingUnlinks = lappend(pendingUnlinks, entry);

MemoryContextSwitchTo(oldcxt);

}

else

{

/* Normal case: enter a request to fsync this segment */

MemoryContext oldcxt = MemoryContextSwitchTo(MdCxt);

PendingOperationEntry *entry;

bool found;

entry = (PendingOperationEntry *) hash_search(pendingOpsTable,

&rnode,

HASH_ENTER,

&found);

/* if new entry, initialize it */

if (!found)

{

entry->cycle_ctr = mdsync_cycle_ctr;

MemSet(entry->requests, 0, sizeof(entry->requests));

MemSet(entry->canceled, 0, sizeof(entry->canceled));

}

/*

* NB: it's intentional that we don't change cycle_ctr if the entry

* already exists. The cycle_ctr must represent the oldest fsync

* request that could be in the entry.

*/

entry->requests[forknum] = bms_add_member(entry->requests[forknum],

(int) segno);

MemoryContextSwitchTo(oldcxt);

}

}
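For each relation, RememberFsyncRequest() keeps one bitmap of segment numbers per fork. A FORGET request does not delete the hash entry, because mdsync() may be scanning the table. Instead, it empties the bitmaps and sets the "canceled" flags. The minimal sketch below models a single such entry. It assumes a 64-bit mask in place of a Bitmapset, so only segments 0 to 63 are representable, and it ignores the hash table itself. `PendingEntrySketch`, `remember_segment()` and `forget_relation()` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

#define MAX_FORKNUM 3               /* main, fsm, vm, init (assumed) */
#define InvalidForkNumber (-1)

/* Simplified PendingOperationEntry; a uint64_t mask replaces the Bitmapset. */
typedef struct PendingEntrySketch
{
    bool        in_use;
    uint16_t    cycle_ctr;
    uint64_t    requests[MAX_FORKNUM + 1];
    bool        canceled[MAX_FORKNUM + 1];
} PendingEntrySketch;

/* Normal case: remember that segment segno of this fork needs an fsync. */
void
remember_segment(PendingEntrySketch *entry, int forknum, unsigned segno,
                 uint16_t current_cycle)
{
    assert(forknum >= 0 && forknum <= MAX_FORKNUM && segno < 64);
    if (!entry->in_use)
    {
        /* new entry: cycle_ctr records the oldest request it may contain */
        entry->in_use = true;
        entry->cycle_ctr = current_cycle;
        memset(entry->requests, 0, sizeof(entry->requests));
        memset(entry->canceled, 0, sizeof(entry->canceled));
    }
    /* existing entry: cycle_ctr is deliberately left unchanged */
    entry->requests[forknum] |= UINT64_C(1) << segno;
}

/* FORGET_RELATION_FSYNC case: keep the entry, clear the requests, and mark
 * the fork(s) canceled so a concurrent scan can tell. */
void
forget_relation(PendingEntrySketch *entry, int forknum)
{
    if (!entry->in_use)
        return;
    for (int f = 0; f <= MAX_FORKNUM; f++)
    {
        if (forknum == InvalidForkNumber || forknum == f)
        {
            entry->requests[f] = 0;
            entry->canceled[f] = true;
        }
    }
}
```

A second `remember_segment()` call made in a later cycle leaves `cycle_ctr` at its first value. The "NB" comment in the real function requires exactly this behavior.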

| void SetForwardFsyncRequests | ( | void | ) |

Definition at line 233 of file md.c.

References Assert, hash_destroy(), mdsync(), and NIL.

Referenced by StartupXLOG().

{

/* Perform any pending fsyncs we may have queued up, then drop table */

if (pendingOpsTable)

{

mdsync();

hash_destroy(pendingOpsTable);

}

pendingOpsTable = NULL;

/*

* We should not have any pending unlink requests, since mdunlink doesn't

* queue unlink requests when isRedo.

*/

Assert(pendingUnlinks == NIL);

}

| void smgrclose | ( | SMgrRelation | reln | ) |

Definition at line 261 of file smgr.c.

References elog, ERROR, HASH_REMOVE, hash_search(), NULL, remove_from_unowned_list(), f_smgr::smgr_close, SMgrRelationData::smgr_owner, SMgrRelationData::smgr_rnode, and SMgrRelationData::smgr_which.

Referenced by AtEOXact_SMgr(), ATExecSetTableSpace(), FinishPreparedTransaction(), smgrcloseall(), smgrclosenode(), smgrDoPendingDeletes(), xact_redo_abort(), and xact_redo_commit_internal().

{

SMgrRelation *owner;

ForkNumber forknum;

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

(*(smgrsw[reln->smgr_which].smgr_close)) (reln, forknum);

owner = reln->smgr_owner;

if (!owner)

remove_from_unowned_list(reln);

if (hash_search(SMgrRelationHash,

(void *) &(reln->smgr_rnode),

HASH_REMOVE, NULL) == NULL)

elog(ERROR, "SMgrRelation hashtable corrupted");

/*

* Unhook the owner pointer, if any. We do this last since in the remote

* possibility of failure above, the SMgrRelation object will still exist.

*/

if (owner)

*owner = NULL;

}

| void smgrcloseall | ( | void | ) |

Definition at line 291 of file smgr.c.

References hash_seq_init(), hash_seq_search(), NULL, and smgrclose().

Referenced by BackgroundWriterMain(), CheckpointerMain(), RelationCacheInvalidate(), RequestCheckpoint(), WalWriterMain(), and XLogDropDatabase().

{

HASH_SEQ_STATUS status;

SMgrRelation reln;

/* Nothing to do if hashtable not set up */

if (SMgrRelationHash == NULL)

return;

hash_seq_init(&status, SMgrRelationHash);

while ((reln = (SMgrRelation) hash_seq_search(&status)) != NULL)

smgrclose(reln);

}

| void smgrclosenode | ( | RelFileNodeBackend | rnode | ) |

Definition at line 315 of file smgr.c.

References hash_search(), NULL, and smgrclose().

Referenced by LocalExecuteInvalidationMessage(), and RelationSetNewRelfilenode().

{

SMgrRelation reln;

/* Nothing to do if hashtable not set up */

if (SMgrRelationHash == NULL)

return;

reln = (SMgrRelation) hash_search(SMgrRelationHash,

(void *) &rnode,

HASH_FIND, NULL);

if (reln != NULL)

smgrclose(reln);

}

| void smgrcreate | ( | SMgrRelation reln, ForkNumber forknum, bool isRedo | ) |

Definition at line 341 of file smgr.c.

References RelFileNode::dbNode, SMgrRelationData::md_fd, RelFileNodeBackend::node, NULL, f_smgr::smgr_create, SMgrRelationData::smgr_rnode, SMgrRelationData::smgr_which, RelFileNode::spcNode, and TablespaceCreateDbspace().

Referenced by ATExecSetTableSpace(), fsm_extend(), heap_create_init_fork(), index_build(), RelationCreateStorage(), smgr_redo(), vm_extend(), and XLogReadBufferExtended().

{

/*

* Exit quickly in WAL replay mode if we've already opened the file. If

* it's open, it surely must exist.

*/

if (isRedo && reln->md_fd[forknum] != NULL)

return;

/*

* We may be using the target table space for the first time in this

* database, so create a per-database subdirectory if needed.

*

* XXX this is a fairly ugly violation of module layering, but this seems

* to be the best place to put the check. Maybe TablespaceCreateDbspace

* should be here and not in commands/tablespace.c? But that would imply

* importing a lot of stuff that smgr.c oughtn't know, either.

*/

TablespaceCreateDbspace(reln->smgr_rnode.node.spcNode,

reln->smgr_rnode.node.dbNode,

isRedo);

(*(smgrsw[reln->smgr_which].smgr_create)) (reln, forknum, isRedo);

}

| void smgrdounlink | ( | SMgrRelation reln, bool isRedo | ) |

Definition at line 379 of file smgr.c.

References CacheInvalidateSmgr(), DropRelFileNodesAllBuffers(), SMgrRelationData::smgr_rnode, f_smgr::smgr_unlink, and SMgrRelationData::smgr_which.

Referenced by FinishPreparedTransaction(), xact_redo_abort(), and xact_redo_commit_internal().

{

RelFileNodeBackend rnode = reln->smgr_rnode;

int which = reln->smgr_which;

ForkNumber forknum;

/* Close the forks at smgr level */

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

(*(smgrsw[which].smgr_close)) (reln, forknum);

/*

* Get rid of any remaining buffers for the relation. bufmgr will just

* drop them without bothering to write the contents.

*/

DropRelFileNodesAllBuffers(&rnode, 1);

/*

* It'd be nice to tell the stats collector to forget it immediately, too.

* But we can't because we don't know the OID (and in cases involving

* relfilenode swaps, it's not always clear which table OID to forget,

* anyway).

*/

/*

* Send a shared-inval message to force other backends to close any

* dangling smgr references they may have for this rel. We should do this

* before starting the actual unlinking, in case we fail partway through

* that step. Note that the sinval message will eventually come back to

* this backend, too, and thereby provide a backstop that we closed our

* own smgr rel.

*/

CacheInvalidateSmgr(rnode);

/*

* Delete the physical file(s).

*

* Note: smgr_unlink must treat deletion failure as a WARNING, not an

* ERROR, because we've already decided to commit or abort the current

* xact.

*/

(*(smgrsw[which].smgr_unlink)) (rnode, InvalidForkNumber, isRedo);

}

| void smgrdounlinkall | ( | SMgrRelation *rels, int nrels, bool isRedo | ) |

Definition at line 436 of file smgr.c.

References CacheInvalidateSmgr(), DropRelFileNodesAllBuffers(), i, palloc(), pfree(), SMgrRelationData::smgr_rnode, and SMgrRelationData::smgr_which.

Referenced by smgrDoPendingDeletes().

{

int i = 0;

RelFileNodeBackend *rnodes;

ForkNumber forknum;

if (nrels == 0)

return;

/*

* create an array which contains all relations to be dropped, and

* close each relation's forks at the smgr level while at it

*/

rnodes = palloc(sizeof(RelFileNodeBackend) * nrels);

for (i = 0; i < nrels; i++)

{

RelFileNodeBackend rnode = rels[i]->smgr_rnode;

int which = rels[i]->smgr_which;

rnodes[i] = rnode;

/* Close the forks at smgr level */

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

(*(smgrsw[which].smgr_close)) (rels[i], forknum);

}

/*

* Get rid of any remaining buffers for the relations. bufmgr will just

* drop them without bothering to write the contents.

*/

DropRelFileNodesAllBuffers(rnodes, nrels);

/*

* It'd be nice to tell the stats collector to forget them immediately, too.

* But we can't because we don't know the OIDs.

*/

/*

* Send a shared-inval message to force other backends to close any

* dangling smgr references they may have for these rels. We should do

* this before starting the actual unlinking, in case we fail partway

* through that step. Note that the sinval messages will eventually come

* back to this backend, too, and thereby provide a backstop that we closed

* our own smgr rel.

*/

for (i = 0; i < nrels; i++)

CacheInvalidateSmgr(rnodes[i]);

/*

* Delete the physical file(s).

*

* Note: smgr_unlink must treat deletion failure as a WARNING, not an

* ERROR, because we've already decided to commit or abort the current

* xact.

*/

for (i = 0; i < nrels; i++)

{

int which = rels[i]->smgr_which;

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

(*(smgrsw[which].smgr_unlink)) (rnodes[i], forknum, isRedo);

}

pfree(rnodes);

}
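smgrdounlinkall() collects every RelFileNodeBackend first. This lets DropRelFileNodesAllBuffers() purge the buffer pool for all of the relations in one pass, instead of once per relation as repeated smgrdounlink() calls would. The sketch below illustrates the single-pass idea on a toy buffer pool. The integer relation IDs, `BufTagSketch`, and the sort-plus-bsearch lookup are assumptions of the sketch, and PostgreSQL's own lookup strategy may differ.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical buffer tag: a relation is one integer; 0 marks a free slot. */
typedef struct BufTagSketch
{
    unsigned    rel;
    unsigned    block;
} BufTagSketch;

static int
cmp_rel(const void *a, const void *b)
{
    unsigned    x = *(const unsigned *) a;
    unsigned    y = *(const unsigned *) b;

    return (x > y) - (x < y);
}

/* Drop every buffer belonging to any of rels[0..nrels-1] in a single scan
 * of the pool. Sorting once makes each membership test a binary search.
 * Pages are discarded without being written out. Returns the drop count. */
int
drop_all_buffers(BufTagSketch *pool, int npool, unsigned *rels, int nrels)
{
    int         dropped = 0;

    qsort(rels, (size_t) nrels, sizeof(unsigned), cmp_rel);
    for (int i = 0; i < npool; i++)
    {
        if (pool[i].rel != 0 &&
            bsearch(&pool[i].rel, rels, (size_t) nrels, sizeof(unsigned),
                    cmp_rel) != NULL)
        {
            pool[i].rel = 0;
            dropped++;
        }
    }
    return dropped;
}
```

Whatever the number of relations being dropped, the pool is scanned exactly once. This matters because shared_buffers can be large.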

| void smgrdounlinkfork | ( | SMgrRelation reln, ForkNumber forknum, bool isRedo | ) |

Definition at line 513 of file smgr.c.

References CacheInvalidateSmgr(), DropRelFileNodeBuffers(), f_smgr::smgr_close, SMgrRelationData::smgr_rnode, f_smgr::smgr_unlink, and SMgrRelationData::smgr_which.

{

RelFileNodeBackend rnode = reln->smgr_rnode;

int which = reln->smgr_which;

/* Close the fork at smgr level */

(*(smgrsw[which].smgr_close)) (reln, forknum);

/*

* Get rid of any remaining buffers for the fork. bufmgr will just drop

* them without bothering to write the contents.

*/

DropRelFileNodeBuffers(rnode, forknum, 0);

/*

* It'd be nice to tell the stats collector to forget it immediately, too.

* But we can't because we don't know the OID (and in cases involving

* relfilenode swaps, it's not always clear which table OID to forget,

* anyway).

*/

/*

* Send a shared-inval message to force other backends to close any

* dangling smgr references they may have for this rel. We should do this

* before starting the actual unlinking, in case we fail partway through

* that step. Note that the sinval message will eventually come back to

* this backend, too, and thereby provide a backstop that we closed our

* own smgr rel.

*/

CacheInvalidateSmgr(rnode);

/*

* Delete the physical file(s).

*

* Note: smgr_unlink must treat deletion failure as a WARNING, not an

* ERROR, because we've already decided to commit or abort the current

* xact.

*/

(*(smgrsw[which].smgr_unlink)) (rnode, forknum, isRedo);

}

| Datum smgreq | ( | PG_FUNCTION_ARGS | ) |

Definition at line 64 of file smgrtype.c.

References PG_GETARG_INT16, and PG_RETURN_BOOL.

{

int16 a = PG_GETARG_INT16(0);

int16 b = PG_GETARG_INT16(1);

PG_RETURN_BOOL(a == b);

}

| bool smgrexists | ( | SMgrRelation reln, ForkNumber forknum | ) |

Definition at line 252 of file smgr.c.

References f_smgr::smgr_exists, and SMgrRelationData::smgr_which.

Referenced by ATExecSetTableSpace(), FreeSpaceMapTruncateRel(), fsm_extend(), fsm_readbuf(), heap_is_matview_init_state(), index_build(), RelationTruncate(), smgr_redo(), visibilitymap_truncate(), vm_extend(), and vm_readbuf().

{

return (*(smgrsw[reln->smgr_which].smgr_exists)) (reln, forknum);

}

| void smgrextend | ( | SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum, char *buffer, bool skipFsync | ) |

Definition at line 564 of file smgr.c.

References f_smgr::smgr_extend, and SMgrRelationData::smgr_which.

Referenced by _bt_blwritepage(), _hash_alloc_buckets(), copy_relation_data(), end_heap_rewrite(), fsm_extend(), raw_heap_insert(), ReadBuffer_common(), SetMatViewToPopulated(), and vm_extend().

{

(*(smgrsw[reln->smgr_which].smgr_extend)) (reln, forknum, blocknum,

buffer, skipFsync);

}

| void smgrimmedsync | ( | SMgrRelation reln, ForkNumber forknum | ) |

Definition at line 685 of file smgr.c.

References f_smgr::smgr_immedsync, and SMgrRelationData::smgr_which.

Referenced by _bt_load(), btbuildempty(), copy_relation_data(), heap_create_init_fork(), heap_sync(), SetMatViewToPopulated(), and spgbuildempty().

{

(*(smgrsw[reln->smgr_which].smgr_immedsync)) (reln, forknum);

}

| Datum smgrin | ( | PG_FUNCTION_ARGS | ) |

Definition at line 36 of file smgrtype.c.

References elog, ERROR, i, NStorageManagers, PG_GETARG_CSTRING, and PG_RETURN_INT16.

{

char *s = PG_GETARG_CSTRING(0);

int16 i;

for (i = 0; i < NStorageManagers; i++)

{

if (strcmp(s, StorageManager[i].smgr_name) == 0)

PG_RETURN_INT16(i);

}

elog(ERROR, "unrecognized storage manager name \"%s\"", s);

PG_RETURN_INT16(0);

}

| void smgrinit | ( | void | ) |

Definition at line 99 of file smgr.c.

References i, NSmgr, on_proc_exit(), f_smgr::smgr_init, and smgrshutdown().

Referenced by BaseInit().

{

int i;

for (i = 0; i < NSmgr; i++)

{

if (smgrsw[i].smgr_init)

(*(smgrsw[i].smgr_init)) ();

}

/* register the shutdown proc */

on_proc_exit(smgrshutdown, 0);

}
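smgrinit(), smgrpreckpt() and smgrpostckpt() share one pattern. Each walks the smgrsw switch table and calls a hook only if that storage manager supplies one. The standalone sketch below shows the pattern with a hypothetical one-entry table whose post-checkpoint hook is NULL. The struct is a cut-down stand-in for f_smgr, and the on_proc_exit(smgrshutdown, 0) registration is left out.

```c
#include <assert.h>
#include <stddef.h>

/* Cut-down stand-in for f_smgr: only the optional, argument-less hooks. */
typedef struct f_smgr_sketch
{
    void        (*smgr_init) (void);
    void        (*smgr_pre_ckpt) (void);
    void        (*smgr_post_ckpt) (void);
} f_smgr_sketch;

int init_calls = 0;
int pre_ckpt_calls = 0;

static void md_init_stub(void) { init_calls++; }
static void md_pre_ckpt_stub(void) { pre_ckpt_calls++; }

/* Hypothetical switch table: one manager, no post-checkpoint hook. */
static const f_smgr_sketch smgrsw_sketch[] = {
    {md_init_stub, md_pre_ckpt_stub, NULL},
};
static const int NSmgr_sketch =
    (int) (sizeof(smgrsw_sketch) / sizeof(smgrsw_sketch[0]));

void
smgrinit_sketch(void)
{
    for (int i = 0; i < NSmgr_sketch; i++)
        if (smgrsw_sketch[i].smgr_init)
            (*(smgrsw_sketch[i].smgr_init)) ();
}

void
smgrpreckpt_sketch(void)
{
    for (int i = 0; i < NSmgr_sketch; i++)
        if (smgrsw_sketch[i].smgr_pre_ckpt)
            (*(smgrsw_sketch[i].smgr_pre_ckpt)) ();
}

void
smgrpostckpt_sketch(void)
{
    for (int i = 0; i < NSmgr_sketch; i++)
        if (smgrsw_sketch[i].smgr_post_ckpt)    /* NULL here: skipped */
            (*(smgrsw_sketch[i].smgr_post_ckpt)) ();
}
```

The NULL test lets a storage manager omit hooks it does not need, without the callers knowing which managers exist.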

| BlockNumber smgrnblocks | ( | SMgrRelation reln, ForkNumber forknum | ) |

Definition at line 623 of file smgr.c.

References f_smgr::smgr_nblocks, and SMgrRelationData::smgr_which.

Referenced by copy_relation_data(), FreeSpaceMapTruncateRel(), fsm_extend(), fsm_readbuf(), gistBuildCallback(), heap_is_matview_init_state(), ReadBuffer_common(), RelationGetNumberOfBlocksInFork(), visibilitymap_truncate(), vm_extend(), vm_readbuf(), and XLogReadBufferExtended().

{

return (*(smgrsw[reln->smgr_which].smgr_nblocks)) (reln, forknum);

}

| Datum smgrne | ( | PG_FUNCTION_ARGS | ) |

Definition at line 73 of file smgrtype.c.

References PG_GETARG_INT16, and PG_RETURN_BOOL.

{

int16 a = PG_GETARG_INT16(0);

int16 b = PG_GETARG_INT16(1);

PG_RETURN_BOOL(a != b);

}

| SMgrRelation smgropen | ( | RelFileNode rnode, BackendId backend | ) |

Definition at line 134 of file smgr.c.

References RelFileNodeBackend::backend, HASHCTL::entrysize, HASHCTL::hash, hash_create(), HASH_ELEM, HASH_FUNCTION, hash_search(), HASHCTL::keysize, SMgrRelationData::md_fd, MemSet, SMgrRelationData::next_unowned_reln, RelFileNodeBackend::node, NULL, SMgrRelationData::smgr_fsm_nblocks, SMgrRelationData::smgr_owner, SMgrRelationData::smgr_targblock, SMgrRelationData::smgr_vm_nblocks, and SMgrRelationData::smgr_which.

Referenced by ATExecSetTableSpace(), FinishPreparedTransaction(), FlushBuffer(), LocalBufferAlloc(), mdsync(), ReadBufferWithoutRelcache(), RelationCreateStorage(), smgr_redo(), smgrDoPendingDeletes(), xact_redo_abort(), xact_redo_commit_internal(), and XLogReadBufferExtended().

{

RelFileNodeBackend brnode;

SMgrRelation reln;

bool found;

if (SMgrRelationHash == NULL)

{

/* First time through: initialize the hash table */

HASHCTL ctl;

MemSet(&ctl, 0, sizeof(ctl));

ctl.keysize = sizeof(RelFileNodeBackend);

ctl.entrysize = sizeof(SMgrRelationData);

ctl.hash = tag_hash;

SMgrRelationHash = hash_create("smgr relation table", 400,

&ctl, HASH_ELEM | HASH_FUNCTION);

first_unowned_reln = NULL;

}

/* Look up or create an entry */

brnode.node = rnode;

brnode.backend = backend;

reln = (SMgrRelation) hash_search(SMgrRelationHash,

(void *) &brnode,

HASH_ENTER, &found);

/* Initialize it if not present before */

if (!found)

{

int forknum;

/* hash_search already filled in the lookup key */

reln->smgr_owner = NULL;

reln->smgr_targblock = InvalidBlockNumber;

reln->smgr_fsm_nblocks = InvalidBlockNumber;

reln->smgr_vm_nblocks = InvalidBlockNumber;

reln->smgr_which = 0; /* we only have md.c at present */

/* mark it not open */

for (forknum = 0; forknum <= MAX_FORKNUM; forknum++)

reln->md_fd[forknum] = NULL;

/* place it at head of unowned list (to make smgrsetowner cheap) */

reln->next_unowned_reln = first_unowned_reln;

first_unowned_reln = reln;

}

return reln;

}
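smgropen() is a look-up-or-create operation. It returns an existing entry untouched. A new entry has its fields reset and is pushed on the head of the unowned list, which, as the source comment notes, makes smgrsetowner() cheap. The sketch below reproduces that contract using a fixed-size array with linear search in place of the dynahash table. `SMgrSketch`, `KeySketch` and the capacity limit are hypothetical, and only a subset of the real fields is initialized. In the test, -1 plays the role of InvalidBackendId.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef uint32_t Oid;
typedef uint32_t BlockNumber;
typedef int BackendId;

#define InvalidBlockNumber ((BlockNumber) 0xFFFFFFFF)
#define SKETCH_CAPACITY 16          /* hypothetical fixed table size */

typedef struct KeySketch            /* stands in for RelFileNodeBackend */
{
    Oid         spcNode;
    Oid         dbNode;
    Oid         relNode;
    BackendId   backend;
} KeySketch;

typedef struct SMgrSketch
{
    bool        used;
    KeySketch   key;
    struct SMgrSketch **owner;
    BlockNumber targblock;
    struct SMgrSketch *next_unowned;
} SMgrSketch;

static SMgrSketch table[SKETCH_CAPACITY];
SMgrSketch *first_unowned_sketch = NULL;

static bool
key_equal(const KeySketch *a, const KeySketch *b)
{
    return a->spcNode == b->spcNode && a->dbNode == b->dbNode &&
        a->relNode == b->relNode && a->backend == b->backend;
}

/* Existing entry: returned as-is. New entry: initialized and pushed onto
 * the head of the unowned list. */
SMgrSketch *
smgropen_sketch(Oid spc, Oid db, Oid rel, BackendId backend)
{
    KeySketch   key = {spc, db, rel, backend};
    SMgrSketch *free_slot = NULL;

    for (int i = 0; i < SKETCH_CAPACITY; i++)
    {
        if (table[i].used && key_equal(&table[i].key, &key))
            return &table[i];
        if (!table[i].used && free_slot == NULL)
            free_slot = &table[i];
    }
    assert(free_slot != NULL);      /* a real hash table would grow instead */

    free_slot->used = true;
    free_slot->key = key;
    free_slot->owner = NULL;
    free_slot->targblock = InvalidBlockNumber;
    free_slot->next_unowned = first_unowned_sketch;
    first_unowned_sketch = free_slot;
    return free_slot;
}
```

Because the backend ID is part of the key, a permanent relation and a temp relation that share a RelFileNode still get separate entries.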

| Datum smgrout | ( | PG_FUNCTION_ARGS | ) |

Definition at line 51 of file smgrtype.c.

References elog, ERROR, i, NStorageManagers, PG_GETARG_INT16, PG_RETURN_CSTRING, and pstrdup().

{

int16 i = PG_GETARG_INT16(0);

char *s;

if (i >= NStorageManagers || i < 0)

elog(ERROR, "invalid storage manager ID: %d", i);

s = pstrdup(StorageManager[i].smgr_name);

PG_RETURN_CSTRING(s);

}
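smgrin() and smgrout() are the text I/O functions for the smgr type. One direction is a linear search of the StorageManager[] name table, and the other is a bounds-checked array index. The standalone sketch below assumes the table holds the single "magnetic disk" entry. Where the real functions call elog(ERROR), it returns -1 or NULL instead. `smgr_name_to_id()` and `smgr_id_to_name()` are hypothetical names.

```c
#include <assert.h>
#include <string.h>

/* Same shape as the name table in smgrtype.c (contents assumed). */
typedef struct
{
    const char *smgr_name;
} smgrid;

static const smgrid StorageManager[] = {
    {"magnetic disk"},
};

#define NStorageManagers ((int) (sizeof(StorageManager) / sizeof(smgrid)))

/* Name -> ID, like smgrin(); -1 where smgrin() would elog(ERROR). */
int
smgr_name_to_id(const char *s)
{
    for (int i = 0; i < NStorageManagers; i++)
    {
        if (strcmp(s, StorageManager[i].smgr_name) == 0)
            return i;
    }
    return -1;
}

/* ID -> name, like smgrout(); NULL where smgrout() would elog(ERROR).
 * The real function also copies the name with pstrdup(). */
const char *
smgr_id_to_name(int i)
{
    if (i >= NStorageManagers || i < 0)
        return NULL;
    return StorageManager[i].smgr_name;
}
```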

| void smgrpostckpt | ( | void | ) |

Definition at line 725 of file smgr.c.

References i, NSmgr, and f_smgr::smgr_post_ckpt.

Referenced by CreateCheckPoint().

{

int i;

for (i = 0; i < NSmgr; i++)

{

if (smgrsw[i].smgr_post_ckpt)

(*(smgrsw[i].smgr_post_ckpt)) ();

}

}

| void smgrpreckpt | ( | void | ) |

Definition at line 695 of file smgr.c.

References i, NSmgr, and f_smgr::smgr_pre_ckpt.

Referenced by CreateCheckPoint().

{

int i;

for (i = 0; i < NSmgr; i++)

{

if (smgrsw[i].smgr_pre_ckpt)

(*(smgrsw[i].smgr_pre_ckpt)) ();

}

}

| void smgrprefetch | ( | SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum | ) |

Definition at line 575 of file smgr.c.

References f_smgr::smgr_prefetch, and SMgrRelationData::smgr_which.

Referenced by LocalPrefetchBuffer(), and PrefetchBuffer().

{

(*(smgrsw[reln->smgr_which].smgr_prefetch)) (reln, forknum, blocknum);

}

| void smgrread | ( | SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum, char *buffer | ) |

Definition at line 589 of file smgr.c.

References f_smgr::smgr_read, and SMgrRelationData::smgr_which.

Referenced by copy_relation_data(), and ReadBuffer_common().

{

(*(smgrsw[reln->smgr_which].smgr_read)) (reln, forknum, blocknum, buffer);

}

| void smgrsetowner | ( | SMgrRelation *owner, SMgrRelation reln | ) |

Definition at line 192 of file smgr.c.

References Assert, NULL, remove_from_unowned_list(), and SMgrRelationData::smgr_owner.

{

/* We don't currently support "disowning" an SMgrRelation here */

Assert(owner != NULL);

/*

* First, unhook any old owner. (Normally there shouldn't be any, but it

* seems possible that this can happen during swap_relation_files()

* depending on the order of processing. It's ok to close the old

* relcache entry early in that case.)

*

* If there isn't an old owner, then the reln should be in the unowned

* list, and we need to remove it.

*/

if (reln->smgr_owner)

*(reln->smgr_owner) = NULL;

else

remove_from_unowned_list(reln);

/* Now establish the ownership relationship. */

reln->smgr_owner = owner;

*owner = reln;

}
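smgrsetowner() and smgrclose() maintain a two-way link. The SMgrRelation records the address of its owner's pointer, and that pointer in turn refers to the SMgrRelation. As a result, closing can reset the owner's pointer to NULL rather than leave it dangling. The minimal sketch below covers only that bookkeeping. It assumes a cut-down struct (`RelnSketch`) and a singly linked unowned list, and it omits the hash-table removal done by smgrclose(). All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Cut-down SMgrRelationData: only the ownership fields. */
typedef struct RelnSketch RelnSketch;
struct RelnSketch
{
    RelnSketch **owner;             /* like smgr_owner */
    RelnSketch  *next_unowned;      /* like next_unowned_reln */
};

RelnSketch *first_unowned = NULL;   /* like first_unowned_reln */

/* What smgropen() does for a new entry. */
void
push_unowned(RelnSketch *reln)
{
    reln->owner = NULL;
    reln->next_unowned = first_unowned;
    first_unowned = reln;
}

static void
remove_from_unowned(RelnSketch *reln)
{
    for (RelnSketch **link = &first_unowned; *link != NULL;
         link = &(*link)->next_unowned)
    {
        if (*link == reln)
        {
            *link = reln->next_unowned;
            reln->next_unowned = NULL;
            return;
        }
    }
}

/* Same steps as smgrsetowner(): unhook any old owner (or leave the unowned
 * list), then point reln and the new owner at each other. */
void
setowner_sketch(RelnSketch **owner, RelnSketch *reln)
{
    assert(owner != NULL);          /* "disowning" is not supported */
    if (reln->owner)
        *(reln->owner) = NULL;
    else
        remove_from_unowned(reln);
    reln->owner = owner;
    *owner = reln;
}

/* Ownership part of smgrclose(): the owner's pointer is cleared last. */
void
close_sketch(RelnSketch *reln)
{
    RelnSketch **owner = reln->owner;

    if (!owner)
        remove_from_unowned(reln);
    /* (smgrclose() removes the hash entry at this point) */
    if (owner)
        *owner = NULL;
    reln->owner = NULL;
}
```

Moving an SMgrRelation to a second owner clears the first owner's pointer, which is the swap_relation_files() case described in the comment above.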

| void smgrsync | ( | void | ) |

| void smgrtruncate | ( | SMgrRelation reln, ForkNumber forknum, BlockNumber nblocks | ) |

Definition at line 635 of file smgr.c.

References CacheInvalidateSmgr(), DropRelFileNodeBuffers(), SMgrRelationData::smgr_rnode, f_smgr::smgr_truncate, and SMgrRelationData::smgr_which.

Referenced by FreeSpaceMapTruncateRel(), RelationTruncate(), smgr_redo(), and visibilitymap_truncate().

{

/*

* Get rid of any buffers for the about-to-be-deleted blocks. bufmgr will

* just drop them without bothering to write the contents.

*/

DropRelFileNodeBuffers(reln->smgr_rnode, forknum, nblocks);

/*

* Send a shared-inval message to force other backends to close any smgr

* references they may have for this rel. This is useful because they

* might have open file pointers to segments that got removed, and/or

* smgr_targblock variables pointing past the new rel end. (The inval

* message will come back to our backend, too, causing a

* probably-unnecessary local smgr flush. But we don't expect that this

* is a performance-critical path.) As in the unlink code, we want to be

* sure the message is sent before we start changing things on-disk.

*/

CacheInvalidateSmgr(reln->smgr_rnode);

/*

* Do the truncation.

*/

(*(smgrsw[reln->smgr_which].smgr_truncate)) (reln, forknum, nblocks);

}
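Before anything changes on disk, smgrtruncate() discards cached pages at or beyond the new length. DropRelFileNodeBuffers() receives `nblocks` as the first block to delete, and smgrdounlinkfork() passes 0 to drop the whole fork. The toy sketch below applies that filter to a hypothetical buffer array (`BufSketch`), invalidating matching pages without writing them back.

```c
#include <assert.h>

/* Hypothetical buffer descriptor: relation, fork, block, valid flag. */
typedef struct BufSketch
{
    int         rel;
    int         fork;
    unsigned    block;
    int         valid;
} BufSketch;

/* Invalidate cached pages of (rel, fork) whose block number is at or past
 * first_del_block; contents are discarded, never written. */
int
drop_buffers_from(BufSketch *pool, int npool, int rel, int fork,
                  unsigned first_del_block)
{
    int         dropped = 0;

    for (int i = 0; i < npool; i++)
    {
        if (pool[i].valid && pool[i].rel == rel && pool[i].fork == fork &&
            pool[i].block >= first_del_block)
        {
            pool[i].valid = 0;
            dropped++;
        }
    }
    return dropped;
}
```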

| void smgrwrite | ( | SMgrRelation reln, ForkNumber forknum, BlockNumber blocknum, char *buffer, bool skipFsync | ) |

Definition at line 611 of file smgr.c.

References SMgrRelationData::smgr_which, and f_smgr::smgr_write.

Referenced by _bt_blwritepage(), btbuildempty(), FlushBuffer(), FlushRelationBuffers(), LocalBufferAlloc(), and spgbuildempty().

{

(*(smgrsw[reln->smgr_which].smgr_write)) (reln, forknum, blocknum,

buffer, skipFsync);

}
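Several of the thin wrappers in this section (smgrexists, smgrextend, smgrread, smgrwrite, and others) do nothing except index smgrsw by the relation's smgr_which and call through the function pointer. The self-contained sketch below shows that dispatch. It assumes a hypothetical in-memory storage manager (`mem_read`/`mem_write`) and simplified signatures that drop the fork number and skipFsync arguments.

```c
#include <assert.h>
#include <string.h>

#define SKETCH_BLCKSZ 16            /* tiny block size, illustration only */
#define SKETCH_NBLOCKS 4

typedef struct RelnSketch
{
    int         smgr_which;         /* index into the switch table */
    char        blocks[SKETCH_NBLOCKS][SKETCH_BLCKSZ];
} RelnSketch;

typedef struct f_smgr_sketch
{
    void        (*smgr_read) (RelnSketch *reln, unsigned blocknum, char *buffer);
    void        (*smgr_write) (RelnSketch *reln, unsigned blocknum, char *buffer);
} f_smgr_sketch;

static void
mem_read(RelnSketch *reln, unsigned blocknum, char *buffer)
{
    assert(blocknum < SKETCH_NBLOCKS);
    memcpy(buffer, reln->blocks[blocknum], SKETCH_BLCKSZ);
}

static void
mem_write(RelnSketch *reln, unsigned blocknum, char *buffer)
{
    assert(blocknum < SKETCH_NBLOCKS);
    memcpy(reln->blocks[blocknum], buffer, SKETCH_BLCKSZ);
}

/* Hypothetical switch table with a single in-memory manager. */
static const f_smgr_sketch smgrsw_sketch[] = {
    {mem_read, mem_write},
};

void
smgrread_sketch(RelnSketch *reln, unsigned blocknum, char *buffer)
{
    (*(smgrsw_sketch[reln->smgr_which].smgr_read)) (reln, blocknum, buffer);
}

void
smgrwrite_sketch(RelnSketch *reln, unsigned blocknum, char *buffer)
{
    (*(smgrsw_sketch[reln->smgr_which].smgr_write)) (reln, blocknum, buffer);
}
```

Adding a second storage manager would only mean appending another row to the table and storing its index in smgr_which. None of the callers would change.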

1.7.1
