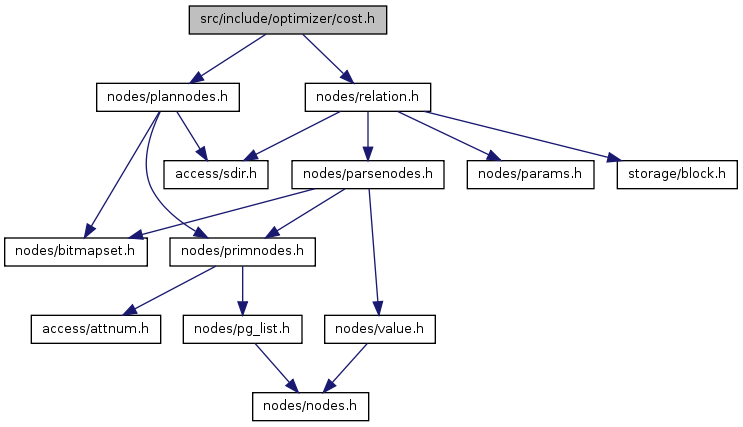

#include "nodes/plannodes.h"#include "nodes/relation.h"

Go to the source code of this file.

Definition at line 32 of file cost.h.

{

CONSTRAINT_EXCLUSION_OFF, /* do not use c_e */

CONSTRAINT_EXCLUSION_ON, /* apply c_e to all rels */

CONSTRAINT_EXCLUSION_PARTITION /* apply c_e to otherrels only */

} ConstraintExclusionType;

| double clamp_row_est | ( | double | nrows | ) |

Definition at line 154 of file costsize.c.

References rint().

Referenced by approx_tuple_count(), calc_joinrel_size_estimate(), cost_bitmap_heap_scan(), cost_index(), create_bitmap_subplan(), estimate_path_cost_size(), estimate_size(), expression_returns_set_rows(), final_cost_hashjoin(), get_parameterized_baserel_size(), initial_cost_mergejoin(), make_limit(), and set_baserel_size_estimates().

{

/*

* Force estimate to be at least one row, to make explain output look

* better and to avoid possible divide-by-zero when interpolating costs.

* Make it an integer, too.

*/

if (nrows <= 1.0)

nrows = 1.0;

else

nrows = rint(nrows);

return nrows;

}

| Selectivity clause_selectivity | ( | PlannerInfo * | root, | |

| Node * | clause, | |||

| int | varRelid, | |||

| JoinType | jointype, | |||

| SpecialJoinInfo * | sjinfo | |||

| ) |

Definition at line 484 of file clausesel.c.

References and_clause(), arg, OpExpr::args, bms_is_subset_singleton(), BooleanEqualOperator, booltestsel(), RestrictInfo::clause, RestrictInfo::clause_relids, clause_selectivity(), clauselist_selectivity(), Const::constisnull, Const::constvalue, CurrentOfExpr::cvarno, DatumGetBool, DEBUG4, elog, estimate_expression_value(), find_base_rel(), get_notclausearg(), OpExpr::inputcollid, InvalidOid, is_funcclause, is_opclause, IsA, JOIN_INNER, join_selectivity(), lfirst, list_make2, makeBoolConst(), RestrictInfo::norm_selec, not_clause(), NULL, nulltestsel(), OpExpr::opno, or_clause(), RestrictInfo::orclause, RestrictInfo::outer_selec, RestrictInfo::pseudoconstant, restriction_selectivity(), rowcomparesel(), s1, s2, scalararraysel(), treat_as_join_clause(), RelOptInfo::tuples, RangeQueryClause::var, Var::varlevelsup, and Var::varno.

Referenced by approx_tuple_count(), booltestsel(), clause_selectivity(), clauselist_selectivity(), and create_or_index_quals().

{

Selectivity s1 = 0.5; /* default for any unhandled clause type */

RestrictInfo *rinfo = NULL;

bool cacheable = false;

if (clause == NULL) /* can this still happen? */

return s1;

if (IsA(clause, RestrictInfo))

{

rinfo = (RestrictInfo *) clause;

/*

* If the clause is marked pseudoconstant, then it will be used as a

* gating qual and should not affect selectivity estimates; hence

* return 1.0. The only exception is that a constant FALSE may be

* taken as having selectivity 0.0, since it will surely mean no rows

* out of the plan. This case is simple enough that we need not

* bother caching the result.

*/

if (rinfo->pseudoconstant)

{

if (!IsA(rinfo->clause, Const))

return (Selectivity) 1.0;

}

/*

* If the clause is marked redundant, always return 1.0.

*/

if (rinfo->norm_selec > 1)

return (Selectivity) 1.0;

/*

* If possible, cache the result of the selectivity calculation for

* the clause. We can cache if varRelid is zero or the clause

* contains only vars of that relid --- otherwise varRelid will affect

* the result, so mustn't cache. Outer join quals might be examined

* with either their join's actual jointype or JOIN_INNER, so we need

* two cache variables to remember both cases. Note: we assume the

* result won't change if we are switching the input relations or

* considering a unique-ified case, so we only need one cache variable

* for all non-JOIN_INNER cases.

*/

if (varRelid == 0 ||

bms_is_subset_singleton(rinfo->clause_relids, varRelid))

{

/* Cacheable --- do we already have the result? */

if (jointype == JOIN_INNER)

{

if (rinfo->norm_selec >= 0)

return rinfo->norm_selec;

}

else

{

if (rinfo->outer_selec >= 0)

return rinfo->outer_selec;

}

cacheable = true;

}

/*

* Proceed with examination of contained clause. If the clause is an

* OR-clause, we want to look at the variant with sub-RestrictInfos,

* so that per-subclause selectivities can be cached.

*/

if (rinfo->orclause)

clause = (Node *) rinfo->orclause;

else

clause = (Node *) rinfo->clause;

}

if (IsA(clause, Var))

{

Var *var = (Var *) clause;

/*

* We probably shouldn't ever see an uplevel Var here, but if we do,

* return the default selectivity...

*/

if (var->varlevelsup == 0 &&

(varRelid == 0 || varRelid == (int) var->varno))

{

/*

* A Var at the top of a clause must be a bool Var. This is

* equivalent to the clause reln.attribute = 't', so we compute

* the selectivity as if that is what we have.

*/

s1 = restriction_selectivity(root,

BooleanEqualOperator,

list_make2(var,

makeBoolConst(true,

false)),

InvalidOid,

varRelid);

}

}

else if (IsA(clause, Const))

{

/* bool constant is pretty easy... */

Const *con = (Const *) clause;

s1 = con->constisnull ? 0.0 :

DatumGetBool(con->constvalue) ? 1.0 : 0.0;

}

else if (IsA(clause, Param))

{

/* see if we can replace the Param */

Node *subst = estimate_expression_value(root, clause);

if (IsA(subst, Const))

{

/* bool constant is pretty easy... */

Const *con = (Const *) subst;

s1 = con->constisnull ? 0.0 :

DatumGetBool(con->constvalue) ? 1.0 : 0.0;

}

else

{

/* XXX any way to do better than default? */

}

}

else if (not_clause(clause))

{

/* inverse of the selectivity of the underlying clause */

s1 = 1.0 - clause_selectivity(root,

(Node *) get_notclausearg((Expr *) clause),

varRelid,

jointype,

sjinfo);

}

else if (and_clause(clause))

{

/* share code with clauselist_selectivity() */

s1 = clauselist_selectivity(root,

((BoolExpr *) clause)->args,

varRelid,

jointype,

sjinfo);

}

else if (or_clause(clause))

{

/*

* Selectivities for an OR clause are computed as s1+s2 - s1*s2 to

* account for the probable overlap of selected tuple sets.

*

* XXX is this too conservative?

*/

ListCell *arg;

s1 = 0.0;

foreach(arg, ((BoolExpr *) clause)->args)

{

Selectivity s2 = clause_selectivity(root,

(Node *) lfirst(arg),

varRelid,

jointype,

sjinfo);

s1 = s1 + s2 - s1 * s2;

}

}

else if (is_opclause(clause) || IsA(clause, DistinctExpr))

{

OpExpr *opclause = (OpExpr *) clause;

Oid opno = opclause->opno;

if (treat_as_join_clause(clause, rinfo, varRelid, sjinfo))

{

/* Estimate selectivity for a join clause. */

s1 = join_selectivity(root, opno,

opclause->args,

opclause->inputcollid,

jointype,

sjinfo);

}

else

{

/* Estimate selectivity for a restriction clause. */

s1 = restriction_selectivity(root, opno,

opclause->args,

opclause->inputcollid,

varRelid);

}

/*

* DistinctExpr has the same representation as OpExpr, but the

* contained operator is "=" not "<>", so we must negate the result.

* This estimation method doesn't give the right behavior for nulls,

* but it's better than doing nothing.

*/

if (IsA(clause, DistinctExpr))

s1 = 1.0 - s1;

}

else if (is_funcclause(clause))

{

/*

* This is not an operator, so we guess at the selectivity. THIS IS A

* HACK TO GET V4 OUT THE DOOR. FUNCS SHOULD BE ABLE TO HAVE

* SELECTIVITIES THEMSELVES. -- JMH 7/9/92

*/

s1 = (Selectivity) 0.3333333;

}

#ifdef NOT_USED

else if (IsA(clause, SubPlan) ||

IsA(clause, AlternativeSubPlan))

{

/*

* Just for the moment! FIX ME! - vadim 02/04/98

*/

s1 = (Selectivity) 0.5;

}

#endif

else if (IsA(clause, ScalarArrayOpExpr))

{

/* Use node specific selectivity calculation function */

s1 = scalararraysel(root,

(ScalarArrayOpExpr *) clause,

treat_as_join_clause(clause, rinfo,

varRelid, sjinfo),

varRelid,

jointype,

sjinfo);

}

else if (IsA(clause, RowCompareExpr))

{

/* Use node specific selectivity calculation function */

s1 = rowcomparesel(root,

(RowCompareExpr *) clause,

varRelid,

jointype,

sjinfo);

}

else if (IsA(clause, NullTest))

{

/* Use node specific selectivity calculation function */

s1 = nulltestsel(root,

((NullTest *) clause)->nulltesttype,

(Node *) ((NullTest *) clause)->arg,

varRelid,

jointype,

sjinfo);

}

else if (IsA(clause, BooleanTest))

{

/* Use node specific selectivity calculation function */

s1 = booltestsel(root,

((BooleanTest *) clause)->booltesttype,

(Node *) ((BooleanTest *) clause)->arg,

varRelid,

jointype,

sjinfo);

}

else if (IsA(clause, CurrentOfExpr))

{

/* CURRENT OF selects at most one row of its table */

CurrentOfExpr *cexpr = (CurrentOfExpr *) clause;

RelOptInfo *crel = find_base_rel(root, cexpr->cvarno);

if (crel->tuples > 0)

s1 = 1.0 / crel->tuples;

}

else if (IsA(clause, RelabelType))

{

/* Not sure this case is needed, but it can't hurt */

s1 = clause_selectivity(root,

(Node *) ((RelabelType *) clause)->arg,

varRelid,

jointype,

sjinfo);

}

else if (IsA(clause, CoerceToDomain))

{

/* Not sure this case is needed, but it can't hurt */

s1 = clause_selectivity(root,

(Node *) ((CoerceToDomain *) clause)->arg,

varRelid,

jointype,

sjinfo);

}

/* Cache the result if possible */

if (cacheable)

{

if (jointype == JOIN_INNER)

rinfo->norm_selec = s1;

else

rinfo->outer_selec = s1;

}

#ifdef SELECTIVITY_DEBUG

elog(DEBUG4, "clause_selectivity: s1 %f", s1);

#endif /* SELECTIVITY_DEBUG */

return s1;

}

| Selectivity clauselist_selectivity | ( | PlannerInfo * | root, | |

| List * | clauses, | |||

| int | varRelid, | |||

| JoinType | jointype, | |||

| SpecialJoinInfo * | sjinfo | |||

| ) |

Definition at line 93 of file clausesel.c.

References addRangeClause(), OpExpr::args, bms_membership(), RestrictInfo::clause, RestrictInfo::clause_relids, clause_selectivity(), DEFAULT_INEQ_SEL, get_oprrest(), RangeQueryClause::have_hibound, RangeQueryClause::have_lobound, RangeQueryClause::hibound, IS_NULL, is_opclause, is_pseudo_constant_clause(), is_pseudo_constant_clause_relids(), IsA, RestrictInfo::left_relids, lfirst, linitial, list_length(), RangeQueryClause::lobound, lsecond, RangeQueryClause::next, NULL, nulltestsel(), NumRelids(), OpExpr::opno, pfree(), RestrictInfo::pseudoconstant, RestrictInfo::right_relids, s1, s2, and RangeQueryClause::var.

Referenced by btcostestimate(), calc_joinrel_size_estimate(), clause_selectivity(), compute_semi_anti_join_factors(), estimate_size(), genericcostestimate(), get_parameterized_baserel_size(), gincostestimate(), postgresGetForeignRelSize(), and set_baserel_size_estimates().

{

Selectivity s1 = 1.0;

RangeQueryClause *rqlist = NULL;

ListCell *l;

/*

* If there's exactly one clause, then no use in trying to match up pairs,

* so just go directly to clause_selectivity().

*/

if (list_length(clauses) == 1)

return clause_selectivity(root, (Node *) linitial(clauses),

varRelid, jointype, sjinfo);

/*

* Initial scan over clauses. Anything that doesn't look like a potential

* rangequery clause gets multiplied into s1 and forgotten. Anything that

* does gets inserted into an rqlist entry.

*/

foreach(l, clauses)

{

Node *clause = (Node *) lfirst(l);

RestrictInfo *rinfo;

Selectivity s2;

/* Always compute the selectivity using clause_selectivity */

s2 = clause_selectivity(root, clause, varRelid, jointype, sjinfo);

/*

* Check for being passed a RestrictInfo.

*

* If it's a pseudoconstant RestrictInfo, then s2 is either 1.0 or

* 0.0; just use that rather than looking for range pairs.

*/

if (IsA(clause, RestrictInfo))

{

rinfo = (RestrictInfo *) clause;

if (rinfo->pseudoconstant)

{

s1 = s1 * s2;

continue;

}

clause = (Node *) rinfo->clause;

}

else

rinfo = NULL;

/*

* See if it looks like a restriction clause with a pseudoconstant on

* one side. (Anything more complicated than that might not behave in

* the simple way we are expecting.) Most of the tests here can be

* done more efficiently with rinfo than without.

*/

if (is_opclause(clause) && list_length(((OpExpr *) clause)->args) == 2)

{

OpExpr *expr = (OpExpr *) clause;

bool varonleft = true;

bool ok;

if (rinfo)

{

ok = (bms_membership(rinfo->clause_relids) == BMS_SINGLETON) &&

(is_pseudo_constant_clause_relids(lsecond(expr->args),

rinfo->right_relids) ||

(varonleft = false,

is_pseudo_constant_clause_relids(linitial(expr->args),

rinfo->left_relids)));

}

else

{

ok = (NumRelids(clause) == 1) &&

(is_pseudo_constant_clause(lsecond(expr->args)) ||

(varonleft = false,

is_pseudo_constant_clause(linitial(expr->args))));

}

if (ok)

{

/*

* If it's not a "<" or ">" operator, just merge the

* selectivity in generically. But if it's the right oprrest,

* add the clause to rqlist for later processing.

*/

switch (get_oprrest(expr->opno))

{

case F_SCALARLTSEL:

addRangeClause(&rqlist, clause,

varonleft, true, s2);

break;

case F_SCALARGTSEL:

addRangeClause(&rqlist, clause,

varonleft, false, s2);

break;

default:

/* Just merge the selectivity in generically */

s1 = s1 * s2;

break;

}

continue; /* drop to loop bottom */

}

}

/* Not the right form, so treat it generically. */

s1 = s1 * s2;

}

/*

* Now scan the rangequery pair list.

*/

while (rqlist != NULL)

{

RangeQueryClause *rqnext;

if (rqlist->have_lobound && rqlist->have_hibound)

{

/* Successfully matched a pair of range clauses */

Selectivity s2;

/*

* Exact equality to the default value probably means the

* selectivity function punted. This is not airtight but should

* be good enough.

*/

if (rqlist->hibound == DEFAULT_INEQ_SEL ||

rqlist->lobound == DEFAULT_INEQ_SEL)

{

s2 = DEFAULT_RANGE_INEQ_SEL;

}

else

{

s2 = rqlist->hibound + rqlist->lobound - 1.0;

/* Adjust for double-exclusion of NULLs */

s2 += nulltestsel(root, IS_NULL, rqlist->var,

varRelid, jointype, sjinfo);

/*

* A zero or slightly negative s2 should be converted into a

* small positive value; we probably are dealing with a very

* tight range and got a bogus result due to roundoff errors.

* However, if s2 is very negative, then we probably have

* default selectivity estimates on one or both sides of the

* range that we failed to recognize above for some reason.

*/

if (s2 <= 0.0)

{

if (s2 < -0.01)

{

/*

* No data available --- use a default estimate that

* is small, but not real small.

*/

s2 = DEFAULT_RANGE_INEQ_SEL;

}

else

{

/*

* It's just roundoff error; use a small positive

* value

*/

s2 = 1.0e-10;

}

}

}

/* Merge in the selectivity of the pair of clauses */

s1 *= s2;

}

else

{

/* Only found one of a pair, merge it in generically */

if (rqlist->have_lobound)

s1 *= rqlist->lobound;

else

s1 *= rqlist->hibound;

}

/* release storage and advance */

rqnext = rqlist->next;

pfree(rqlist);

rqlist = rqnext;

}

return s1;

}

| void compute_semi_anti_join_factors | ( | PlannerInfo * | root, | |

| RelOptInfo * | outerrel, | |||

| RelOptInfo * | innerrel, | |||

| JoinType | jointype, | |||

| SpecialJoinInfo * | sjinfo, | |||

| List * | restrictlist, | |||

| SemiAntiJoinFactors * | semifactors | |||

| ) |

Definition at line 3191 of file costsize.c.

References Assert, clauselist_selectivity(), SpecialJoinInfo::delay_upper_joins, RestrictInfo::is_pushed_down, IsA, JOIN_ANTI, JOIN_INNER, SpecialJoinInfo::join_quals, JOIN_SEMI, SpecialJoinInfo::jointype, lappend(), lfirst, SpecialJoinInfo::lhs_strict, list_free(), SemiAntiJoinFactors::match_count, Max, SpecialJoinInfo::min_lefthand, SpecialJoinInfo::min_righthand, SemiAntiJoinFactors::outer_match_frac, RelOptInfo::relids, RelOptInfo::rows, SpecialJoinInfo::syn_lefthand, SpecialJoinInfo::syn_righthand, and SpecialJoinInfo::type.

Referenced by add_paths_to_joinrel().

{

Selectivity jselec;

Selectivity nselec;

Selectivity avgmatch;

SpecialJoinInfo norm_sjinfo;

List *joinquals;

ListCell *l;

/* Should only be called in these cases */

Assert(jointype == JOIN_SEMI || jointype == JOIN_ANTI);

/*

* In an ANTI join, we must ignore clauses that are "pushed down", since

* those won't affect the match logic. In a SEMI join, we do not

* distinguish joinquals from "pushed down" quals, so just use the whole

* restrictinfo list.

*/

if (jointype == JOIN_ANTI)

{

joinquals = NIL;

foreach(l, restrictlist)

{

RestrictInfo *rinfo = (RestrictInfo *) lfirst(l);

Assert(IsA(rinfo, RestrictInfo));

if (!rinfo->is_pushed_down)

joinquals = lappend(joinquals, rinfo);

}

}

else

joinquals = restrictlist;

/*

* Get the JOIN_SEMI or JOIN_ANTI selectivity of the join clauses.

*/

jselec = clauselist_selectivity(root,

joinquals,

0,

jointype,

sjinfo);

/*

* Also get the normal inner-join selectivity of the join clauses.

*/

norm_sjinfo.type = T_SpecialJoinInfo;

norm_sjinfo.min_lefthand = outerrel->relids;

norm_sjinfo.min_righthand = innerrel->relids;

norm_sjinfo.syn_lefthand = outerrel->relids;

norm_sjinfo.syn_righthand = innerrel->relids;

norm_sjinfo.jointype = JOIN_INNER;

/* we don't bother trying to make the remaining fields valid */

norm_sjinfo.lhs_strict = false;

norm_sjinfo.delay_upper_joins = false;

norm_sjinfo.join_quals = NIL;

nselec = clauselist_selectivity(root,

joinquals,

0,

JOIN_INNER,

&norm_sjinfo);

/* Avoid leaking a lot of ListCells */

if (jointype == JOIN_ANTI)

list_free(joinquals);

/*

* jselec can be interpreted as the fraction of outer-rel rows that have

* any matches (this is true for both SEMI and ANTI cases). And nselec is

* the fraction of the Cartesian product that matches. So, the average

* number of matches for each outer-rel row that has at least one match is

* nselec * inner_rows / jselec.

*

* Note: it is correct to use the inner rel's "rows" count here, even

* though we might later be considering a parameterized inner path with

* fewer rows. This is because we have included all the join clauses in

* the selectivity estimate.

*/

if (jselec > 0) /* protect against zero divide */

{

avgmatch = nselec * innerrel->rows / jselec;

/* Clamp to sane range */

avgmatch = Max(1.0, avgmatch);

}

else

avgmatch = 1.0;

semifactors->outer_match_frac = jselec;

semifactors->match_count = avgmatch;

}

| void cost_agg | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| AggStrategy | aggstrategy, | |||

| const AggClauseCosts * | aggcosts, | |||

| int | numGroupCols, | |||

| double | numGroups, | |||

| Cost | input_startup_cost, | |||

| Cost | input_total_cost, | |||

| double | input_tuples | |||

| ) |

Definition at line 1467 of file costsize.c.

References AGG_HASHED, AGG_PLAIN, AGG_SORTED, Assert, cpu_operator_cost, cpu_tuple_cost, AggClauseCosts::finalCost, MemSet, NULL, QualCost::per_tuple, Path::rows, QualCost::startup, Path::startup_cost, Path::total_cost, and AggClauseCosts::transCost.

Referenced by choose_hashed_distinct(), choose_hashed_grouping(), choose_hashed_setop(), create_unique_path(), make_agg(), and optimize_minmax_aggregates().

{

double output_tuples;

Cost startup_cost;

Cost total_cost;

AggClauseCosts dummy_aggcosts;

/* Use all-zero per-aggregate costs if NULL is passed */

if (aggcosts == NULL)

{

Assert(aggstrategy == AGG_HASHED);

MemSet(&dummy_aggcosts, 0, sizeof(AggClauseCosts));

aggcosts = &dummy_aggcosts;

}

/*

* The transCost.per_tuple component of aggcosts should be charged once

* per input tuple, corresponding to the costs of evaluating the aggregate

* transfns and their input expressions (with any startup cost of course

* charged but once). The finalCost component is charged once per output

* tuple, corresponding to the costs of evaluating the finalfns.

*

* If we are grouping, we charge an additional cpu_operator_cost per

* grouping column per input tuple for grouping comparisons.

*

* We will produce a single output tuple if not grouping, and a tuple per

* group otherwise. We charge cpu_tuple_cost for each output tuple.

*

* Note: in this cost model, AGG_SORTED and AGG_HASHED have exactly the

* same total CPU cost, but AGG_SORTED has lower startup cost. If the

* input path is already sorted appropriately, AGG_SORTED should be

* preferred (since it has no risk of memory overflow). This will happen

* as long as the computed total costs are indeed exactly equal --- but if

* there's roundoff error we might do the wrong thing. So be sure that

* the computations below form the same intermediate values in the same

* order.

*/

if (aggstrategy == AGG_PLAIN)

{

startup_cost = input_total_cost;

startup_cost += aggcosts->transCost.startup;

startup_cost += aggcosts->transCost.per_tuple * input_tuples;

startup_cost += aggcosts->finalCost;

/* we aren't grouping */

total_cost = startup_cost + cpu_tuple_cost;

output_tuples = 1;

}

else if (aggstrategy == AGG_SORTED)

{

/* Here we are able to deliver output on-the-fly */

startup_cost = input_startup_cost;

total_cost = input_total_cost;

/* calcs phrased this way to match HASHED case, see note above */

total_cost += aggcosts->transCost.startup;

total_cost += aggcosts->transCost.per_tuple * input_tuples;

total_cost += (cpu_operator_cost * numGroupCols) * input_tuples;

total_cost += aggcosts->finalCost * numGroups;

total_cost += cpu_tuple_cost * numGroups;

output_tuples = numGroups;

}

else

{

/* must be AGG_HASHED */

startup_cost = input_total_cost;

startup_cost += aggcosts->transCost.startup;

startup_cost += aggcosts->transCost.per_tuple * input_tuples;

startup_cost += (cpu_operator_cost * numGroupCols) * input_tuples;

total_cost = startup_cost;

total_cost += aggcosts->finalCost * numGroups;

total_cost += cpu_tuple_cost * numGroups;

output_tuples = numGroups;

}

path->rows = output_tuples;

path->startup_cost = startup_cost;

path->total_cost = total_cost;

}

| void cost_bitmap_and_node | ( | BitmapAndPath * | path, | |

| PlannerInfo * | root | |||

| ) |

Definition at line 776 of file costsize.c.

References BitmapAndPath::bitmapquals, BitmapAndPath::bitmapselectivity, cost_bitmap_tree_node(), cpu_operator_cost, lfirst, list_head(), BitmapAndPath::path, Path::rows, Path::startup_cost, subpath(), and Path::total_cost.

Referenced by bitmap_and_cost_est(), and create_bitmap_and_path().

{

Cost totalCost;

Selectivity selec;

ListCell *l;

/*

* We estimate AND selectivity on the assumption that the inputs are

* independent. This is probably often wrong, but we don't have the info

* to do better.

*

* The runtime cost of the BitmapAnd itself is estimated at 100x

* cpu_operator_cost for each tbm_intersect needed. Probably too small,

* definitely too simplistic?

*/

totalCost = 0.0;

selec = 1.0;

foreach(l, path->bitmapquals)

{

Path *subpath = (Path *) lfirst(l);

Cost subCost;

Selectivity subselec;

cost_bitmap_tree_node(subpath, &subCost, &subselec);

selec *= subselec;

totalCost += subCost;

if (l != list_head(path->bitmapquals))

totalCost += 100.0 * cpu_operator_cost;

}

path->bitmapselectivity = selec;

path->path.rows = 0; /* per above, not used */

path->path.startup_cost = totalCost;

path->path.total_cost = totalCost;

}

| void cost_bitmap_heap_scan | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| RelOptInfo * | baserel, | |||

| ParamPathInfo * | param_info, | |||

| Path * | bitmapqual, | |||

| double | loop_count | |||

| ) |

Definition at line 613 of file costsize.c.

References Assert, clamp_row_est(), cost_bitmap_tree_node(), cpu_tuple_cost, disable_cost, enable_bitmapscan, get_indexpath_pages(), get_restriction_qual_cost(), get_tablespace_page_costs(), index_pages_fetched(), IsA, RelOptInfo::pages, QualCost::per_tuple, ParamPathInfo::ppi_rows, RelOptInfo::relid, RelOptInfo::reltablespace, RelOptInfo::rows, Path::rows, RTE_RELATION, RelOptInfo::rtekind, QualCost::startup, Path::startup_cost, T, Path::total_cost, and RelOptInfo::tuples.

Referenced by bitmap_and_cost_est(), bitmap_scan_cost_est(), and create_bitmap_heap_path().

{

Cost startup_cost = 0;

Cost run_cost = 0;

Cost indexTotalCost;

Selectivity indexSelectivity;

QualCost qpqual_cost;

Cost cpu_per_tuple;

Cost cost_per_page;

double tuples_fetched;

double pages_fetched;

double spc_seq_page_cost,

spc_random_page_cost;

double T;

/* Should only be applied to base relations */

Assert(IsA(baserel, RelOptInfo));

Assert(baserel->relid > 0);

Assert(baserel->rtekind == RTE_RELATION);

/* Mark the path with the correct row estimate */

if (param_info)

path->rows = param_info->ppi_rows;

else

path->rows = baserel->rows;

if (!enable_bitmapscan)

startup_cost += disable_cost;

/*

* Fetch total cost of obtaining the bitmap, as well as its total

* selectivity.

*/

cost_bitmap_tree_node(bitmapqual, &indexTotalCost, &indexSelectivity);

startup_cost += indexTotalCost;

/* Fetch estimated page costs for tablespace containing table. */

get_tablespace_page_costs(baserel->reltablespace,

&spc_random_page_cost,

&spc_seq_page_cost);

/*

* Estimate number of main-table pages fetched.

*/

tuples_fetched = clamp_row_est(indexSelectivity * baserel->tuples);

T = (baserel->pages > 1) ? (double) baserel->pages : 1.0;

if (loop_count > 1)

{

/*

* For repeated bitmap scans, scale up the number of tuples fetched in

* the Mackert and Lohman formula by the number of scans, so that we

* estimate the number of pages fetched by all the scans. Then

* pro-rate for one scan.

*/

pages_fetched = index_pages_fetched(tuples_fetched * loop_count,

baserel->pages,

get_indexpath_pages(bitmapqual),

root);

pages_fetched /= loop_count;

}

else

{

/*

* For a single scan, the number of heap pages that need to be fetched

* is the same as the Mackert and Lohman formula for the case T <= b

* (ie, no re-reads needed).

*/

pages_fetched = (2.0 * T * tuples_fetched) / (2.0 * T + tuples_fetched);

}

if (pages_fetched >= T)

pages_fetched = T;

else

pages_fetched = ceil(pages_fetched);

/*

* For small numbers of pages we should charge spc_random_page_cost

* apiece, while if nearly all the table's pages are being read, it's more

* appropriate to charge spc_seq_page_cost apiece. The effect is

* nonlinear, too. For lack of a better idea, interpolate like this to

* determine the cost per page.

*/

if (pages_fetched >= 2.0)

cost_per_page = spc_random_page_cost -

(spc_random_page_cost - spc_seq_page_cost)

* sqrt(pages_fetched / T);

else

cost_per_page = spc_random_page_cost;

run_cost += pages_fetched * cost_per_page;

/*

* Estimate CPU costs per tuple.

*

* Often the indexquals don't need to be rechecked at each tuple ... but

* not always, especially not if there are enough tuples involved that the

* bitmaps become lossy. For the moment, just assume they will be

* rechecked always. This means we charge the full freight for all the

* scan clauses.

*/

get_restriction_qual_cost(root, baserel, param_info, &qpqual_cost);

startup_cost += qpqual_cost.startup;

cpu_per_tuple = cpu_tuple_cost + qpqual_cost.per_tuple;

run_cost += cpu_per_tuple * tuples_fetched;

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_bitmap_or_node | ( | BitmapOrPath * | path, | |

| PlannerInfo * | root | |||

| ) |

Definition at line 820 of file costsize.c.

References BitmapOrPath::bitmapquals, BitmapOrPath::bitmapselectivity, cost_bitmap_tree_node(), cpu_operator_cost, IsA, lfirst, list_head(), Min, BitmapOrPath::path, Path::rows, Path::startup_cost, subpath(), and Path::total_cost.

Referenced by create_bitmap_or_path().

{

Cost totalCost;

Selectivity selec;

ListCell *l;

/*

* We estimate OR selectivity on the assumption that the inputs are

* non-overlapping, since that's often the case in "x IN (list)" type

* situations. Of course, we clamp to 1.0 at the end.

*

* The runtime cost of the BitmapOr itself is estimated at 100x

* cpu_operator_cost for each tbm_union needed. Probably too small,

* definitely too simplistic? We are aware that the tbm_unions are

* optimized out when the inputs are BitmapIndexScans.

*/

totalCost = 0.0;

selec = 0.0;

foreach(l, path->bitmapquals)

{

Path *subpath = (Path *) lfirst(l);

Cost subCost;

Selectivity subselec;

cost_bitmap_tree_node(subpath, &subCost, &subselec);

selec += subselec;

totalCost += subCost;

if (l != list_head(path->bitmapquals) &&

!IsA(subpath, IndexPath))

totalCost += 100.0 * cpu_operator_cost;

}

path->bitmapselectivity = Min(selec, 1.0);

path->path.rows = 0; /* per above, not used */

path->path.startup_cost = totalCost;

path->path.total_cost = totalCost;

}

| void cost_bitmap_tree_node | ( | Path * | path, | |

| Cost * | cost, | |||

| Selectivity * | selec | |||

| ) |

Definition at line 733 of file costsize.c.

References cpu_operator_cost, elog, ERROR, IsA, nodeTag, Path::rows, and Path::total_cost.

Referenced by choose_bitmap_and(), cost_bitmap_and_node(), cost_bitmap_heap_scan(), cost_bitmap_or_node(), and path_usage_comparator().

{

if (IsA(path, IndexPath))

{

*cost = ((IndexPath *) path)->indextotalcost;

*selec = ((IndexPath *) path)->indexselectivity;

/*

* Charge a small amount per retrieved tuple to reflect the costs of

* manipulating the bitmap. This is mostly to make sure that a bitmap

* scan doesn't look to be the same cost as an indexscan to retrieve a

* single tuple.

*/

*cost += 0.1 * cpu_operator_cost * path->rows;

}

else if (IsA(path, BitmapAndPath))

{

*cost = path->total_cost;

*selec = ((BitmapAndPath *) path)->bitmapselectivity;

}

else if (IsA(path, BitmapOrPath))

{

*cost = path->total_cost;

*selec = ((BitmapOrPath *) path)->bitmapselectivity;

}

else

{

elog(ERROR, "unrecognized node type: %d", nodeTag(path));

*cost = *selec = 0; /* keep compiler quiet */

}

}

| void cost_ctescan | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| RelOptInfo * | baserel, | |||

| ParamPathInfo * | param_info | |||

| ) |

Definition at line 1114 of file costsize.c.

References Assert, cpu_tuple_cost, get_restriction_qual_cost(), QualCost::per_tuple, ParamPathInfo::ppi_rows, RelOptInfo::relid, RelOptInfo::rows, Path::rows, RTE_CTE, RelOptInfo::rtekind, QualCost::startup, Path::startup_cost, Path::total_cost, and RelOptInfo::tuples.

Referenced by create_ctescan_path(), and create_worktablescan_path().

{

Cost startup_cost = 0;

Cost run_cost = 0;

QualCost qpqual_cost;

Cost cpu_per_tuple;

/* Should only be applied to base relations that are CTEs */

Assert(baserel->relid > 0);

Assert(baserel->rtekind == RTE_CTE);

/* Mark the path with the correct row estimate */

if (param_info)

path->rows = param_info->ppi_rows;

else

path->rows = baserel->rows;

/* Charge one CPU tuple cost per row for tuplestore manipulation */

cpu_per_tuple = cpu_tuple_cost;

/* Add scanning CPU costs */

get_restriction_qual_cost(root, baserel, param_info, &qpqual_cost);

startup_cost += qpqual_cost.startup;

cpu_per_tuple += cpu_tuple_cost + qpqual_cost.per_tuple;

run_cost += cpu_per_tuple * baserel->tuples;

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_functionscan | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| RelOptInfo * | baserel, | |||

| ParamPathInfo * | param_info | |||

| ) |

Definition at line 1011 of file costsize.c.

References Assert, cost_qual_eval_node(), cpu_tuple_cost, RangeTblEntry::funcexpr, get_restriction_qual_cost(), QualCost::per_tuple, planner_rt_fetch, ParamPathInfo::ppi_rows, RelOptInfo::relid, RelOptInfo::rows, Path::rows, RTE_FUNCTION, RangeTblEntry::rtekind, QualCost::startup, Path::startup_cost, Path::total_cost, and RelOptInfo::tuples.

Referenced by create_functionscan_path().

{

Cost startup_cost = 0;

Cost run_cost = 0;

QualCost qpqual_cost;

Cost cpu_per_tuple;

RangeTblEntry *rte;

QualCost exprcost;

/* Should only be applied to base relations that are functions */

Assert(baserel->relid > 0);

rte = planner_rt_fetch(baserel->relid, root);

Assert(rte->rtekind == RTE_FUNCTION);

/* Mark the path with the correct row estimate */

if (param_info)

path->rows = param_info->ppi_rows;

else

path->rows = baserel->rows;

/*

* Estimate costs of executing the function expression.

*

* Currently, nodeFunctionscan.c always executes the function to

* completion before returning any rows, and caches the results in a

* tuplestore. So the function eval cost is all startup cost, and per-row

* costs are minimal.

*

* XXX in principle we ought to charge tuplestore spill costs if the

* number of rows is large. However, given how phony our rowcount

* estimates for functions tend to be, there's not a lot of point in that

* refinement right now.

*/

cost_qual_eval_node(&exprcost, rte->funcexpr, root);

startup_cost += exprcost.startup + exprcost.per_tuple;

/* Add scanning CPU costs */

get_restriction_qual_cost(root, baserel, param_info, &qpqual_cost);

startup_cost += qpqual_cost.startup;

cpu_per_tuple = cpu_tuple_cost + qpqual_cost.per_tuple;

run_cost += cpu_per_tuple * baserel->tuples;

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_group | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| int | numGroupCols, | |||

| double | numGroups, | |||

| Cost | input_startup_cost, | |||

| Cost | input_total_cost, | |||

| double | input_tuples | |||

| ) |

Definition at line 1621 of file costsize.c.

References cpu_operator_cost, Path::rows, Path::startup_cost, and Path::total_cost.

Referenced by choose_hashed_distinct(), choose_hashed_grouping(), choose_hashed_setop(), and make_group().

{

Cost startup_cost;

Cost total_cost;

startup_cost = input_startup_cost;

total_cost = input_total_cost;

/*

* Charge one cpu_operator_cost per comparison per input tuple. We assume

* all columns get compared at most of the tuples.

*/

total_cost += cpu_operator_cost * input_tuples * numGroupCols;

path->rows = numGroups;

path->startup_cost = startup_cost;

path->total_cost = total_cost;

}

| void cost_index | ( | IndexPath * | path, | |

| PlannerInfo * | root, | |||

| double | loop_count | |||

| ) |

Definition at line 240 of file costsize.c.

References RelOptInfo::allvisfrac, IndexOptInfo::amcostestimate, Assert, RelOptInfo::baserestrictinfo, clamp_row_est(), cost_qual_eval(), cpu_tuple_cost, disable_cost, enable_indexscan, Float8GetDatum(), get_tablespace_page_costs(), index_pages_fetched(), IndexPath::indexinfo, IndexPath::indexquals, IndexPath::indexselectivity, IndexPath::indextotalcost, IsA, list_concat(), list_copy(), list_difference_ptr(), OidFunctionCall7, IndexOptInfo::pages, RelOptInfo::pages, Path::param_info, IndexPath::path, Path::pathtype, QualCost::per_tuple, PointerGetDatum, ParamPathInfo::ppi_clauses, ParamPathInfo::ppi_rows, IndexOptInfo::rel, RelOptInfo::relid, RelOptInfo::reltablespace, RelOptInfo::rows, Path::rows, RTE_RELATION, RelOptInfo::rtekind, QualCost::startup, Path::startup_cost, Path::total_cost, and RelOptInfo::tuples.

Referenced by create_index_path(), and reparameterize_path().

{

IndexOptInfo *index = path->indexinfo;

RelOptInfo *baserel = index->rel;

bool indexonly = (path->path.pathtype == T_IndexOnlyScan);

List *allclauses;

Cost startup_cost = 0;

Cost run_cost = 0;

Cost indexStartupCost;

Cost indexTotalCost;

Selectivity indexSelectivity;

double indexCorrelation,

csquared;

double spc_seq_page_cost,

spc_random_page_cost;

Cost min_IO_cost,

max_IO_cost;

QualCost qpqual_cost;

Cost cpu_per_tuple;

double tuples_fetched;

double pages_fetched;

/* Should only be applied to base relations */

Assert(IsA(baserel, RelOptInfo) &&

IsA(index, IndexOptInfo));

Assert(baserel->relid > 0);

Assert(baserel->rtekind == RTE_RELATION);

/* Mark the path with the correct row estimate */

if (path->path.param_info)

{

path->path.rows = path->path.param_info->ppi_rows;

/* also get the set of clauses that should be enforced by the scan */

allclauses = list_concat(list_copy(path->path.param_info->ppi_clauses),

baserel->baserestrictinfo);

}

else

{

path->path.rows = baserel->rows;

/* allclauses should just be the rel's restriction clauses */

allclauses = baserel->baserestrictinfo;

}

if (!enable_indexscan)

startup_cost += disable_cost;

/* we don't need to check enable_indexonlyscan; indxpath.c does that */

/*

* Call index-access-method-specific code to estimate the processing cost

* for scanning the index, as well as the selectivity of the index (ie,

* the fraction of main-table tuples we will have to retrieve) and its

* correlation to the main-table tuple order.

*/

OidFunctionCall7(index->amcostestimate,

PointerGetDatum(root),

PointerGetDatum(path),

Float8GetDatum(loop_count),

PointerGetDatum(&indexStartupCost),

PointerGetDatum(&indexTotalCost),

PointerGetDatum(&indexSelectivity),

PointerGetDatum(&indexCorrelation));

/*

* Save amcostestimate's results for possible use in bitmap scan planning.

* We don't bother to save indexStartupCost or indexCorrelation, because a

* bitmap scan doesn't care about either.

*/

path->indextotalcost = indexTotalCost;

path->indexselectivity = indexSelectivity;

/* all costs for touching index itself included here */

startup_cost += indexStartupCost;

run_cost += indexTotalCost - indexStartupCost;

/* estimate number of main-table tuples fetched */

tuples_fetched = clamp_row_est(indexSelectivity * baserel->tuples);

/* fetch estimated page costs for tablespace containing table */

get_tablespace_page_costs(baserel->reltablespace,

&spc_random_page_cost,

&spc_seq_page_cost);

/*----------

* Estimate number of main-table pages fetched, and compute I/O cost.

*

* When the index ordering is uncorrelated with the table ordering,

* we use an approximation proposed by Mackert and Lohman (see

* index_pages_fetched() for details) to compute the number of pages

* fetched, and then charge spc_random_page_cost per page fetched.

*

* When the index ordering is exactly correlated with the table ordering

* (just after a CLUSTER, for example), the number of pages fetched should

* be exactly selectivity * table_size. What's more, all but the first

* will be sequential fetches, not the random fetches that occur in the

* uncorrelated case. So if the number of pages is more than 1, we

* ought to charge

* spc_random_page_cost + (pages_fetched - 1) * spc_seq_page_cost

* For partially-correlated indexes, we ought to charge somewhere between

* these two estimates. We currently interpolate linearly between the

* estimates based on the correlation squared (XXX is that appropriate?).

*

* If it's an index-only scan, then we will not need to fetch any heap

* pages for which the visibility map shows all tuples are visible.

* Hence, reduce the estimated number of heap fetches accordingly.

* We use the measured fraction of the entire heap that is all-visible,

* which might not be particularly relevant to the subset of the heap

* that this query will fetch; but it's not clear how to do better.

*----------

*/

if (loop_count > 1)

{

/*

* For repeated indexscans, the appropriate estimate for the

* uncorrelated case is to scale up the number of tuples fetched in

* the Mackert and Lohman formula by the number of scans, so that we

* estimate the number of pages fetched by all the scans; then

* pro-rate the costs for one scan. In this case we assume all the

* fetches are random accesses.

*/

pages_fetched = index_pages_fetched(tuples_fetched * loop_count,

baserel->pages,

(double) index->pages,

root);

if (indexonly)

pages_fetched = ceil(pages_fetched * (1.0 - baserel->allvisfrac));

max_IO_cost = (pages_fetched * spc_random_page_cost) / loop_count;

/*

* In the perfectly correlated case, the number of pages touched by

* each scan is selectivity * table_size, and we can use the Mackert

* and Lohman formula at the page level to estimate how much work is

* saved by caching across scans. We still assume all the fetches are

* random, though, which is an overestimate that's hard to correct for

* without double-counting the cache effects. (But in most cases

* where such a plan is actually interesting, only one page would get

* fetched per scan anyway, so it shouldn't matter much.)

*/

pages_fetched = ceil(indexSelectivity * (double) baserel->pages);

pages_fetched = index_pages_fetched(pages_fetched * loop_count,

baserel->pages,

(double) index->pages,

root);

if (indexonly)

pages_fetched = ceil(pages_fetched * (1.0 - baserel->allvisfrac));

min_IO_cost = (pages_fetched * spc_random_page_cost) / loop_count;

}

else

{

/*

* Normal case: apply the Mackert and Lohman formula, and then

* interpolate between that and the correlation-derived result.

*/

pages_fetched = index_pages_fetched(tuples_fetched,

baserel->pages,

(double) index->pages,

root);

if (indexonly)

pages_fetched = ceil(pages_fetched * (1.0 - baserel->allvisfrac));

/* max_IO_cost is for the perfectly uncorrelated case (csquared=0) */

max_IO_cost = pages_fetched * spc_random_page_cost;

/* min_IO_cost is for the perfectly correlated case (csquared=1) */

pages_fetched = ceil(indexSelectivity * (double) baserel->pages);

if (indexonly)

pages_fetched = ceil(pages_fetched * (1.0 - baserel->allvisfrac));

if (pages_fetched > 0)

{

min_IO_cost = spc_random_page_cost;

if (pages_fetched > 1)

min_IO_cost += (pages_fetched - 1) * spc_seq_page_cost;

}

else

min_IO_cost = 0;

}

/*

* Now interpolate based on estimated index order correlation to get total

* disk I/O cost for main table accesses.

*/

csquared = indexCorrelation * indexCorrelation;

run_cost += max_IO_cost + csquared * (min_IO_cost - max_IO_cost);

/*

* Estimate CPU costs per tuple.

*

* What we want here is cpu_tuple_cost plus the evaluation costs of any

* qual clauses that we have to evaluate as qpquals. We approximate that

* list as allclauses minus any clauses appearing in indexquals. (We

* assume that pointer equality is enough to recognize duplicate

* RestrictInfos.) This method neglects some considerations such as

* clauses that needn't be checked because they are implied by a partial

* index's predicate. It does not seem worth the cycles to try to factor

* those things in at this stage, even though createplan.c will take pains

* to remove such unnecessary clauses from the qpquals list if this path

* is selected for use.

*/

cost_qual_eval(&qpqual_cost,

list_difference_ptr(allclauses, path->indexquals),

root);

startup_cost += qpqual_cost.startup;

cpu_per_tuple = cpu_tuple_cost + qpqual_cost.per_tuple;

run_cost += cpu_per_tuple * tuples_fetched;

path->path.startup_cost = startup_cost;

path->path.total_cost = startup_cost + run_cost;

}

| void cost_material | ( | Path * | path, | |

| Cost | input_startup_cost, | |||

| Cost | input_total_cost, | |||

| double | tuples, | |||

| int | width | |||

| ) |

Definition at line 1413 of file costsize.c.

References cpu_operator_cost, relation_byte_size(), Path::rows, seq_page_cost, Path::startup_cost, Path::total_cost, and work_mem.

Referenced by create_material_path(), and materialize_finished_plan().

{

Cost startup_cost = input_startup_cost;

Cost run_cost = input_total_cost - input_startup_cost;

double nbytes = relation_byte_size(tuples, width);

long work_mem_bytes = work_mem * 1024L;

path->rows = tuples;

/*

* Whether spilling or not, charge 2x cpu_operator_cost per tuple to

* reflect bookkeeping overhead. (This rate must be more than what

* cost_rescan charges for materialize, ie, cpu_operator_cost per tuple;

* if it is exactly the same then there will be a cost tie between

* nestloop with A outer, materialized B inner and nestloop with B outer,

* materialized A inner. The extra cost ensures we'll prefer

* materializing the smaller rel.) Note that this is normally a good deal

* less than cpu_tuple_cost; which is OK because a Material plan node

* doesn't do qual-checking or projection, so it's got less overhead than

* most plan nodes.

*/

run_cost += 2 * cpu_operator_cost * tuples;

/*

* If we will spill to disk, charge at the rate of seq_page_cost per page.

* This cost is assumed to be evenly spread through the plan run phase,

* which isn't exactly accurate but our cost model doesn't allow for

* nonuniform costs within the run phase.

*/

if (nbytes > work_mem_bytes)

{

double npages = ceil(nbytes / BLCKSZ);

run_cost += seq_page_cost * npages;

}

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_merge_append | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| List * | pathkeys, | |||

| int | n_streams, | |||

| Cost | input_startup_cost, | |||

| Cost | input_total_cost, | |||

| double | tuples | |||

| ) |

Definition at line 1362 of file costsize.c.

References cpu_operator_cost, LOG2, Path::startup_cost, and Path::total_cost.

Referenced by create_merge_append_path().

{

Cost startup_cost = 0;

Cost run_cost = 0;

Cost comparison_cost;

double N;

double logN;

/*

* Avoid log(0)...

*/

N = (n_streams < 2) ? 2.0 : (double) n_streams;

logN = LOG2(N);

/* Assumed cost per tuple comparison */

comparison_cost = 2.0 * cpu_operator_cost;

/* Heap creation cost */

startup_cost += comparison_cost * N * logN;

/* Per-tuple heap maintenance cost */

run_cost += tuples * comparison_cost * 2.0 * logN;

/*

* Also charge a small amount (arbitrarily set equal to operator cost) per

* extracted tuple. We don't charge cpu_tuple_cost because a MergeAppend

* node doesn't do qual-checking or projection, so it has less overhead

* than most plan nodes.

*/

run_cost += cpu_operator_cost * tuples;

path->startup_cost = startup_cost + input_startup_cost;

path->total_cost = startup_cost + run_cost + input_total_cost;

}

| void cost_qual_eval | ( | QualCost * | cost, | |

| List * | quals, | |||

| PlannerInfo * | root | |||

| ) |

Definition at line 2898 of file costsize.c.

References cost_qual_eval_walker(), lfirst, QualCost::per_tuple, cost_qual_eval_context::root, QualCost::startup, and cost_qual_eval_context::total.

Referenced by add_tlist_costs_to_plan(), cost_index(), cost_subplan(), cost_tidscan(), final_cost_hashjoin(), final_cost_mergejoin(), final_cost_nestloop(), genericcostestimate(), get_restriction_qual_cost(), gincostestimate(), inline_function(), make_agg(), make_group(), make_result(), plan_cluster_use_sort(), postgresGetForeignRelSize(), set_baserel_size_estimates(), and set_foreign_size_estimates().

{

cost_qual_eval_context context;

ListCell *l;

context.root = root;

context.total.startup = 0;

context.total.per_tuple = 0;

/* We don't charge any cost for the implicit ANDing at top level ... */

foreach(l, quals)

{

Node *qual = (Node *) lfirst(l);

cost_qual_eval_walker(qual, &context);

}

*cost = context.total;

}

| void cost_qual_eval_node | ( | QualCost * | cost, | |

| Node * | qual, | |||

| PlannerInfo * | root | |||

| ) |

Definition at line 2924 of file costsize.c.

References cost_qual_eval_walker(), QualCost::per_tuple, cost_qual_eval_context::root, QualCost::startup, and cost_qual_eval_context::total.

Referenced by cost_functionscan(), cost_windowagg(), count_agg_clauses_walker(), and order_qual_clauses().

{

cost_qual_eval_context context;

context.root = root;

context.total.startup = 0;

context.total.per_tuple = 0;

cost_qual_eval_walker(qual, &context);

*cost = context.total;

}

Definition at line 1158 of file costsize.c.

References cpu_tuple_cost, Max, Plan::plan_rows, Plan::plan_width, Plan::startup_cost, and Plan::total_cost.

Referenced by make_recursive_union().

{

Cost startup_cost;

Cost total_cost;

double total_rows;

/* We probably have decent estimates for the non-recursive term */

startup_cost = nrterm->startup_cost;

total_cost = nrterm->total_cost;

total_rows = nrterm->plan_rows;

/*

* We arbitrarily assume that about 10 recursive iterations will be

* needed, and that we've managed to get a good fix on the cost and output

* size of each one of them. These are mighty shaky assumptions but it's

* hard to see how to do better.

*/

total_cost += 10 * rterm->total_cost;

total_rows += 10 * rterm->plan_rows;

/*

* Also charge cpu_tuple_cost per row to account for the costs of

* manipulating the tuplestores. (We don't worry about possible

* spill-to-disk costs.)

*/

total_cost += cpu_tuple_cost * total_rows;

runion->startup_cost = startup_cost;

runion->total_cost = total_cost;

runion->plan_rows = total_rows;

runion->plan_width = Max(nrterm->plan_width, rterm->plan_width);

}

| void cost_seqscan | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| RelOptInfo * | baserel, | |||

| ParamPathInfo * | param_info | |||

| ) |

Definition at line 178 of file costsize.c.

References Assert, cpu_tuple_cost, disable_cost, enable_seqscan, get_restriction_qual_cost(), get_tablespace_page_costs(), NULL, RelOptInfo::pages, QualCost::per_tuple, ParamPathInfo::ppi_rows, RelOptInfo::relid, RelOptInfo::reltablespace, RelOptInfo::rows, Path::rows, RTE_RELATION, RelOptInfo::rtekind, QualCost::startup, Path::startup_cost, Path::total_cost, and RelOptInfo::tuples.

Referenced by create_seqscan_path().

{

Cost startup_cost = 0;

Cost run_cost = 0;

double spc_seq_page_cost;

QualCost qpqual_cost;

Cost cpu_per_tuple;

/* Should only be applied to base relations */

Assert(baserel->relid > 0);

Assert(baserel->rtekind == RTE_RELATION);

/* Mark the path with the correct row estimate */

if (param_info)

path->rows = param_info->ppi_rows;

else

path->rows = baserel->rows;

if (!enable_seqscan)

startup_cost += disable_cost;

/* fetch estimated page cost for tablespace containing table */

get_tablespace_page_costs(baserel->reltablespace,

NULL,

&spc_seq_page_cost);

/*

* disk costs

*/

run_cost += spc_seq_page_cost * baserel->pages;

/* CPU costs */

get_restriction_qual_cost(root, baserel, param_info, &qpqual_cost);

startup_cost += qpqual_cost.startup;

cpu_per_tuple = cpu_tuple_cost + qpqual_cost.per_tuple;

run_cost += cpu_per_tuple * baserel->tuples;

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_sort | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| List * | pathkeys, | |||

| Cost | input_cost, | |||

| double | tuples, | |||

| int | width, | |||

| Cost | comparison_cost, | |||

| int | sort_mem, | |||

| double | limit_tuples | |||

| ) |

Definition at line 1237 of file costsize.c.

References cpu_operator_cost, disable_cost, enable_sort, LOG2, random_page_cost, relation_byte_size(), Path::rows, seq_page_cost, Path::startup_cost, Path::total_cost, and tuplesort_merge_order().

Referenced by choose_hashed_distinct(), choose_hashed_grouping(), choose_hashed_setop(), create_merge_append_path(), create_unique_path(), initial_cost_mergejoin(), make_sort(), plan_cluster_use_sort(), and query_planner().

{

Cost startup_cost = input_cost;

Cost run_cost = 0;

double input_bytes = relation_byte_size(tuples, width);

double output_bytes;

double output_tuples;

long sort_mem_bytes = sort_mem * 1024L;

if (!enable_sort)

startup_cost += disable_cost;

path->rows = tuples;

/*

* We want to be sure the cost of a sort is never estimated as zero, even

* if passed-in tuple count is zero. Besides, mustn't do log(0)...

*/

if (tuples < 2.0)

tuples = 2.0;

/* Include the default cost-per-comparison */

comparison_cost += 2.0 * cpu_operator_cost;

/* Do we have a useful LIMIT? */

if (limit_tuples > 0 && limit_tuples < tuples)

{

output_tuples = limit_tuples;

output_bytes = relation_byte_size(output_tuples, width);

}

else

{

output_tuples = tuples;

output_bytes = input_bytes;

}

if (output_bytes > sort_mem_bytes)

{

/*

* We'll have to use a disk-based sort of all the tuples

*/

double npages = ceil(input_bytes / BLCKSZ);

double nruns = (input_bytes / sort_mem_bytes) * 0.5;

double mergeorder = tuplesort_merge_order(sort_mem_bytes);

double log_runs;

double npageaccesses;

/*

* CPU costs

*

* Assume about N log2 N comparisons

*/

startup_cost += comparison_cost * tuples * LOG2(tuples);

/* Disk costs */

/* Compute logM(r) as log(r) / log(M) */

if (nruns > mergeorder)

log_runs = ceil(log(nruns) / log(mergeorder));

else

log_runs = 1.0;

npageaccesses = 2.0 * npages * log_runs;

/* Assume 3/4ths of accesses are sequential, 1/4th are not */

startup_cost += npageaccesses *

(seq_page_cost * 0.75 + random_page_cost * 0.25);

}

else if (tuples > 2 * output_tuples || input_bytes > sort_mem_bytes)

{

/*

* We'll use a bounded heap-sort keeping just K tuples in memory, for

* a total number of tuple comparisons of N log2 K; but the constant

* factor is a bit higher than for quicksort. Tweak it so that the

* cost curve is continuous at the crossover point.

*/

startup_cost += comparison_cost * tuples * LOG2(2.0 * output_tuples);

}

else

{

/* We'll use plain quicksort on all the input tuples */

startup_cost += comparison_cost * tuples * LOG2(tuples);

}

/*

* Also charge a small amount (arbitrarily set equal to operator cost) per

* extracted tuple. We don't charge cpu_tuple_cost because a Sort node

* doesn't do qual-checking or projection, so it has less overhead than

* most plan nodes. Note it's correct to use tuples not output_tuples

* here --- the upper LIMIT will pro-rate the run cost so we'd be double

* counting the LIMIT otherwise.

*/

run_cost += cpu_operator_cost * tuples;

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_subplan | ( | PlannerInfo * | root, | |

| SubPlan * | subplan, | |||

| Plan * | plan | |||

| ) |

Definition at line 2708 of file costsize.c.

References ALL_SUBLINK, ANY_SUBLINK, cost_qual_eval(), cpu_operator_cost, ExecMaterializesOutput(), EXISTS_SUBLINK, make_ands_implicit(), NIL, nodeTag, SubPlan::parParam, SubPlan::per_call_cost, QualCost::per_tuple, Plan::plan_rows, QualCost::startup, SubPlan::startup_cost, Plan::startup_cost, SubPlan::subLinkType, SubPlan::testexpr, Plan::total_cost, and SubPlan::useHashTable.

Referenced by build_subplan(), SS_make_initplan_from_plan(), and SS_process_ctes().

{

QualCost sp_cost;

/* Figure any cost for evaluating the testexpr */

cost_qual_eval(&sp_cost,

make_ands_implicit((Expr *) subplan->testexpr),

root);

if (subplan->useHashTable)

{

/*

* If we are using a hash table for the subquery outputs, then the

* cost of evaluating the query is a one-time cost. We charge one

* cpu_operator_cost per tuple for the work of loading the hashtable,

* too.

*/

sp_cost.startup += plan->total_cost +

cpu_operator_cost * plan->plan_rows;

/*

* The per-tuple costs include the cost of evaluating the lefthand

* expressions, plus the cost of probing the hashtable. We already

* accounted for the lefthand expressions as part of the testexpr, and

* will also have counted one cpu_operator_cost for each comparison

* operator. That is probably too low for the probing cost, but it's

* hard to make a better estimate, so live with it for now.

*/

}

else

{

/*

* Otherwise we will be rescanning the subplan output on each

* evaluation. We need to estimate how much of the output we will

* actually need to scan. NOTE: this logic should agree with the

* tuple_fraction estimates used by make_subplan() in

* plan/subselect.c.

*/

Cost plan_run_cost = plan->total_cost - plan->startup_cost;

if (subplan->subLinkType == EXISTS_SUBLINK)

{

/* we only need to fetch 1 tuple */

sp_cost.per_tuple += plan_run_cost / plan->plan_rows;

}

else if (subplan->subLinkType == ALL_SUBLINK ||

subplan->subLinkType == ANY_SUBLINK)

{

/* assume we need 50% of the tuples */

sp_cost.per_tuple += 0.50 * plan_run_cost;

/* also charge a cpu_operator_cost per row examined */

sp_cost.per_tuple += 0.50 * plan->plan_rows * cpu_operator_cost;

}

else

{

/* assume we need all tuples */

sp_cost.per_tuple += plan_run_cost;

}

/*

* Also account for subplan's startup cost. If the subplan is

* uncorrelated or undirect correlated, AND its topmost node is one

* that materializes its output, assume that we'll only need to pay

* its startup cost once; otherwise assume we pay the startup cost

* every time.

*/

if (subplan->parParam == NIL &&

ExecMaterializesOutput(nodeTag(plan)))

sp_cost.startup += plan->startup_cost;

else

sp_cost.per_tuple += plan->startup_cost;

}

subplan->startup_cost = sp_cost.startup;

subplan->per_call_cost = sp_cost.per_tuple;

}

| void cost_subqueryscan | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| RelOptInfo * | baserel, | |||

| ParamPathInfo * | param_info | |||

| ) |

Definition at line 967 of file costsize.c.

References Assert, cpu_tuple_cost, get_restriction_qual_cost(), QualCost::per_tuple, ParamPathInfo::ppi_rows, RelOptInfo::relid, RelOptInfo::rows, Path::rows, RTE_SUBQUERY, RelOptInfo::rtekind, QualCost::startup, Plan::startup_cost, Path::startup_cost, RelOptInfo::subplan, Plan::total_cost, Path::total_cost, and RelOptInfo::tuples.

Referenced by create_subqueryscan_path().

{

Cost startup_cost;

Cost run_cost;

QualCost qpqual_cost;

Cost cpu_per_tuple;

/* Should only be applied to base relations that are subqueries */

Assert(baserel->relid > 0);

Assert(baserel->rtekind == RTE_SUBQUERY);

/* Mark the path with the correct row estimate */

if (param_info)

path->rows = param_info->ppi_rows;

else

path->rows = baserel->rows;

/*

* Cost of path is cost of evaluating the subplan, plus cost of evaluating

* any restriction clauses that will be attached to the SubqueryScan node,

* plus cpu_tuple_cost to account for selection and projection overhead.

*/

path->startup_cost = baserel->subplan->startup_cost;

path->total_cost = baserel->subplan->total_cost;

get_restriction_qual_cost(root, baserel, param_info, &qpqual_cost);

startup_cost = qpqual_cost.startup;

cpu_per_tuple = cpu_tuple_cost + qpqual_cost.per_tuple;

run_cost = cpu_per_tuple * baserel->tuples;

path->startup_cost += startup_cost;

path->total_cost += startup_cost + run_cost;

}

| void cost_tidscan | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| RelOptInfo * | baserel, | |||

| List * | tidquals, | |||

| ParamPathInfo * | param_info | |||

| ) |

Definition at line 868 of file costsize.c.

References ScalarArrayOpExpr::args, Assert, RelOptInfo::baserestrictcost, cost_qual_eval(), cpu_tuple_cost, disable_cost, enable_tidscan, estimate_array_length(), get_restriction_qual_cost(), get_tablespace_page_costs(), IsA, lfirst, lsecond, NULL, QualCost::per_tuple, ParamPathInfo::ppi_rows, RelOptInfo::relid, RelOptInfo::reltablespace, RelOptInfo::rows, Path::rows, RTE_RELATION, RelOptInfo::rtekind, QualCost::startup, Path::startup_cost, and Path::total_cost.

Referenced by create_tidscan_path().

{

Cost startup_cost = 0;

Cost run_cost = 0;

bool isCurrentOf = false;

QualCost qpqual_cost;

Cost cpu_per_tuple;

QualCost tid_qual_cost;

int ntuples;

ListCell *l;

double spc_random_page_cost;

/* Should only be applied to base relations */

Assert(baserel->relid > 0);

Assert(baserel->rtekind == RTE_RELATION);

/* Mark the path with the correct row estimate */

if (param_info)

path->rows = param_info->ppi_rows;

else

path->rows = baserel->rows;

/* Count how many tuples we expect to retrieve */

ntuples = 0;

foreach(l, tidquals)

{

if (IsA(lfirst(l), ScalarArrayOpExpr))

{

/* Each element of the array yields 1 tuple */

ScalarArrayOpExpr *saop = (ScalarArrayOpExpr *) lfirst(l);

Node *arraynode = (Node *) lsecond(saop->args);

ntuples += estimate_array_length(arraynode);

}

else if (IsA(lfirst(l), CurrentOfExpr))

{

/* CURRENT OF yields 1 tuple */

isCurrentOf = true;

ntuples++;

}

else

{

/* It's just CTID = something, count 1 tuple */

ntuples++;

}

}

/*

* We must force TID scan for WHERE CURRENT OF, because only nodeTidscan.c

* understands how to do it correctly. Therefore, honor enable_tidscan

* only when CURRENT OF isn't present. Also note that cost_qual_eval

* counts a CurrentOfExpr as having startup cost disable_cost, which we

* subtract off here; that's to prevent other plan types such as seqscan

* from winning.

*/

if (isCurrentOf)

{

Assert(baserel->baserestrictcost.startup >= disable_cost);

startup_cost -= disable_cost;

}

else if (!enable_tidscan)

startup_cost += disable_cost;

/*

* The TID qual expressions will be computed once, any other baserestrict

* quals once per retrived tuple.

*/

cost_qual_eval(&tid_qual_cost, tidquals, root);

/* fetch estimated page cost for tablespace containing table */

get_tablespace_page_costs(baserel->reltablespace,

&spc_random_page_cost,

NULL);

/* disk costs --- assume each tuple on a different page */

run_cost += spc_random_page_cost * ntuples;

/* Add scanning CPU costs */

get_restriction_qual_cost(root, baserel, param_info, &qpqual_cost);

/* XXX currently we assume TID quals are a subset of qpquals */

startup_cost += qpqual_cost.startup + tid_qual_cost.per_tuple;

cpu_per_tuple = cpu_tuple_cost + qpqual_cost.per_tuple -

tid_qual_cost.per_tuple;

run_cost += cpu_per_tuple * ntuples;

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_valuesscan | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| RelOptInfo * | baserel, | |||

| ParamPathInfo * | param_info | |||

| ) |

Definition at line 1068 of file costsize.c.

References Assert, cpu_operator_cost, cpu_tuple_cost, get_restriction_qual_cost(), QualCost::per_tuple, ParamPathInfo::ppi_rows, RelOptInfo::relid, RelOptInfo::rows, Path::rows, RTE_VALUES, RelOptInfo::rtekind, QualCost::startup, Path::startup_cost, Path::total_cost, and RelOptInfo::tuples.

Referenced by create_valuesscan_path().

{

Cost startup_cost = 0;

Cost run_cost = 0;

QualCost qpqual_cost;

Cost cpu_per_tuple;

/* Should only be applied to base relations that are values lists */

Assert(baserel->relid > 0);

Assert(baserel->rtekind == RTE_VALUES);

/* Mark the path with the correct row estimate */

if (param_info)

path->rows = param_info->ppi_rows;

else

path->rows = baserel->rows;

/*

* For now, estimate list evaluation cost at one operator eval per list

* (probably pretty bogus, but is it worth being smarter?)

*/

cpu_per_tuple = cpu_operator_cost;

/* Add scanning CPU costs */

get_restriction_qual_cost(root, baserel, param_info, &qpqual_cost);

startup_cost += qpqual_cost.startup;

cpu_per_tuple += cpu_tuple_cost + qpqual_cost.per_tuple;

run_cost += cpu_per_tuple * baserel->tuples;

path->startup_cost = startup_cost;

path->total_cost = startup_cost + run_cost;

}

| void cost_windowagg | ( | Path * | path, | |

| PlannerInfo * | root, | |||

| List * | windowFuncs, | |||

| int | numPartCols, | |||

| int | numOrderCols, | |||

| Cost | input_startup_cost, | |||

| Cost | input_total_cost, | |||

| double | input_tuples | |||

| ) |

Definition at line 1557 of file costsize.c.

References WindowFunc::args, Assert, cost_qual_eval_node(), cpu_operator_cost, cpu_tuple_cost, get_func_cost(), IsA, lfirst, QualCost::per_tuple, Path::rows, QualCost::startup, Path::startup_cost, Path::total_cost, and WindowFunc::winfnoid.

Referenced by make_windowagg().

{

Cost startup_cost;

Cost total_cost;

ListCell *lc;

startup_cost = input_startup_cost;

total_cost = input_total_cost;

/*

* Window functions are assumed to cost their stated execution cost, plus

* the cost of evaluating their input expressions, per tuple. Since they

* may in fact evaluate their inputs at multiple rows during each cycle,

* this could be a drastic underestimate; but without a way to know how

* many rows the window function will fetch, it's hard to do better. In

* any case, it's a good estimate for all the built-in window functions,

* so we'll just do this for now.

*/

foreach(lc, windowFuncs)

{

WindowFunc *wfunc = (WindowFunc *) lfirst(lc);

Cost wfunccost;

QualCost argcosts;

Assert(IsA(wfunc, WindowFunc));

wfunccost = get_func_cost(wfunc->winfnoid) * cpu_operator_cost;

/* also add the input expressions' cost to per-input-row costs */

cost_qual_eval_node(&argcosts, (Node *) wfunc->args, root);

startup_cost += argcosts.startup;

wfunccost += argcosts.per_tuple;

total_cost += wfunccost * input_tuples;

}

/*

* We also charge cpu_operator_cost per grouping column per tuple for

* grouping comparisons, plus cpu_tuple_cost per tuple for general

* overhead.

*

* XXX this neglects costs of spooling the data to disk when it overflows

* work_mem. Sooner or later that should get accounted for.

*/

total_cost += cpu_operator_cost * (numPartCols + numOrderCols) * input_tuples;

total_cost += cpu_tuple_cost * input_tuples;

path->rows = input_tuples;

path->startup_cost = startup_cost;

path->total_cost = total_cost;

}

| void final_cost_hashjoin | ( | PlannerInfo * | root, | |

| HashPath * | path, | |||

| JoinCostWorkspace * | workspace, | |||

| SpecialJoinInfo * | sjinfo, | |||

| SemiAntiJoinFactors * | semifactors | |||

| ) |

Definition at line 2495 of file costsize.c.

References approx_tuple_count(), Assert, bms_is_subset(), clamp_row_est(), RestrictInfo::clause, cost_qual_eval(), cpu_tuple_cost, disable_cost, enable_hashjoin, estimate_hash_bucketsize(), get_leftop(), get_rightop(), JoinPath::innerjoinpath, IsA, JOIN_ANTI, JOIN_SEMI, JoinPath::joinrestrictinfo, JoinPath::jointype, HashPath::jpath, RestrictInfo::left_bucketsize, RestrictInfo::left_relids, lfirst, SemiAntiJoinFactors::match_count, HashPath::num_batches, JoinCostWorkspace::numbatches, JoinCostWorkspace::numbuckets, SemiAntiJoinFactors::outer_match_frac, JoinPath::outerjoinpath, Path::param_info, Path::parent, JoinPath::path, HashPath::path_hashclauses, QualCost::per_tuple, ParamPathInfo::ppi_rows, RelOptInfo::relids, RestrictInfo::right_bucketsize, RestrictInfo::right_relids, rint(), RelOptInfo::rows, Path::rows, JoinCostWorkspace::run_cost, QualCost::startup, Path::startup_cost, JoinCostWorkspace::startup_cost, and Path::total_cost.

Referenced by create_hashjoin_path().

{

Path *outer_path = path->jpath.outerjoinpath;

Path *inner_path = path->jpath.innerjoinpath;

double outer_path_rows = outer_path->rows;

double inner_path_rows = inner_path->rows;

List *hashclauses = path->path_hashclauses;

Cost startup_cost = workspace->startup_cost;

Cost run_cost = workspace->run_cost;

int numbuckets = workspace->numbuckets;

int numbatches = workspace->numbatches;

Cost cpu_per_tuple;

QualCost hash_qual_cost;

QualCost qp_qual_cost;

double hashjointuples;

double virtualbuckets;

Selectivity innerbucketsize;

ListCell *hcl;

/* Mark the path with the correct row estimate */

if (path->jpath.path.param_info)

path->jpath.path.rows = path->jpath.path.param_info->ppi_rows;

else

path->jpath.path.rows = path->jpath.path.parent->rows;

/*

* We could include disable_cost in the preliminary estimate, but that

* would amount to optimizing for the case where the join method is

* disabled, which doesn't seem like the way to bet.

*/

if (!enable_hashjoin)

startup_cost += disable_cost;

/* mark the path with estimated # of batches */

path->num_batches = numbatches;

/* and compute the number of "virtual" buckets in the whole join */

virtualbuckets = (double) numbuckets *(double) numbatches;

/*

* Determine bucketsize fraction for inner relation. We use the smallest

* bucketsize estimated for any individual hashclause; this is undoubtedly

* conservative.

*

* BUT: if inner relation has been unique-ified, we can assume it's good

* for hashing. This is important both because it's the right answer, and

* because we avoid contaminating the cache with a value that's wrong for

* non-unique-ified paths.

*/

if (IsA(inner_path, UniquePath))

innerbucketsize = 1.0 / virtualbuckets;

else

{

innerbucketsize = 1.0;

foreach(hcl, hashclauses)

{

RestrictInfo *restrictinfo = (RestrictInfo *) lfirst(hcl);

Selectivity thisbucketsize;

Assert(IsA(restrictinfo, RestrictInfo));

/*

* First we have to figure out which side of the hashjoin clause

* is the inner side.

*

* Since we tend to visit the same clauses over and over when

* planning a large query, we cache the bucketsize estimate in the

* RestrictInfo node to avoid repeated lookups of statistics.

*/

if (bms_is_subset(restrictinfo->right_relids,

inner_path->parent->relids))

{

/* righthand side is inner */

thisbucketsize = restrictinfo->right_bucketsize;

if (thisbucketsize < 0)

{

/* not cached yet */

thisbucketsize =

estimate_hash_bucketsize(root,

get_rightop(restrictinfo->clause),

virtualbuckets);

restrictinfo->right_bucketsize = thisbucketsize;

}

}

else

{

Assert(bms_is_subset(restrictinfo->left_relids,

inner_path->parent->relids));

/* lefthand side is inner */

thisbucketsize = restrictinfo->left_bucketsize;

if (thisbucketsize < 0)

{

/* not cached yet */

thisbucketsize =

estimate_hash_bucketsize(root,

get_leftop(restrictinfo->clause),

virtualbuckets);

restrictinfo->left_bucketsize = thisbucketsize;

}

}

if (innerbucketsize > thisbucketsize)

innerbucketsize = thisbucketsize;

}

}

/*

* Compute cost of the hashquals and qpquals (other restriction clauses)

* separately.

*/

cost_qual_eval(&hash_qual_cost, hashclauses, root);

cost_qual_eval(&qp_qual_cost, path->jpath.joinrestrictinfo, root);

qp_qual_cost.startup -= hash_qual_cost.startup;

qp_qual_cost.per_tuple -= hash_qual_cost.per_tuple;

/* CPU costs */

if (path->jpath.jointype == JOIN_SEMI || path->jpath.jointype == JOIN_ANTI)

{

double outer_matched_rows;

Selectivity inner_scan_frac;

/*

* SEMI or ANTI join: executor will stop after first match.

*

* For an outer-rel row that has at least one match, we can expect the

* bucket scan to stop after a fraction 1/(match_count+1) of the

* bucket's rows, if the matches are evenly distributed. Since they

* probably aren't quite evenly distributed, we apply a fuzz factor of

* 2.0 to that fraction. (If we used a larger fuzz factor, we'd have

* to clamp inner_scan_frac to at most 1.0; but since match_count is

* at least 1, no such clamp is needed now.)

*/

outer_matched_rows = rint(outer_path_rows * semifactors->outer_match_frac);

inner_scan_frac = 2.0 / (semifactors->match_count + 1.0);

startup_cost += hash_qual_cost.startup;