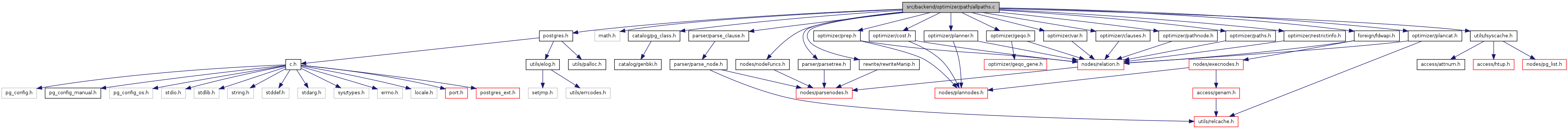

#include "postgres.h"

#include <math.h>

#include "catalog/pg_class.h"

#include "foreign/fdwapi.h"

#include "nodes/nodeFuncs.h"

#include "optimizer/clauses.h"

#include "optimizer/cost.h"

#include "optimizer/geqo.h"

#include "optimizer/pathnode.h"

#include "optimizer/paths.h"

#include "optimizer/plancat.h"

#include "optimizer/planner.h"

#include "optimizer/prep.h"

#include "optimizer/restrictinfo.h"

#include "optimizer/var.h"

#include "parser/parse_clause.h"

#include "parser/parsetree.h"

#include "rewrite/rewriteManip.h"

#include "utils/lsyscache.h"


static List *accumulate_append_subpath(List *subpaths, Path *path) [static]

Definition at line 974 of file allpaths.c.

References IsA, lappend(), list_concat(), list_copy(), and AppendPath::subpaths.

Referenced by generate_mergeappend_paths(), and set_append_rel_pathlist().

{

if (IsA(path, AppendPath))

{

AppendPath *apath = (AppendPath *) path;

/* list_copy is important here to avoid sharing list substructure */

return list_concat(subpaths, list_copy(apath->subpaths));

}

else

return lappend(subpaths, path);

}
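The flattening rule above can be sketched outside the planner. This is a minimal, hypothetical illustration rather than PostgreSQL code: ToyPath and toy_accumulate are invented names, and a caller-owned pointer array stands in for the planner's List. As in the original, an Append contributes its children instead of itself, the child pointers are copied rather than sharing the Append's storage, and only one level is flattened.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical toy path: either a leaf scan or an Append of child paths. */
typedef struct ToyPath
{
    int             is_append;  /* nonzero for an Append-like node */
    int             id;         /* identifies leaf paths in this sketch */
    struct ToyPath **children;  /* child paths when is_append is set */
    size_t          nchildren;
} ToyPath;

/*
 * Add "path" to out[0..*n), splicing in its children when it is an Append.
 * Only one level is flattened, matching the original.
 */
static void
toy_accumulate(ToyPath **out, size_t *n, size_t cap, ToyPath *path)
{
    if (path->is_append)
    {
        for (size_t i = 0; i < path->nchildren; i++)
        {
            assert(*n < cap);
            out[(*n)++] = path->children[i];    /* copy pointers, not storage */
        }
    }
    else
    {
        assert(*n < cap);
        out[(*n)++] = path;
    }
}
```

Accumulating a leaf and then an Append of two leaves yields three entries, none of which is the Append itself.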

static void compare_tlist_datatypes(List *tlist, List *colTypes, bool *differentTypes) [static]

Definition at line 1651 of file allpaths.c.

References elog, ERROR, TargetEntry::expr, exprType(), lfirst, lfirst_oid, list_head(), lnext, NULL, TargetEntry::resjunk, and TargetEntry::resno.

Referenced by subquery_is_pushdown_safe().

{

ListCell *l;

ListCell *colType = list_head(colTypes);

foreach(l, tlist)

{

TargetEntry *tle = (TargetEntry *) lfirst(l);

if (tle->resjunk)

continue; /* ignore resjunk columns */

if (colType == NULL)

elog(ERROR, "wrong number of tlist entries");

if (exprType((Node *) tle->expr) != lfirst_oid(colType))

differentTypes[tle->resno] = true;

colType = lnext(colType);

}

if (colType != NULL)

elog(ERROR, "wrong number of tlist entries");

}
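The column-by-column comparison can be sketched with plain arrays. This is a hypothetical reduction, not the planner's code: ToyTle and toy_compare_tlist_datatypes are invented, type OIDs are bare integers, and a false return stands in for both elog(ERROR) calls. Junk entries are skipped, and differentTypes is indexed by resno, so the caller must size it for the highest resno plus one.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef unsigned int Oid;       /* stand-in for PostgreSQL's Oid */

/* Hypothetical flattened target-list entry. */
typedef struct
{
    int         resno;          /* 1-based output column number */
    Oid         type;           /* result type of the entry's expression */
    bool        resjunk;        /* junk columns are not visible outputs */
} ToyTle;

/* Returns false where the original raises "wrong number of tlist entries". */
static bool
toy_compare_tlist_datatypes(const ToyTle *tlist, size_t ntle,
                            const Oid *colTypes, size_t ncols,
                            bool *differentTypes)
{
    size_t      col = 0;

    assert(differentTypes != NULL);
    for (size_t i = 0; i < ntle; i++)
    {
        if (tlist[i].resjunk)
            continue;           /* ignore resjunk columns */
        if (col >= ncols)
            return false;       /* more visible entries than column types */
        if (tlist[i].type != colTypes[col])
            differentTypes[tlist[i].resno] = true;
        col++;
    }
    return col == ncols;        /* false if some column types were unused */
}
```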

static void generate_mergeappend_paths(PlannerInfo *root, RelOptInfo *rel, List *live_childrels, List *all_child_pathkeys) [static]

Definition at line 890 of file allpaths.c.

References accumulate_append_subpath(), add_path(), Assert, RelOptInfo::cheapest_total_path, create_merge_append_path(), get_cheapest_path_for_pathkeys(), lfirst, NULL, Path::param_info, RelOptInfo::pathlist, STARTUP_COST, and TOTAL_COST.

Referenced by set_append_rel_pathlist().

{

ListCell *lcp;

foreach(lcp, all_child_pathkeys)

{

List *pathkeys = (List *) lfirst(lcp);

List *startup_subpaths = NIL;

List *total_subpaths = NIL;

bool startup_neq_total = false;

ListCell *lcr;

/* Select the child paths for this ordering... */

foreach(lcr, live_childrels)

{

RelOptInfo *childrel = (RelOptInfo *) lfirst(lcr);

Path *cheapest_startup,

*cheapest_total;

/* Locate the right paths, if they are available. */

cheapest_startup =

get_cheapest_path_for_pathkeys(childrel->pathlist,

pathkeys,

NULL,

STARTUP_COST);

cheapest_total =

get_cheapest_path_for_pathkeys(childrel->pathlist,

pathkeys,

NULL,

TOTAL_COST);

/*

* If we can't find any paths with the right order just use the

* cheapest-total path; we'll have to sort it later.

*/

if (cheapest_startup == NULL || cheapest_total == NULL)

{

cheapest_startup = cheapest_total =

childrel->cheapest_total_path;

/* Assert we do have an unparameterized path for this child */

Assert(cheapest_total->param_info == NULL);

}

/*

* Notice whether we actually have different paths for the

* "cheapest" and "total" cases; frequently there will be no point

* in two create_merge_append_path() calls.

*/

if (cheapest_startup != cheapest_total)

startup_neq_total = true;

startup_subpaths =

accumulate_append_subpath(startup_subpaths, cheapest_startup);

total_subpaths =

accumulate_append_subpath(total_subpaths, cheapest_total);

}

/* ... and build the MergeAppend paths */

add_path(rel, (Path *) create_merge_append_path(root,

rel,

startup_subpaths,

pathkeys,

NULL));

if (startup_neq_total)

add_path(rel, (Path *) create_merge_append_path(root,

rel,

total_subpaths,

pathkeys,

NULL));

}

}
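For each candidate ordering, the loop above picks two paths per child and falls back to the child's cheapest-total path when no suitably sorted path exists. A hypothetical sketch of that per-child choice follows; ToyPath and the helper names are invented, orderings are small integer ids compared for equality, and parameterization is ignored. The real get_cheapest_path_for_pathkeys() instead accepts any path whose pathkeys contain the requested ordering and whose required outer rels are a subset of the given set.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical path summary: an ordering id (0 = unsorted) and two costs. */
typedef struct
{
    int         ordering;
    double      startup_cost;
    double      total_cost;
} ToyPath;

/* Cheapest path with exactly the wanted ordering, by startup or total cost. */
static const ToyPath *
toy_cheapest_for_order(const ToyPath *paths, size_t n, int want, bool by_startup)
{
    const ToyPath *best = NULL;

    for (size_t i = 0; i < n; i++)
    {
        double      c;

        if (paths[i].ordering != want)
            continue;
        c = by_startup ? paths[i].startup_cost : paths[i].total_cost;
        if (best == NULL ||
            c < (by_startup ? best->startup_cost : best->total_cost))
            best = &paths[i];
    }
    return best;                /* NULL if no path has that ordering */
}

/*
 * Choose one child's startup and total subpaths for an ordering, falling back
 * to its cheapest-total path (to be sorted later) if either lookup fails.
 * Returns true when the two choices differ.
 */
static bool
toy_pick_child_paths(const ToyPath *paths, size_t n, int want,
                     const ToyPath *cheapest_total_path,
                     const ToyPath **startup, const ToyPath **total)
{
    assert(cheapest_total_path != NULL);
    *startup = toy_cheapest_for_order(paths, n, want, true);
    *total = toy_cheapest_for_order(paths, n, want, false);
    if (*startup == NULL || *total == NULL)
        *startup = *total = cheapest_total_path;
    return *startup != *total;
}
```

If any child returns true, a second MergeAppend built from the total-cost choices is worth considering, which is what startup_neq_total records in the original.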

static bool has_multiple_baserels(PlannerInfo *root) [static]

Definition at line 1012 of file allpaths.c.

References NULL, RELOPT_BASEREL, RelOptInfo::reloptkind, PlannerInfo::simple_rel_array, and PlannerInfo::simple_rel_array_size.

Referenced by set_subquery_pathlist().

{

int num_base_rels = 0;

Index rti;

for (rti = 1; rti < root->simple_rel_array_size; rti++)

{

RelOptInfo *brel = root->simple_rel_array[rti];

if (brel == NULL)

continue;

/* ignore RTEs that are "other rels" */

if (brel->reloptkind == RELOPT_BASEREL)

if (++num_base_rels > 1)

return true;

}

return false;

}

RelOptInfo *make_one_rel(PlannerInfo *root, List *joinlist)

Definition at line 104 of file allpaths.c.

References PlannerInfo::all_baserels, Assert, bms_add_member(), bms_equal(), make_rel_from_joinlist(), NULL, RelOptInfo::relid, RelOptInfo::relids, RELOPT_BASEREL, RelOptInfo::reloptkind, set_base_rel_pathlists(), set_base_rel_sizes(), PlannerInfo::simple_rel_array, and PlannerInfo::simple_rel_array_size.

Referenced by query_planner().

{

RelOptInfo *rel;

Index rti;

/*

* Construct the all_baserels Relids set.

*/

root->all_baserels = NULL;

for (rti = 1; rti < root->simple_rel_array_size; rti++)

{

RelOptInfo *brel = root->simple_rel_array[rti];

/* there may be empty slots corresponding to non-baserel RTEs */

if (brel == NULL)

continue;

Assert(brel->relid == rti); /* sanity check on array */

/* ignore RTEs that are "other rels" */

if (brel->reloptkind != RELOPT_BASEREL)

continue;

root->all_baserels = bms_add_member(root->all_baserels, brel->relid);

}

/*

* Generate access paths for the base rels.

*/

set_base_rel_sizes(root);

set_base_rel_pathlists(root);

/*

* Generate access paths for the entire join tree.

*/

rel = make_rel_from_joinlist(root, joinlist);

/*

* The result should join all and only the query's base rels.

*/

Assert(bms_equal(rel->relids, root->all_baserels));

return rel;

}
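make_one_rel() and has_multiple_baserels() make the same walk over simple_rel_array: slot 0 is unused, empty slots are skipped, and only RELOPT_BASEREL entries count. The sketch below models Relids as a 64-bit mask over range-table indexes, an assumption that holds only for fewer than 64 entries (the real Relids is a variable-length Bitmapset); the enum and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for the reloptkind of each simple_rel_array slot. */
typedef enum
{
    TOY_EMPTY_SLOT,             /* NULL entry (non-baserel RTE) */
    TOY_BASEREL,                /* RELOPT_BASEREL */
    TOY_OTHER_MEMBER_REL        /* e.g. an appendrel child */
} ToyRelKind;

/* all_baserels as a bitmask of range-table indexes; slot 0 is unused. */
static uint64_t
toy_all_baserels(const ToyRelKind *kinds, size_t n)
{
    uint64_t    relids = 0;

    assert(n <= 64);
    for (size_t rti = 1; rti < n; rti++)
        if (kinds[rti] == TOY_BASEREL)
            relids |= UINT64_C(1) << rti;
    return relids;
}

/* Same walk as has_multiple_baserels(): stop at the second base rel. */
static bool
toy_has_multiple_baserels(const ToyRelKind *kinds, size_t n)
{
    int         num_base_rels = 0;

    for (size_t rti = 1; rti < n; rti++)
        if (kinds[rti] == TOY_BASEREL && ++num_base_rels > 1)
            return true;
    return false;
}
```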

static RelOptInfo *make_rel_from_joinlist(PlannerInfo *root, List *joinlist) [static]

Definition at line 1364 of file allpaths.c.

References elog, enable_geqo, ERROR, find_base_rel(), geqo(), geqo_threshold, PlannerInfo::initial_rels, IsA, join_search_hook, lappend(), lfirst, linitial, list_length(), nodeTag, and standard_join_search().

Referenced by make_one_rel().

{

int levels_needed;

List *initial_rels;

ListCell *jl;

/*

* Count the number of child joinlist nodes. This is the depth of the

* dynamic-programming algorithm we must employ to consider all ways of

* joining the child nodes.

*/

levels_needed = list_length(joinlist);

if (levels_needed <= 0)

return NULL; /* nothing to do? */

/*

* Construct a list of rels corresponding to the child joinlist nodes.

* This may contain both base rels and rels constructed according to

* sub-joinlists.

*/

initial_rels = NIL;

foreach(jl, joinlist)

{

Node *jlnode = (Node *) lfirst(jl);

RelOptInfo *thisrel;

if (IsA(jlnode, RangeTblRef))

{

int varno = ((RangeTblRef *) jlnode)->rtindex;

thisrel = find_base_rel(root, varno);

}

else if (IsA(jlnode, List))

{

/* Recurse to handle subproblem */

thisrel = make_rel_from_joinlist(root, (List *) jlnode);

}

else

{

elog(ERROR, "unrecognized joinlist node type: %d",

(int) nodeTag(jlnode));

thisrel = NULL; /* keep compiler quiet */

}

initial_rels = lappend(initial_rels, thisrel);

}

if (levels_needed == 1)

{

/*

* Single joinlist node, so we're done.

*/

return (RelOptInfo *) linitial(initial_rels);

}

else

{

/*

* Consider the different orders in which we could join the rels,

* using a plugin, GEQO, or the regular join search code.

*

* We put the initial_rels list into a PlannerInfo field because

* has_legal_joinclause() needs to look at it (ugly :-().

*/

root->initial_rels = initial_rels;

if (join_search_hook)

return (*join_search_hook) (root, levels_needed, initial_rels);

else if (enable_geqo && levels_needed >= geqo_threshold)

return geqo(root, levels_needed, initial_rels);

else

return standard_join_search(root, levels_needed, initial_rels);

}

}
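Once the initial rels are built, make_rel_from_joinlist() chooses a search strategy from the number of items at this joinlist level. The order of the tests matters: a registered join_search_hook takes priority over GEQO, and GEQO is used only when it is enabled and levels_needed reaches geqo_threshold. The following hypothetical sketch captures only that selection; the enum and function names are invented.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical labels for the branches in make_rel_from_joinlist(). */
typedef enum
{
    TOY_JOIN_NOTHING,           /* empty joinlist: NULL is returned */
    TOY_JOIN_SINGLE,            /* one item: return it directly */
    TOY_JOIN_HOOK,              /* (*join_search_hook)(...) */
    TOY_JOIN_GEQO,              /* geqo(...) */
    TOY_JOIN_STANDARD           /* standard_join_search(...) */
} ToyJoinStrategy;

static ToyJoinStrategy
toy_choose_join_search(int levels_needed, bool have_hook,
                       bool enable_geqo, int geqo_threshold)
{
    assert(geqo_threshold > 0);         /* sanity check on the input */

    if (levels_needed <= 0)
        return TOY_JOIN_NOTHING;
    if (levels_needed == 1)
        return TOY_JOIN_SINGLE;
    if (have_hook)
        return TOY_JOIN_HOOK;           /* a plugin overrides GEQO */
    if (enable_geqo && levels_needed >= geqo_threshold)
        return TOY_JOIN_GEQO;
    return TOY_JOIN_STANDARD;
}
```

Because levels_needed counts only the items at one level, a sub-joinlist is planned by its own recursive call and counts as a single item here.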

static bool qual_is_pushdown_safe(Query *subquery, Index rti, Node *qual, bool *differentTypes) [static]

Definition at line 1710 of file allpaths.c.

References Assert, bms_add_member(), bms_free(), bms_is_member(), contain_subplans(), contain_volatile_functions(), contain_window_function(), Query::distinctClause, TargetEntry::expr, expression_returns_set(), get_tle_by_resno(), Query::hasDistinctOn, InvalidOid, IsA, lfirst, list_free(), NULL, pull_var_clause(), PVC_INCLUDE_PLACEHOLDERS, PVC_REJECT_AGGREGATES, TargetEntry::resjunk, targetIsInSortList(), Query::targetList, Var::varattno, and Var::varno.

Referenced by set_subquery_pathlist().

{

bool safe = true;

List *vars;

ListCell *vl;

Bitmapset *tested = NULL;

/* Refuse subselects (point 1) */

if (contain_subplans(qual))

return false;

/*

* It would be unsafe to push down window function calls, but at least for

* the moment we could never see any in a qual anyhow. (The same applies

* to aggregates, which we check for in pull_var_clause below.)

*/

Assert(!contain_window_function(qual));

/*

* Examine all Vars used in clause; since it's a restriction clause, all

* such Vars must refer to subselect output columns.

*/

vars = pull_var_clause(qual,

PVC_REJECT_AGGREGATES,

PVC_INCLUDE_PLACEHOLDERS);

foreach(vl, vars)

{

Var *var = (Var *) lfirst(vl);

TargetEntry *tle;

/*

* XXX Punt if we find any PlaceHolderVars in the restriction clause.

* It's not clear whether a PHV could safely be pushed down, and even

* less clear whether such a situation could arise in any cases of

* practical interest anyway. So for the moment, just refuse to push

* down.

*/

if (!IsA(var, Var))

{

safe = false;

break;

}

Assert(var->varno == rti);

/* Check point 2 */

if (var->varattno == 0)

{

safe = false;

break;

}

/*

* We use a bitmapset to avoid testing the same attno more than once.

* (NB: this only works because subquery outputs can't have negative

* attnos.)

*/

if (bms_is_member(var->varattno, tested))

continue;

tested = bms_add_member(tested, var->varattno);

/* Check point 3 */

if (differentTypes[var->varattno])

{

safe = false;

break;

}

/* Must find the tlist element referenced by the Var */

tle = get_tle_by_resno(subquery->targetList, var->varattno);

Assert(tle != NULL);

Assert(!tle->resjunk);

/* If subquery uses DISTINCT ON, check point 4 */

if (subquery->hasDistinctOn &&

!targetIsInSortList(tle, InvalidOid, subquery->distinctClause))

{

/* non-DISTINCT column, so fail */

safe = false;

break;

}

/* Refuse functions returning sets (point 5) */

if (expression_returns_set((Node *) tle->expr))

{

safe = false;

break;

}

/* Refuse volatile functions (point 6) */

if (contain_volatile_functions((Node *) tle->expr))

{

safe = false;

break;

}

}

list_free(vars);

bms_free(tested);

return safe;

}
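The per-Var checks in qual_is_pushdown_safe() reduce to a loop over attribute numbers with a "tested" set, so a column referenced several times by one qual is examined only once. In this hypothetical sketch the subquery's per-column facts are precomputed flags in an invented ToyColInfo struct, the tested set is a 64-bit mask (so attnos are assumed to be below 64), and point 1 (sub-selects) and the PlaceHolderVar refusal are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-output-column facts about the subquery. */
typedef struct
{
    bool        different_type; /* point 3: set-op inputs disagree on type */
    bool        in_distinct_on; /* point 4 matters only with DISTINCT ON */
    bool        returns_set;    /* point 5 */
    bool        is_volatile;    /* point 6 */
} ToyColInfo;

/* attnos: attribute numbers of the Vars in the qual, possibly repeated. */
static bool
toy_qual_is_pushdown_safe(const int *attnos, size_t n,
                          const ToyColInfo *cols, bool has_distinct_on)
{
    uint64_t    tested = 0;

    for (size_t i = 0; i < n; i++)
    {
        int         attno = attnos[i];
        const ToyColInfo *c;

        assert(attno >= 0 && attno < 64);  /* no negative subquery attnos */
        if (attno == 0)
            return false;                  /* point 2: whole-row reference */
        if (tested & (UINT64_C(1) << attno))
            continue;                      /* column already checked */
        tested |= UINT64_C(1) << attno;

        c = &cols[attno];
        if (c->different_type ||
            (has_distinct_on && !c->in_distinct_on) ||
            c->returns_set || c->is_volatile)
            return false;
    }
    return true;
}
```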

static void recurse_push_qual(Node *setOp, Query *topquery, RangeTblEntry *rte, Index rti, Node *qual) [static]

Definition at line 1865 of file allpaths.c.

References Assert, elog, ERROR, IsA, SetOperationStmt::larg, nodeTag, NULL, SetOperationStmt::rarg, rt_fetch, Query::rtable, RangeTblRef::rtindex, RangeTblEntry::subquery, and subquery_push_qual().

Referenced by subquery_push_qual().

{

if (IsA(setOp, RangeTblRef))

{

RangeTblRef *rtr = (RangeTblRef *) setOp;

RangeTblEntry *subrte = rt_fetch(rtr->rtindex, topquery->rtable);

Query *subquery = subrte->subquery;

Assert(subquery != NULL);

subquery_push_qual(subquery, rte, rti, qual);

}

else if (IsA(setOp, SetOperationStmt))

{

SetOperationStmt *op = (SetOperationStmt *) setOp;

recurse_push_qual(op->larg, topquery, rte, rti, qual);

recurse_push_qual(op->rarg, topquery, rte, rti, qual);

}

else

{

elog(ERROR, "unrecognized node type: %d",

(int) nodeTag(setOp));

}

}

static bool recurse_pushdown_safe(Node *setOp, Query *topquery, bool *differentTypes) [static]

Definition at line 1608 of file allpaths.c.

References Assert, elog, ERROR, IsA, SetOperationStmt::larg, nodeTag, NULL, SetOperationStmt::op, SetOperationStmt::rarg, rt_fetch, Query::rtable, RangeTblRef::rtindex, SETOP_EXCEPT, RangeTblEntry::subquery, and subquery_is_pushdown_safe().

Referenced by subquery_is_pushdown_safe().

{

if (IsA(setOp, RangeTblRef))

{

RangeTblRef *rtr = (RangeTblRef *) setOp;

RangeTblEntry *rte = rt_fetch(rtr->rtindex, topquery->rtable);

Query *subquery = rte->subquery;

Assert(subquery != NULL);

return subquery_is_pushdown_safe(subquery, topquery, differentTypes);

}

else if (IsA(setOp, SetOperationStmt))

{

SetOperationStmt *op = (SetOperationStmt *) setOp;

/* EXCEPT is no good */

if (op->op == SETOP_EXCEPT)

return false;

/* Else recurse */

if (!recurse_pushdown_safe(op->larg, topquery, differentTypes))

return false;

if (!recurse_pushdown_safe(op->rarg, topquery, differentTypes))

return false;

}

else

{

elog(ERROR, "unrecognized node type: %d",

(int) nodeTag(setOp));

}

return true;

}
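recurse_pushdown_safe() walks the set-operation tree, rejecting any EXCEPT node and requiring every leaf subquery to pass its own check. A hypothetical sketch over an explicit binary tree follows; ToySetOp and its fields are invented, and each leaf carries a precomputed verdict in place of the call to subquery_is_pushdown_safe(). recurse_push_qual() above uses the same left-then-right recursion shape.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef enum
{
    TOY_LEAF,                   /* a RangeTblRef to a leaf subquery */
    TOY_UNION,
    TOY_INTERSECT,
    TOY_EXCEPT
} ToySetOpKind;

typedef struct ToySetOp
{
    ToySetOpKind kind;
    bool        leaf_safe;      /* leaves only: stand-in for the subquery check */
    const struct ToySetOp *larg;
    const struct ToySetOp *rarg;
} ToySetOp;

static bool
toy_recurse_pushdown_safe(const ToySetOp *node)
{
    assert(node != NULL);
    if (node->kind == TOY_LEAF)
        return node->leaf_safe;
    if (node->kind == TOY_EXCEPT)
        return false;           /* EXCEPT is no good */
    return toy_recurse_pushdown_safe(node->larg) &&
        toy_recurse_pushdown_safe(node->rarg);
}
```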

static void set_append_rel_pathlist(PlannerInfo *root, RelOptInfo *rel, Index rti, RangeTblEntry *rte) [static]

Definition at line 667 of file allpaths.c.

References accumulate_append_subpath(), add_path(), PlannerInfo::append_rel_list, Assert, bms_equal(), RelOptInfo::cheapest_total_path, AppendRelInfo::child_relid, compare_pathkeys(), create_append_path(), generate_mergeappend_paths(), get_cheapest_path_for_pathkeys(), IS_DUMMY_REL, lappend(), lfirst, NIL, NULL, Path::param_info, AppendRelInfo::parent_relid, PATH_REQ_OUTER, Path::pathkeys, RelOptInfo::pathlist, reparameterize_path(), set_cheapest(), set_rel_pathlist(), PlannerInfo::simple_rel_array, PlannerInfo::simple_rte_array, and TOTAL_COST.

Referenced by set_rel_pathlist().

{

int parentRTindex = rti;

List *live_childrels = NIL;

List *subpaths = NIL;

bool subpaths_valid = true;

List *all_child_pathkeys = NIL;

List *all_child_outers = NIL;

ListCell *l;

/*

* Generate access paths for each member relation, and remember the

* cheapest path for each one. Also, identify all pathkeys (orderings)

* and parameterizations (required_outer sets) available for the member

* relations.

*/

foreach(l, root->append_rel_list)

{

AppendRelInfo *appinfo = (AppendRelInfo *) lfirst(l);

int childRTindex;

RangeTblEntry *childRTE;

RelOptInfo *childrel;

ListCell *lcp;

/* append_rel_list contains all append rels; ignore others */

if (appinfo->parent_relid != parentRTindex)

continue;

/* Re-locate the child RTE and RelOptInfo */

childRTindex = appinfo->child_relid;

childRTE = root->simple_rte_array[childRTindex];

childrel = root->simple_rel_array[childRTindex];

/*

* Compute the child's access paths.

*/

set_rel_pathlist(root, childrel, childRTindex, childRTE);

/*

* If child is dummy, ignore it.

*/

if (IS_DUMMY_REL(childrel))

continue;

/*

* Child is live, so add it to the live_childrels list for use below.

*/

live_childrels = lappend(live_childrels, childrel);

/*

* If child has an unparameterized cheapest-total path, add that to

* the unparameterized Append path we are constructing for the parent.

* If not, there's no workable unparameterized path.

*/

if (childrel->cheapest_total_path->param_info == NULL)

subpaths = accumulate_append_subpath(subpaths,

childrel->cheapest_total_path);

else

subpaths_valid = false;

/*

* Collect lists of all the available path orderings and

* parameterizations for all the children. We use these as a

* heuristic to indicate which sort orderings and parameterizations we

* should build Append and MergeAppend paths for.

*/

foreach(lcp, childrel->pathlist)

{

Path *childpath = (Path *) lfirst(lcp);

List *childkeys = childpath->pathkeys;

Relids childouter = PATH_REQ_OUTER(childpath);

/* Unsorted paths don't contribute to pathkey list */

if (childkeys != NIL)

{

ListCell *lpk;

bool found = false;

/* Have we already seen this ordering? */

foreach(lpk, all_child_pathkeys)

{

List *existing_pathkeys = (List *) lfirst(lpk);

if (compare_pathkeys(existing_pathkeys,

childkeys) == PATHKEYS_EQUAL)

{

found = true;

break;

}

}

if (!found)

{

/* No, so add it to all_child_pathkeys */

all_child_pathkeys = lappend(all_child_pathkeys,

childkeys);

}

}

/* Unparameterized paths don't contribute to param-set list */

if (childouter)

{

ListCell *lco;

bool found = false;

/* Have we already seen this param set? */

foreach(lco, all_child_outers)

{

Relids existing_outers = (Relids) lfirst(lco);

if (bms_equal(existing_outers, childouter))

{

found = true;

break;

}

}

if (!found)

{

/* No, so add it to all_child_outers */

all_child_outers = lappend(all_child_outers,

childouter);

}

}

}

}

/*

* If we found unparameterized paths for all children, build an unordered,

* unparameterized Append path for the rel. (Note: this is correct even

* if we have zero or one live subpath due to constraint exclusion.)

*/

if (subpaths_valid)

add_path(rel, (Path *) create_append_path(rel, subpaths, NULL));

/*

* Also build unparameterized MergeAppend paths based on the collected

* list of child pathkeys.

*/

if (subpaths_valid)

generate_mergeappend_paths(root, rel, live_childrels,

all_child_pathkeys);

/*

* Build Append paths for each parameterization seen among the child rels.

* (This may look pretty expensive, but in most cases of practical

* interest, the child rels will expose mostly the same parameterizations,

* so that not that many cases actually get considered here.)

*

* The Append node itself cannot enforce quals, so all qual checking must

* be done in the child paths. This means that to have a parameterized

* Append path, we must have the exact same parameterization for each

* child path; otherwise some children might be failing to check the

* moved-down quals. To make them match up, we can try to increase the

* parameterization of lesser-parameterized paths.

*/

foreach(l, all_child_outers)

{

Relids required_outer = (Relids) lfirst(l);

ListCell *lcr;

/* Select the child paths for an Append with this parameterization */

subpaths = NIL;

subpaths_valid = true;

foreach(lcr, live_childrels)

{

RelOptInfo *childrel = (RelOptInfo *) lfirst(lcr);

Path *cheapest_total;

cheapest_total =

get_cheapest_path_for_pathkeys(childrel->pathlist,

NIL,

required_outer,

TOTAL_COST);

Assert(cheapest_total != NULL);

/* Children must have exactly the desired parameterization */

if (!bms_equal(PATH_REQ_OUTER(cheapest_total), required_outer))

{

cheapest_total = reparameterize_path(root, cheapest_total,

required_outer, 1.0);

if (cheapest_total == NULL)

{

subpaths_valid = false;

break;

}

}

subpaths = accumulate_append_subpath(subpaths, cheapest_total);

}

if (subpaths_valid)

add_path(rel, (Path *)

create_append_path(rel, subpaths, required_outer));

}

/* Select cheapest paths */

set_cheapest(rel);

}
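set_append_rel_pathlist() records each distinct parameterization (required_outer set) seen among the children's paths, skipping unparameterized ones, and later tries to build one Append per entry. The deduplication is a linear search comparing sets with bms_equal(). A hypothetical sketch of that step, with Relids modeled as 64-bit masks (an assumption; the real type is Bitmapset) and an invented function name:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Collect each distinct nonzero required-outer set once, in first-seen order. */
static size_t
toy_collect_outers(const uint64_t *path_outers, size_t npaths,
                   uint64_t *out, size_t cap)
{
    size_t      nout = 0;

    for (size_t i = 0; i < npaths; i++)
    {
        uint64_t    childouter = path_outers[i];
        size_t      j;

        if (childouter == 0)
            continue;           /* unparameterized paths don't contribute */
        for (j = 0; j < nout; j++)
            if (out[j] == childouter)   /* like bms_equal() */
                break;
        if (j == nout)
        {
            assert(nout < cap);
            out[nout++] = childouter;
        }
    }
    return nout;
}
```

As the original comment notes, the quadratic search is acceptable because children usually expose only a few distinct parameterizations.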

static void set_append_rel_size(PlannerInfo *root, RelOptInfo *rel, Index rti, RangeTblEntry *rte) [static]

Definition at line 443 of file allpaths.c.

References add_child_rel_equivalences(), adjust_appendrel_attrs(), PlannerInfo::append_rel_list, Assert, RelOptInfo::attr_widths, RelOptInfo::baserestrictinfo, AppendRelInfo::child_relid, DatumGetBool, eval_const_expressions(), exprType(), exprTypmod(), find_base_rel(), forboth, get_all_actual_clauses(), get_typavgwidth(), RelOptInfo::has_eclass_joins, has_useful_pathkeys(), i, IS_DUMMY_REL, IsA, RelOptInfo::joininfo, lfirst, make_ands_explicit(), make_ands_implicit(), make_restrictinfos_from_actual_clauses(), RelOptInfo::max_attr, RelOptInfo::min_attr, palloc0(), AppendRelInfo::parent_relid, pfree(), relation_excluded_by_constraints(), RelOptInfo::relid, RELOPT_OTHER_MEMBER_REL, RelOptInfo::reloptkind, RelOptInfo::reltargetlist, rint(), RelOptInfo::rows, set_dummy_rel_pathlist(), set_rel_size(), PlannerInfo::simple_rte_array, RelOptInfo::tuples, Var::varattno, and RelOptInfo::width.

Referenced by set_rel_size().

{

int parentRTindex = rti;

double parent_rows;

double parent_size;

double *parent_attrsizes;

int nattrs;

ListCell *l;

/*

* Initialize to compute size estimates for whole append relation.

*

* We handle width estimates by weighting the widths of different child

* rels proportionally to their number of rows. This is sensible because

* the use of width estimates is mainly to compute the total relation

* "footprint" if we have to sort or hash it. To do this, we sum the

* total equivalent size (in "double" arithmetic) and then divide by the

* total rowcount estimate. This is done separately for the total rel

* width and each attribute.

*

* Note: if you consider changing this logic, beware that child rels could

* have zero rows and/or width, if they were excluded by constraints.

*/

parent_rows = 0;

parent_size = 0;

nattrs = rel->max_attr - rel->min_attr + 1;

parent_attrsizes = (double *) palloc0(nattrs * sizeof(double));

foreach(l, root->append_rel_list)

{

AppendRelInfo *appinfo = (AppendRelInfo *) lfirst(l);

int childRTindex;

RangeTblEntry *childRTE;

RelOptInfo *childrel;

List *childquals;

Node *childqual;

ListCell *parentvars;

ListCell *childvars;

/* append_rel_list contains all append rels; ignore others */

if (appinfo->parent_relid != parentRTindex)

continue;

childRTindex = appinfo->child_relid;

childRTE = root->simple_rte_array[childRTindex];

/*

* The child rel's RelOptInfo was already created during

* add_base_rels_to_query.

*/

childrel = find_base_rel(root, childRTindex);

Assert(childrel->reloptkind == RELOPT_OTHER_MEMBER_REL);

/*

* We have to copy the parent's targetlist and quals to the child,

* with appropriate substitution of variables. However, only the

* baserestrictinfo quals are needed before we can check for

* constraint exclusion; so do that first and then check to see if we

* can disregard this child.

*

* As of 8.4, the child rel's targetlist might contain non-Var

* expressions, which means that substitution into the quals could

* produce opportunities for const-simplification, and perhaps even

* pseudoconstant quals. To deal with this, we strip the RestrictInfo

* nodes, do the substitution, do const-simplification, and then

* reconstitute the RestrictInfo layer.

*/

childquals = get_all_actual_clauses(rel->baserestrictinfo);

childquals = (List *) adjust_appendrel_attrs(root,

(Node *) childquals,

appinfo);

childqual = eval_const_expressions(root, (Node *)

make_ands_explicit(childquals));

if (childqual && IsA(childqual, Const) &&

(((Const *) childqual)->constisnull ||

!DatumGetBool(((Const *) childqual)->constvalue)))

{

/*

* Restriction reduces to constant FALSE or constant NULL after

* substitution, so this child need not be scanned.

*/

set_dummy_rel_pathlist(childrel);

continue;

}

childquals = make_ands_implicit((Expr *) childqual);

childquals = make_restrictinfos_from_actual_clauses(root,

childquals);

childrel->baserestrictinfo = childquals;

if (relation_excluded_by_constraints(root, childrel, childRTE))

{

/*

* This child need not be scanned, so we can omit it from the

* appendrel.

*/

set_dummy_rel_pathlist(childrel);

continue;

}

/*

* CE failed, so finish copying/modifying targetlist and join quals.

*

* Note: the resulting childrel->reltargetlist may contain arbitrary

* expressions, which otherwise would not occur in a reltargetlist.

* Code that might be looking at an appendrel child must cope with

* such. Note in particular that "arbitrary expression" can include

* "Var belonging to another relation", due to LATERAL references.

*/

childrel->joininfo = (List *)

adjust_appendrel_attrs(root,

(Node *) rel->joininfo,

appinfo);

childrel->reltargetlist = (List *)

adjust_appendrel_attrs(root,

(Node *) rel->reltargetlist,

appinfo);

/*

* We have to make child entries in the EquivalenceClass data

* structures as well. This is needed either if the parent

* participates in some eclass joins (because we will want to consider

* inner-indexscan joins on the individual children) or if the parent

* has useful pathkeys (because we should try to build MergeAppend

* paths that produce those sort orderings).

*/

if (rel->has_eclass_joins || has_useful_pathkeys(root, rel))

add_child_rel_equivalences(root, appinfo, rel, childrel);

childrel->has_eclass_joins = rel->has_eclass_joins;

/*

* Note: we could compute appropriate attr_needed data for the child's

* variables, by transforming the parent's attr_needed through the

* translated_vars mapping. However, currently there's no need

* because attr_needed is only examined for base relations not

* otherrels. So we just leave the child's attr_needed empty.

*/

/*

* Compute the child's size.

*/

set_rel_size(root, childrel, childRTindex, childRTE);

/*

* It is possible that constraint exclusion detected a contradiction

* within a child subquery, even though we didn't prove one above. If

* so, we can skip this child.

*/

if (IS_DUMMY_REL(childrel))

continue;

/*

* Accumulate size information from each live child.

*/

if (childrel->rows > 0)

{

parent_rows += childrel->rows;

parent_size += childrel->width * childrel->rows;

/*

* Accumulate per-column estimates too. We need not do anything

* for PlaceHolderVars in the parent list. If child expression

* isn't a Var, or we didn't record a width estimate for it, we

* have to fall back on a datatype-based estimate.

*

* By construction, child's reltargetlist is 1-to-1 with parent's.

*/

forboth(parentvars, rel->reltargetlist,

childvars, childrel->reltargetlist)

{

Var *parentvar = (Var *) lfirst(parentvars);

Node *childvar = (Node *) lfirst(childvars);

if (IsA(parentvar, Var))

{

int pndx = parentvar->varattno - rel->min_attr;

int32 child_width = 0;

if (IsA(childvar, Var) &&

((Var *) childvar)->varno == childrel->relid)

{

int cndx = ((Var *) childvar)->varattno - childrel->min_attr;

child_width = childrel->attr_widths[cndx];

}

if (child_width <= 0)

child_width = get_typavgwidth(exprType(childvar),

exprTypmod(childvar));

Assert(child_width > 0);

parent_attrsizes[pndx] += child_width * childrel->rows;

}

}

}

}

/*

* Save the finished size estimates.

*/

rel->rows = parent_rows;

if (parent_rows > 0)

{

int i;

rel->width = rint(parent_size / parent_rows);

for (i = 0; i < nattrs; i++)

rel->attr_widths[i] = rint(parent_attrsizes[i] / parent_rows);

}

else

rel->width = 0; /* attr_widths should be zero already */

/*

* Set "raw tuples" count equal to "rows" for the appendrel; needed

* because some places assume rel->tuples is valid for any baserel.

*/

rel->tuples = parent_rows;

pfree(parent_attrsizes);

}
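set_append_rel_size() weights each live child's width by its row count, so the parent width is sum(width_i * rows_i) / sum(rows_i). For example, children of 1000 rows at 40 bytes and 3000 rows at 20 bytes give (40000 + 60000) / 4000 = 25 bytes, where an unweighted mean would give 30. The sketch below computes only the whole-row width, not attr_widths[]. ToyChildEst and the function name are hypothetical, and rounding adds 0.5 instead of calling rint(), which agrees for non-negative values except on exact halves.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical per-child size estimate. */
typedef struct
{
    double      rows;           /* estimated rows; 0 for an excluded child */
    int         width;          /* estimated average row width in bytes */
} ToyChildEst;

/*
 * Row-weighted average width, or 0 when no child has rows.
 * Stores the summed row count in *total_rows.
 */
static int
toy_appendrel_width(const ToyChildEst *kids, size_t n, double *total_rows)
{
    double      parent_rows = 0;
    double      parent_size = 0;

    for (size_t i = 0; i < n; i++)
    {
        assert(kids[i].rows >= 0 && kids[i].width >= 0);
        if (kids[i].rows > 0)
        {
            parent_rows += kids[i].rows;
            parent_size += kids[i].width * kids[i].rows;
        }
    }
    *total_rows = parent_rows;
    if (parent_rows <= 0)
        return 0;
    return (int) (parent_size / parent_rows + 0.5);
}
```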

static void set_base_rel_pathlists(PlannerInfo *root) [static]

Definition at line 186 of file allpaths.c.

References Assert, NULL, RelOptInfo::relid, RELOPT_BASEREL, RelOptInfo::reloptkind, set_rel_pathlist(), PlannerInfo::simple_rel_array, PlannerInfo::simple_rel_array_size, and PlannerInfo::simple_rte_array.

Referenced by make_one_rel().

{

Index rti;

for (rti = 1; rti < root->simple_rel_array_size; rti++)

{

RelOptInfo *rel = root->simple_rel_array[rti];

/* there may be empty slots corresponding to non-baserel RTEs */

if (rel == NULL)

continue;

Assert(rel->relid == rti); /* sanity check on array */

/* ignore RTEs that are "other rels" */

if (rel->reloptkind != RELOPT_BASEREL)

continue;

set_rel_pathlist(root, rel, rti, root->simple_rte_array[rti]);

}

}

static void set_base_rel_sizes(PlannerInfo *root) [static]

Definition at line 157 of file allpaths.c.

References Assert, NULL, RelOptInfo::relid, RELOPT_BASEREL, RelOptInfo::reloptkind, set_rel_size(), PlannerInfo::simple_rel_array, PlannerInfo::simple_rel_array_size, and PlannerInfo::simple_rte_array.

Referenced by make_one_rel().

{

Index rti;

for (rti = 1; rti < root->simple_rel_array_size; rti++)

{

RelOptInfo *rel = root->simple_rel_array[rti];

/* there may be empty slots corresponding to non-baserel RTEs */

if (rel == NULL)

continue;

Assert(rel->relid == rti); /* sanity check on array */

/* ignore RTEs that are "other rels" */

if (rel->reloptkind != RELOPT_BASEREL)

continue;

set_rel_size(root, rel, rti, root->simple_rte_array[rti]);

}

}

static void set_cte_pathlist(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 1239 of file allpaths.c.

References add_path(), Assert, create_ctescan_path(), PlannerInfo::cte_plan_ids, RangeTblEntry::ctelevelsup, Query::cteList, CommonTableExpr::ctename, RangeTblEntry::ctename, elog, ERROR, PlannerInfo::glob, RelOptInfo::lateral_relids, lfirst, list_length(), list_nth(), list_nth_int(), NULL, PlannerInfo::parent_root, PlannerInfo::parse, set_cheapest(), set_cte_size_estimates(), and PlannerGlobal::subplans.

Referenced by set_rel_size().

{

Plan *cteplan;

PlannerInfo *cteroot;

Index levelsup;

int ndx;

ListCell *lc;

int plan_id;

Relids required_outer;

/*

* Find the referenced CTE, and locate the plan previously made for it.

*/

levelsup = rte->ctelevelsup;

cteroot = root;

while (levelsup-- > 0)

{

cteroot = cteroot->parent_root;

if (!cteroot) /* shouldn't happen */

elog(ERROR, "bad levelsup for CTE \"%s\"", rte->ctename);

}

/*

* Note: cte_plan_ids can be shorter than cteList, if we are still working

* on planning the CTEs (ie, this is a side-reference from another CTE).

* So we mustn't use forboth here.

*/

ndx = 0;

foreach(lc, cteroot->parse->cteList)

{

CommonTableExpr *cte = (CommonTableExpr *) lfirst(lc);

if (strcmp(cte->ctename, rte->ctename) == 0)

break;

ndx++;

}

if (lc == NULL) /* shouldn't happen */

elog(ERROR, "could not find CTE \"%s\"", rte->ctename);

if (ndx >= list_length(cteroot->cte_plan_ids))

elog(ERROR, "could not find plan for CTE \"%s\"", rte->ctename);

plan_id = list_nth_int(cteroot->cte_plan_ids, ndx);

Assert(plan_id > 0);

cteplan = (Plan *) list_nth(root->glob->subplans, plan_id - 1);

/* Mark rel with estimated output rows, width, etc */

set_cte_size_estimates(root, rel, cteplan);

/*

* We don't support pushing join clauses into the quals of a CTE scan, but

* it could still have required parameterization due to LATERAL refs in

* its tlist. (That can only happen if the CTE scan is on a relation

* pulled up out of a UNION ALL appendrel.)

*/

required_outer = rel->lateral_relids;

/* Generate appropriate path */

add_path(rel, create_ctescan_path(root, rel, required_outer));

/* Select cheapest path (pretty easy in this case...) */

set_cheapest(rel);

}
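The lookup at the start of set_cte_pathlist() climbs ctelevelsup parent levels, finds the CTE's position by name in that level's cteList, and maps it through cte_plan_ids to a 1-based subplan number. Because cte_plan_ids can be shorter than cteList while CTEs are still being planned, the index needs its own bounds check. A hypothetical sketch follows: ToyLevel and toy_find_cte_subplan are invented, and -1 stands in for each elog(ERROR).

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, heavily reduced planner level. */
typedef struct ToyLevel
{
    const struct ToyLevel *parent;  /* like PlannerInfo.parent_root */
    const char **cte_names;         /* like parse->cteList, by name */
    size_t      ncte;
    const int  *cte_plan_ids;       /* may be shorter than cte_names */
    size_t      nplan_ids;
} ToyLevel;

/* Returns the 0-based subplan index for CTE "name", or -1 on error. */
static int
toy_find_cte_subplan(const ToyLevel *root, unsigned levelsup, const char *name)
{
    const ToyLevel *cteroot = root;
    size_t      ndx;
    int         plan_id;

    while (levelsup-- > 0)
    {
        cteroot = cteroot->parent;
        if (cteroot == NULL)
            return -1;                  /* bad levelsup */
    }
    for (ndx = 0; ndx < cteroot->ncte; ndx++)
        if (strcmp(cteroot->cte_names[ndx], name) == 0)
            break;
    if (ndx == cteroot->ncte)
        return -1;                      /* could not find CTE */
    if (ndx >= cteroot->nplan_ids)
        return -1;                      /* CTE not planned yet */
    plan_id = cteroot->cte_plan_ids[ndx];
    assert(plan_id > 0);
    return plan_id - 1;                 /* plan ids are 1-based */
}
```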

static void set_dummy_rel_pathlist(RelOptInfo *rel) [static]

Definition at line 995 of file allpaths.c.

References add_path(), create_append_path(), NIL, NULL, RelOptInfo::pathlist, RelOptInfo::rows, set_cheapest(), and RelOptInfo::width.

Referenced by set_append_rel_size(), set_rel_size(), and set_subquery_pathlist().

{

/* Set dummy size estimates --- we leave attr_widths[] as zeroes */

rel->rows = 0;

rel->width = 0;

/* Discard any pre-existing paths; no further need for them */

rel->pathlist = NIL;

add_path(rel, (Path *) create_append_path(rel, NIL, NULL));

/* Select cheapest path (pretty easy in this case...) */

set_cheapest(rel);

}

static void set_foreign_pathlist(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 422 of file allpaths.c.

References RelOptInfo::fdwroutine, FdwRoutine::GetForeignPaths, RangeTblEntry::relid, and set_cheapest().

Referenced by set_rel_pathlist().

{

/* Call the FDW's GetForeignPaths function to generate path(s) */

rel->fdwroutine->GetForeignPaths(root, rel, rte->relid);

/* Select cheapest path */

set_cheapest(rel);

}

static void set_foreign_size(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 408 of file allpaths.c.

References RelOptInfo::fdwroutine, FdwRoutine::GetForeignRelSize, RangeTblEntry::relid, and set_foreign_size_estimates().

Referenced by set_rel_size().

{

/* Mark rel with estimated output rows, width, etc */

set_foreign_size_estimates(root, rel);

/* Let FDW adjust the size estimates, if it can */

rel->fdwroutine->GetForeignRelSize(root, rel, rte->relid);

}
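Both foreign-table functions delegate to the foreign data wrapper through the function-pointer table in `rel->fdwroutine`. The sizing phase first sets default estimates and then calls `GetForeignRelSize`. The path phase calls `GetForeignPaths` and then `set_cheapest`. The sketch below shows only that dispatch pattern. All `Toy*`/`demo_*` names are hypothetical, and the callbacks are simplified: the real ones also receive the `PlannerInfo`, and `relid` is an `Oid`.

```c
#include <assert.h>

/* Hypothetical toy types standing in for RelOptInfo and FdwRoutine. */
typedef struct ToyRel ToyRel;

typedef struct ToyFdwRoutine {
    void (*GetRelSize)(ToyRel *rel, unsigned int relid);
    void (*GetPaths)(ToyRel *rel, unsigned int relid);
} ToyFdwRoutine;

struct ToyRel {
    double               rows;
    int                  npaths;
    const ToyFdwRoutine *fdwroutine;
};

/* An example wrapper that claims the remote table returns 500 rows. */
static void demo_rel_size(ToyRel *rel, unsigned int relid)
{
    (void) relid;
    rel->rows = 500;
}

static void demo_paths(ToyRel *rel, unsigned int relid)
{
    (void) relid;
    rel->npaths++;              /* a real callback would call add_path() */
}

static const ToyFdwRoutine demo_fdw = { demo_rel_size, demo_paths };

/* Planner side: set a default estimate first, then let the FDW refine it. */
static void toy_set_foreign_size(ToyRel *rel, unsigned int relid)
{
    rel->rows = 1000;           /* placeholder default estimate */
    rel->fdwroutine->GetRelSize(rel, relid);
}

static void toy_set_foreign_pathlist(ToyRel *rel, unsigned int relid)
{
    rel->fdwroutine->GetPaths(rel, relid);
    assert(rel->npaths > 0);    /* set_cheapest() needs at least one path */
}
```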

static void set_function_pathlist(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 1190 of file allpaths.c.

References add_path(), create_functionscan_path(), RelOptInfo::lateral_relids, and set_cheapest().

Referenced by set_rel_pathlist().

{

Relids required_outer;

/*

* We don't support pushing join clauses into the quals of a function

* scan, but it could still have required parameterization due to LATERAL

* refs in the function expression.

*/

required_outer = rel->lateral_relids;

/* Generate appropriate path */

add_path(rel, create_functionscan_path(root, rel, required_outer));

/* Select cheapest path (pretty easy in this case...) */

set_cheapest(rel);

}
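Several scan types here set `required_outer = rel->lateral_relids`. This makes the path parameterized by the relations that its LATERAL references point to. The sketch below is a simplified view of what that set means. It assumes a 64-bit mask in place of PostgreSQL's `Bitmapset`. It also assumes that such a path is fully satisfied only once the outer side of a join supplies every relation in the set, which is a subset test in the spirit of `bms_is_subset`. The `Toy*`/`toy_*` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-in for Relids: bit i set means range-table index i. */
typedef uint64_t ToyRelids;

static ToyRelids toy_make_singleton(int rti)
{
    assert(rti >= 0 && rti < 64);
    return (ToyRelids) 1 << rti;
}

/* Same idea as bms_is_subset(a, b): every member of a is also in b. */
static bool toy_is_subset(ToyRelids a, ToyRelids b)
{
    return (a & ~b) == 0;
}

/*
 * Simplified view: a path with a non-empty required_outer can only be
 * satisfied once the outer side supplies all of the required rels.
 */
static bool toy_param_satisfied(ToyRelids required_outer, ToyRelids outer)
{
    return toy_is_subset(required_outer, outer);
}
```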

static void set_plain_rel_pathlist(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 378 of file allpaths.c.

References add_path(), create_index_paths(), create_seqscan_path(), create_tidscan_paths(), RelOptInfo::lateral_relids, and set_cheapest().

Referenced by set_rel_pathlist().

{

Relids required_outer;

/*

* We don't support pushing join clauses into the quals of a seqscan, but

* it could still have required parameterization due to LATERAL refs in

* its tlist. (That can only happen if the seqscan is on a relation

* pulled up out of a UNION ALL appendrel.)

*/

required_outer = rel->lateral_relids;

/* Consider sequential scan */

add_path(rel, create_seqscan_path(root, rel, required_outer));

/* Consider index scans */

create_index_paths(root, rel);

/* Consider TID scans */

create_tidscan_paths(root, rel);

/* Now find the cheapest of the paths for this rel */

set_cheapest(rel);

}
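`set_plain_rel_pathlist` offers several candidates through `add_path` (a sequential scan, then any index and TID scans) and lets `set_cheapest` choose. The sketch below reduces this to "remember every candidate, then pick the lowest total cost". The real `add_path` also discards dominated paths, comparing pathkeys, parameterization and startup cost. The real `set_cheapest` also tracks the cheapest startup path. The `Toy*`/`toy_*` names and the costs in the test are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

#define TOY_MAX_PATHS 8

/* Hypothetical toy path: a label plus an estimated total cost. */
typedef struct ToyPath {
    const char *name;
    double      total_cost;
} ToyPath;

typedef struct ToyRel {
    ToyPath        pathlist[TOY_MAX_PATHS];
    int            npaths;
    const ToyPath *cheapest_total_path;
} ToyRel;

/* Simplified add_path: just remember the candidate (no pruning). */
static void toy_add_path(ToyRel *rel, const char *name, double cost)
{
    assert(rel->npaths < TOY_MAX_PATHS);
    rel->pathlist[rel->npaths].name = name;
    rel->pathlist[rel->npaths].total_cost = cost;
    rel->npaths++;
}

/* Simplified set_cheapest: pick the path with the lowest total cost. */
static void toy_set_cheapest(ToyRel *rel)
{
    assert(rel->npaths > 0);    /* the real function raises an error here */
    rel->cheapest_total_path = &rel->pathlist[0];
    for (int i = 1; i < rel->npaths; i++)
        if (rel->pathlist[i].total_cost <
            rel->cheapest_total_path->total_cost)
            rel->cheapest_total_path = &rel->pathlist[i];
}
```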

static void set_plain_rel_size(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 350 of file allpaths.c.

References check_partial_indexes(), create_or_index_quals(), and set_baserel_size_estimates().

Referenced by set_rel_size().

{

/*

* Test any partial indexes of rel for applicability. We must do this

* first since partial unique indexes can affect size estimates.

*/

check_partial_indexes(root, rel);

/* Mark rel with estimated output rows, width, etc */

set_baserel_size_estimates(root, rel);

/*

* Check to see if we can extract any restriction conditions from join

* quals that are OR-of-AND structures. If so, add them to the rel's

* restriction list, and redo the above steps.

*/

if (create_or_index_quals(root, rel))

{

check_partial_indexes(root, rel);

set_baserel_size_estimates(root, rel);

}

}
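The size estimate depends on the rel's restriction clauses. This is why `set_plain_rel_size` repeats `check_partial_indexes` and `set_baserel_size_estimates` whenever `create_or_index_quals` adds new clauses. The sketch below is a rough model of such an estimate. It assumes that clauses are independent, so the combined selectivity is a product. It also clamps the result in the spirit of `clamp_row_est()`, giving at least one row and a whole number. PostgreSQL's `clauselist_selectivity` is considerably more elaborate, and the `toy_*` names are hypothetical.

```c
#include <assert.h>

/* In the spirit of clamp_row_est(): at least 1 row, whole numbers. */
static double toy_clamp_rows(double nrows)
{
    if (nrows <= 1.0)
        return 1.0;
    return (double) (long long) (nrows + 0.5);  /* round half up */
}

/*
 * Hypothetical estimate: treat the restriction clauses as independent,
 * so the combined selectivity is the product of the individual ones.
 */
static double toy_rel_rows(double tuples, const double *sels, int nsels)
{
    double sel = 1.0;

    for (int i = 0; i < nsels; i++)
    {
        assert(sels[i] >= 0.0 && sels[i] <= 1.0);
        sel *= sels[i];
    }
    return toy_clamp_rows(tuples * sel);
}
```

With 10000 tuples, one clause of selectivity 0.1 gives 1000 rows. Adding a second clause of selectivity 0.5 halves that to 500. So an added clause changes the estimate, and the estimation has to be redone.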

static void set_rel_pathlist(PlannerInfo *root, RelOptInfo *rel, Index rti, RangeTblEntry *rte) [static]

Definition at line 292 of file allpaths.c.

References elog, ERROR, RangeTblEntry::inh, IS_DUMMY_REL, RangeTblEntry::relkind, RELKIND_FOREIGN_TABLE, RTE_CTE, RTE_FUNCTION, RTE_RELATION, RTE_SUBQUERY, RTE_VALUES, RelOptInfo::rtekind, set_append_rel_pathlist(), set_foreign_pathlist(), set_function_pathlist(), set_plain_rel_pathlist(), and set_values_pathlist().

Referenced by set_append_rel_pathlist(), and set_base_rel_pathlists().

{

if (IS_DUMMY_REL(rel))

{

/* We already proved the relation empty, so nothing more to do */

}

else if (rte->inh)

{

/* It's an "append relation", process accordingly */

set_append_rel_pathlist(root, rel, rti, rte);

}

else

{

switch (rel->rtekind)

{

case RTE_RELATION:

if (rte->relkind == RELKIND_FOREIGN_TABLE)

{

/* Foreign table */

set_foreign_pathlist(root, rel, rte);

}

else

{

/* Plain relation */

set_plain_rel_pathlist(root, rel, rte);

}

break;

case RTE_SUBQUERY:

/* Subquery --- fully handled during set_rel_size */

break;

case RTE_FUNCTION:

/* RangeFunction */

set_function_pathlist(root, rel, rte);

break;

case RTE_VALUES:

/* Values list */

set_values_pathlist(root, rel, rte);

break;

case RTE_CTE:

/* CTE reference --- fully handled during set_rel_size */

break;

default:

elog(ERROR, "unexpected rtekind: %d", (int) rel->rtekind);

break;

}

}

#ifdef OPTIMIZER_DEBUG

debug_print_rel(root, rel);

#endif

}

static void set_rel_size(PlannerInfo *root, RelOptInfo *rel, Index rti, RangeTblEntry *rte) [static]

Definition at line 213 of file allpaths.c.

References elog, ERROR, RangeTblEntry::inh, relation_excluded_by_constraints(), RangeTblEntry::relkind, RELKIND_FOREIGN_TABLE, RELOPT_BASEREL, RelOptInfo::reloptkind, RTE_CTE, RTE_FUNCTION, RTE_RELATION, RTE_SUBQUERY, RTE_VALUES, RelOptInfo::rtekind, RangeTblEntry::self_reference, set_append_rel_size(), set_cte_pathlist(), set_dummy_rel_pathlist(), set_foreign_size(), set_function_size_estimates(), set_plain_rel_size(), set_subquery_pathlist(), set_values_size_estimates(), and set_worktable_pathlist().

Referenced by set_append_rel_size(), and set_base_rel_sizes().

{

if (rel->reloptkind == RELOPT_BASEREL &&

relation_excluded_by_constraints(root, rel, rte))

{

/*

* We proved we don't need to scan the rel via constraint exclusion,

* so set up a single dummy path for it. Here we only check this for

* regular baserels; if it's an otherrel, CE was already checked in

* set_append_rel_pathlist().

*

* In this case, we go ahead and set up the relation's path right away

* instead of leaving it for set_rel_pathlist to do. This is because

* we don't have a convention for marking a rel as dummy except by

* assigning a dummy path to it.

*/

set_dummy_rel_pathlist(rel);

}

else if (rte->inh)

{

/* It's an "append relation", process accordingly */

set_append_rel_size(root, rel, rti, rte);

}

else

{

switch (rel->rtekind)

{

case RTE_RELATION:

if (rte->relkind == RELKIND_FOREIGN_TABLE)

{

/* Foreign table */

set_foreign_size(root, rel, rte);

}

else

{

/* Plain relation */

set_plain_rel_size(root, rel, rte);

}

break;

case RTE_SUBQUERY:

/*

* Subqueries don't support making a choice between

* parameterized and unparameterized paths, so just go ahead

* and build their paths immediately.

*/

set_subquery_pathlist(root, rel, rti, rte);

break;

case RTE_FUNCTION:

set_function_size_estimates(root, rel);

break;

case RTE_VALUES:

set_values_size_estimates(root, rel);

break;

case RTE_CTE:

/*

* CTEs don't support making a choice between parameterized

* and unparameterized paths, so just go ahead and build their

* paths immediately.

*/

if (rte->self_reference)

set_worktable_pathlist(root, rel, rte);

else

set_cte_pathlist(root, rel, rte);

break;

default:

elog(ERROR, "unexpected rtekind: %d", (int) rel->rtekind);

break;

}

}

}
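`set_rel_size` and `set_rel_pathlist` switch on the same `rtekind` but split the work between two phases. For plain and foreign relations, functions and VALUES lists, the first phase sets sizes and the second builds paths. Subqueries and CTEs build their paths during sizing, because they cannot choose between parameterized and unparameterized paths. Dummy rels also get their path early. The sketch below encodes only that split, using a hypothetical enum that mirrors the cases above.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical mirror of the RTEKind values handled above. */
typedef enum {
    TOY_RTE_RELATION,
    TOY_RTE_SUBQUERY,
    TOY_RTE_FUNCTION,
    TOY_RTE_VALUES,
    TOY_RTE_CTE
} ToyRteKind;

/*
 * True when paths are built during the size phase (set_rel_size) rather
 * than the path phase (set_rel_pathlist), following the comments above.
 */
static bool toy_paths_built_in_size_phase(ToyRteKind kind)
{
    switch (kind)
    {
        case TOY_RTE_SUBQUERY:
        case TOY_RTE_CTE:
            return true;
        case TOY_RTE_RELATION:
        case TOY_RTE_FUNCTION:
        case TOY_RTE_VALUES:
            return false;
    }
    assert(!"unexpected rtekind");  /* the real code uses elog(ERROR) */
    return false;
}
```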

static void set_subquery_pathlist(PlannerInfo *root, RelOptInfo *rel, Index rti, RangeTblEntry *rte) [static]

Definition at line 1045 of file allpaths.c.

References add_path(), Assert, RelOptInfo::baserestrictinfo, RestrictInfo::clause, contain_leaky_functions(), convert_subquery_pathkeys(), copyObject(), create_subqueryscan_path(), Query::distinctClause, PlannerInfo::glob, Query::groupClause, has_multiple_baserels(), Query::hasAggs, Query::havingQual, is_dummy_plan(), lappend(), RelOptInfo::lateral_relids, lfirst, list_length(), NIL, palloc0(), PlannerInfo::parse, parse(), pfree(), PlannerInfo::plan_params, RestrictInfo::pseudoconstant, qual_is_pushdown_safe(), PlannerInfo::query_pathkeys, RangeTblEntry::security_barrier, set_cheapest(), set_dummy_rel_pathlist(), set_subquery_size_estimates(), Query::sortClause, RelOptInfo::subplan, RelOptInfo::subplan_params, RangeTblEntry::subquery, subquery_is_pushdown_safe(), subquery_planner(), subquery_push_qual(), RelOptInfo::subroot, Query::targetList, and PlannerInfo::tuple_fraction.

Referenced by set_rel_size().

{

Query *parse = root->parse;

Query *subquery = rte->subquery;

Relids required_outer;

bool *differentTypes;

double tuple_fraction;

PlannerInfo *subroot;

List *pathkeys;

/*

* Must copy the Query so that planning doesn't mess up the RTE contents

* (really really need to fix the planner to not scribble on its input,

* someday).

*/

subquery = copyObject(subquery);

/*

* If it's a LATERAL subquery, it might contain some Vars of the current

* query level, requiring it to be treated as parameterized, even though

* we don't support pushing down join quals into subqueries.

*/

required_outer = rel->lateral_relids;

/* We need a workspace for keeping track of set-op type coercions */

differentTypes = (bool *)

palloc0((list_length(subquery->targetList) + 1) * sizeof(bool));

/*

* If there are any restriction clauses that have been attached to the

* subquery relation, consider pushing them down to become WHERE or HAVING

* quals of the subquery itself. This transformation is useful because it

* may allow us to generate a better plan for the subquery than evaluating

* all the subquery output rows and then filtering them.

*

* There are several cases where we cannot push down clauses. Restrictions

* involving the subquery are checked by subquery_is_pushdown_safe().

* Restrictions on individual clauses are checked by

* qual_is_pushdown_safe(). Also, we don't want to push down

* pseudoconstant clauses; better to have the gating node above the

* subquery.

*

* Also, if the sub-query has the "security_barrier" flag, it means the

* sub-query originated from a view that must enforce row-level security.

* Then we must not push down quals that contain leaky functions.

*

* Non-pushed-down clauses will get evaluated as qpquals of the

* SubqueryScan node.

*

* XXX Are there any cases where we want to make a policy decision not to

* push down a pushable qual, because it'd result in a worse plan?

*/

if (rel->baserestrictinfo != NIL &&

subquery_is_pushdown_safe(subquery, subquery, differentTypes))

{

/* OK to consider pushing down individual quals */

List *upperrestrictlist = NIL;

ListCell *l;

foreach(l, rel->baserestrictinfo)

{

RestrictInfo *rinfo = (RestrictInfo *) lfirst(l);

Node *clause = (Node *) rinfo->clause;

if (!rinfo->pseudoconstant &&

(!rte->security_barrier ||

!contain_leaky_functions(clause)) &&

qual_is_pushdown_safe(subquery, rti, clause, differentTypes))

{

/* Push it down */

subquery_push_qual(subquery, rte, rti, clause);

}

else

{

/* Keep it in the upper query */

upperrestrictlist = lappend(upperrestrictlist, rinfo);

}

}

rel->baserestrictinfo = upperrestrictlist;

}

pfree(differentTypes);

/*

* We can safely pass the outer tuple_fraction down to the subquery if the

* outer level has no joining, aggregation, or sorting to do. Otherwise

* we'd better tell the subquery to plan for full retrieval. (XXX This

* could probably be made more intelligent ...)

*/

if (parse->hasAggs ||

parse->groupClause ||

parse->havingQual ||

parse->distinctClause ||

parse->sortClause ||

has_multiple_baserels(root))

tuple_fraction = 0.0; /* default case */

else

tuple_fraction = root->tuple_fraction;

/* plan_params should not be in use in current query level */

Assert(root->plan_params == NIL);

/* Generate the plan for the subquery */

rel->subplan = subquery_planner(root->glob, subquery,

root,

false, tuple_fraction,

&subroot);

rel->subroot = subroot;

/* Isolate the params needed by this specific subplan */

rel->subplan_params = root->plan_params;

root->plan_params = NIL;

/*

* It's possible that constraint exclusion proved the subquery empty. If

* so, it's convenient to turn it back into a dummy path so that we will

* recognize appropriate optimizations at this query level. (But see

* create_append_plan in createplan.c, which has to reverse this

* substitution.)

*/

if (is_dummy_plan(rel->subplan))

{

set_dummy_rel_pathlist(rel);

return;

}

/* Mark rel with estimated output rows, width, etc */

set_subquery_size_estimates(root, rel);

/* Convert subquery pathkeys to outer representation */

pathkeys = convert_subquery_pathkeys(root, rel, subroot->query_pathkeys);

/* Generate appropriate path */

add_path(rel, create_subqueryscan_path(root, rel, pathkeys, required_outer));

/* Select cheapest path (pretty easy in this case...) */

set_cheapest(rel);

}
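The pushdown loop in `set_subquery_pathlist` splits `baserestrictinfo` into quals pushed into the subquery and quals kept above it. It applies three tests per clause, and then it decides whether to pass `tuple_fraction` down. The sketch below models both decisions. It assumes the per-clause results are already computed as flags, which stand in for `pseudoconstant`, `contain_leaky_functions()` and `qual_is_pushdown_safe()`. The `Toy*`/`toy_*` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical per-clause facts that the real code computes on the fly. */
typedef struct ToyQual {
    bool pseudoconstant;    /* RestrictInfo::pseudoconstant */
    bool leaky;             /* contain_leaky_functions(clause) */
    bool pushdown_safe;     /* qual_is_pushdown_safe(...) */
} ToyQual;

/* The per-clause test applied inside the foreach loop above. */
static bool toy_should_push(const ToyQual *q, bool security_barrier)
{
    return !q->pseudoconstant &&
        (!security_barrier || !q->leaky) &&
        q->pushdown_safe;
}

/*
 * Split quals into pushed[] and kept[] (indexes into quals); return how
 * many were pushed.  Kept quals become qpquals of the SubqueryScan.
 */
static int toy_partition_quals(const ToyQual *quals, int n,
                               bool security_barrier,
                               int *pushed, int *kept, int *nkept)
{
    int npushed = 0;

    *nkept = 0;
    for (int i = 0; i < n; i++)
    {
        if (toy_should_push(&quals[i], security_barrier))
            pushed[npushed++] = i;
        else
            kept[(*nkept)++] = i;
    }
    assert(npushed + *nkept == n);
    return npushed;
}

/*
 * outer_needs_all_rows stands for hasAggs, GROUP BY, HAVING, DISTINCT,
 * ORDER BY or multiple baserels at the outer level; 0.0 = full retrieval.
 */
static double toy_subquery_tuple_fraction(bool outer_needs_all_rows,
                                          double outer_fraction)
{
    return outer_needs_all_rows ? 0.0 : outer_fraction;
}
```

A leaky qual is pushed down only when the subquery is not a security barrier. A pseudoconstant qual is never pushed down, which leaves its gating node above the subquery.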

static void set_values_pathlist(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 1213 of file allpaths.c.

References add_path(), create_valuesscan_path(), RelOptInfo::lateral_relids, and set_cheapest().

Referenced by set_rel_pathlist().

{

Relids required_outer;

/*

* We don't support pushing join clauses into the quals of a values scan,

* but it could still have required parameterization due to LATERAL refs

* in the values expressions.

*/

required_outer = rel->lateral_relids;

/* Generate appropriate path */

add_path(rel, create_valuesscan_path(root, rel, required_outer));

/* Select cheapest path (pretty easy in this case...) */

set_cheapest(rel);

}

static void set_worktable_pathlist(PlannerInfo *root, RelOptInfo *rel, RangeTblEntry *rte) [static]

Definition at line 1309 of file allpaths.c.

References add_path(), create_worktablescan_path(), RangeTblEntry::ctelevelsup, RangeTblEntry::ctename, elog, ERROR, RelOptInfo::lateral_relids, PlannerInfo::non_recursive_plan, PlannerInfo::parent_root, set_cheapest(), and set_cte_size_estimates().

Referenced by set_rel_size().

{

Plan *cteplan;

PlannerInfo *cteroot;

Index levelsup;

Relids required_outer;

/*

* We need to find the non-recursive term's plan, which is in the plan

* level that's processing the recursive UNION, which is one level *below*

* where the CTE comes from.

*/

levelsup = rte->ctelevelsup;

if (levelsup == 0) /* shouldn't happen */

elog(ERROR, "bad levelsup for CTE \"%s\"", rte->ctename);

levelsup--;

cteroot = root;

while (levelsup-- > 0)

{

cteroot = cteroot->parent_root;

if (!cteroot) /* shouldn't happen */

elog(ERROR, "bad levelsup for CTE \"%s\"", rte->ctename);

}

cteplan = cteroot->non_recursive_plan;

if (!cteplan) /* shouldn't happen */

elog(ERROR, "could not find plan for CTE \"%s\"", rte->ctename);

/* Mark rel with estimated output rows, width, etc */

set_cte_size_estimates(root, rel, cteplan);

/*

* We don't support pushing join clauses into the quals of a worktable

* scan, but it could still have required parameterization due to LATERAL

* refs in its tlist. (That can only happen if the worktable scan is on a

* relation pulled up out of a UNION ALL appendrel. I'm not sure this is

* actually possible given the restrictions on recursive references, but

* it's easy enough to support.)

*/

required_outer = rel->lateral_relids;

/* Generate appropriate path */

add_path(rel, create_worktablescan_path(root, rel, required_outer));

/* Select cheapest path (pretty easy in this case...) */

set_cheapest(rel);

}
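`ctelevelsup` counts the query levels up to where the CTE is defined. The non-recursive term's plan lives one level below that, so `set_worktable_pathlist` follows `levelsup - 1` parent links. The sketch below shows that walk, assuming a hypothetical `ToyRoot` with only a parent pointer and a plan label. It returns `NULL` in the places where the real code raises `elog(ERROR)`.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for PlannerInfo: parent link plus a plan label. */
typedef struct ToyRoot {
    struct ToyRoot *parent_root;
    const char     *non_recursive_plan;   /* NULL if none at this level */
} ToyRoot;

/*
 * Find the non-recursive term's plan, one level below where the CTE is
 * defined, i.e. after (levelsup - 1) parent hops from root.
 */
static const char *
toy_find_worktable_plan(const ToyRoot *root, unsigned int levelsup)
{
    const ToyRoot *cteroot = root;

    if (levelsup == 0)
        return NULL;            /* "bad levelsup" */
    levelsup--;
    while (levelsup-- > 0)
    {
        cteroot = cteroot->parent_root;
        if (cteroot == NULL)
            return NULL;        /* "bad levelsup" */
    }
    return cteroot->non_recursive_plan;
}
```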

RelOptInfo *standard_join_search(PlannerInfo *root, int levels_needed, List *initial_rels)

Definition at line 1469 of file allpaths.c.

References Assert, elog, ERROR, PlannerInfo::join_rel_level, join_search_one_level(), lfirst, linitial, list_length(), NIL, NULL, palloc0(), and set_cheapest().

Referenced by make_rel_from_joinlist().

{

int lev;

RelOptInfo *rel;

/*

* This function cannot be invoked recursively within any one planning

* problem, so join_rel_level[] can't be in use already.

*/

Assert(root->join_rel_level == NULL);

/*

* We employ a simple "dynamic programming" algorithm: we first find all

* ways to build joins of two jointree items, then all ways to build joins

* of three items (from two-item joins and single items), then four-item

* joins, and so on until we have considered all ways to join all the

* items into one rel.

*

* root->join_rel_level[j] is a list of all the j-item rels. Initially we

* set root->join_rel_level[1] to represent all the single-jointree-item

* relations.

*/

root->join_rel_level = (List **) palloc0((levels_needed + 1) * sizeof(List *));

root->join_rel_level[1] = initial_rels;

for (lev = 2; lev <= levels_needed; lev++)

{

ListCell *lc;

/*

* Determine all possible pairs of relations to be joined at this

* level, and build paths for making each one from every available

* pair of lower-level relations.

*/

join_search_one_level(root, lev);

/*

* Do cleanup work on each just-processed rel.

*/

foreach(lc, root->join_rel_level[lev])

{

rel = (RelOptInfo *) lfirst(lc);

/* Find and save the cheapest paths for this rel */

set_cheapest(rel);

#ifdef OPTIMIZER_DEBUG

debug_print_rel(root, rel);

#endif

}

}

/*

* We should have a single rel at the final level.

*/

if (root->join_rel_level[levels_needed] == NIL)

elog(ERROR, "failed to build any %d-way joins", levels_needed);

Assert(list_length(root->join_rel_level[levels_needed]) == 1);

rel = (RelOptInfo *) linitial(root->join_rel_level[levels_needed]);

root->join_rel_level = NULL;

return rel;

}
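The level-by-level dynamic programming in `standard_join_search` can be sketched with relations represented as bitmasks of the jointree items they cover. Level `lev` is built by combining a `k`-item rel with a `(lev-k)`-item rel, and duplicates are merged the way an existing join rel would be reused. This sketch omits everything `join_search_one_level` actually decides. It considers every disjoint pair, regardless of join clauses or join-order restrictions, and it builds no paths and computes no costs. The `Toy*`/`toy_*` names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_MAX_ITEMS 6
#define TOY_MAX_RELS  64    /* more than enough for 6 items */

/* Hypothetical: a join rel is the set of base items it covers. */
typedef uint32_t ToySet;

static int    toy_level_len[TOY_MAX_ITEMS + 1];
static ToySet toy_level[TOY_MAX_ITEMS + 1][TOY_MAX_RELS];

/* Add set s to level lev unless an identical rel is already there. */
static void toy_add_unique(int lev, ToySet s)
{
    for (int i = 0; i < toy_level_len[lev]; i++)
        if (toy_level[lev][i] == s)
            return;
    assert(toy_level_len[lev] < TOY_MAX_RELS);
    toy_level[lev][toy_level_len[lev]++] = s;
}

/* Build levels 1..n and return the single rel at level n. */
static ToySet toy_join_search(int n)
{
    assert(n >= 1 && n <= TOY_MAX_ITEMS);
    for (int lev = 0; lev <= n; lev++)
        toy_level_len[lev] = 0;
    for (int i = 0; i < n; i++)
        toy_add_unique(1, (ToySet) 1 << i);      /* level 1: base items */

    for (int lev = 2; lev <= n; lev++)
        /* combine a k-item rel with a (lev-k)-item rel, k <= lev-k */
        for (int k = 1; k <= lev / 2; k++)
            for (int a = 0; a < toy_level_len[k]; a++)
                for (int b = 0; b < toy_level_len[lev - k]; b++)
                {
                    ToySet l = toy_level[k][a];
                    ToySet r = toy_level[lev - k][b];

                    if ((l & r) == 0)   /* only disjoint inputs can join */
                        toy_add_unique(lev, l | r);
                }

    assert(toy_level_len[n] == 1);      /* one rel covering all items */
    return toy_level[n][0];
}
```

For four items the levels hold 4, 6, 4 and 1 rels. Without any clause-based pruning, the number of candidate rels grows quickly with `levels_needed`.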

static bool subquery_is_pushdown_safe(Query *subquery, Query *topquery, bool *differentTypes) [static]

Definition at line 1567 of file allpaths.c.

References Assert, SetOperationStmt::colTypes, compare_tlist_datatypes(), Query::hasWindowFuncs, IsA, Query::limitCount, Query::limitOffset, NULL, recurse_pushdown_safe(), Query::setOperations, and Query::targetList.

Referenced by recurse_pushdown_safe(), and set_subquery_pathlist().

{

SetOperationStmt *topop;

/* Check point 1 */

if (subquery->limitOffset != NULL || subquery->limitCount != NULL)

return false;

/* Check point 2 */

if (subquery->hasWindowFuncs)

return false;

/* Are we at top level, or looking at a setop component? */

if (subquery == topquery)

{

/* Top level, so check any component queries */

if (subquery->setOperations != NULL)

if (!recurse_pushdown_safe(subquery->setOperations, topquery,

differentTypes))

return false;

}

else

{

/* Setop component must not have more components (too weird) */

if (subquery->setOperations != NULL)

return false;

/* Check whether setop component output types match top level */

topop = (SetOperationStmt *) topquery->setOperations;

Assert(topop && IsA(topop, SetOperationStmt));

compare_tlist_datatypes(subquery->targetList,

topop->colTypes,

differentTypes);

}

return true;

}
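For a setop component, `subquery_is_pushdown_safe` calls `compare_tlist_datatypes` to flag output columns whose types differ from the set operation's column types. The flags are indexed by the 1-based `resno`, which is why `set_subquery_pathlist` allocates `list_length(targetList) + 1` entries. The sketch below reproduces that comparison with plain arrays. Type OIDs are plain integers here (e.g. 23 for `int4`, 25 for `text`, 1043 for `varchar`). The `Toy*`/`toy_*` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical target-list entry; resno is 1-based, as in TargetEntry. */
typedef struct ToyTle {
    int          resno;
    unsigned int type_oid;
    bool         resjunk;
} ToyTle;

/*
 * Like compare_tlist_datatypes(): walk the non-junk entries in step with
 * the setop's column types and flag resnos whose types differ.  Returns
 * false on a length mismatch, where the real code raises elog(ERROR).
 * differentTypes must be large enough to index the largest resno.
 */
static bool toy_compare_types(const ToyTle *tlist, int ntle,
                              const unsigned int *colTypes, int ncols,
                              bool *differentTypes)
{
    int col = 0;

    for (int i = 0; i < ntle; i++)
    {
        if (tlist[i].resjunk)
            continue;           /* ignore resjunk columns */
        if (col >= ncols)
            return false;       /* "wrong number of tlist entries" */
        if (tlist[i].type_oid != colTypes[col])
            differentTypes[tlist[i].resno] = true;
        col++;
    }
    return col == ncols;
}
```

`set_subquery_pathlist` passes the filled-in array to `qual_is_pushdown_safe()`, which can then treat quals on coerced columns with care.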

static void subquery_push_qual(Query *subquery, RangeTblEntry *rte, Index rti, Node *qual) [static]

Definition at line 1818 of file allpaths.c.

References Query::groupClause, Query::hasAggs, Query::hasSubLinks, Query::havingQual, Query::jointree, make_and_qual(), NULL, FromExpr::quals, recurse_push_qual(), REPLACEVARS_REPORT_ERROR, ReplaceVarsFromTargetList(), Query::setOperations, and Query::targetList.

Referenced by recurse_push_qual(), and set_subquery_pathlist().

{

if (subquery->setOperations != NULL)

{

/* Recurse to push it separately to each component query */

recurse_push_qual(subquery->setOperations, subquery,

rte, rti, qual);

}

else

{

/*

* We need to replace Vars in the qual (which must refer to outputs of

* the subquery) with copies of the subquery's targetlist expressions.

* Note that at this point, any uplevel Vars in the qual should have

* been replaced with Params, so they need no work.

*

* This step also ensures that when we are pushing into a setop tree,

* each component query gets its own copy of the qual.

*/

qual = ReplaceVarsFromTargetList(qual, rti, 0, rte,

subquery->targetList,

REPLACEVARS_REPORT_ERROR, 0,

&subquery->hasSubLinks);

/*

* Now attach the qual to the proper place: normally WHERE, but if the

* subquery uses grouping or aggregation, put it in HAVING (since the

* qual really refers to the group-result rows).

*/

if (subquery->hasAggs || subquery->groupClause || subquery->havingQual)

subquery->havingQual = make_and_qual(subquery->havingQual, qual);

else

subquery->jointree->quals =

make_and_qual(subquery->jointree->quals, qual);

/*

* We need not change the subquery's hasAggs or hasSubLinks flags,

* since we can't be pushing down any aggregates that weren't there

* before, and we don't push down subselects at all.

*/

}

}
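After `ReplaceVarsFromTargetList`, `subquery_push_qual` attaches the qual to HAVING if the subquery aggregates, groups or already has a HAVING clause. Otherwise it attaches the qual to WHERE, AND-ing it with any existing qual via `make_and_qual`. The sketch below mimics that placement. It uses strings in place of expression trees and assumes `make_and_qual` returns the other operand unchanged when one side is empty. The `Toy*`/`toy_*` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

#define TOY_QUAL_LEN 128

/* Hypothetical subquery: quals are strings instead of Node trees. */
typedef struct ToySubquery {
    bool hasAggs;
    bool hasGroupClause;
    char where[TOY_QUAL_LEN];    /* "" means no qual */
    char having[TOY_QUAL_LEN];
} ToySubquery;

/* Like make_and_qual(): an empty side yields the other side unchanged. */
static void toy_and_into(char *dst, const char *qual)
{
    char tmp[TOY_QUAL_LEN];

    if (dst[0] == '\0')
        snprintf(dst, TOY_QUAL_LEN, "%s", qual);
    else
    {
        snprintf(tmp, sizeof(tmp), "(%s) AND (%s)", dst, qual);
        memcpy(dst, tmp, sizeof(tmp));
    }
}

/* Grouped or aggregated subqueries get the qual in HAVING, others WHERE. */
static void toy_push_qual(ToySubquery *sq, const char *qual)
{
    if (sq->hasAggs || sq->hasGroupClause || sq->having[0] != '\0')
        toy_and_into(sq->having, qual);
    else
        toy_and_into(sq->where, qual);
}
```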

bool enable_geqo = false

Definition at line 43 of file allpaths.c.

Referenced by make_rel_from_joinlist().

int geqo_threshold

Definition at line 44 of file allpaths.c.

Referenced by make_rel_from_joinlist().

Definition at line 47 of file allpaths.c.

Referenced by make_rel_from_joinlist().
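`enable_geqo` and `geqo_threshold` are read by `make_rel_from_joinlist()`, which calls `standard_join_search()` as one of its options. The sketch below shows one plausible way the two settings could gate the choice. It assumes a simple rule: use the genetic optimizer only when it is enabled and the join problem has at least `geqo_threshold` items, and otherwise fall back to the exhaustive dynamic-programming search. It ignores any other hook, and the `Toy*`/`toy_*` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical labels for the two join-search strategies. */
typedef enum { TOY_STANDARD_SEARCH, TOY_GEQO_SEARCH } ToyJoinSearch;

/*
 * Assumed rule: GEQO only when enabled and levels_needed reaches the
 * threshold; otherwise the dynamic-programming standard_join_search.
 */
static ToyJoinSearch toy_choose_join_search(bool enable_geqo,
                                            int geqo_threshold,
                                            int levels_needed)
{
    assert(levels_needed >= 1);
    if (enable_geqo && levels_needed >= geqo_threshold)
        return TOY_GEQO_SEARCH;
    return TOY_STANDARD_SEARCH;
}
```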

1.7.1
