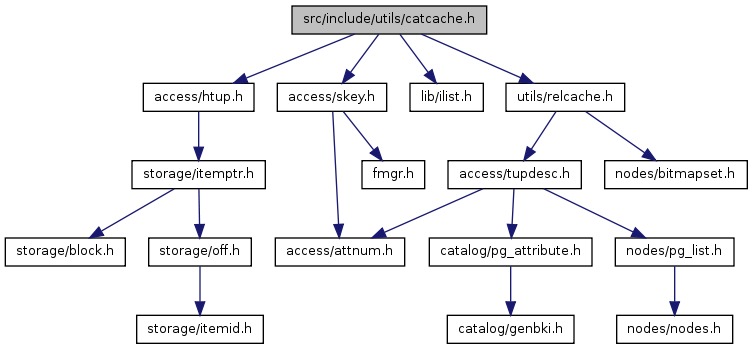

    #include "access/htup.h"
    #include "access/skey.h"
    #include "lib/ilist.h"
    #include "utils/relcache.h"

| #define CATCACHE_MAXKEYS 4 |

Definition at line 35 of file catcache.h.

| #define CL_MAGIC 0x52765103 |

Definition at line 118 of file catcache.h.

Referenced by AtEOXact_CatCache(), and ReleaseCatCacheList().

| #define CT_MAGIC 0x57261502 |

Definition at line 76 of file catcache.h.

Referenced by AtEOXact_CatCache(), PrintCatCacheLeakWarning(), and ReleaseCatCache().

| typedef struct catcacheheader CatCacheHeader |

| void AtEOXact_CatCache | ( | bool | isCommit | ) |

Definition at line 552 of file catcache.c.

References Assert, assert_enabled, catcache::cc_bucket, catcache::cc_lists, catcache::cc_nbuckets, catcacheheader::ch_caches, CL_MAGIC, catclist::cl_magic, CT_MAGIC, catctup::ct_magic, dlist_iter::cur, slist_iter::cur, catctup::dead, catclist::dead, dlist_container, dlist_foreach, i, catctup::refcount, catclist::refcount, slist_container, and slist_foreach.

Referenced by AbortTransaction(), CommitTransaction(), and PrepareTransaction().

    {
    #ifdef USE_ASSERT_CHECKING
        if (assert_enabled)
        {
            slist_iter cache_iter;

            slist_foreach(cache_iter, &CacheHdr->ch_caches)
            {
                CatCache *ccp = slist_container(CatCache, cc_next, cache_iter.cur);
                dlist_iter iter;
                int i;

                /* Check CatCLists */
                dlist_foreach(iter, &ccp->cc_lists)
                {
                    CatCList *cl = dlist_container(CatCList, cache_elem, iter.cur);

                    Assert(cl->cl_magic == CL_MAGIC);
                    Assert(cl->refcount == 0);
                    Assert(!cl->dead);
                }

                /* Check individual tuples */
                for (i = 0; i < ccp->cc_nbuckets; i++)
                {
                    dlist_head *bucket = &ccp->cc_bucket[i];

                    dlist_foreach(iter, bucket)
                    {
                        CatCTup *ct = dlist_container(CatCTup, cache_elem, iter.cur);

                        Assert(ct->ct_magic == CT_MAGIC);
                        Assert(ct->refcount == 0);
                        Assert(!ct->dead);
                    }
                }
            }
        }
    #endif
    }
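The loops above walk intrusive lists: the list node is embedded in each cache structure, and `slist_container` / `dlist_container` recover the enclosing structure from a node pointer. The following is a minimal standalone sketch of that idea, not the code from `lib/ilist.h`. The names `ToyNode`, `ToyCache`, `toy_container`, and `toy_count_leaks` are hypothetical and exist only for this illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical list node that gets embedded inside the owning structure. */
typedef struct ToyNode
{
    struct ToyNode *next;
} ToyNode;

/* Recover a pointer to the enclosing structure from a pointer to its node. */
#define toy_container(type, member, ptr) \
    ((type *) ((char *) (ptr) - offsetof(type, member)))

/* Hypothetical cache structure; only the fields needed for the sketch. */
typedef struct ToyCache
{
    int     id;
    int     refcount;
    ToyNode link;       /* embedded node, analogous to cc_next */
} ToyCache;

/* Count entries that still hold references, as an end-of-transaction check might. */
static int
toy_count_leaks(ToyNode *head)
{
    int      leaks = 0;
    ToyNode *cur;

    for (cur = head; cur != NULL; cur = cur->next)
    {
        ToyCache *c = toy_container(ToyCache, link, cur);

        assert(c != NULL);
        if (c->refcount != 0)
            leaks++;
    }
    return leaks;
}
```

Because the node lives inside the entry, walking the list needs no separate allocation per element, which is presumably why the catcache code can iterate over every cache and bucket cheaply in an assertion-only check.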

| void CatalogCacheFlushCatalog | ( | Oid | catId | ) |

Definition at line 680 of file catcache.c.

References CACHE1_elog, CACHE2_elog, CallSyscacheCallbacks(), catcache::cc_reloid, catcacheheader::ch_caches, slist_iter::cur, DEBUG2, catcache::id, ResetCatalogCache(), slist_container, and slist_foreach.

Referenced by LocalExecuteInvalidationMessage().

    {
        slist_iter iter;

        CACHE2_elog(DEBUG2, "CatalogCacheFlushCatalog called for %u", catId);

        slist_foreach(iter, &CacheHdr->ch_caches)
        {
            CatCache *cache = slist_container(CatCache, cc_next, iter.cur);

            /* Does this cache store tuples of the target catalog? */
            if (cache->cc_reloid == catId)
            {
                /* Yes, so flush all its contents */
                ResetCatalogCache(cache);

                /* Tell inval.c to call syscache callbacks for this cache */
                CallSyscacheCallbacks(cache->id, 0);
            }
        }

        CACHE1_elog(DEBUG2, "end of CatalogCacheFlushCatalog call");
    }

| void CatalogCacheIdInvalidate | ( | int | cacheId, | |

| uint32 | hashValue | |||

| ) |

Definition at line 445 of file catcache.c.

References Assert, catctup::c_list, CACHE1_elog, CatCacheRemoveCList(), CatCacheRemoveCTup(), catcache::cc_bucket, catcache::cc_lists, catcache::cc_nbuckets, catcacheheader::ch_caches, dlist_mutable_iter::cur, slist_iter::cur, catctup::dead, catclist::dead, DEBUG2, dlist_container, dlist_foreach_modify, HASH_INDEX, catctup::hash_value, catcache::id, NULL, catctup::refcount, catclist::refcount, slist_container, and slist_foreach.

Referenced by LocalExecuteInvalidationMessage().

    {
        slist_iter cache_iter;

        CACHE1_elog(DEBUG2, "CatalogCacheIdInvalidate: called");

        /*
         * inspect caches to find the proper cache
         */
        slist_foreach(cache_iter, &CacheHdr->ch_caches)
        {
            CatCache *ccp = slist_container(CatCache, cc_next, cache_iter.cur);
            Index hashIndex;
            dlist_mutable_iter iter;

            if (cacheId != ccp->id)
                continue;

            /*
             * We don't bother to check whether the cache has finished
             * initialization yet; if not, there will be no entries in it so no
             * problem.
             */

            /*
             * Invalidate *all* CatCLists in this cache; it's too hard to tell
             * which searches might still be correct, so just zap 'em all.
             */
            dlist_foreach_modify(iter, &ccp->cc_lists)
            {
                CatCList *cl = dlist_container(CatCList, cache_elem, iter.cur);

                if (cl->refcount > 0)
                    cl->dead = true;
                else
                    CatCacheRemoveCList(ccp, cl);
            }

            /*
             * inspect the proper hash bucket for tuple matches
             */
            hashIndex = HASH_INDEX(hashValue, ccp->cc_nbuckets);
            dlist_foreach_modify(iter, &ccp->cc_bucket[hashIndex])
            {
                CatCTup *ct = dlist_container(CatCTup, cache_elem, iter.cur);

                if (hashValue == ct->hash_value)
                {
                    if (ct->refcount > 0 ||
                        (ct->c_list && ct->c_list->refcount > 0))
                    {
                        ct->dead = true;
                        /* list, if any, was marked dead above */
                        Assert(ct->c_list == NULL || ct->c_list->dead);
                    }
                    else
                        CatCacheRemoveCTup(ccp, ct);
                    CACHE1_elog(DEBUG2, "CatalogCacheIdInvalidate: invalidated");
    #ifdef CATCACHE_STATS
                    ccp->cc_invals++;
    #endif
                    /* could be multiple matches, so keep looking! */
                }
            }
            break; /* need only search this one cache */
        }
    }
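The invalidation logic above never frees an entry that is still referenced: it sets `dead` and leaves the removal to whoever drops the last reference. A minimal standalone sketch of that deferred-removal pattern follows. `ToyEntry`, `toy_invalidate`, `toy_release`, and the `in_cache` flag are hypothetical stand-ins, and "removal" here only flips a flag rather than unlinking and freeing memory.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical cache entry; in_cache stands in for "still linked into a bucket". */
typedef struct ToyEntry
{
    int  refcount;
    bool dead;
    bool in_cache;
} ToyEntry;

/* Stand-in for the real removal routine: only legal with no references left. */
static void
toy_remove(ToyEntry *e)
{
    assert(e->refcount == 0);
    e->in_cache = false;
}

/* Invalidate: remove now if unreferenced, otherwise just mark the entry dead. */
static void
toy_invalidate(ToyEntry *e)
{
    if (e->refcount > 0)
        e->dead = true;
    else
        toy_remove(e);
}

/* Release one reference; the last release of a dead entry performs the removal. */
static void
toy_release(ToyEntry *e)
{
    assert(e->refcount > 0);
    e->refcount--;
    if (e->dead && e->refcount == 0)
        toy_remove(e);
}
```

A pinned entry therefore survives invalidation until its holder releases it, while lookups can skip it in the meantime by checking the `dead` flag.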

| void CreateCacheMemoryContext | ( | void | ) |

Definition at line 527 of file catcache.c.

References ALLOCSET_DEFAULT_INITSIZE, ALLOCSET_DEFAULT_MAXSIZE, ALLOCSET_DEFAULT_MINSIZE, AllocSetContextCreate(), CacheMemoryContext, and TopMemoryContext.

Referenced by assign_record_type_typmod(), BuildEventTriggerCache(), init_ts_config_cache(), InitCatCache(), InitializeAttoptCache(), InitializeTableSpaceCache(), lookup_ts_dictionary_cache(), lookup_ts_parser_cache(), lookup_type_cache(), LookupOpclassInfo(), RelationBuildLocalRelation(), and RelationCacheInitialize().

    {
        /*
         * Purely for paranoia, check that context doesn't exist; caller probably
         * did so already.
         */
        if (!CacheMemoryContext)
            CacheMemoryContext = AllocSetContextCreate(TopMemoryContext,
                                                       "CacheMemoryContext",
                                                       ALLOCSET_DEFAULT_MINSIZE,
                                                       ALLOCSET_DEFAULT_INITSIZE,
                                                       ALLOCSET_DEFAULT_MAXSIZE);
    }

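| uint32 GetCatCacheHashValue | ( | CatCache * | cache, | |

| Datum | v1, | |||

| Datum | v2, | |||

| Datum | v3, | |||

| Datum | v4 | |||

| ) |

Definition at line 1297 of file catcache.c.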

References CatalogCacheComputeHashValue(), CatalogCacheInitializeCache(), catcache::cc_nkeys, catcache::cc_skey, catcache::cc_tupdesc, NULL, and ScanKeyData::sk_argument.

Referenced by GetSysCacheHashValue().

    {
        ScanKeyData cur_skey[CATCACHE_MAXKEYS];

        /*
         * one-time startup overhead for each cache
         */
        if (cache->cc_tupdesc == NULL)
            CatalogCacheInitializeCache(cache);

        /*
         * initialize the search key information
         */
        memcpy(cur_skey, cache->cc_skey, sizeof(cur_skey));
        cur_skey[0].sk_argument = v1;
        cur_skey[1].sk_argument = v2;
        cur_skey[2].sk_argument = v3;
        cur_skey[3].sk_argument = v4;

        /*
         * calculate the hash value
         */
        return CatalogCacheComputeHashValue(cache, cache->cc_nkeys, cur_skey);
    }
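The function above copies the cache's scan-key template into a local array, overwrites only the argument slots, and hashes the first `cc_nkeys` keys. The sketch below illustrates that copy-then-fill pattern in isolation. `ToyScanKey`, `ToyCache`, and `toy_hash_keys` are hypothetical, and the multiply-by-31 mixing is an arbitrary placeholder rather than the hash PostgreSQL uses.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define TOY_MAXKEYS 4

/* Hypothetical scan key: a fixed attribute number plus a per-search argument. */
typedef struct ToyScanKey
{
    int       attno;
    uintptr_t argument;
} ToyScanKey;

/* Hypothetical cache holding a reusable key template. */
typedef struct ToyCache
{
    int        nkeys;
    ToyScanKey skey[TOY_MAXKEYS];
} ToyCache;

/* Copy the template, fill in all four arguments, hash only the keys in use. */
static uint32_t
toy_hash_keys(const ToyCache *cache,
              uintptr_t v1, uintptr_t v2, uintptr_t v3, uintptr_t v4)
{
    ToyScanKey cur[TOY_MAXKEYS];
    uint32_t   h = 0;
    int        i;

    assert(cache->nkeys > 0 && cache->nkeys <= TOY_MAXKEYS);

    /* The template itself is never modified, so it can be reused per search. */
    memcpy(cur, cache->skey, sizeof(cur));
    cur[0].argument = v1;
    cur[1].argument = v2;
    cur[2].argument = v3;
    cur[3].argument = v4;

    /* Placeholder mixing step; unused trailing keys do not contribute. */
    for (i = 0; i < cache->nkeys; i++)
        h = h * 31u + (uint32_t) cur[i].argument;
    return h;
}
```

Filling all four slots unconditionally keeps the caller interface uniform; the key count decides how many of them actually matter.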

| CatCache* InitCatCache | ( | int | id, | |

| Oid | reloid, | |||

| Oid | indexoid, | |||

| int | nkeys, | |||

| const int * | key, | |||

| int | nbuckets | |||

| ) |

Definition at line 724 of file catcache.c.

References Assert, CacheMemoryContext, catcache::cc_indexoid, catcache::cc_key, catcache::cc_nbuckets, catcache::cc_next, catcache::cc_nkeys, catcache::cc_ntup, catcache::cc_relisshared, catcache::cc_relname, catcache::cc_reloid, catcache::cc_tupdesc, catcacheheader::ch_caches, catcacheheader::ch_ntup, CreateCacheMemoryContext(), i, catcache::id, InitCatCache_DEBUG2, MemoryContextSwitchTo(), NULL, on_proc_exit(), palloc(), palloc0(), slist_init(), and slist_push_head().

Referenced by InitCatalogCache().

    {
        CatCache *cp;
        MemoryContext oldcxt;
        int i;

        /*
         * nbuckets is the number of hash buckets to use in this catcache.
         * Currently we just use a hard-wired estimate of an appropriate size for
         * each cache; maybe later make them dynamically resizable?
         *
         * nbuckets must be a power of two. We check this via Assert rather than
         * a full runtime check because the values will be coming from constant
         * tables.
         *
         * If you're confused by the power-of-two check, see comments in
         * bitmapset.c for an explanation.
         */
        Assert(nbuckets > 0 && (nbuckets & -nbuckets) == nbuckets);

        /*
         * first switch to the cache context so our allocations do not vanish at
         * the end of a transaction
         */
        if (!CacheMemoryContext)
            CreateCacheMemoryContext();

        oldcxt = MemoryContextSwitchTo(CacheMemoryContext);

        /*
         * if first time through, initialize the cache group header
         */
        if (CacheHdr == NULL)
        {
            CacheHdr = (CatCacheHeader *) palloc(sizeof(CatCacheHeader));
            slist_init(&CacheHdr->ch_caches);
            CacheHdr->ch_ntup = 0;
    #ifdef CATCACHE_STATS
            /* set up to dump stats at backend exit */
            on_proc_exit(CatCachePrintStats, 0);
    #endif
        }

        /*
         * allocate a new cache structure
         *
         * Note: we rely on zeroing to initialize all the dlist headers correctly
         */
        cp = (CatCache *) palloc0(sizeof(CatCache) + nbuckets * sizeof(dlist_head));

        /*
         * initialize the cache's relation information for the relation
         * corresponding to this cache, and initialize some of the new cache's
         * other internal fields. But don't open the relation yet.
         */
        cp->id = id;
        cp->cc_relname = "(not known yet)";
        cp->cc_reloid = reloid;
        cp->cc_indexoid = indexoid;
        cp->cc_relisshared = false; /* temporary */
        cp->cc_tupdesc = (TupleDesc) NULL;
        cp->cc_ntup = 0;
        cp->cc_nbuckets = nbuckets;
        cp->cc_nkeys = nkeys;
        for (i = 0; i < nkeys; ++i)
            cp->cc_key[i] = key[i];

        /*
         * new cache is initialized as far as we can go for now. print some
         * debugging information, if appropriate.
         */
        InitCatCache_DEBUG2;

        /*
         * add completed cache to top of group header's list
         */
        slist_push_head(&CacheHdr->ch_caches, &cp->cc_next);

        /*
         * back to the old context before we return...
         */
        MemoryContextSwitchTo(oldcxt);

        return cp;
    }
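The assertion `(nbuckets & -nbuckets) == nbuckets` relies on two's-complement arithmetic: `n & -n` isolates the lowest set bit of `n`, so the result equals `n` only when `n` has exactly one bit set. A power-of-two bucket count in turn lets a hash value be reduced to a bucket index with a mask instead of a modulo. The sketch below shows both steps. `is_power_of_two` and `bucket_index` are hypothetical helpers, and the masking is an assumption about how a macro such as `HASH_INDEX` could work, since its definition is not shown on this page.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* True when n is a positive power of two: n & -n keeps only the lowest set bit. */
static bool
is_power_of_two(int n)
{
    return n > 0 && (n & -n) == n;
}

/*
 * Hypothetical stand-in for a HASH_INDEX-style macro: with a power-of-two
 * bucket count, masking with (nbuckets - 1) equals hash % nbuckets.
 */
static uint32_t
bucket_index(uint32_t hash, int nbuckets)
{
    assert(is_power_of_two(nbuckets));
    return hash & (uint32_t) (nbuckets - 1);
}
```

For example, `bucket_index(300, 128)` yields 44, the same as `300 % 128`, while a bucket count such as 96 would fail the check because `96 & -96` is 32.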

| void InitCatCachePhase2 | ( | CatCache * | cache, | |

| bool | touch_index | |||

| ) |

Definition at line 949 of file catcache.c.

References AccessShareLock, AMNAME, AMOID, CatalogCacheInitializeCache(), catcache::cc_indexoid, catcache::cc_reloid, catcache::cc_tupdesc, catcache::id, index_close(), index_open(), LockRelationOid(), NULL, and UnlockRelationOid().

Referenced by InitCatalogCachePhase2(), and SysCacheGetAttr().

    {
        if (cache->cc_tupdesc == NULL)
            CatalogCacheInitializeCache(cache);

        if (touch_index &&
            cache->id != AMOID &&
            cache->id != AMNAME)
        {
            Relation idesc;

            /*
             * We must lock the underlying catalog before opening the index to
             * avoid deadlock, since index_open could possibly result in reading
             * this same catalog, and if anyone else is exclusive-locking this
             * catalog and index they'll be doing it in that order.
             */
            LockRelationOid(cache->cc_reloid, AccessShareLock);
            idesc = index_open(cache->cc_indexoid, AccessShareLock);
            index_close(idesc, AccessShareLock);
            UnlockRelationOid(cache->cc_reloid, AccessShareLock);
        }
    }

| void PrepareToInvalidateCacheTuple | ( | Relation | relation, | |

| HeapTuple | tuple, | |||

| HeapTuple | newtuple, | |||

| void(*)(int, uint32, Oid) | function | |||

| ) |

Definition at line 1787 of file catcache.c.

References Assert, CACHE1_elog, CatalogCacheComputeTupleHashValue(), CatalogCacheInitializeCache(), catcache::cc_relisshared, catcache::cc_reloid, catcache::cc_tupdesc, catcacheheader::ch_caches, slist_iter::cur, DEBUG2, function, HeapTupleIsValid, catcache::id, MyDatabaseId, NULL, PointerIsValid, RelationGetRelid, RelationIsValid, slist_container, and slist_foreach.

Referenced by CacheInvalidateHeapTuple().

    {
        slist_iter iter;
        Oid reloid;

        CACHE1_elog(DEBUG2, "PrepareToInvalidateCacheTuple: called");

        /*
         * sanity checks
         */
        Assert(RelationIsValid(relation));
        Assert(HeapTupleIsValid(tuple));
        Assert(PointerIsValid(function));
        Assert(CacheHdr != NULL);

        reloid = RelationGetRelid(relation);

        /* ----------------
         *  for each cache
         *     if the cache contains tuples from the specified relation
         *         compute the tuple's hash value(s) in this cache,
         *         and call the passed function to register the information.
         * ----------------
         */

        slist_foreach(iter, &CacheHdr->ch_caches)
        {
            CatCache *ccp = slist_container(CatCache, cc_next, iter.cur);
            uint32 hashvalue;
            Oid dbid;

            if (ccp->cc_reloid != reloid)
                continue;

            /* Just in case cache hasn't finished initialization yet... */
            if (ccp->cc_tupdesc == NULL)
                CatalogCacheInitializeCache(ccp);

            hashvalue = CatalogCacheComputeTupleHashValue(ccp, tuple);
            dbid = ccp->cc_relisshared ? (Oid) 0 : MyDatabaseId;

            (*function) (ccp->id, hashvalue, dbid);

            if (newtuple)
            {
                uint32 newhashvalue;

                newhashvalue = CatalogCacheComputeTupleHashValue(ccp, newtuple);

                if (newhashvalue != hashvalue)
                    (*function) (ccp->id, newhashvalue, dbid);
            }
        }
    }
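The loop above reports the old tuple's hash value through the callback and, for an update, reports the new tuple's hash value only if it differs. A standalone sketch of that callback pattern follows. `ToyInvalFn`, `toy_record`, `toy_hash`, and `toy_prepare_invalidate` are hypothetical, integer keys stand in for heap tuples, and the multiplicative hash is a placeholder.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical callback type, modelled loosely on the function pointer above. */
typedef void (*ToyInvalFn) (int cache_id, uint32_t hash);

static int      toy_calls = 0;       /* number of registrations seen */
static uint32_t toy_last_hash = 0;   /* hash passed to the latest registration */

/* Example callback that just records what it was told. */
static void
toy_record(int cache_id, uint32_t hash)
{
    (void) cache_id;
    toy_calls++;
    toy_last_hash = hash;
}

/* Placeholder hash over an integer key; any deterministic function would do. */
static uint32_t
toy_hash(int key)
{
    return (uint32_t) key * 2654435761u;
}

/* Register the old key's hash, plus the new key's hash when it differs. */
static void
toy_prepare_invalidate(int cache_id, int old_key, const int *new_key,
                       ToyInvalFn fn)
{
    uint32_t old_hash = toy_hash(old_key);

    assert(fn != NULL);
    fn(cache_id, old_hash);

    if (new_key != NULL)
    {
        uint32_t new_hash = toy_hash(*new_key);

        if (new_hash != old_hash)
            fn(cache_id, new_hash);
    }
}
```

Skipping the second call when both hash values match avoids queuing a redundant invalidation for updates that leave the cache key unchanged.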

| void PrintCatCacheLeakWarning | ( | HeapTuple | tuple | ) |

Definition at line 1851 of file catcache.c.

References Assert, catcache::cc_relname, CT_MAGIC, catctup::ct_magic, elog, catcache::id, ItemPointerGetBlockNumber, ItemPointerGetOffsetNumber, catctup::my_cache, offsetof, catctup::refcount, and WARNING.

Referenced by ResourceOwnerReleaseInternal().

    {
        CatCTup *ct = (CatCTup *) (((char *) tuple) -
                                   offsetof(CatCTup, tuple));

        /* Safety check to ensure we were handed a cache entry */
        Assert(ct->ct_magic == CT_MAGIC);

        elog(WARNING, "cache reference leak: cache %s (%d), tuple %u/%u has count %d",
             ct->my_cache->cc_relname, ct->my_cache->id,
             ItemPointerGetBlockNumber(&(tuple->t_self)),
             ItemPointerGetOffsetNumber(&(tuple->t_self)),
             ct->refcount);
    }

| void PrintCatCacheListLeakWarning | ( | CatCList * | list | ) |

Definition at line 1867 of file catcache.c.

References catcache::cc_relname, elog, catcache::id, catclist::my_cache, catclist::refcount, and WARNING.

Referenced by ResourceOwnerReleaseInternal().

| void ReleaseCatCache | ( | HeapTuple | tuple | ) |

Definition at line 1265 of file catcache.c.

References Assert, catctup::c_list, CatCacheRemoveCTup(), CT_MAGIC, catctup::ct_magic, CurrentResourceOwner, catctup::dead, catctup::my_cache, NULL, offsetof, catclist::refcount, catctup::refcount, ResourceOwnerForgetCatCacheRef(), and catctup::tuple.

Referenced by ReleaseSysCache(), and ResourceOwnerReleaseInternal().

    {
        CatCTup *ct = (CatCTup *) (((char *) tuple) -
                                   offsetof(CatCTup, tuple));

        /* Safety checks to ensure we were handed a cache entry */
        Assert(ct->ct_magic == CT_MAGIC);
        Assert(ct->refcount > 0);

        ct->refcount--;
        ResourceOwnerForgetCatCacheRef(CurrentResourceOwner, &ct->tuple);

        if (
    #ifndef CATCACHE_FORCE_RELEASE
            ct->dead &&
    #endif
            ct->refcount == 0 &&
            (ct->c_list == NULL || ct->c_list->refcount == 0))
            CatCacheRemoveCTup(ct->my_cache, ct);
    }
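`ReleaseCatCache()` receives only the `HeapTuple` that was handed out earlier and steps back to the enclosing `CatCTup` by subtracting `offsetof(CatCTup, tuple)`; the magic number then guards against a pointer that did not come from the cache. A minimal standalone sketch of that technique follows. `ToyTuple`, `ToyEntry`, `TOY_MAGIC`, and `entry_from_tuple` are hypothetical names used only here.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical payload type; callers only ever see a pointer to this. */
typedef struct ToyTuple
{
    int payload;
} ToyTuple;

#define TOY_MAGIC 0x57261502

/* Hypothetical cache entry with bookkeeping stored ahead of the payload. */
typedef struct ToyEntry
{
    int      magic;     /* sanity marker, set to TOY_MAGIC */
    int      refcount;
    ToyTuple tuple;     /* embedded payload handed out to callers */
} ToyEntry;

/* Step back from the embedded payload to the entry that contains it. */
static ToyEntry *
entry_from_tuple(ToyTuple *tuple)
{
    ToyEntry *e = (ToyEntry *) (((char *) tuple) - offsetof(ToyEntry, tuple));

    /* Safety check: catches pointers that were not handed out by the cache. */
    assert(e->magic == TOY_MAGIC);
    return e;
}
```

This layout lets the public interface deal in plain tuple pointers while the cache keeps its reference count and flags alongside the data, at the cost of trusting callers to pass back only pointers obtained from the cache.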

| void ReleaseCatCacheList | ( | CatCList * | list | ) |

Definition at line 1613 of file catcache.c.

References Assert, CatCacheRemoveCList(), CL_MAGIC, catclist::cl_magic, CurrentResourceOwner, catclist::dead, catclist::my_cache, catclist::refcount, and ResourceOwnerForgetCatCacheListRef().

Referenced by AddEnumLabel(), ResourceOwnerReleaseInternal(), and sepgsql_relation_drop().

    {
        /* Safety checks to ensure we were handed a cache entry */
        Assert(list->cl_magic == CL_MAGIC);
        Assert(list->refcount > 0);
        list->refcount--;
        ResourceOwnerForgetCatCacheListRef(CurrentResourceOwner, list);

        if (
    #ifndef CATCACHE_FORCE_RELEASE
            list->dead &&
    #endif
            list->refcount == 0)
            CatCacheRemoveCList(list->my_cache, list);
    }

| void ResetCatalogCaches | ( | void | ) |

Definition at line 650 of file catcache.c.

References CACHE1_elog, catcacheheader::ch_caches, slist_iter::cur, DEBUG2, ResetCatalogCache(), slist_container, and slist_foreach.

Referenced by InvalidateSystemCaches().

    {
        slist_iter iter;

        CACHE1_elog(DEBUG2, "ResetCatalogCaches called");

        slist_foreach(iter, &CacheHdr->ch_caches)
        {
            CatCache *cache = slist_container(CatCache, cc_next, iter.cur);

            ResetCatalogCache(cache);
        }

        CACHE1_elog(DEBUG2, "end of ResetCatalogCaches call");
    }

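| HeapTuple SearchCatCache | ( | CatCache * | cache, | |

| Datum | v1, | |||

| Datum | v2, | |||

| Datum | v3, | |||

| Datum | v4 | |||

| ) |

Definition at line 1054 of file catcache.c.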

References AccessShareLock, build_dummy_tuple(), CACHE3_elog, CACHE4_elog, catctup::cache_elem, CatalogCacheComputeHashValue(), CatalogCacheCreateEntry(), CatalogCacheInitializeCache(), catcache::cc_bucket, catcache::cc_indexoid, catcache::cc_nbuckets, catcache::cc_nkeys, catcache::cc_ntup, catcache::cc_relname, catcache::cc_reloid, catcache::cc_skey, catcache::cc_tupdesc, catcacheheader::ch_ntup, dlist_iter::cur, CurrentResourceOwner, catctup::dead, DEBUG2, dlist_container, dlist_foreach, dlist_move_head(), HASH_INDEX, catctup::hash_value, heap_close, heap_freetuple(), heap_open(), HeapKeyTest, HeapTupleIsValid, IndexScanOK(), IsBootstrapProcessingMode, catctup::negative, NULL, catctup::refcount, ResourceOwnerEnlargeCatCacheRefs(), ResourceOwnerRememberCatCacheRef(), ScanKeyData::sk_argument, SnapshotNow, systable_beginscan(), systable_endscan(), systable_getnext(), and catctup::tuple.

Referenced by SearchSysCache().

    {
        ScanKeyData cur_skey[CATCACHE_MAXKEYS];
        uint32 hashValue;
        Index hashIndex;
        dlist_iter iter;
        dlist_head *bucket;
        CatCTup *ct;
        Relation relation;
        SysScanDesc scandesc;
        HeapTuple ntp;

        /*
         * one-time startup overhead for each cache
         */
        if (cache->cc_tupdesc == NULL)
            CatalogCacheInitializeCache(cache);

    #ifdef CATCACHE_STATS
        cache->cc_searches++;
    #endif

        /*
         * initialize the search key information
         */
        memcpy(cur_skey, cache->cc_skey, sizeof(cur_skey));
        cur_skey[0].sk_argument = v1;
        cur_skey[1].sk_argument = v2;
        cur_skey[2].sk_argument = v3;
        cur_skey[3].sk_argument = v4;

        /*
         * find the hash bucket in which to look for the tuple
         */
        hashValue = CatalogCacheComputeHashValue(cache, cache->cc_nkeys, cur_skey);
        hashIndex = HASH_INDEX(hashValue, cache->cc_nbuckets);

        /*
         * scan the hash bucket until we find a match or exhaust our tuples
         *
         * Note: it's okay to use dlist_foreach here, even though we modify the
         * dlist within the loop, because we don't continue the loop afterwards.
         */
        bucket = &cache->cc_bucket[hashIndex];
        dlist_foreach(iter, bucket)
        {
            bool res;

            ct = dlist_container(CatCTup, cache_elem, iter.cur);

            if (ct->dead)
                continue; /* ignore dead entries */

            if (ct->hash_value != hashValue)
                continue; /* quickly skip entry if wrong hash val */

            /*
             * see if the cached tuple matches our key.
             */
            HeapKeyTest(&ct->tuple,
                        cache->cc_tupdesc,
                        cache->cc_nkeys,
                        cur_skey,
                        res);
            if (!res)
                continue;

            /*
             * We found a match in the cache. Move it to the front of the list
             * for its hashbucket, in order to speed subsequent searches. (The
             * most frequently accessed elements in any hashbucket will tend to be
             * near the front of the hashbucket's list.)
             */
            dlist_move_head(bucket, &ct->cache_elem);

            /*
             * If it's a positive entry, bump its refcount and return it. If it's
             * negative, we can report failure to the caller.
             */
            if (!ct->negative)
            {
                ResourceOwnerEnlargeCatCacheRefs(CurrentResourceOwner);
                ct->refcount++;
                ResourceOwnerRememberCatCacheRef(CurrentResourceOwner, &ct->tuple);

                CACHE3_elog(DEBUG2, "SearchCatCache(%s): found in bucket %d",
                            cache->cc_relname, hashIndex);

    #ifdef CATCACHE_STATS
                cache->cc_hits++;
    #endif

                return &ct->tuple;
            }
            else
            {
                CACHE3_elog(DEBUG2, "SearchCatCache(%s): found neg entry in bucket %d",
                            cache->cc_relname, hashIndex);

    #ifdef CATCACHE_STATS
                cache->cc_neg_hits++;
    #endif

                return NULL;
            }
        }

        /*
         * Tuple was not found in cache, so we have to try to retrieve it directly
         * from the relation. If found, we will add it to the cache; if not
         * found, we will add a negative cache entry instead.
         *
         * NOTE: it is possible for recursive cache lookups to occur while reading
         * the relation --- for example, due to shared-cache-inval messages being
         * processed during heap_open(). This is OK. It's even possible for one
         * of those lookups to find and enter the very same tuple we are trying to
         * fetch here. If that happens, we will enter a second copy of the tuple
         * into the cache. The first copy will never be referenced again, and
         * will eventually age out of the cache, so there's no functional problem.
         * This case is rare enough that it's not worth expending extra cycles to
         * detect.
         */
        relation = heap_open(cache->cc_reloid, AccessShareLock);

        scandesc = systable_beginscan(relation,
                                      cache->cc_indexoid,
                                      IndexScanOK(cache, cur_skey),
                                      SnapshotNow,
                                      cache->cc_nkeys,
                                      cur_skey);

        ct = NULL;

        while (HeapTupleIsValid(ntp = systable_getnext(scandesc)))
        {
            ct = CatalogCacheCreateEntry(cache, ntp,
                                         hashValue, hashIndex,
                                         false);
            /* immediately set the refcount to 1 */
            ResourceOwnerEnlargeCatCacheRefs(CurrentResourceOwner);
            ct->refcount++;
            ResourceOwnerRememberCatCacheRef(CurrentResourceOwner, &ct->tuple);
            break; /* assume only one match */
        }

        systable_endscan(scandesc);

        heap_close(relation, AccessShareLock);

        /*
         * If tuple was not found, we need to build a negative cache entry
         * containing a fake tuple. The fake tuple has the correct key columns,
         * but nulls everywhere else.
         *
         * In bootstrap mode, we don't build negative entries, because the cache
         * invalidation mechanism isn't alive and can't clear them if the tuple
         * gets created later. (Bootstrap doesn't do UPDATEs, so it doesn't need
         * cache inval for that.)
         */
        if (ct == NULL)
        {
            if (IsBootstrapProcessingMode())
                return NULL;

            ntp = build_dummy_tuple(cache, cache->cc_nkeys, cur_skey);
            ct = CatalogCacheCreateEntry(cache, ntp,
                                         hashValue, hashIndex,
                                         true);
            heap_freetuple(ntp);

            CACHE4_elog(DEBUG2, "SearchCatCache(%s): Contains %d/%d tuples",
                        cache->cc_relname, cache->cc_ntup, CacheHdr->ch_ntup);
            CACHE3_elog(DEBUG2, "SearchCatCache(%s): put neg entry in bucket %d",
                        cache->cc_relname, hashIndex);

            /*
             * We are not returning the negative entry to the caller, so leave its
             * refcount zero.
             */

            return NULL;
        }

        CACHE4_elog(DEBUG2, "SearchCatCache(%s): Contains %d/%d tuples",
                    cache->cc_relname, cache->cc_ntup, CacheHdr->ch_ntup);
        CACHE3_elog(DEBUG2, "SearchCatCache(%s): put in bucket %d",
                    cache->cc_relname, hashIndex);

    #ifdef CATCACHE_STATS
        cache->cc_newloads++;
    #endif

        return &ct->tuple;
    }
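Two ideas in the search above are easy to isolate: a hit is moved to the front of its bucket, and a failed lookup is remembered as a negative entry so that the next search for the same key does not read the catalog again. The sketch below models both with a single bucket and a fake backing store. Everything in it (`ToyEntry`, `toy_bucket`, `toy_store_fetch`, `toy_lookup`, and the rule that only even keys exist) is hypothetical, and it omits reference counting, invalidation, and freeing of entries.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical cache entry; negative means "key is known to be absent". */
typedef struct ToyEntry
{
    struct ToyEntry *next;
    int              key;
    int              value;
    bool             negative;
} ToyEntry;

static ToyEntry *toy_bucket = NULL;   /* single bucket, most recently used first */
static int       toy_store_reads = 0; /* how often the backing store was consulted */

/* Hypothetical backing store: only even keys exist, with value = key * 10. */
static bool
toy_store_fetch(int key, int *value)
{
    toy_store_reads++;
    if (key % 2 != 0)
        return false;
    *value = key * 10;
    return true;
}

/* Look up key; returns true and sets *value on success, false if absent. */
static bool
toy_lookup(int key, int *value)
{
    ToyEntry **link;
    ToyEntry  *e;

    for (link = &toy_bucket; (e = *link) != NULL; link = &e->next)
    {
        if (e->key != key)
            continue;

        /* Hit: unlink and reinsert at the head; we return right after. */
        *link = e->next;
        e->next = toy_bucket;
        toy_bucket = e;

        if (e->negative)
            return false;
        *value = e->value;
        return true;
    }

    /* Miss: consult the store and cache the outcome, positive or negative. */
    e = malloc(sizeof(ToyEntry));
    if (e == NULL)
        abort();
    e->key = key;
    e->value = 0;
    e->negative = !toy_store_fetch(key, &e->value);
    e->next = toy_bucket;
    toy_bucket = e;

    if (e->negative)
        return false;
    *value = e->value;
    return true;
}
```

Looking up an absent key twice reads the store only once. As in the original, modifying the list inside the loop is safe only because the function returns immediately after the move.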

| CatCList* SearchCatCacheList | ( | CatCache * | cache, | |

| int | nkeys, | |||

| Datum | v1, | |||

| Datum | v2, | |||

| Datum | v3, | |||

| Datum | v4 | |||

| ) |

Definition at line 1337 of file catcache.c.

References AccessShareLock, Assert, build_dummy_tuple(), catctup::c_list, CACHE2_elog, CACHE3_elog, catclist::cache_elem, CacheMemoryContext, CatalogCacheComputeHashValue(), CatalogCacheComputeTupleHashValue(), CatalogCacheCreateEntry(), CatalogCacheInitializeCache(), CatCacheRemoveCTup(), catcache::cc_bucket, catcache::cc_indexoid, catcache::cc_lists, catcache::cc_nbuckets, catcache::cc_relname, catcache::cc_reloid, catcache::cc_skey, catcache::cc_tupdesc, catclist::cl_magic, dlist_iter::cur, CurrentResourceOwner, catctup::dead, catclist::dead, DEBUG2, dlist_container, dlist_foreach, dlist_move_head(), dlist_push_head(), HASH_INDEX, catctup::hash_value, catclist::hash_value, heap_close, heap_copytuple_with_tuple(), heap_freetuple(), heap_open(), HeapKeyTest, HeapTupleIsValid, i, IndexScanOK(), SysScanDescData::irel, ItemPointerEquals(), lappend(), lfirst, list_length(), catclist::members, MemoryContextSwitchTo(), catclist::my_cache, catclist::n_members, catctup::negative, catclist::nkeys, NULL, catclist::ordered, palloc(), PG_CATCH, PG_END_TRY, PG_RE_THROW, PG_TRY, catctup::refcount, catclist::refcount, ResourceOwnerEnlargeCatCacheListRefs(), ResourceOwnerRememberCatCacheListRef(), ScanKeyData::sk_argument, SnapshotNow, systable_beginscan(), systable_endscan(), systable_getnext(), HeapTupleData::t_self, catctup::tuple, and catclist::tuple.

Referenced by SearchSysCacheList().

    {
        ScanKeyData cur_skey[CATCACHE_MAXKEYS];
        uint32 lHashValue;
        dlist_iter iter;
        CatCList *cl;
        CatCTup *ct;
        List *volatile ctlist;
        ListCell *ctlist_item;
        int nmembers;
        bool ordered;
        HeapTuple ntp;
        MemoryContext oldcxt;
        int i;

        /*
         * one-time startup overhead for each cache
         */
        if (cache->cc_tupdesc == NULL)
            CatalogCacheInitializeCache(cache);

        Assert(nkeys > 0 && nkeys < cache->cc_nkeys);

    #ifdef CATCACHE_STATS
        cache->cc_lsearches++;
    #endif

        /*
         * initialize the search key information
         */
        memcpy(cur_skey, cache->cc_skey, sizeof(cur_skey));
        cur_skey[0].sk_argument = v1;
        cur_skey[1].sk_argument = v2;
        cur_skey[2].sk_argument = v3;
        cur_skey[3].sk_argument = v4;

        /*
         * compute a hash value of the given keys for faster search. We don't
         * presently divide the CatCList items into buckets, but this still lets
         * us skip non-matching items quickly most of the time.
         */
        lHashValue = CatalogCacheComputeHashValue(cache, nkeys, cur_skey);

        /*
         * scan the items until we find a match or exhaust our list
         *
         * Note: it's okay to use dlist_foreach here, even though we modify the
         * dlist within the loop, because we don't continue the loop afterwards.
         */
        dlist_foreach(iter, &cache->cc_lists)
        {
            bool res;

            cl = dlist_container(CatCList, cache_elem, iter.cur);

            if (cl->dead)
                continue; /* ignore dead entries */

            if (cl->hash_value != lHashValue)
                continue; /* quickly skip entry if wrong hash val */

            /*
             * see if the cached list matches our key.
             */
            if (cl->nkeys != nkeys)
                continue;
            HeapKeyTest(&cl->tuple,
                        cache->cc_tupdesc,
                        nkeys,
                        cur_skey,
                        res);
            if (!res)
                continue;

            /*
             * We found a matching list. Move the list to the front of the
             * cache's list-of-lists, to speed subsequent searches. (We do not
             * move the members to the fronts of their hashbucket lists, however,
             * since there's no point in that unless they are searched for
             * individually.)
             */
            dlist_move_head(&cache->cc_lists, &cl->cache_elem);

            /* Bump the list's refcount and return it */
            ResourceOwnerEnlargeCatCacheListRefs(CurrentResourceOwner);
            cl->refcount++;
            ResourceOwnerRememberCatCacheListRef(CurrentResourceOwner, cl);

            CACHE2_elog(DEBUG2, "SearchCatCacheList(%s): found list",
                        cache->cc_relname);

    #ifdef CATCACHE_STATS
            cache->cc_lhits++;
    #endif

            return cl;
        }

        /*
         * List was not found in cache, so we have to build it by reading the
         * relation. For each matching tuple found in the relation, use an
         * existing cache entry if possible, else build a new one.
         *
         * We have to bump the member refcounts temporarily to ensure they won't
         * get dropped from the cache while loading other members. We use a PG_TRY
         * block to ensure we can undo those refcounts if we get an error before
         * we finish constructing the CatCList.
         */
        ResourceOwnerEnlargeCatCacheListRefs(CurrentResourceOwner);

        ctlist = NIL;

        PG_TRY();
        {
            Relation relation;
            SysScanDesc scandesc;

            relation = heap_open(cache->cc_reloid, AccessShareLock);

            scandesc = systable_beginscan(relation,
                                          cache->cc_indexoid,
                                          IndexScanOK(cache, cur_skey),
                                          SnapshotNow,
                                          nkeys,
                                          cur_skey);

            /* The list will be ordered iff we are doing an index scan */
            ordered = (scandesc->irel != NULL);

            while (HeapTupleIsValid(ntp = systable_getnext(scandesc)))
            {
                uint32 hashValue;
                Index hashIndex;
                bool found = false;
                dlist_head *bucket;

                /*
                 * See if there's an entry for this tuple already.
                 */
                ct = NULL;
                hashValue = CatalogCacheComputeTupleHashValue(cache, ntp);
                hashIndex = HASH_INDEX(hashValue, cache->cc_nbuckets);

                bucket = &cache->cc_bucket[hashIndex];
                dlist_foreach(iter, bucket)
                {
                    ct = dlist_container(CatCTup, cache_elem, iter.cur);

                    if (ct->dead || ct->negative)
                        continue; /* ignore dead and negative entries */

                    if (ct->hash_value != hashValue)
                        continue; /* quickly skip entry if wrong hash val */

                    if (!ItemPointerEquals(&(ct->tuple.t_self), &(ntp->t_self)))
                        continue; /* not same tuple */

                    /*
                     * Found a match, but can't use it if it belongs to another
                     * list already
                     */
                    if (ct->c_list)
                        continue;

                    found = true;
                    break; /* A-OK */
                }

                if (!found)
                {
                    /* We didn't find a usable entry, so make a new one */
                    ct = CatalogCacheCreateEntry(cache, ntp,
                                                 hashValue, hashIndex,
                                                 false);
                }

                /* Careful here: add entry to ctlist, then bump its refcount */
                /* This way leaves state correct if lappend runs out of memory */
                ctlist = lappend(ctlist, ct);
                ct->refcount++;
            }

            systable_endscan(scandesc);

            heap_close(relation, AccessShareLock);

            /*
             * Now we can build the CatCList entry. First we need a dummy tuple
             * containing the key values...
             */
            ntp = build_dummy_tuple(cache, nkeys, cur_skey);
            oldcxt = MemoryContextSwitchTo(CacheMemoryContext);
            nmembers = list_length(ctlist);
            cl = (CatCList *)
                palloc(sizeof(CatCList) + nmembers * sizeof(CatCTup *));
            heap_copytuple_with_tuple(ntp, &cl->tuple);
            MemoryContextSwitchTo(oldcxt);
            heap_freetuple(ntp);

            /*
             * We are now past the last thing that could trigger an elog before we
             * have finished building the CatCList and remembering it in the
             * resource owner. So it's OK to fall out of the PG_TRY, and indeed
             * we'd better do so before we start marking the members as belonging
             * to the list.
             */
        }
        PG_CATCH();
        {
            foreach(ctlist_item, ctlist)
            {
                ct = (CatCTup *) lfirst(ctlist_item);
                Assert(ct->c_list == NULL);
                Assert(ct->refcount > 0);
                ct->refcount--;
                if (
    #ifndef CATCACHE_FORCE_RELEASE
                    ct->dead &&
    #endif
                    ct->refcount == 0 &&
                    (ct->c_list == NULL || ct->c_list->refcount == 0))
                    CatCacheRemoveCTup(cache, ct);
            }

            PG_RE_THROW();
        }
        PG_END_TRY();

        cl->cl_magic = CL_MAGIC;
        cl->my_cache = cache;
        cl->refcount = 0; /* for the moment */
        cl->dead = false;
        cl->ordered = ordered;
        cl->nkeys = nkeys;
        cl->hash_value = lHashValue;
        cl->n_members = nmembers;

        i = 0;
        foreach(ctlist_item, ctlist)
        {
            cl->members[i++] = ct = (CatCTup *) lfirst(ctlist_item);
            Assert(ct->c_list == NULL);
            ct->c_list = cl;
            /* release the temporary refcount on the member */
            Assert(ct->refcount > 0);
            ct->refcount--;
            /* mark list dead if any members already dead */
            if (ct->dead)
                cl->dead = true;
        }
        Assert(i == nmembers);

        dlist_push_head(&cache->cc_lists, &cl->cache_elem);

        /* Finally, bump the list's refcount and return it */
        cl->refcount++;
        ResourceOwnerRememberCatCacheListRef(CurrentResourceOwner, cl);

        CACHE3_elog(DEBUG2, "SearchCatCacheList(%s): made list of %d members",
                    cache->cc_relname, nmembers);

        return cl;
    }

| PGDLLIMPORT MemoryContext CacheMemoryContext |
