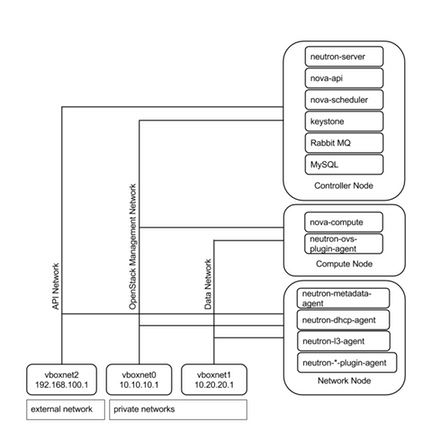

Network Diagram:

Publicly editable image source at https://docs.google.com/drawings/d/1GX3FXmkz3c_tUDpZXUVMpyIxicWuHs5fNsHvYNjwNNk/edit?usp=sharing

vboxnet0, vboxnet1, and vboxnet2 are virtual networks that VirtualBox sets up on your host machine. They let your host communicate with the virtual machines. The VirtualBox VMs in turn use these networks as the OpenStack networks, so that the OpenStack services can communicate with each other.

Compute Node

Start your Controller Node (the one you set up in the previous section).

Preparing Ubuntu 12.04

After you install Ubuntu Server, switch to the root user:

$ sudo su

Add the Icehouse repositories:

# apt-get install ubuntu-cloud-keyring python-software-properties software-properties-common python-keyring

# echo deb http://ubuntu-cloud.archive.canonical.com/ubuntu precise-updates/icehouse main >> /etc/apt/sources.list.d/icehouse.list

Update your system:

# apt-get update

# apt-get upgrade

# apt-get dist-upgrade

Install NTP and other services:

# apt-get install ntp vlan bridge-utils

Prepare NTP to sync from the Controller Node by commenting out the default Ubuntu pool servers:

# sed -i 's/server 0.ubuntu.pool.ntp.org/#server0.ubuntu.pool.ntp.org/g' /etc/ntp.conf

# sed -i 's/server 1.ubuntu.pool.ntp.org/#server1.ubuntu.pool.ntp.org/g' /etc/ntp.conf

# sed -i 's/server 2.ubuntu.pool.ntp.org/#server2.ubuntu.pool.ntp.org/g' /etc/ntp.conf

# sed -i 's/server 3.ubuntu.pool.ntp.org/#server3.ubuntu.pool.ntp.org/g' /etc/ntp.conf
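The four sed commands above differ only in the pool number, so they can be collapsed into a single loop. The following is a minimal sketch rather than the guide's own method: it runs against a scratch copy (the NTP_CONF variable and the sample file contents are assumptions for the demo), so it can be tried without touching /etc/ntp.conf.

```shell
#!/bin/sh
# Sketch: comment out the default Ubuntu pool servers in one pass.
# NTP_CONF is a scratch file here (an assumption for the demo);
# on a real node it would be /etc/ntp.conf, edited as root.
NTP_CONF=$(mktemp)

# Sample content standing in for the server lines of a stock ntp.conf.
cat > "$NTP_CONF" <<'EOF'
server 0.ubuntu.pool.ntp.org
server 1.ubuntu.pool.ntp.org
server 2.ubuntu.pool.ntp.org
server 3.ubuntu.pool.ntp.org
server ntp.ubuntu.com
EOF

# One loop instead of four near-identical sed invocations (GNU sed -i).
for n in 0 1 2 3; do
    sed -i "s/^server $n\.ubuntu\.pool\.ntp\.org/#server $n.ubuntu.pool.ntp.org/" "$NTP_CONF"
done

# Count the server lines that are still active and those now commented out.
ACTIVE_SERVERS=$(grep -c '^server ' "$NTP_CONF")
COMMENTED_SERVERS=$(grep -c '^#server ' "$NTP_CONF")
echo "active: $ACTIVE_SERVERS, commented out: $COMMENTED_SERVERS"

rm -f "$NTP_CONF"
```

Anchoring the pattern with ^ keeps the loop from touching lines that are already commented out, so it is safe to run more than once.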

Enable IP forwarding by adding the following to /etc/sysctl.conf:

    net.ipv4.ip_forward=1
    net.ipv4.conf.all.rp_filter=0
    net.ipv4.conf.default.rp_filter=0

Run the following commands:

# sysctl net.ipv4.ip_forward=1

# sysctl net.ipv4.conf.all.rp_filter=0

# sysctl net.ipv4.conf.default.rp_filter=0

# sysctl -p
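If this step is ever re-run, appending the same three lines to /etc/sysctl.conf again would leave duplicates. One possible way to make the edit repeatable is sketched below. The helper set_sysctl_line is hypothetical (it is not part of any package), it only handles the key=value form without spaces, and the sketch writes to a scratch file rather than the real /etc/sysctl.conf.

```shell
#!/bin/sh
# Sketch: add kernel settings to a sysctl file without creating duplicates.
# SYSCTL_CONF is a scratch file (assumption); the real target is /etc/sysctl.conf.
SYSCTL_CONF=$(mktemp)

# set_sysctl_line KEY VALUE: hypothetical helper for this demo.
set_sysctl_line() {
    key=$1
    value=$2
    if grep -q "^$key=" "$SYSCTL_CONF"; then
        # Key already present: rewrite its value in place.
        sed -i "s|^$key=.*|$key=$value|" "$SYSCTL_CONF"
    else
        # Key absent: append it.
        echo "$key=$value" >> "$SYSCTL_CONF"
    fi
}

# Apply the same settings twice to show that the second pass adds nothing.
for pass in 1 2; do
    set_sysctl_line net.ipv4.ip_forward 1
    set_sysctl_line net.ipv4.conf.all.rp_filter 0
    set_sysctl_line net.ipv4.conf.default.rp_filter 0
done

LINE_COUNT=$(grep -c '=' "$SYSCTL_CONF")
cat "$SYSCTL_CONF"
echo "settings written: $LINE_COUNT"

rm -f "$SYSCTL_CONF"
```

On a real node, sysctl -p would still be needed afterwards to load the values from the file.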

KVM

Install KVM:

# apt-get install -y kvm libvirt-bin pm-utils

Edit /etc/libvirt/qemu.conf:

    cgroup_device_acl = [
        "/dev/null", "/dev/full", "/dev/zero",
        "/dev/random", "/dev/urandom",
        "/dev/ptmx", "/dev/kvm", "/dev/kqemu",
        "/dev/rtc", "/dev/hpet", "/dev/net/tun"
    ]

Delete the default virtual bridge:

# virsh net-destroy default

# virsh net-undefine default

To enable live migration, edit /etc/libvirt/libvirtd.conf:

    listen_tls = 0
    listen_tcp = 1
    auth_tcp = "none"

Edit /etc/init/libvirt-bin.conf:

    env libvirtd_opts="-d -l"

Edit /etc/default/libvirt-bin:

    libvirtd_opts="-d -l"

Restart libvirt:

# service dbus restart

# service libvirt-bin restart
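A typo in the live-migration settings is easy to miss, so it can help to confirm they are present around the time libvirt is restarted. The sketch below shows one way to do that. The helper check_setting is hypothetical, and the sketch reads a scratch stand-in for /etc/libvirt/libvirtd.conf so that it can be run anywhere.

```shell
#!/bin/sh
# Sketch: confirm the live-migration settings are present in libvirtd.conf.

# check_setting FILE KEY VALUE: hypothetical helper; tolerates spaces around "=".
check_setting() {
    grep -Eq "^[[:space:]]*$2[[:space:]]*=[[:space:]]*$3[[:space:]]*\$" "$1"
}

# Scratch stand-in (assumption) for /etc/libvirt/libvirtd.conf.
CONF=$(mktemp)
cat > "$CONF" <<'EOF'
listen_tls = 0
listen_tcp = 1
auth_tcp = "none"
EOF

MISSING=0
for pair in 'listen_tls 0' 'listen_tcp 1' 'auth_tcp "none"'; do
    set -- $pair                      # split "key value" into $1 and $2
    if check_setting "$CONF" "$1" "$2"; then
        echo "ok: $1 = $2"
    else
        echo "missing: $1 = $2"
        MISSING=$((MISSING + 1))
    fi
done
echo "missing settings: $MISSING"

rm -f "$CONF"
```

Pointing CONF at the real file instead of the scratch copy would turn this into a quick check on the node itself.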

Neutron and OVS

Install Open vSwitch

# apt-get install -y openvswitch-switch openvswitch-datapath-dkms

Create the integration bridge:

# ovs-vsctl add-br br-int

Neutron

Install the Neutron Open vSwitch agent:

# apt-get -y install neutron-plugin-openvswitch-agent

Edit /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini:

    #Under the database section
    [database]
    connection = mysql://neutronUser:[email protected]/neutron

    #Under the OVS section
    [ovs]
    tenant_network_type = gre
    tunnel_id_ranges = 1:1000
    integration_bridge = br-int
    tunnel_bridge = br-tun
    local_ip = 10.10.10.53
    enable_tunneling = True
    tunnel_type=gre

    [agent]
    tunnel_types = gre

    #Firewall driver for realizing quantum security group function
    [SECURITYGROUP]
    firewall_driver = neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver
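Because the same key can mean different things in different sections of an INI file, it is worth checking that a value such as local_ip ended up under [ovs] and not elsewhere. The following sketch reads one key from one section with awk. The helper ini_get is hypothetical, and the sample file is a trimmed copy of the settings above written to a scratch location.

```shell
#!/bin/sh
# Sketch: read a single key from a named section of an INI-style file.

# ini_get FILE SECTION KEY: hypothetical helper; prints the first matching value.
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*\[/ {                         # section header, e.g. [ovs]
            gsub(/^[ \t]*\[|\][ \t]*$/, "")
            current = $0
            next
        }
        /^[ \t]*#/ { next }                   # skip comment lines
        current == section {
            eq = index($0, "=")
            if (eq == 0) next                 # not a key = value line
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)    # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)    # trim the value
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Scratch sample (assumption) with a subset of the plugin settings.
INI=$(mktemp)
cat > "$INI" <<'EOF'
#Under the OVS section
[ovs]
tenant_network_type = gre
local_ip = 10.10.10.53
tunnel_type=gre

[agent]
tunnel_types = gre
EOF

LOCAL_IP=$(ini_get "$INI" ovs local_ip)
TUNNEL_TYPE=$(ini_get "$INI" ovs tunnel_type)
AGENT_LOCAL_IP=$(ini_get "$INI" agent local_ip)
echo "ovs/local_ip=$LOCAL_IP ovs/tunnel_type=$TUNNEL_TYPE agent/local_ip=${AGENT_LOCAL_IP:-<unset>}"

rm -f "$INI"
```

The helper accepts both the spaced (key = value) and unspaced (key=value) forms, since this file mixes them.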

Edit /etc/neutron/neutron.conf:

    rabbit_host = 192.168.100.51

    #And update the keystone_authtoken section
    [keystone_authtoken]
    auth_host = 192.168.100.51
    auth_port = 35357
    auth_protocol = http
    admin_tenant_name = service
    admin_user = quantum
    admin_password = service_pass
    signing_dir = /var/lib/quantum/keystone-signing

    [database]
    connection = mysql://neutronUser:[email protected]/neutron

Restart the Open vSwitch agent:

# service neutron-plugin-openvswitch-agent restart

Nova

Install Nova:

# apt-get install nova-compute-kvm python-guestfs

# chmod 0644 /boot/vmlinuz*

Edit /etc/nova/api-paste.ini:

    [filter:authtoken]
    paste.filter_factory = keystoneclient.middleware.auth_token:filter_factory
    auth_host = 192.168.100.51
    auth_port = 35357
    auth_protocol = http
    admin_tenant_name = service
    admin_user = nova
    admin_password = service_pass
    signing_dirname = /tmp/keystone-signing-nova
    # Workaround for https://bugs.launchpad.net/nova/+bug/1154809
    auth_version = v2.0

Edit /etc/nova/nova-compute.conf:

    [DEFAULT]
    libvirt_type=qemu
    libvirt_ovs_bridge=br-int
    libvirt_vif_type=ethernet
    libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtHybridOVSBridgeDriver
    libvirt_use_virtio_for_bridges=True

Edit /etc/nova/nova.conf:

    [DEFAULT]
    logdir=/var/log/nova
    state_path=/var/lib/nova
    lock_path=/run/lock/nova
    verbose=True
    api_paste_config=/etc/nova/api-paste.ini
    compute_scheduler_driver=nova.scheduler.simple.SimpleScheduler
    rabbit_host=192.168.100.51
    nova_url=http://192.168.100.51:8774/v1.1/
    sql_connection=mysql://novaUser:[email protected]/nova
    root_helper=sudo nova-rootwrap /etc/nova/rootwrap.conf

    # Auth
    use_deprecated_auth=false
    auth_strategy=keystone

    # Imaging service
    glance_api_servers=192.168.100.51:9292
    image_service=nova.image.glance.GlanceImageService

    # Vnc configuration
    novnc_enabled=true
    novncproxy_base_url=http://192.168.100.51:6080/vnc_auto.html
    novncproxy_port=6080
    vncserver_proxyclient_address=10.10.10.53
    vncserver_listen=0.0.0.0

    # Network settings
    network_api_class=nova.network.neutronv2.api.API
    neutron_url=http://192.168.100.51:9696
    neutron_auth_strategy=keystone
    neutron_admin_tenant_name=service
    neutron_admin_username=neutron
    neutron_admin_password=service_pass
    neutron_admin_auth_url=http://192.168.100.51:35357/v2.0
    libvirt_vif_driver=nova.virt.libvirt.vif.LibvirtHybridOVSBridgeDriver
    linuxnet_interface_driver=nova.network.linux_net.LinuxOVSInterfaceDriver

    #If you want Neutron + Nova Security groups
    firewall_driver=nova.virt.firewall.NoopFirewallDriver
    security_group_api=neutron
    #If you want Nova Security groups only, comment the two lines above and uncomment line -1-.
    #-1-firewall_driver=nova.virt.libvirt.firewall.IptablesFirewallDriver

    #Metadata
    service_neutron_metadata_proxy = True
    neutron_metadata_proxy_shared_secret = helloOpenStack

    # Compute #
    compute_driver=libvirt.LibvirtDriver

    # Cinder #
    volume_api_class=nova.volume.cinder.API
    osapi_volume_listen_port=5900
    cinder_catalog_info=volume:cinder:internalURL

Restart the Nova services:

# cd /etc/init.d/; for i in $( ls nova-* ); do service $i restart; done
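The one-liner above works, but it depends on changing directory and on parsing the output of ls. A variant that iterates over a shell glob directly is sketched below as an alternative, not as the guide's method. It uses a scratch directory in place of /etc/init.d and only echoes what it would restart. The file name nova-demo-service is made up for the demo.

```shell
#!/bin/sh
# Sketch: loop over nova init scripts with a glob instead of parsing `ls`.

# Scratch stand-in (assumption) for /etc/init.d, with made-up entries.
INIT_DIR=$(mktemp -d)
touch "$INIT_DIR/nova-compute" "$INIT_DIR/nova-demo-service" "$INIT_DIR/ssh"

RESTARTED=""
for script in "$INIT_DIR"/nova-*; do
    [ -e "$script" ] || continue      # the glob matched nothing
    name=$(basename "$script")
    # On a real node this line would be: service "$name" restart
    echo "would restart $name"
    RESTARTED="$RESTARTED $name"
done

rm -rf "$INIT_DIR"
```

Only entries whose names start with nova- are visited, so the unrelated ssh script in the sample directory is skipped.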

List the Nova services. A smiley face, :-), in the State column means the service is running, while XXX means it is not:

# nova-manage service list
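Scanning the State column by eye becomes tedious as the number of services grows. The sketch below counts the rows whose state is not :-). The helper count_down_services is hypothetical, and the sample listing (host names, zones, timestamps) is invented for illustration. The sketch assumes the column order Binary, Host, Zone, Status, State, Updated_At.

```shell
#!/bin/sh
# Sketch: count services that are not reporting the ":-)" state.

# count_down_services: hypothetical helper; reads `nova-manage service list`
# output on stdin, skips the header row, and prints the number of rows
# whose fifth column (State) is anything other than ":-)".
count_down_services() {
    awk 'NR > 1 && NF > 0 && $5 != ":-)" { down++ } END { print down + 0 }'
}

# Invented sample output for the demo.
SAMPLE='Binary           Host        Zone      Status   State Updated_At
nova-compute     compute     nova      enabled  :-)   2014-01-01 00:00:00
nova-scheduler   controller  internal  enabled  XXX   2014-01-01 00:00:00
nova-conductor   controller  internal  enabled  :-)   2014-01-01 00:00:00'

DOWN=$(printf '%s\n' "$SAMPLE" | count_down_services)
echo "services down: $DOWN"
```

On a real node the same helper could be fed directly, for example with nova-manage service list | count_down_services.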