
Networking in OpenStack

OpenStack Networking is a virtual network service that provides a tenant-facing API for defining the network connectivity and addressing used by devices from other services, such as OpenStack Compute. The project also gives operators the ability to leverage different networking technologies to power their cloud networking. The API consists of the following components.

Network: An isolated L2 segment, analogous to a VLAN in the physical networking world.

Subnet: A block of v4 or v6 IP addresses and associated configuration state.

Port: A connection point for attaching a single device, such as the NIC of a virtual server, to a virtual network. Also describes the associated network configuration, such as the MAC and IP addresses to be used on that port.

You can configure rich network topologies by creating and configuring networks and subnets, and then instructing other OpenStack services like OpenStack Compute to attach virtual devices to ports on these networks. In particular, OpenStack Networking supports each tenant having multiple private networks, and allows tenants to choose their own IP addressing scheme, even if those IP addresses overlap with those used by other tenants. This enables very advanced cloud networking use cases, such as building multi-tiered web applications and allowing applications to be migrated to the cloud without changing IP addresses.
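The relationship between the three resources can be sketched in a few lines of Python. This is a simplified illustration, not the service's actual data model: the class layout, the field names and the sample addresses are all assumptions chosen for the example. It shows two tenants using the same addressing scheme on separate networks.

```python
from dataclasses import dataclass, field
from ipaddress import ip_network
from typing import List


@dataclass
class Subnet:
    cidr: str  # block of IPv4 or IPv6 addresses, e.g. "10.0.0.0/24"

    @property
    def ip_version(self) -> int:
        return ip_network(self.cidr).version


@dataclass
class Port:
    mac_address: str
    ip_address: str
    device_id: str  # the single device (for example a VM NIC) attached here


@dataclass
class Network:
    tenant_id: str
    name: str
    subnets: List[Subnet] = field(default_factory=list)
    ports: List[Port] = field(default_factory=list)


# Two tenants choose the same addressing scheme; each network is its own
# isolated L2 segment, so the overlapping addresses do not conflict.
net_a = Network("tenant-a", "web", [Subnet("10.0.0.0/24")])
net_b = Network("tenant-b", "web", [Subnet("10.0.0.0/24")])
net_a.ports.append(Port("fa:16:3e:00:00:01", "10.0.0.5", "vm-a1"))
net_b.ports.append(Port("fa:16:3e:00:00:02", "10.0.0.5", "vm-b1"))
```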

Plugin Architecture: Flexibility to Choose Different Network Technologies

Enhancing traditional networking solutions to provide rich cloud networking is challenging. Traditional networking is not designed to scale to cloud proportions or to configure automatically.

The original OpenStack Compute network implementation assumed a very basic model of performing all isolation through Linux VLANs and iptables. OpenStack Networking introduces the concept of a plug-in, which is a pluggable back-end implementation of the OpenStack Networking API. A plug-in can use a variety of technologies to implement the logical API requests. Some OpenStack Networking plug-ins might use basic Linux VLANs and iptables, while others might use more advanced technologies, such as L2-in-L3 tunneling or OpenFlow, to provide similar benefits.

The current set of plug-ins includes:

Big Switch, Floodlight REST Proxy: http://www.openflowhub.org/display/floodlightcontroller/Quantum+REST+Proxy+Plugin

Brocade Plugin

Cisco: Documented externally at: http://wiki.openstack.org/cisco-quantum

Hyper-V Plugin

Linux Bridge: Documentation included in this guide and http://wiki.openstack.org/Quantum-Linux-Bridge-Plugin

Midonet Plugin

NEC OpenFlow: http://wiki.openstack.org/Quantum-NEC-OpenFlow-Plugin

Open vSwitch: Documentation included in this guide.

VMware NSX: Documentation included in this guide, NSX Product Overview, and NSX Product Support.

Plug-ins can differ in hardware requirements, features, performance, scale, and operator tools. Supporting many plug-ins enables the cloud administrator to weigh different options and decide which networking technology is right for the deployment.
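The plug-in idea described in this section can be sketched as one logical API backed by interchangeable implementations. The class and method names below are hypothetical and are not the real plug-in base class; the sketch only illustrates that the same API call can be satisfied by different isolation technologies.

```python
from abc import ABC, abstractmethod


class NetworkPlugin(ABC):
    """Hypothetical back-end interface (illustration only)."""

    @abstractmethod
    def create_network(self, tenant_id: str, name: str) -> dict:
        ...


class VlanPlugin(NetworkPlugin):
    """Isolates each network with its own VLAN ID."""

    def __init__(self, first_vlan: int = 100):
        self._next_vlan = first_vlan

    def create_network(self, tenant_id, name):
        vlan_id = self._next_vlan
        self._next_vlan += 1
        return {"tenant_id": tenant_id, "name": name,
                "isolation": "vlan", "segment": vlan_id}


class TunnelPlugin(NetworkPlugin):
    """Isolates each network with an L2-in-L3 tunnel key."""

    def __init__(self):
        self._next_key = 1

    def create_network(self, tenant_id, name):
        key = self._next_key
        self._next_key += 1
        return {"tenant_id": tenant_id, "name": name,
                "isolation": "l2-in-l3-tunnel", "segment": key}


class ApiServer:
    """Passes logical API requests to whichever plug-in is configured."""

    def __init__(self, plugin: NetworkPlugin):
        self.plugin = plugin

    def post_network(self, tenant_id, name):
        return self.plugin.create_network(tenant_id, name)
```

Swapping `VlanPlugin()` for `TunnelPlugin()` when constructing `ApiServer` changes how isolation is implemented without changing what the caller sends.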

Components of OpenStack Networking

To deploy OpenStack Networking, it is useful to understand the different components that make up the solution and how those components interact with each other and with other OpenStack services.

OpenStack Networking is a standalone service, just like other OpenStack services such as OpenStack Compute, OpenStack Image Service, OpenStack Identity service, and the OpenStack Dashboard. Like those services, a deployment of OpenStack Networking often involves deploying several processes on a variety of hosts.

The main process of the OpenStack Networking server is quantum-server, which is a Python daemon that exposes the OpenStack Networking API and passes user requests to the configured OpenStack Networking plug-in for additional processing. Typically, the plug-in requires access to a database for persistent storage, similar to other OpenStack services.

If your deployment uses a controller host to run centralized OpenStack Compute components, you can deploy the OpenStack Networking server on that same host. However, OpenStack Networking is entirely standalone and can be deployed on its own server as well. OpenStack Networking also includes additional agents that might be required depending on your deployment:

plugin agent (quantum-*-agent): Runs on each hypervisor to perform local vswitch configuration. Which agent runs depends on the plug-in you are using, and some plug-ins do not require an agent.

dhcp agent (quantum-dhcp-agent): Provides DHCP services to tenant networks. This agent is the same across all plug-ins.

l3 agent (quantum-l3-agent): Provides L3/NAT forwarding to provide external network access for VMs on tenant networks. This agent is the same across all plug-ins.

These agents interact with the main quantum-server process in the following ways:

Through RPC, for example over RabbitMQ or Qpid.

Through the standard OpenStack Networking API.

OpenStack Networking relies on the OpenStack Identity Project (Keystone) for authentication and authorization of all API requests.

OpenStack Compute interacts with OpenStack Networking through calls to its standard API. As part of creating a VM, nova-compute communicates with the OpenStack Networking API to plug each virtual NIC on the VM into a particular network.

The OpenStack Dashboard (Horizon) integrates with the OpenStack Networking API, allowing administrators and tenant users to create and manage network services through the Horizon GUI.

Place Services on Physical Hosts

Like other OpenStack services, OpenStack Networking provides cloud administrators with significant flexibility in deciding which individual services should run on which physical devices. On one extreme, all service daemons can be run on a single physical host for evaluation purposes. On the other, each service could have its own physical host, and in some cases be replicated across multiple hosts for redundancy.

In this guide, we focus primarily on a standard architecture that includes a "cloud controller" host, a "network gateway" host, and a set of hypervisors for running VMs. The "cloud controller" and "network gateway" can be combined in simple deployments, though if you expect VMs to send significant amounts of traffic to or from the Internet, a dedicated network gateway host is suggested to avoid potential CPU contention between packet forwarding performed by the quantum-l3-agent and other OpenStack services.

Network Connectivity for Physical Hosts

A standard OpenStack Networking setup has up to four distinct physical data center networks:

Management network: Used for internal communication between OpenStack components. The IP addresses on this network should be reachable only within the data center.

Data network: Used for VM data communication within the cloud deployment. The IP addressing requirements of this network depend on the OpenStack Networking plug-in in use.

External network: Used to provide VMs with Internet access in some deployment scenarios. The IP addresses on this network should be reachable by anyone on the Internet.

API network: Exposes all OpenStack APIs, including the OpenStack Networking API, to tenants. The IP addresses on this network should be reachable by anyone on the Internet. This can be the same network as the external network, because it is possible to create a subnet for the external network whose IP allocation ranges use less than the full range of IP addresses in an IP block.

Network Types

The OpenStack Networking configuration provided by the Rackspace Private Cloud cookbooks allows you to choose between VLAN or GRE isolated networks, both provider- and tenant-specific. From the provider side, an administrator can also create a flat network.

The type of network that is used for private tenant networks is determined by the network_type attribute, which can be edited in the Chef override_attributes. This attribute sets both the default provider network type and the only type of network that tenants are able to create. Administrators can always create flat and VLAN networks. GRE networks of any type require the network_type to be set to gre.

Namespaces

For each network you create, the Network node (or Controller node, if combined) will have a unique network namespace (netns) created by the DHCP and Metadata agents. The netns hosts an interface and IP addresses for dnsmasq and the quantum-ns-metadata-proxy. You can view the namespaces with the ip netns [list] command, and you can interact with a namespace with the ip netns exec <namespace> <command> command.
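If you want to script the two commands mentioned above, the following sketch builds their argument lists in Python. The helper names and the namespace name `example-ns` are placeholders invented for this example; use a name reported by `ip netns list` on your own node. Running the commands (for instance with `subprocess.run(cmd, check=True)`) normally requires root privileges, so the sketch only constructs them.

```python
import shlex
from typing import List


def netns_list_cmd() -> List[str]:
    """Argument list for listing the network namespaces on this host."""
    return ["ip", "netns", "list"]


def netns_exec_cmd(namespace: str, command: str) -> List[str]:
    """Argument list for running `command` inside `namespace`."""
    return ["ip", "netns", "exec", namespace] + shlex.split(command)


# Placeholder namespace name; not a real namespace on any system.
example = netns_exec_cmd("example-ns", "ip addr show")
print(" ".join(example))
```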

Metadata

Not all networks or VMs need metadata access. Rackspace recommends that you use metadata if you are using a single network. If you need metadata, you may also need a default route. (If you don't need a default route, no-gateway will do.)

To communicate with the metadata IP address inside the namespace, instances need a route for the metadata network that points to the dnsmasq IP address on the same namespaced interface. OpenStack Networking only injects a route when you do not specify a gateway-ip in the subnet.

If you need to use a default route and provide instances with access to the metadata route, create the subnet without specifying a gateway IP and with a static route from 0.0.0.0/0 to your gateway IP address. Adjust the DHCP allocation pool so that it will not assign the gateway IP. With this configuration, dnsmasq will pass both routes to instances. This way, metadata will be routed correctly without any changes on the external gateway.
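The subnet described above can be sketched as a request body built in Python. This is an illustration under several assumptions: the function name is hypothetical, the body uses the `gateway_ip`, `host_routes` and `allocation_pools` subnet attributes in the way we understand the v2.0 API to accept them, and the logic only targets small IPv4 subnets because it enumerates every host address.

```python
from ipaddress import ip_address, ip_network


def metadata_friendly_subnet(network_id: str, cidr: str, gateway: str) -> dict:
    """Build a subnet body with no gateway_ip and a static default route."""
    net = ip_network(cidr)
    gw = ip_address(gateway)
    if gw not in net:
        raise ValueError("gateway must be inside the subnet")

    hosts = list(net.hosts())
    # Allocation pools cover every host address except the gateway,
    # so DHCP never hands the gateway address to an instance.
    pools = []
    for chunk in ([h for h in hosts if h < gw], [h for h in hosts if h > gw]):
        if chunk:
            pools.append({"start": str(chunk[0]), "end": str(chunk[-1])})

    return {"subnet": {
        "network_id": network_id,
        "ip_version": net.version,
        "cidr": str(net),
        "gateway_ip": None,  # no gateway, so the metadata route is injected
        "host_routes": [{"destination": "0.0.0.0/0", "nexthop": str(gw)}],
        "allocation_pools": pools,
    }}
```

For example, `metadata_friendly_subnet("net-1", "10.0.0.0/24", "10.0.0.1")` yields a single pool from 10.0.0.2 to 10.0.0.254 and a default route through 10.0.0.1.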

OVS Bridges

An OVS bridge for provider traffic is created and configured on the nodes where single-network-node and single-compute are applied. Bridges are created, but physical interfaces are not added. An OVS bridge is not created on a Controller-only node.

When creating networks, you can specify the type and properties, such as Flat vs. VLAN, Shared vs. Tenant, or Provider vs. Overlay. These properties identify and determine the behavior and resources of instances attached to the network. The cookbooks will create bridges for the configuration that you specify, although they do not add physical interfaces to provider bridges. For example, if you specify a network type of GRE, a br-tun tunnel bridge will be created to handle overlay traffic.

The following use cases show how these OpenStack Networking features can be put to work.

Use Case: Single Flat Network

In the simplest use case, a single OpenStack Networking network exists. This is a "shared" network, meaning it is visible to all tenants via the OpenStack Networking API. Tenant VMs have a single NIC, and receive a fixed IP address from the subnet(s) associated with that network. This essentially maps to the FlatManager and FlatDHCPManager models provided by OpenStack Compute. Floating IPs are not supported.

It is common that an OpenStack Networking network is a "provider network", meaning it was created by the OpenStack administrator to map directly to an existing physical network in the data center. This allows the provider to use a physical router on that data center network as the gateway for VMs to reach the outside world. For each subnet on an external network, the gateway configuration on the physical router must be manually configured outside of OpenStack.

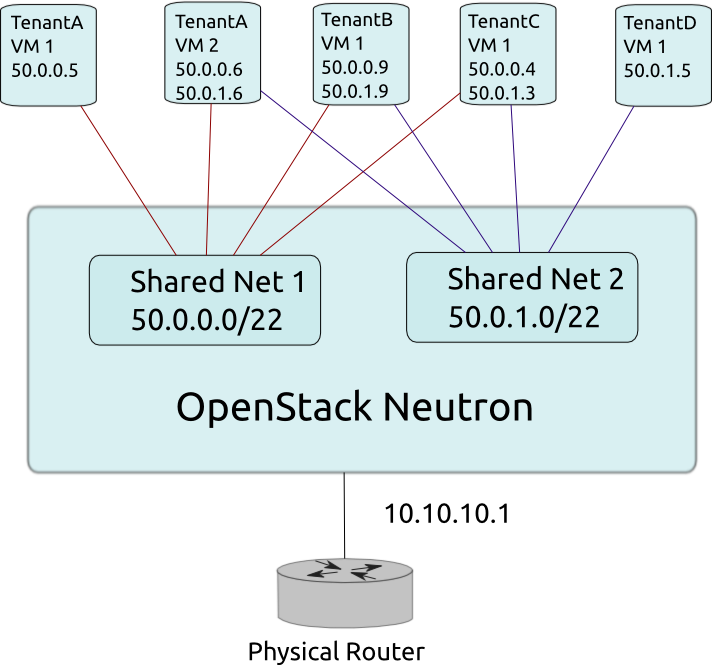

Use Case: Multiple Flat Network

This use case is very similar to the above Single Flat Network use case, except that tenants see multiple shared networks via the OpenStack Networking API and can choose which network (or networks) to plug into.

Use Case: Mixed Flat and Private Network

This use case is an extension of the above flat network use cases, in which tenants also optionally have access to private per-tenant networks. In addition to seeing one or more shared networks via the OpenStack Networking API, tenants can create additional networks that are only visible to users of that tenant. When creating VMs, those VMs can have NICs on any of the shared networks and/or any of the private networks belonging to the tenant. This enables the creation of "multi-tier" topologies using VMs with multiple NICs. It also supports a model where a VM acting as a gateway can provide services such as routing, NAT, or load balancing.

Use Case: Provider Router with Private Networks

This use case provides each tenant with one or more private networks, which connect to the outside world via an OpenStack Networking router. The case where each tenant gets exactly one network in this form maps to the same logical topology as the VlanManager in OpenStack Compute (of course, OpenStack Networking doesn't require VLANs). Using the OpenStack Networking API, the tenant would only see a network for each private network assigned to that tenant. The router object in the API is created and owned by the cloud admin.

This model supports giving VMs public addresses using "floating IPs", in which the router maps public addresses from the external network to fixed IPs on private networks. Hosts without floating IPs can still create outbound connections to the external network, as the provider router performs SNAT to the router's external IP. The IP address of the physical router is used as the gateway_ip of the external network subnet, so the provider has a default router for Internet traffic.
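The address translation described above can be modelled with a toy class. This is a conceptual sketch only, with hypothetical names and documentation-range addresses; it does not show how the L3 agent implements NAT. It also assumes that outbound traffic from a VM that has a floating IP leaves with that floating IP as its source, which the text above does not state explicitly.

```python
class ProviderRouter:
    """Toy model of floating IP mapping and SNAT on a provider router."""

    def __init__(self, external_ip: str):
        self.external_ip = external_ip   # the router's own external address
        self.floating_to_fixed = {}      # public IP -> private fixed IP

    def associate(self, floating_ip: str, fixed_ip: str) -> None:
        self.floating_to_fixed[floating_ip] = fixed_ip

    def outbound_source(self, fixed_ip: str) -> str:
        """Source address seen externally for traffic sent from fixed_ip."""
        for floating_ip, mapped in self.floating_to_fixed.items():
            if mapped == fixed_ip:
                return floating_ip       # assumed behaviour, see lead-in
        return self.external_ip          # no floating IP: SNAT to the router

    def inbound_destination(self, public_ip: str):
        """Fixed IP that receives traffic sent to public_ip, or None."""
        return self.floating_to_fixed.get(public_ip)


router = ProviderRouter("203.0.113.1")
router.associate("203.0.113.10", "10.0.0.5")
```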

The router provides L3 connectivity between private networks, meaning that different tenants can reach each other's instances unless additional filtering, such as security groups, is used. Because there is only a single router, tenant networks cannot use overlapping IPs. Thus, it is likely that the admin would create the private networks on behalf of tenants.
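An admin creating private networks behind a single router might want to confirm that the chosen subnets do not overlap. The sketch below uses Python's standard `ipaddress` module; the function name is hypothetical and the check is not part of OpenStack Networking itself.

```python
from ipaddress import ip_network
from itertools import combinations


def overlapping_subnets(cidrs):
    """Return every pair of CIDRs in `cidrs` whose address ranges overlap."""
    nets = [ip_network(c) for c in cidrs]
    return [(str(a), str(b)) for a, b in combinations(nets, 2) if a.overlaps(b)]
```

An empty result means the subnets can safely share one router; any returned pair identifies two tenant subnets that would conflict.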

Use Case: Per-tenant Routers with Private Networks

This is a more advanced router scenario in which each tenant gets at least one router, and potentially has access to the OpenStack Networking API to create additional routers. The tenant can create their own networks, potentially uplinking those networks to a router. This model enables tenant-defined multi-tier applications, with each tier being a separate network behind the router. Because there are multiple routers, tenant subnets can overlap without conflicting, since access to external networks all happens via SNAT or floating IPs. Each router uplink and floating IP is allocated from the external network subnet.

Security Groups

Security groups and security group rules allow administrators and tenants to specify the type of traffic and direction (ingress/egress) that is allowed to pass through a port. A security group is a container for security group rules.

When a port is created in OpenStack Networking, it is associated with a security group. If a security group is not specified, the port is associated with a 'default' security group. By default, this group drops all ingress traffic and allows all egress traffic. Rules can be added to this group in order to change the behaviour.
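The default behaviour described above can be illustrated with a small model. The classes, field names and matching logic are simplifications invented for this sketch (real rules carry more attributes, such as address ranges); it only shows that traffic passes when at least one rule in the group permits it.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Rule:
    direction: str                   # "ingress" or "egress"
    protocol: Optional[str] = None   # None matches any protocol
    port: Optional[int] = None       # None matches any port


@dataclass
class SecurityGroup:
    name: str
    rules: List[Rule] = field(default_factory=list)

    def allows(self, direction: str, protocol: str, port: int) -> bool:
        """Traffic is allowed only if some rule in the group matches it."""
        return any(
            r.direction == direction
            and r.protocol in (None, protocol)
            and r.port in (None, port)
            for r in self.rules
        )


def default_group() -> SecurityGroup:
    # Default behaviour from the text: allow all egress, drop all ingress.
    return SecurityGroup("default", [Rule("egress")])


group = default_group()
group.rules.append(Rule("ingress", "tcp", 22))  # now permit inbound SSH
```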

To use the OpenStack Compute security group APIs, or to have OpenStack Compute orchestrate the creation of new ports for instances on specific security groups, additional configuration is needed. Set the config option security_group_api=neutron in /etc/nova/nova.conf on every node running nova-compute and nova-api, then restart nova-api and nova-compute to pick up the change. After that, you can use both the OpenStack Compute and OpenStack Networking security group APIs at the same time.
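As a rough illustration, the option could be set programmatically with Python's `configparser`. This sketch assumes the option belongs in the `[DEFAULT]` section, which the text above does not state, and the function name is hypothetical. Note that `configparser` discards comments and rejects duplicate keys, so for a real nova.conf a manual edit or a dedicated configuration tool may be the safer choice.

```python
import configparser
import io


def enable_neutron_security_groups(nova_conf_text: str) -> str:
    """Return nova.conf text with security_group_api=neutron set."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(nova_conf_text)
    # Assumption: the option lives in the [DEFAULT] section.
    parser["DEFAULT"]["security_group_api"] = "neutron"
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


updated = enable_neutron_security_groups("[DEFAULT]\nverbose = True\n")
```

After writing the result back to the file, nova-api and nova-compute still need to be restarted as described above.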

Authentication and Authorization

OpenStack Networking uses the OpenStack Identity service (project name keystone) as the default authentication service. When OpenStack Identity is enabled, users submitting requests to the OpenStack Networking service must provide an authentication token in the X-Auth-Token request header. The token should have been obtained by authenticating with the OpenStack Identity endpoint. For more information about authentication with OpenStack Identity, refer to the OpenStack Identity documentation. When OpenStack Identity is enabled, it is not mandatory to specify tenant_id for resources in create requests, because the tenant identifier is derived from the authentication token. Note that the default authorization settings only allow administrative users to create resources on behalf of a different tenant.

OpenStack Networking uses information received from OpenStack Identity to authorize user requests. OpenStack Networking handles two kinds of authorization policies:

Operation-based: policies specify access criteria for specific operations, possibly with fine-grained control over specific attributes;

Resource-based: whether access to a specific resource might be granted or not according to the permissions configured for the resource (currently available only for the network resource).

The actual authorization policies enforced in OpenStack Networking might vary from deployment to deployment.
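To make the token-based authentication described in this section concrete, the sketch below assembles (but does not send) a create request for a network. The function name, endpoint and token value are placeholders invented for the example; only the X-Auth-Token header, the /v2.0/networks path and the optional tenant_id come from the text.

```python
import json


def build_create_network_request(endpoint: str, token: str, name: str):
    """Return (method, url, headers, body) for a network create request."""
    url = endpoint.rstrip("/") + "/v2.0/networks"
    headers = {
        "X-Auth-Token": token,             # token obtained from OpenStack Identity
        "Content-Type": "application/json",
    }
    # tenant_id is omitted on purpose: it is derived from the token.
    body = json.dumps({"network": {"name": name}})
    return "POST", url, headers, body


# Placeholder endpoint and token, for illustration only.
method, url, headers, body = build_create_network_request(
    "http://controller.example:9696/", "EXAMPLE_TOKEN", "net1"
)
```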

The policy engine reads entries from the policy.json file. The actual location of this file might vary from distribution to distribution. Entries can be updated while the system is running, and no service restart is required: every time the policy file is updated, the policies are automatically reloaded. Currently, the only way of updating such policies is to edit the policy file.

This section uses both the terms "policy" and "rule" to refer to objects that are specified in the same way in the policy file; in other words, there are no syntax differences between a rule and a policy. A policy is something that is matched directly by the OpenStack Networking policy engine, whereas a rule is an element of such a policy, which is then evaluated. For instance, in create_subnet: [["admin_or_network_owner"]], create_subnet is regarded as a policy, whereas admin_or_network_owner is regarded as a rule.

Policies are triggered by the OpenStack Networking policy engine whenever one of them matches an OpenStack Networking API operation or a specific attribute being used in a given operation. For instance, the create_subnet policy is triggered every time a POST /v2.0/subnets request is sent to the OpenStack Networking server; on the other hand, create_network:shared is triggered every time the shared attribute is explicitly specified (and set to a value different from its default) in a POST /v2.0/networks request. Policies can also relate to specific API extensions; for instance, extension:provider_network:set is triggered if the attributes defined by the Provider Network extension are specified in an API request.
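The triggering behaviour in the two examples above can be approximated as follows. This is a toy approximation, not the engine's real matching code: the function name, the naive plural-to-singular conversion and the `attribute_defaults` mapping are all assumptions, and only create (POST) requests are handled.

```python
def triggered_policies(method, path, body, attribute_defaults):
    """Guess which policy names a create request would trigger."""
    if method != "POST":
        raise ValueError("this sketch only covers create (POST) requests")

    collection = path.rstrip("/").rsplit("/", 1)[-1]  # e.g. "subnets"
    resource = collection[:-1]                         # naive singular form
    operation = "create_" + resource

    names = [operation]
    # An attribute-level policy applies only when the attribute is given
    # explicitly and differs from its default value.
    for attr, default in attribute_defaults.items():
        if attr in body and body[attr] != default:
            names.append(operation + ":" + attr)
    return names
```

For example, a POST to /v2.0/networks with `{"shared": True}` and a default of `False` for shared yields both `create_network` and `create_network:shared`.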

An authorization policy can be composed of one or more rules. If more than one rule is specified, the policy evaluates successfully if any of the rules evaluates successfully; if an API operation matches multiple policies, then all the policies must evaluate successfully. Authorization rules are also recursive: once a rule is matched, it can be resolved to another rule, until a terminal rule is reached.

The OpenStack Networking policy engine currently defines the following kinds of terminal rules:

Role-based rules: evaluate successfully if the user submitting the request has the specified role. For instance "role:admin" is successful if the user submitting the request is an administrator.

Field-based rules: evaluate successfully if a field of the resource specified in the current request matches a specific value. For instance "field:networks:shared=True" is successful if the attribute shared of the network resource is set to true.

Generic rules: compare an attribute in the resource with an attribute extracted from the user's security credentials and evaluate successfully if the comparison is successful. For instance "tenant_id:%(tenant_id)s" is successful if the tenant identifier in the resource is equal to the tenant identifier of the user submitting the request.
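The recursive evaluation and the three kinds of terminal rules can be sketched with a small evaluator. This is not the real policy engine. It assumes that the outer list of an entry holds alternatives (any may succeed) and that each inner list holds conditions that must all hold; the text only states the "any" part. The sample rule definitions, including the simplified admin_or_network_owner, are hypothetical.

```python
def check(entry, rules, creds, target):
    """Evaluate a policy or rule name, or a terminal rule string."""
    if entry in rules:
        # Recursive case: resolve the name to its rule lists.
        return any(
            all(check(item, rules, creds, target) for item in alternative)
            for alternative in rules[entry]
        )

    kind, _, match = entry.partition(":")
    if kind == "role":                       # e.g. "role:admin"
        return match in creds.get("roles", [])
    if kind == "field":                      # e.g. "field:networks:shared=True"
        _, _, condition = match.partition(":")
        attr, _, expected = condition.partition("=")
        return str(target.get(attr)) == expected
    # Generic rule, e.g. "tenant_id:%(tenant_id)s": fill the pattern from
    # the resource and compare it with the user's credential of that name.
    try:
        expected = match % target
    except KeyError:
        return False
    return str(creds.get(kind)) == expected


# Hypothetical policy entries written in the nested-list style shown above.
RULES = {
    "admin_or_network_owner": [["role:admin"], ["tenant_id:%(tenant_id)s"]],
    "shared": [["field:networks:shared=True"]],
    "create_subnet": [["admin_or_network_owner"]],
    "get_network": [["admin_or_network_owner"], ["shared"]],
}

admin = {"roles": ["admin"], "tenant_id": "t-admin"}
member = {"roles": ["member"], "tenant_id": "t1"}
```

With these definitions, `check("create_subnet", RULES, member, {"tenant_id": "t1"})` succeeds because the member owns the resource, while the same call against a resource owned by another tenant fails unless the caller has the admin role.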

OpenStack Networking has the concept of fixed IPs and floating IPs. Fixed IPs are assigned to an instance on creation and stay the same until the instance is explicitly terminated. Floating IPs are IP addresses that can be dynamically associated with an instance. A floating IP can be disassociated and associated with another instance at any time.

The following floating IP operations are currently supported:

Create IP ranges under a certain group (only available for the admin role).

Allocate a floating IP to a certain tenant (only available for the admin role).

Deallocate a floating IP from a certain tenant.

Associate a floating IP with a given instance.

Disassociate a floating IP from a certain instance.

As shown by the above figure, nova-network-api supports the nova client floating IP commands. nova-network-api invokes the neutron client library to interact with the neutron server via its API. The data about floating IPs is stored in the neutron database. The neutron agent, which runs on the compute host, enforces the floating IP.
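The lifecycle formed by the operations listed above can be modelled with a toy class. The names are hypothetical, the addresses come from a documentation range, and the choice to refuse deallocation while an address is still associated is a decision made for this sketch rather than documented behaviour.

```python
class FloatingIpPool:
    """Toy model of the floating IP lifecycle."""

    def __init__(self, addresses):
        self.free = list(addresses)  # range created by an admin
        self.owner = {}              # floating IP -> tenant
        self.instance = {}           # floating IP -> instance

    def allocate(self, tenant):
        ip = self.free.pop(0)
        self.owner[ip] = tenant
        return ip

    def deallocate(self, ip):
        if ip in self.instance:
            raise ValueError("disassociate the floating IP first")
        del self.owner[ip]
        self.free.append(ip)

    def associate(self, ip, instance):
        if ip not in self.owner:
            raise KeyError("floating IP is not allocated to a tenant")
        self.instance[ip] = instance  # re-association simply overwrites

    def disassociate(self, ip):
        self.instance.pop(ip, None)


pool = FloatingIpPool(["203.0.113.10", "203.0.113.11"])
ip = pool.allocate("tenant-a")
pool.associate(ip, "vm-1")
pool.associate(ip, "vm-2")  # move the address to another instance
```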

Multiple Floating IP Pools

The L3 API in OpenStack Networking supports multiple floating IP pools. In OpenStack Networking, a floating IP pool is represented as an external network, and a floating IP is allocated from a subnet associated with the external network. Since each L3 agent can be associated with at most one external network, you need to run multiple L3 agents to define multiple floating IP pools. The 'gateway_external_network_id' option in the L3 agent configuration file indicates the external network that the L3 agent handles. You can run multiple L3 agent instances on one host.

In addition, when you run multiple L3 agents, make sure that handle_internal_only_routers is set to True for only one L3 agent in an OpenStack Networking deployment and set to False for all other L3 agents. Since the default value of this parameter is True, you need to configure it carefully.

Before starting the L3 agents, you need to create routers and external networks, then update the configuration files with the UUIDs of the external networks and start the L3 agents.
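Because the default of True makes this setting easy to get wrong, a small sanity check over the intended agent settings can help. The sketch below works on plain dictionaries rather than real l3_agent.ini files; the function name and the sample UUID strings are placeholders.

```python
def check_l3_agents(agents):
    """Return a list of problems found in a set of L3 agent settings."""
    problems = []
    for index, agent in enumerate(agents):
        if not agent.get("gateway_external_network_id"):
            problems.append(f"agent {index} has no gateway_external_network_id")

    # A missing handle_internal_only_routers falls back to its default, True.
    handlers = [a for a in agents if a.get("handle_internal_only_routers", True)]
    if len(handlers) != 1:
        problems.append(
            f"{len(handlers)} agents have handle_internal_only_routers=True; "
            "expected exactly 1"
        )
    return problems


good = [
    {"gateway_external_network_id": "EXT-NET-1-UUID",
     "handle_internal_only_routers": True},
    {"gateway_external_network_id": "EXT-NET-2-UUID",
     "handle_internal_only_routers": False},
]
# Both agents below rely on the default, so both would handle internal routers.
bad = [
    {"gateway_external_network_id": "EXT-NET-1-UUID"},
    {"gateway_external_network_id": "EXT-NET-2-UUID"},
]
```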

For the first agent, invoke it with the following l3_agent.ini where handle_internal_only_routers is True.