
Geographically Distributed Replica Sets


Adding members to a replica set in multiple data centers adds redundancy and provides fault tolerance if one data center is unavailable. Members in additional data centers should have a priority of 0 to prevent them from becoming primary.

For example, a geographically distributed replica set might have the following architecture:

- One primary in the main data center.

- One secondary member in the main data center. This member can become primary if elected, for example when the current primary becomes unavailable.

- One priority 0 member in a second data center. This member cannot become primary.
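The three-member layout above can be written as a replica set configuration document, the same shape of document you would pass to `rs.initiate()` in the mongo shell. The sketch below models it as a Python dict. The hostnames and the set name `rs0` are hypothetical, and `electable_members` is an illustrative helper, not part of any MongoDB driver. It relies on MongoDB's documented default of `priority: 1` when the field is omitted.

```python
# Hypothetical hostnames and set name; field names (_id, members, host,
# priority) follow the MongoDB replica set configuration document.
config = {
    "_id": "rs0",
    "members": [
        # Main data center: primary and an electable secondary.
        {"_id": 0, "host": "dc1-a.example.net:27017", "priority": 1},
        {"_id": 1, "host": "dc1-b.example.net:27017", "priority": 1},
        # Second data center: priority 0, so it replicates but is never elected.
        {"_id": 2, "host": "dc2-a.example.net:27017", "priority": 0},
    ],
}


def electable_members(cfg):
    """Return hosts that may become primary (priority > 0; MongoDB defaults to 1)."""
    return [m["host"] for m in cfg["members"] if m.get("priority", 1) > 0]


print(electable_members(config))
```

Only the two main data center members appear in the result, which is the point of giving the remote member priority 0.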

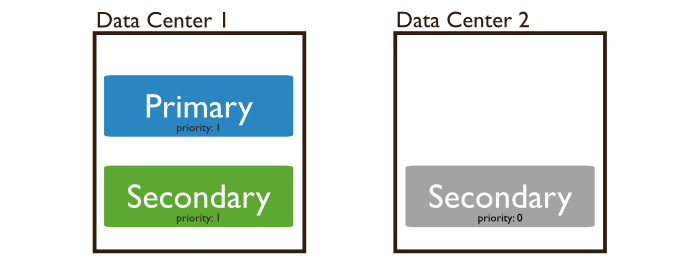

In the replica set shown above, the primary and one secondary are in Data Center 1, while Data Center 2 has a priority 0 secondary that cannot become primary.

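If the primary becomes unavailable, the replica set elects a new primary from Data Center 1. If the data centers cannot connect to each other, the member in Data Center 2 will not become primary; the two members in Data Center 1 still form a majority of the set, so a primary remains available there.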
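The failure scenarios above can be checked with a deliberately simplified election model: a group of reachable members can elect a primary only if it holds a strict majority of the set's votes, and only non-arbiter members with priority greater than 0 can be elected. This is a sketch, not MongoDB's actual election protocol; it ignores oplog recency, election timeouts, and other details. The hostnames and the `election_candidates` helper are hypothetical; `votes`, `priority`, and `arbiterOnly` are real configuration fields, with `votes` defaulting to 1.

```python
# Same three-member layout as described above; hostnames are hypothetical.
config = {
    "_id": "rs0",
    "members": [
        {"_id": 0, "host": "dc1-a.example.net:27017", "priority": 1},
        {"_id": 1, "host": "dc1-b.example.net:27017", "priority": 1},
        {"_id": 2, "host": "dc2-a.example.net:27017", "priority": 0},
    ],
}


def election_candidates(cfg, reachable_ids):
    """Simplified model: hosts that could be elected among `reachable_ids`.

    Returns an empty list when the reachable members lack a strict majority
    of votes. Oplog recency and timing details are intentionally ignored.
    """
    voters = [m for m in cfg["members"] if m.get("votes", 1) > 0]
    reachable_votes = sum(1 for m in voters if m["_id"] in reachable_ids)
    if reachable_votes <= len(voters) // 2:
        return []  # No majority: nobody in this group can become primary.
    return [
        m["host"]
        for m in cfg["members"]
        if m["_id"] in reachable_ids
        and m.get("priority", 1) > 0
        and not m.get("arbiterOnly", False)
    ]


# Primary (member 0) is down: the Data Center 1 secondary can take over.
print(election_candidates(config, {1, 2}))  # ['dc1-b.example.net:27017']
# Data centers partitioned: Data Center 1 keeps a majority ...
print(election_candidates(config, {0, 1}))
# ... while Data Center 2 on its own cannot elect anyone.
print(election_candidates(config, {2}))  # []
```

The last call also describes the case where Data Center 1 is lost entirely: the remaining member has neither a vote majority nor a nonzero priority, which is why recovery from Data Center 2 is a manual step.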

If Data Center 1 becomes unavailable, you can manually recover the data set from Data Center 2 with minimal downtime.

To facilitate elections, the main data center should hold a majority of the voting members. Also ensure that the set has an odd number of voting members. If adding a member in another data center results in an even number of voting members, deploy an arbiter. For more information on elections, see Replica Set Elections.
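The two rules above (odd number of voting members, majority in the main data center) can be checked mechanically. In the sketch below, the `DATA_CENTER` map from host to data center is a hypothetical bookkeeping structure, not something MongoDB maintains, and all hostnames are invented. Placing the arbiter in the main data center is also an assumption made here so that both checks pass; it is one possible placement, not the only one.

```python
# Hypothetical host -> data center map used only by this sketch.
DATA_CENTER = {
    "dc1-a.example.net:27017": "dc1",
    "dc1-b.example.net:27017": "dc1",
    "dc1-arb.example.net:27017": "dc1",
    "dc2-a.example.net:27017": "dc2",
    "dc2-b.example.net:27017": "dc2",
}


def topology_warnings(cfg, main_dc):
    """Flag an even voting-member count or a main data center without a vote majority."""
    # Members vote by default (votes: 1); arbiters vote as well.
    voters = [m for m in cfg["members"] if m.get("votes", 1) > 0]
    main_votes = sum(1 for m in voters if DATA_CENTER[m["host"]] == main_dc)
    warnings = []
    if len(voters) % 2 == 0:
        warnings.append(f"even number of voting members ({len(voters)})")
    if main_votes <= len(voters) // 2:
        warnings.append(f"{main_dc} has {main_votes} of {len(voters)} votes, not a majority")
    return warnings


# A second priority 0 member in dc2 makes the voting count even.
four_members = {
    "_id": "rs0",
    "members": [
        {"_id": 0, "host": "dc1-a.example.net:27017", "priority": 1},
        {"_id": 1, "host": "dc1-b.example.net:27017", "priority": 1},
        {"_id": 2, "host": "dc2-a.example.net:27017", "priority": 0},
        {"_id": 3, "host": "dc2-b.example.net:27017", "priority": 0},
    ],
}
print(topology_warnings(four_members, "dc1"))
# ['even number of voting members (4)', 'dc1 has 2 of 4 votes, not a majority']

# Adding an arbiter (placed in dc1 in this sketch) restores both properties.
with_arbiter = {
    "_id": "rs0",
    "members": four_members["members"]
    + [{"_id": 4, "host": "dc1-arb.example.net:27017", "arbiterOnly": True, "priority": 0}],
}
print(topology_warnings(with_arbiter, "dc1"))  # []
```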
