Deploy a Geographically Redundant Replica Set¶

Overview¶

This tutorial outlines the process for deploying a replica set with members in multiple locations. The tutorial addresses three-member sets, four-member sets, and sets with more than four members.

For appropriate background, see Replication and Replica Set Deployment Architectures. For related tutorials, see Deploy a Replica Set and Add Members to a Replica Set.

Considerations¶

While replica sets provide basic protection against single-instance failure, replica sets whose members are all located in a single facility are susceptible to errors in that facility. Power outages, network interruptions, and natural disasters are all issues that can affect replica sets whose members are colocated. To protect against these classes of failures, deploy a replica set with one or more members in a geographically distinct facility or data center to provide redundancy.

Prerequisites¶

In general, the requirements for any geographically redundant replica set are as follows:

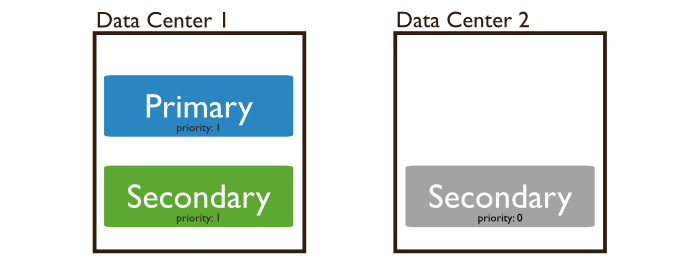

- Ensure that a majority of the voting members are within a primary facility, “Site A”. This includes priority 0 members and arbiters. Deploy other members in secondary facilities, “Site B”, “Site C”, etc., to provide additional copies of the data. See Determine the Distribution of Members for more information on the voting requirements for geographically redundant replica sets.

- If you deploy a replica set with an even number of members, deploy an arbiter in Site A. The arbiter must be in Site A to keep the majority there.

For instance, a three-member replica set needs two members in Site A and one member in a secondary facility, Site B. Site A should be the same facility as, or very close to, your primary application infrastructure (i.e., application servers, caching layer, users, etc.).

A four-member replica set should have at least two members in Site A, with the remaining members in one or more secondary sites, as well as a single arbiter in Site A.
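
The placement rule described above can be checked before any server is started. The following is a minimal planning sketch, not part of MongoDB: `siteHoldsMajority`, the `site` label, and the plan layout are hypothetical names chosen for illustration, and each entry is assumed to carry one vote.

```javascript
// Hypothetical planning helper; not part of the mongo shell or any driver.
// Each planned member is { host, site, votes }. The `site` label exists only
// in this sketch; `votes` is 1 for voting members, including arbiters.
function siteHoldsMajority(members, site) {
  var total = 0;
  var inSite = 0;
  for (var i = 0; i < members.length; i++) {
    total += members[i].votes;
    if (members[i].site === site) {
      inSite += members[i].votes;
    }
  }
  // A strict majority means more than half of all votes.
  return inSite > total / 2;
}

// Three-member set: two members in Site A, one in Site B.
var threeMemberPlan = [
  { host: "mongodb0.example.net", site: "A", votes: 1 },
  { host: "mongodb1.example.net", site: "A", votes: 1 },
  { host: "mongodb2.example.net", site: "B", votes: 1 }
];

console.log(siteHoldsMajority(threeMemberPlan, "A")); // true
console.log(siteHoldsMajority(threeMemberPlan, "B")); // false
```

Adding the arbiter of a four-member plan as one more entry with `site: "A"` lets the same check confirm that Site A still holds the majority.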

For all configurations in this tutorial, deploy each replica set member on a separate system. Although you may deploy more than one replica set member on a single system, doing so reduces the redundancy and capacity of the replica set. Such deployments are typically for testing purposes and beyond the scope of this tutorial.

This tutorial assumes you have installed MongoDB on each system that will be part of your replica set. If you have not already installed MongoDB, see the installation tutorials.

Procedures¶

General Considerations¶

Architecture¶

In production, deploy each member of the replica set to its own machine and, if possible, bind to the standard MongoDB port of 27017. Use the bind_ip option to ensure that MongoDB listens for connections from applications on configured addresses.

For geographically distributed replica sets, ensure that the majority of the set’s mongod instances reside in the primary site.

See Replica Set Deployment Architectures for more information.

Connectivity¶

Ensure that network traffic can pass between all members of the set and all clients in the network securely and efficiently. Consider the following:

- Establish a virtual private network. Ensure that your network topology routes all traffic between members within a single site over the local area network.

- Configure access control to prevent connections from unknown clients to the replica set.

- Configure networking and firewall rules so that incoming and outgoing packets are permitted only on the default MongoDB port and only from within your deployment.

Finally, ensure that each member of a replica set is accessible by way of resolvable DNS or hostnames. Either configure your DNS names appropriately or set up your systems’ /etc/hosts file to reflect this configuration.

Configuration¶

Specify the run time configuration on each system in a configuration file stored in /etc/mongod.conf or a related location. Create the directory where MongoDB stores data files before deploying MongoDB.

For more information about run time options and other configuration options, see Configuration File Options.

Deploy a Geographically Redundant Three-Member Replica Set¶

Start each member of the replica set with the appropriate options.¶

For each member, start a mongod and specify the replica set name through the replSet option. Specify any other parameters specific to your deployment. For replication-specific parameters, see Replication Options.

If your application connects to more than one replica set, each set should have a distinct name. Some drivers group replica set connections by replica set name.

The following example specifies the replica set name through the --replSet command-line option:

mongod --replSet "rs0"

You can also specify the replica set name in the configuration file. To start mongod with a configuration file, specify the file with the --config option:

mongod --config $HOME/.mongodb/config

In production deployments, you can configure an init script to manage this process. Init scripts are beyond the scope of this document.

Initiate the replica set.¶

Use rs.initiate() on one and only one member of the replica set:

rs.initiate()

MongoDB initiates a set that consists of the current member and that uses the default replica set configuration.

Verify the initial replica set configuration.¶

Use rs.conf() to display the replica set configuration object:

rs.conf()

The replica set configuration object resembles the following:

{

"_id" : "rs0",

"version" : 1,

"members" : [

{

"_id" : 1,

"host" : "mongodb0.example.net:27017"

}

]

}

Add the remaining members to the replica set.¶

Add the remaining members with the rs.add() method. You must be connected to the primary to add members to a replica set.

rs.add() can, in some cases, trigger an election. If the mongod you are connected to becomes a secondary, you need to connect the mongo shell to the new primary to continue adding new replica set members. Use rs.status() to identify the primary in the replica set.

The following example adds two members:

rs.add("mongodb1.example.net")

rs.add("mongodb2.example.net")

When complete, you have a fully functional replica set. The new replica set will elect a primary.
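
If an election moves the primary while you are adding members, the rs.status() output tells you where to reconnect. The following sketch shows one way to pick the primary out of that output. `findPrimary` is a hypothetical helper, and the sample document is trimmed to the `name` and `stateStr` fields that the helper reads; real output contains many more fields.

```javascript
// Hypothetical helper: returns the host name of the member that reports
// the PRIMARY state in an rs.status() document, or null if none does.
function findPrimary(status) {
  for (var i = 0; i < status.members.length; i++) {
    if (status.members[i].stateStr === "PRIMARY") {
      return status.members[i].name;
    }
  }
  return null;
}

// Sample shaped like rs.status() output, trimmed for illustration.
var sampleStatus = {
  set: "rs0",
  members: [
    { _id: 1, name: "mongodb0.example.net:27017", stateStr: "SECONDARY" },
    { _id: 2, name: "mongodb1.example.net:27017", stateStr: "PRIMARY" },
    { _id: 3, name: "mongodb2.example.net:27017", stateStr: "SECONDARY" }
  ]
};

console.log(findPrimary(sampleStatus)); // mongodb1.example.net:27017
```

In a mongo shell session, the equivalent call would be findPrimary(rs.status()).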

Configure the outside member as a priority 0 member.¶

Configure the member located in Site B (in this example, mongodb2.example.net) as a priority 0 member.

View the replica set configuration to determine the members array position for the member. Keep in mind the array position is not the same as the _id:

rs.conf()

Copy the replica set configuration object to a variable (to cfg in the example below). Then, in the variable, set the correct priority for the member. Then pass the variable to rs.reconfig() to update the replica set configuration.

For example, to set priority for the third member in the array (i.e., the member at position 2), issue the following sequence of commands:

cfg = rs.conf()

cfg.members[2].priority = 0

rs.reconfig(cfg)
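
Because the array position is easy to confuse with the _id, it can be safer to look the position up by hostname instead of counting by eye. The sketch below assumes a hypothetical helper, `memberIndexByHost`, and a sample configuration whose host strings include the port; match against whatever rs.conf() actually shows in your deployment.

```javascript
// Hypothetical helper: returns the position in cfg.members of the member
// whose host matches, or -1 if no member matches. This position is what
// cfg.members[...] expects; it is unrelated to the member's _id.
function memberIndexByHost(cfg, host) {
  for (var i = 0; i < cfg.members.length; i++) {
    if (cfg.members[i].host === host) {
      return i;
    }
  }
  return -1;
}

// Sample shaped like rs.conf() output; the values are illustrative.
var sampleCfg = {
  _id: "rs0",
  version: 3,
  members: [
    { _id: 1, host: "mongodb0.example.net:27017" },
    { _id: 2, host: "mongodb1.example.net:27017" },
    { _id: 3, host: "mongodb2.example.net:27017" }
  ]
};

var idx = memberIndexByHost(sampleCfg, "mongodb2.example.net:27017");
if (idx === -1) {
  throw new Error("host not found in configuration");
}
sampleCfg.members[idx].priority = 0;
console.log(idx); // 2, although this member's _id is 3
```

In the mongo shell, you would apply the same lookup to the object returned by rs.conf() and then pass the modified object to rs.reconfig().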

Note

The rs.reconfig() shell method can force the current primary to step down, causing an election. When the primary steps down, all clients will disconnect. This is the intended behavior. While most elections complete within a minute, always make sure any replica configuration changes occur during scheduled maintenance periods.

After these commands return, you have a geographically redundant three-member replica set.

Deploy a Geographically Redundant Four-Member Replica Set¶

A geographically redundant four-member deployment has two additional considerations:

One host (e.g. mongodb4.example.net) must be an arbiter. This host can run on a system that is also used for an application server or on the same machine as another MongoDB process.

You must decide how to distribute your systems. There are three possible architectures for the four-member replica set:

- Three members in Site A, one priority 0 member in Site B, and an arbiter in Site A.

- Two members in Site A, two priority 0 members in Site B, and an arbiter in Site A.

- Two members in Site A, one priority 0 member in Site B, one priority 0 member in Site C, and an arbiter in Site A.

In most cases, the first architecture is preferable because it is the least complex.

To deploy a geographically redundant four-member set:¶

Start each member of the replica set with the appropriate options.¶

For each member, start a mongod and specify the replica set name through the replSet option. Specify any other parameters specific to your deployment. For replication-specific parameters, see Replication Options.

If your application connects to more than one replica set, each set should have a distinct name. Some drivers group replica set connections by replica set name.

The following example specifies the replica set name through the --replSet command-line option:

mongod --replSet "rs0"

You can also specify the replica set name in the configuration file. To start mongod with a configuration file, specify the file with the --config option:

mongod --config $HOME/.mongodb/config

In production deployments, you can configure an init script to manage this process. Init scripts are beyond the scope of this document.

Initiate the replica set.¶

Use rs.initiate() on one and only one member of the replica set:

rs.initiate()

MongoDB initiates a set that consists of the current member and that uses the default replica set configuration.

Verify the initial replica set configuration.¶

Use rs.conf() to display the replica set configuration object:

rs.conf()

The replica set configuration object resembles the following:

{

"_id" : "rs0",

"version" : 1,

"members" : [

{

"_id" : 1,

"host" : "mongodb0.example.net:27017"

}

]

}

Add the remaining members to the replica set.¶

Use rs.add() in a mongo shell connected to the current primary. The commands should resemble the following:

rs.add("mongodb1.example.net")

rs.add("mongodb2.example.net")

rs.add("mongodb3.example.net")

When complete, you should have a fully functional replica set. The new replica set will elect a primary.

Add the arbiter.¶

In the same shell session, issue the following command to add the arbiter (e.g. mongodb4.example.net):

rs.addArb("mongodb4.example.net")
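
After adding the arbiter, you can confirm that the set has an odd number of votes. The following is a minimal sketch: `countVotes` is a hypothetical helper, the sample configuration is illustrative, and the helper assumes that a member without an explicit `votes` field has one vote.

```javascript
// Hypothetical helper: counts the votes in an rs.conf()-style document.
// Assumption: a member with no explicit `votes` field has one vote.
// Arbiters (arbiterOnly: true) vote but hold no data.
function countVotes(cfg) {
  var total = 0;
  for (var i = 0; i < cfg.members.length; i++) {
    var m = cfg.members[i];
    total += (m.votes === undefined) ? 1 : m.votes;
  }
  return total;
}

// Sample shaped like rs.conf() output for four members plus an arbiter.
var fourMemberCfg = {
  _id: "rs0",
  version: 5,
  members: [
    { _id: 1, host: "mongodb0.example.net:27017" },
    { _id: 2, host: "mongodb1.example.net:27017" },
    { _id: 3, host: "mongodb2.example.net:27017" },
    { _id: 4, host: "mongodb3.example.net:27017" },
    { _id: 5, host: "mongodb4.example.net:27017", arbiterOnly: true }
  ]
};

var votes = countVotes(fourMemberCfg);
console.log(votes);           // 5
console.log(votes % 2 === 1); // true: no tied elections
```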

Configure outside members as priority 0 members.¶

Configure each member located outside of Site A (e.g. mongodb3.example.net) as a priority 0 member.

View the replica set configuration to determine the members array position for the member. Keep in mind the array position is not the same as the _id:

rs.conf()

Copy the replica set configuration object to a variable (to cfg in the example below). Then, in the variable, set the correct priority for the member. Then pass the variable to rs.reconfig() to update the replica set configuration.

For example, to set priority for the third member in the array (i.e., the member at position 2), issue the following sequence of commands:

cfg = rs.conf()

cfg.members[2].priority = 0

rs.reconfig(cfg)

Note

The rs.reconfig() shell method can force the current primary to step down, causing an election. When the primary steps down, all clients will disconnect. This is the intended behavior. While most elections complete within a minute, always make sure any replica configuration changes occur during scheduled maintenance periods.

After these commands return, you have a geographically redundant four-member replica set.

Deploy a Geographically Redundant Set with More than Four Members¶

The above procedures detail the steps necessary for deploying a geographically redundant replica set. Larger replica set deployments follow the same steps, but have additional considerations:

- Never deploy more than seven voting members.

- If you have an even number of members, use the procedure for a four-member set. Ensure that a single facility, “Site A”, always has a majority of the members by deploying the arbiter in that site. For example, if a set has six members, deploy at least three voting members in addition to the arbiter in Site A, and the remaining members in alternate sites.

- If you have an odd number of members, use the procedure for a three-member set. Ensure that a single facility, “Site A”, always has a majority of the members of the set. For example, if a set has five members, deploy three members within Site A and two members in other facilities.

- If you have a majority of the members of the set outside of Site A and the network partitions to prevent communication between sites, the current primary in Site A will step down, even if none of the members outside of Site A are eligible to become primary.
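
The considerations above can be explored with a small model of a network partition. This is a simplified sketch, not MongoDB's election logic: `canHoldPrimary` and the `site` label are hypothetical. The model assumes that a side of the partition can keep or elect a primary only if it sees a strict majority of all votes and contains at least one member with a priority above 0.

```javascript
// Hypothetical model; each member is { site, votes, priority }.
// `reachableSites` lists the sites on one side of a partition.
function canHoldPrimary(members, reachableSites) {
  var totalVotes = 0;
  var visibleVotes = 0;
  var hasCandidate = false;
  for (var i = 0; i < members.length; i++) {
    var m = members[i];
    totalVotes += m.votes;
    if (reachableSites.indexOf(m.site) !== -1) {
      visibleVotes += m.votes;
      if (m.priority > 0) {
        hasCandidate = true;
      }
    }
  }
  return visibleVotes > totalVotes / 2 && hasCandidate;
}

// Recommended five-member layout: three in Site A, two priority 0 in Site B.
var goodPlan = [
  { site: "A", votes: 1, priority: 1 },
  { site: "A", votes: 1, priority: 1 },
  { site: "A", votes: 1, priority: 1 },
  { site: "B", votes: 1, priority: 0 },
  { site: "B", votes: 1, priority: 0 }
];

// Misplaced majority: two in Site A, three priority 0 members in Site B.
var badPlan = [
  { site: "A", votes: 1, priority: 1 },
  { site: "A", votes: 1, priority: 1 },
  { site: "B", votes: 1, priority: 0 },
  { site: "B", votes: 1, priority: 0 },
  { site: "B", votes: 1, priority: 0 }
];

console.log(canHoldPrimary(goodPlan, ["A"])); // true
console.log(canHoldPrimary(badPlan, ["A"]));  // false: Site A lacks a majority
console.log(canHoldPrimary(badPlan, ["B"]));  // false: no eligible member
```

With the misplaced majority, neither side of the partition can hold a primary, which matches the last consideration in the list above.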
