Replica Set Members

A replica set in MongoDB is a group of mongod processes that provide redundancy and high availability. The members of a replica set are:

- Primary. The primary receives all write operations.

- Secondaries. Secondaries replicate operations from the primary to maintain an identical data set. Secondaries may have additional configurations for special usage profiles. For example, secondaries may be non-voting or priority 0.

You can also maintain an arbiter as part of a replica set. Arbiters do not keep a copy of the data. However, arbiters play a role in the elections that select a primary if the current primary is unavailable.

The minimum requirements for a replica set are a primary, a secondary, and an arbiter. Most deployments, however, keep three members that store data: a primary and two secondary members.

Changed in version 3.0.0: A replica set can have up to 50 members, but only 7 of them can be voting members. [1] In previous versions, replica sets could have up to 12 members.
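The two limits above can be checked mechanically before a configuration is applied. The following is a minimal sketch in plain Python, not a MongoDB API: `check_member_limits` is a hypothetical helper, the host names are made up, and treating a missing `votes` field as one vote is an assumption of this example.

```python
MAX_MEMBERS = 50         # total members, per the 3.0 limit stated above
MAX_VOTING_MEMBERS = 7   # voting members, per the same limit


def check_member_limits(members):
    """Return a list of problems found in a list of member documents.

    Each member is a dict. A missing "votes" field is counted as one vote,
    which is an assumption of this sketch.
    """
    problems = []
    if len(members) > MAX_MEMBERS:
        problems.append(
            f"{len(members)} members exceeds the limit of {MAX_MEMBERS}"
        )
    voting = sum(1 for m in members if m.get("votes", 1) > 0)
    if voting > MAX_VOTING_MEMBERS:
        problems.append(
            f"{voting} voting members exceeds the limit of {MAX_VOTING_MEMBERS}"
        )
    return problems


# Hypothetical nine-member set: seven voters plus two non-voting secondaries.
members = [{"_id": i, "host": f"db{i}.example.net:27017"} for i in range(9)]
for extra in members[7:]:
    extra["votes"] = 0
    extra["priority"] = 0

print(check_member_limits(members))
```

An empty list means both limits are respected. Dropping the `votes: 0` settings from the last two members would make the same check report nine voting members.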

Primary

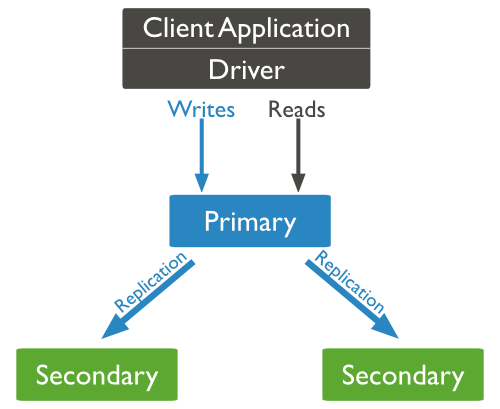

The primary is the only member in the replica set that receives write operations. MongoDB applies write operations on the primary and then records the operations on the primary’s oplog. Secondary members replicate this log and apply the operations to their data sets.

In the following three-member replica set, the primary accepts all write operations. Then the secondaries replicate the oplog to apply to their data sets.
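The flow described here (apply the write on the primary, record it in the oplog, let secondaries replay the log) can be illustrated with a toy in-memory model. This is only a sketch of the idea: the `Member` and `Primary` classes and the `replicate` function are hypothetical and do not reflect how mongod stores or ships its oplog.

```python
class Member:
    """Toy stand-in for a data-bearing member (not a real mongod)."""

    def __init__(self, name):
        self.name = name
        self.data = {}      # key -> value, standing in for the data set
        self.applied = 0    # number of oplog entries applied so far


class Primary(Member):
    def __init__(self, name):
        super().__init__(name)
        self.oplog = []     # ordered record of the writes applied here

    def write(self, key, value):
        # Apply the write to the data set, then record it in the oplog.
        self.data[key] = value
        self.oplog.append((key, value))
        self.applied = len(self.oplog)


def replicate(secondary, primary):
    """Apply the oplog entries that the secondary has not applied yet."""
    for key, value in primary.oplog[secondary.applied:]:
        secondary.data[key] = value
        secondary.applied += 1


primary = Primary("primary")
secondaries = [Member("secondary-1"), Member("secondary-2")]

primary.write("x", 1)
primary.write("y", 2)

# Only the first secondary has caught up; the second still lags behind.
replicate(secondaries[0], primary)
print(secondaries[0].data == primary.data)
print(secondaries[1].data)
```

The second secondary stays empty until `replicate` runs for it, which mirrors the fact that a secondary can briefly trail the primary.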

All members of the replica set can accept read operations. However, by default, an application directs its read operations to the primary member. See Read Preference for details on changing the default read behavior.

The replica set can have at most one primary. [2] If the current primary becomes unavailable, an election determines the new primary. See Replica Set Elections for more details.

Secondaries

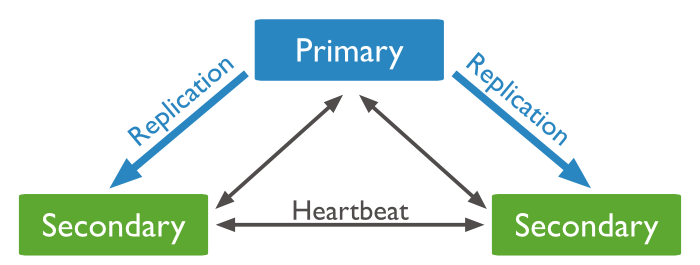

A secondary maintains a copy of the primary’s data set. To replicate data, a secondary applies operations from the primary’s oplog to its own data set in an asynchronous process. A replica set can have one or more secondaries.

The following three-member replica set has two secondary members. The secondaries replicate the primary’s oplog and apply the operations to their data sets.

Although clients cannot write data to secondaries, clients can read data from secondary members. See Read Preference for more information on how clients direct read operations to replica sets.
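One common place to express a read preference is the connection string. The sketch below only assembles such a string using the standard `replicaSet` and `readPreference` URI options; `replica_set_uri`, the host names, and the set name `rs0` are hypothetical, and no connection is opened.

```python
from urllib.parse import urlencode

# Read preference mode names accepted in a MongoDB connection string.
READ_PREFERENCE_MODES = {
    "primary",
    "primaryPreferred",
    "secondary",
    "secondaryPreferred",
    "nearest",
}


def replica_set_uri(hosts, replica_set, read_preference="primary"):
    """Build a connection string listing the given replica set members."""
    if read_preference not in READ_PREFERENCE_MODES:
        raise ValueError(f"unknown read preference: {read_preference!r}")
    options = urlencode(
        {"replicaSet": replica_set, "readPreference": read_preference}
    )
    return f"mongodb://{','.join(hosts)}/?{options}"


# Hypothetical hosts; "secondaryPreferred" lets reads go to secondaries.
hosts = [f"db{i}.example.net:27017" for i in range(3)]
uri = replica_set_uri(hosts, "rs0", "secondaryPreferred")
print(uri)
```

Leaving `read_preference` at its default keeps reads on the primary, which matches the default behavior described earlier.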

A secondary can become a primary. If the current primary becomes unavailable, the replica set holds an election to choose which of the secondaries becomes the new primary.

See Replica Set Elections for more details.

You can configure a secondary member for a specific purpose. You can configure a secondary to:

- Prevent it from becoming a primary in an election, which allows it to reside in a secondary data center or to serve as a cold standby. See Priority 0 Replica Set Members.

- Prevent applications from reading from it, which allows it to run applications that require separation from normal traffic. See Hidden Replica Set Members.

- Keep a running “historical” snapshot for use in recovery from certain errors, such as unintentionally deleted databases. See Delayed Replica Set Members.
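The three configurations listed above correspond to fields on a member's entry in the replica set configuration document. The sketch below builds such entries as plain Python dictionaries. The helper names and hosts are hypothetical. The field names `priority`, `hidden`, and `slaveDelay` are the ones used in this generation of MongoDB (later releases rename `slaveDelay`), and combining them as shown reflects the usual guidance that hidden and delayed members are also priority 0; check the pages referenced above for the exact rules in your version.

```python
import json


def priority_zero(member):
    """Member that can never be elected primary."""
    return {**member, "priority": 0}


def hidden(member):
    """Member that applications do not read from; also kept at priority 0."""
    return {**member, "priority": 0, "hidden": True}


def delayed(member, delay_seconds):
    """Member that applies operations after a delay, giving a historical view.

    Assumption of this sketch: a delayed member is also hidden and priority 0.
    """
    return {
        **member,
        "priority": 0,
        "hidden": True,
        "slaveDelay": delay_seconds,
    }


# Hypothetical member entries for a three-secondary layout.
standby = priority_zero({"_id": 1, "host": "dc2.example.net:27017"})
reporting = hidden({"_id": 2, "host": "reports.example.net:27017"})
one_hour_behind = delayed({"_id": 3, "host": "delay.example.net:27017"}, 3600)

for entry in (standby, reporting, one_hour_behind):
    print(json.dumps(entry))
```

Each helper returns a new dictionary rather than mutating its argument, so the same base entry can be reused to try different roles.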

Arbiter

An arbiter does not have a copy of the data set and cannot become a primary. Replica sets may have arbiters to add a vote in elections for primary. Arbiters always have exactly 1 vote, and thus allow replica sets to have an uneven number of voting members without the overhead of a member that replicates data.

Important

Do not run an arbiter on systems that also host the primary or the secondary members of the replica set.

Only add an arbiter to sets with even numbers of members. If you add an arbiter to a set with an odd number of members, the set may suffer from tied elections. To add an arbiter, see Add an Arbiter to Replica Set.
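The even/odd guidance comes down to majority arithmetic. The sketch below assumes that every member holds one vote and that electing a primary requires a strict majority of voting members; `majority` and `fault_tolerance` are hypothetical helper names.

```python
def majority(voting_members):
    """Smallest number of votes that is more than half of the total."""
    return voting_members // 2 + 1


def fault_tolerance(voting_members):
    """How many voters can be unavailable while a majority still exists."""
    return voting_members - majority(voting_members)


for data_bearing in (2, 3, 4):
    with_arbiter = data_bearing + 1
    print(
        f"{data_bearing} members: majority {majority(data_bearing)}, "
        f"tolerates {fault_tolerance(data_bearing)} down | "
        f"plus arbiter: majority {majority(with_arbiter)}, "
        f"tolerates {fault_tolerance(with_arbiter)} down"
    )
```

Under these assumptions, adding an arbiter to a two-member or four-member set raises the number of failures the set can survive, while adding one to a three-member set raises the required majority from 2 to 3 without improving fault tolerance and leaves an even number of voters that can split evenly.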

| [1] | Replica sets are the recommended solution for production, but a replica set supports at most 50 members in total. If your deployment requires more than 50 members, you’ll need to use master-slave replication. However, master-slave replication lacks automatic failover capabilities. |

| [2] | In some circumstances, two nodes in a replica set may transiently believe that they are the primary, but at most, one of them will be able to complete writes with { w: "majority" } write concern. The node that can complete { w: "majority" } writes is the current primary, and the other node is a former primary that has not yet recognized its demotion, typically due to a network partition. When this occurs, clients that connect to the former primary may observe stale data despite having requested read preference primary, and new writes to the former primary will eventually roll back. |
