Chunk Migration Across Shards

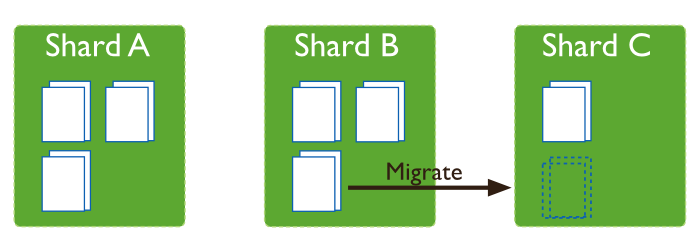

Chunk migration moves the chunks of a sharded collection from one shard to another and is part of the balancer process.

Chunk Migration

MongoDB migrates chunks in a sharded cluster to distribute the chunks of a sharded collection evenly among shards. Migrations may be either:

- Manual. Only use manual migration in limited cases, such as to distribute data during bulk inserts. See Migrating Chunks Manually for more details.

- Automatic. The balancer process automatically migrates chunks when there is an uneven distribution of a sharded collection’s chunks across the shards. See Migration Thresholds for more details.
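As a rough illustration of a manual migration, the sketch below builds the document for the moveChunk command. The namespace `records.people`, the shard key field `zipcode`, and the shard name `shard0001` are hypothetical placeholders, and `buildMoveChunk` is an illustrative helper, not part of MongoDB.

```javascript
// Hypothetical helper: builds a moveChunk command document.
// `find` selects the chunk that contains the given shard key value.
function buildMoveChunk(namespace, shardKeyQuery, destinationShard) {
  return {
    moveChunk: namespace,   // command name must be the first field
    find: shardKeyQuery,
    to: destinationShard
  };
}

var cmd = buildMoveChunk("records.people", { zipcode: "53187" }, "shard0001");

// In a mongo shell connected to a mongos, the command would be run
// against the admin database:
//   db.adminCommand(cmd)
```

Building the document separately from sending it makes it easy to inspect the command before it reaches the cluster.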

Chunk Migration Procedure

All chunk migrations use the following procedure:

1. The balancer process sends the moveChunk command to the source shard.

2. The source starts the move with an internal moveChunk command. During the migration process, operations to the chunk route to the source shard. The source shard is responsible for incoming write operations for the chunk.

3. The destination shard builds any indexes required by the source that do not exist on the destination.

4. The destination shard begins requesting documents in the chunk and starts receiving copies of the data.

5. After receiving the final document in the chunk, the destination shard starts a synchronization process to ensure that it has the changes to the migrated documents that occurred during the migration.

6. When fully synchronized, the destination shard connects to the config database and updates the cluster metadata with the new location for the chunk.

7. After the destination shard completes the update of the metadata, and once there are no open cursors on the chunk, the source shard deletes its copy of the documents.

Note

If the balancer needs to perform additional chunk migrations from the source shard, the balancer can start the next chunk migration without waiting for the current migration process to finish this deletion step. See Chunk Migration Queuing.

Changed in version 2.6: The source shard automatically archives the migrated documents by default. For more information, see moveChunk directory.

The migration process ensures consistency and maximizes the availability of chunks during balancing.
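The order of the steps can be easier to follow in a toy model. The sketch below simulates the procedure with plain in-memory objects; the shard objects, the `metadata` map, and the `migrateChunk` function are all hypothetical and do not reflect how MongoDB implements migration internally.

```javascript
// Toy in-memory model of the procedure above; not MongoDB's implementation.
function copyDocs(docs) {
  return JSON.parse(JSON.stringify(docs));
}

function migrateChunk(source, destination, metadata, chunkId, writesDuringCopy) {
  // The destination builds any indexes that the source has and it lacks.
  source.indexes.forEach(function (name) {
    if (destination.indexes.indexOf(name) === -1) {
      destination.indexes.push(name);
    }
  });

  // The destination requests and receives copies of the chunk's documents.
  destination.chunks[chunkId] = copyDocs(source.chunks[chunkId]);

  // Meanwhile the source shard still handles incoming writes for the chunk.
  writesDuringCopy.forEach(function (doc) {
    source.chunks[chunkId].push(doc);
  });

  // Synchronization: the destination catches up on changes made during the copy.
  destination.chunks[chunkId] = copyDocs(source.chunks[chunkId]);

  // The cluster metadata is updated with the new location of the chunk.
  metadata[chunkId] = destination.name;

  // The source deletes its copy of the documents.
  delete source.chunks[chunkId];
}

var shardA = { name: "shardA", indexes: ["_id_", "zipcode_1"], chunks: { c1: [{ _id: 1 }, { _id: 2 }] } };
var shardB = { name: "shardB", indexes: ["_id_"], chunks: {} };
var metadata = { c1: "shardA" };

migrateChunk(shardA, shardB, metadata, "c1", [{ _id: 3 }]);
```

In this model the write that arrives during the copy reaches the destination only through the synchronization step, which is why that step precedes the metadata update.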

Chunk Migration Queuing

To migrate multiple chunks from a shard, the balancer migrates the chunks one at a time. However, the balancer does not wait for the current migration’s delete phase to complete before starting the next chunk migration. See Chunk Migration for the chunk migration process and the delete phase.

This queuing behavior allows shards to unload chunks more quickly in a heavily imbalanced cluster, such as when performing initial data loads without pre-splitting and when adding new shards.

This behavior also affects the moveChunk command, so migration scripts that use the moveChunk command may proceed more quickly.

In some cases, the delete phases may persist longer. If multiple delete phases are queued but not yet complete, a crash of the replica set’s primary can orphan data from multiple migrations.

The _waitForDelete option, available as a setting for the balancer as well as for the moveChunk command, alters this behavior so that the delete phase of the current migration blocks the start of the next chunk migration. The _waitForDelete option is generally for internal testing purposes. For more information, see Wait for Delete.
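A minimal sketch of both places where _waitForDelete can be set follows. The namespace, shard key value, and shard name are hypothetical placeholders, and the shell calls in the comments assume a connection to a mongos.

```javascript
// moveChunk command document that waits for the delete phase to finish.
var moveCmd = {
  moveChunk: "records.people",      // hypothetical namespace
  find: { zipcode: "53187" },       // hypothetical shard key value
  to: "shard0001",                  // hypothetical destination shard
  _waitForDelete: true
};

// Update for the balancer settings document in the config database.
var balancerQuery = { _id: "balancer" };
var balancerUpdate = { $set: { _waitForDelete: true } };

// In a mongo shell connected to a mongos:
//   db.adminCommand(moveCmd)
//   db.getSiblingDB("config").settings.update(balancerQuery, balancerUpdate, { upsert: true })
```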

Chunk Migration and Replication

Changed in version 3.0: The default value of secondaryThrottle became true for all chunk migrations.

The new writeConcern field in the balancer configuration document allows you to specify the write concern semantics used with the _secondaryThrottle option.

By default, each document operation during chunk migration propagates to at least one secondary before the balancer proceeds with the next document, which is equivalent to a write concern of { w: 2 }. You can set the writeConcern option on the balancer configuration to set different write concern semantics.

To override this behavior and allow the balancer to continue without waiting for replication to a secondary, set the _secondaryThrottle parameter to false. See Change Replication Behavior for Chunk Migration to update the _secondaryThrottle parameter for the balancer.

For the moveChunk command, the secondaryThrottle parameter is independent of the _secondaryThrottle parameter for the balancer.
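The balancer settings described above could be expressed as update documents along the following lines. This is a sketch: `balancerThrottleUpdate` is a hypothetical helper, and the `{ w: "majority" }` write concern is only one example value.

```javascript
// Hypothetical helper: builds a $set update for the balancer settings document.
function balancerThrottleUpdate(secondaryThrottle, writeConcern) {
  var fields = { _secondaryThrottle: secondaryThrottle };
  if (writeConcern !== undefined) {
    fields.writeConcern = writeConcern;
  }
  return { $set: fields };
}

// Let the balancer continue without waiting for replication to a secondary.
var noThrottle = balancerThrottleUpdate(false);

// Keep throttling, but with different write concern semantics.
var majorityThrottle = balancerThrottleUpdate(true, { w: "majority" });

// In a mongo shell connected to a mongos, either update would be applied with:
//   db.getSiblingDB("config").settings.update({ _id: "balancer" }, noThrottle, { upsert: true })
```

These updates affect only the balancer; a moveChunk command issued by hand takes its own throttle setting.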

Independent of the secondaryThrottle setting, certain phases of the chunk migration have the following replication policy:

- MongoDB briefly pauses all application writes to the source shard before updating the config servers with the new location for the chunk, and resumes the application writes after the update. The chunk move requires all writes to be acknowledged by a majority of the members of the replica set both before and after committing the chunk move to the config servers.

- When an outgoing chunk migration finishes and cleanup occurs, all writes must be replicated to a majority of servers before further cleanup (from other outgoing migrations) or new incoming migrations can proceed.

moveChunk directory

Starting in MongoDB 2.6, sharding.archiveMovedChunks is enabled by default. With sharding.archiveMovedChunks enabled, the source shard archives the documents in the migrated chunks in a directory named after the collection namespace under the moveChunk directory in the storage.dbPath.
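To make the directory layout concrete, the sketch below derives the archive directory for a collection from the description above. The `archiveDirectory` helper, the `/data/db` path, and the `records.people` namespace are hypothetical; the names of the files inside the directory are not covered here.

```javascript
// Hypothetical helper: <dbPath>/moveChunk/<collection namespace>
function archiveDirectory(dbPath, namespace) {
  var base = dbPath.replace(/\/+$/, "");   // drop any trailing slashes
  return base + "/moveChunk/" + namespace;
}

var dir = archiveDirectory("/data/db/", "records.people");
// dir is "/data/db/moveChunk/records.people"
```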

Jumbo Chunks

During chunk migration, if the chunk exceeds the specified chunk size or if the number of documents in the chunk exceeds Maximum Number of Documents Per Chunk to Migrate, MongoDB does not migrate the chunk. Instead, MongoDB attempts to split the chunk. If the split is unsuccessful, MongoDB labels the chunk as jumbo to avoid repeated attempts to migrate the chunk.
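The decision described above can be sketched as a small function. This is a toy model, not MongoDB's code: `planMigration`, the field names, and the limit values are all hypothetical, and `trySplit` stands in for the split attempt.

```javascript
// Toy model of the jumbo-chunk decision; names and limits are illustrative.
function planMigration(chunk, limits, trySplit) {
  var tooLarge = chunk.sizeBytes > limits.maxChunkSizeBytes;
  var tooManyDocs = chunk.docCount > limits.maxDocsPerChunk;

  if (!tooLarge && !tooManyDocs) {
    return "migrate";
  }
  // The chunk is not migrated; a split is attempted instead.
  if (trySplit(chunk)) {
    return "split";
  }
  // The split failed: label the chunk to avoid repeated migration attempts.
  chunk.jumbo = true;
  return "jumbo";
}

var limits = { maxChunkSizeBytes: 64 * 1024 * 1024, maxDocsPerChunk: 250000 };

var small = { sizeBytes: 1024, docCount: 10 };
var oversized = { sizeBytes: 128 * 1024 * 1024, docCount: 10 };
var unsplittable = { sizeBytes: 1024, docCount: 300000 };

var r1 = planMigration(small, limits, function () { return true; });
var r2 = planMigration(oversized, limits, function () { return true; });
var r3 = planMigration(unsplittable, limits, function () { return false; });
```

Here `r1` is "migrate", `r2` is "split", and `r3` is "jumbo", with `unsplittable.jumbo` set to true.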
