By Sebastien Han from http://www.sebastien-han.fr/blog/2012/06/10/introducing-ceph-to-openstack/

If you use KVM or QEMU as your hypervisor, you can configure the Compute service to use Ceph RADOS block devices (RBD) for volumes.

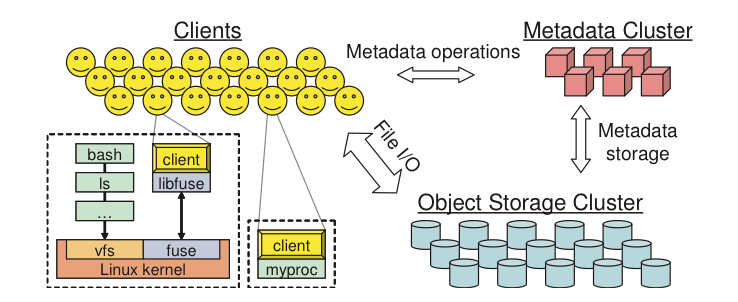

Ceph is a massively scalable, open source, distributed storage system. It comprises an object store, a block store, and a POSIX-compliant distributed file system. The platform can auto-scale to the exabyte level and beyond. It runs on commodity hardware, is self-healing and self-managing, and has no single point of failure. Ceph is in the Linux kernel and is integrated with the OpenStack cloud operating system. Due to its open source nature, you can install and use this portable storage platform in public or private clouds.

You can easily get confused by the naming: Ceph? RADOS?

RADOS (Reliable Autonomic Distributed Object Store) is an object store. RADOS distributes objects across the storage cluster and replicates objects for fault tolerance. RADOS contains the following major components:

Object Storage Device (OSD). The storage daemon (the RADOS service) and the location of your data. You must run this daemon on each server in your cluster. Each OSD can have an associated hard disk drive. For performance purposes, pool your hard disk drives with RAID arrays, logical volume management (LVM), or B-tree file system (Btrfs) pooling. By default, the following pools are created: data, metadata, and rbd.

Meta-Data Server (MDS). Stores metadata. MDSs build a POSIX file system on top of objects for Ceph clients. However, if you do not use the Ceph file system, you do not need a metadata server.

Monitor (MON). This lightweight daemon handles all communications with external applications and clients. It also provides a consensus for distributed decision making in a Ceph/RADOS cluster. For instance, when you mount a Ceph share on a client, you point to the address of a MON server. It checks the state and the consistency of the data. In an ideal setup, you must run at least three ceph-mon daemons on separate servers.
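The recommendation of at least three monitors follows from majority-based consensus. As a rough sketch, assuming the monitors need a strict majority of their number to agree (the function names here are illustrative and not part of Ceph):

```python
def quorum_size(monitors: int) -> int:
    """Smallest strict majority of a monitor set (assumed quorum rule)."""
    if monitors < 1:
        raise ValueError("need at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """Monitors that can fail while a majority is still reachable."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    # Print monitor count, majority size, and tolerated failures.
    for n in (1, 2, 3, 5):
        print(n, quorum_size(n), tolerated_failures(n))
```

Under this assumption, one or two monitors tolerate no failure at all, and three is the smallest count that survives the loss of one monitor.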

Ceph developers recommend that you use Btrfs as a file system for storage. However, neither Ceph nor Btrfs is ready for production, and it could be risky to use them in combination. XFS is an excellent alternative to Btrfs and might be a better choice for production environments. The ext4 file system is also compatible but does not exploit the power of Ceph.

Note: Currently, configure Ceph to use the XFS file system. Use Btrfs when it is stable enough for production.

See ceph.com/docs/master/rec/filesystem/ for more information about usable file systems.

To store and access your data, you can use the following storage systems:

RADOS. Use as an object store. This is the default storage mechanism.

RBD. Use as a block device. The Linux kernel RBD (RADOS block device) driver allows striping a Linux block device over multiple distributed object store data objects. It is compatible with the KVM RBD image.

CephFS. Use as a file, POSIX-compliant file system.
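To make the striping idea concrete, the following sketch maps a byte offset on a block device to the backing object that would hold it. It assumes the simplest possible layout, in which the image is cut into fixed-size objects of 4 MiB each. The object size and the `locate` and `objects_touched` helpers are assumptions for illustration, not values or functions taken from the RBD driver.

```python
from typing import Tuple

OBJECT_SIZE = 4 * 1024 * 1024  # assumed object size in bytes (4 MiB)


def locate(offset: int, object_size: int = OBJECT_SIZE) -> Tuple[int, int]:
    """Return (object index, offset inside that object) for a device offset."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    return divmod(offset, object_size)


def objects_touched(offset: int, length: int,
                    object_size: int = OBJECT_SIZE) -> range:
    """Indexes of every object covered by an I/O of `length` bytes."""
    if length <= 0:
        return range(0)
    first, _ = locate(offset, object_size)
    last, _ = locate(offset + length - 1, object_size)
    return range(first, last + 1)
```

A request that crosses an object boundary touches more than one object, which is what lets a single block device spread its data across the cluster.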

Ceph exposes its distributed object store (RADOS). You can access it through the following interfaces:

RADOS Gateway. Swift and Amazon-S3 compatible RESTful interface. See RADOS_Gateway for more information.

librados, and the related C/C++ bindings.

rbd and QEMU-RBD. Linux kernel and QEMU block devices that stripe data across multiple objects.

For detailed installation instructions and benchmarking information, see http://www.sebastien-han.fr/blog/2012/06/10/introducing-ceph-to-openstack/.

The following table contains the configuration options supported by the Ceph RADOS Block Device driver.

| Configuration option=Default value | Description |
|---|---|
| rbd_ceph_conf= | (StrOpt) path to the ceph configuration file to use |
| rbd_flatten_volume_from_snapshot=False | (BoolOpt) flatten volumes created from snapshots to remove dependency |
| rbd_max_clone_depth=5 | (IntOpt) maximum number of nested clones that can be taken of a volume before enforcing a flatten prior to next clone. A value of zero disables cloning |
| rbd_pool=rbd | (StrOpt) the RADOS pool in which rbd volumes are stored |
| rbd_secret_uuid=None | (StrOpt) the libvirt uuid of the secret for the rbd_user volumes |
| rbd_user=None | (StrOpt) the RADOS client name for accessing rbd volumes - only set when using cephx authentication |
| volume_tmp_dir=None | (StrOpt) where to store temporary image files if the volume driver does not write them directly to the volume |
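As an illustration of how these options might be set together, the sketch below renders a configuration fragment with Python's standard `configparser` module. It assumes the options belong in the `[DEFAULT]` section of the volume service's configuration file. The pool name, client name, UUID, and file path are placeholders rather than recommended values.

```python
import configparser
import io

# Placeholder values; replace them with the ones from your own deployment.
options = {
    "rbd_pool": "volumes",                   # hypothetical pool name
    "rbd_user": "volumes",                   # hypothetical cephx client name
    "rbd_secret_uuid": "00000000-0000-0000-0000-000000000000",  # placeholder
    "rbd_ceph_conf": "/etc/ceph/ceph.conf",  # assumed path to the Ceph config
    "rbd_flatten_volume_from_snapshot": "False",  # default from the table
    "rbd_max_clone_depth": "5",                   # default from the table
}

config = configparser.ConfigParser()
config.optionxform = str  # keep option names exactly as written
config["DEFAULT"] = options

# Render the fragment to a string instead of writing a real file.
buffer = io.StringIO()
config.write(buffer)
rendered = buffer.getvalue()
print(rendered)
```

Leave `rbd_user` and `rbd_secret_uuid` unset if the cluster does not use cephx authentication.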