How Diego Allocates Work

Page last updated: December 2, 2015

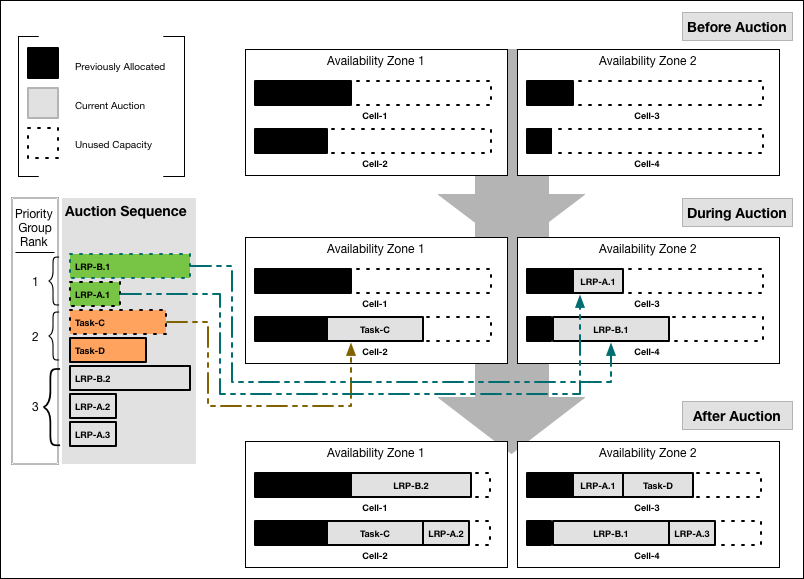

The Diego Auction balances application processes, also called jobs, over the virtual machines in an installation. When new processes need to be allocated to VMs, the Diego Auction determines which ones should run on which machines, to balance the load and optimize application availability and resilience. This topic explains how the Diego Auction works at a conceptual level.

The Diego Auction replaces the Cloud Controller DEA placement algorithm, which performed this function in the pre-Diego Cloud Foundry architecture.

Tasks and Long Running Processes

The Diego Auction distinguishes between two types of jobs: Tasks and Long-Running Processes (LRPs).

Tasks run once, for a finite amount of time. A common example is a staging task that compiles an app’s dependencies to form the droplet that runs the app on the platform. Other examples of tasks include making a database schema change, bulk importing data to initialize a database, and setting up a connected service.

Long-Running Processes run continuously, for an indefinite time. LRPs terminate only if stopped or killed, or if they crash. Examples include web servers, asynchronous background workers, and other applications and services that continuously accept and process input. To make high-demand LRPs more available, Diego may allocate multiple instances of the same application to run simultaneously on different VMs.

Each auction distributes a batch of work that consists of Tasks and LRPs that need to be allocated to VMs. These can include newly created jobs, jobs left unallocated in the previous auction, and jobs left orphaned by failed VMs. Diego does not redistribute jobs already running on VMs Only one auction can take place at a time, which prevents placement collisions from occurring.

Ordering the Auction Batch

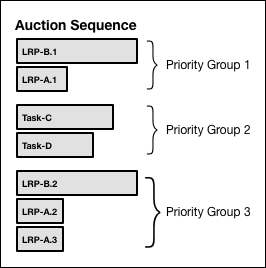

The Diego Auction algorithm allocates jobs to VM resources to fulfill the following outcomes, in decreasing priority order:

Keep at least one instance of each LRP running

Run all of the current Tasks

Distribute as much of the total desired LRP load as possble over the remaining available virtual machine resources, by spreading multiple LRP instances broadly across VMs and their Availability Zones

To accomplish this, each auction begins with the Auctioneer component organizing the batch jobs into a priority order. First, the Auctioneer sorts all jobs in decreasing order of memory load, so that larger units of work are placed first. Some of these jobs may be duplicate instances that Diego allocates for high-demand LRPs, to meet demand.

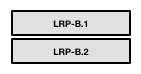

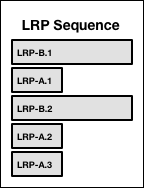

The Auctioneer then builds an ordered sequence of LRP instances by cycling through the LRPs in decreasing order of load, and adding instances of each onto the sequence until all LRPs reach their desired instance count.

For example, if the job LRP-A has a desired instance count of 3 and a load of 2, and job LRP-B has 2 desired instances and load 5, the Auctioneer would create instances as follows:

| Job | Desired Instances | Load | Jobs |

|---|---|---|---|

| LRP-A | 3 |  |

|

| LRP-B | 2 |  |

|

The Auctioneer would then order the instances like this:

The Auctioneer then builds an ordering for all jobs, both LRPs and Tasks. Reflecting the priority order above, first instances of LRPs are first priority, Tasks are next, and additional LRP instances follow. Adding one-time Task-C (load = 4) and Task-D (load = 3) to the above example, the priority order becomes:

Auctioning the Batch to the Cells

With all jobs sorted in priority order, the Auctioneer allocates each in turn to one of the installation’s application-running VMs. This is the auction part. Facilitating this process, each app VM has a resident Cell that monitors and allocates the machine’s operation. Each Cell has a Cell Rep that sends status updates to the BBS, to be read by the Cloud Controller. The Cell also participates in the auction on behalf of the virtual machine that it runs on.

Starting with the highest-priority job in the ordered sequence, the Auctioneer polls all the Cells on their fitness to run the currently-auctioned job. Cells “bid” to host each job according to the following priorities, in decreasing order:

The cell must have the correct software stack to host the job, and sufficient resources given its allocation so far during this auction.

Allocate LRP instances into an Availability Zone that’s not already hosting other instances of the same LRP.

Within an Availability Zone, allocate LRP instances to a Cell that’s not already hosting other instances of the same LRP.

Distribute total load evenly across all cells. In other words, all other things being equal, allocate the job to the cell that has lightest load so far.

To allocate our example jobs into Cells 1, 2, 3, and 4, with available load capacities of 6, 7, 8, and 9, respectively, and deployed into two Availability Zones, the Auctioneer might distribute the work as follows:

If the Auctioneer reaches the end of the sequence, having distributed all jobs to the Cells, it submits requests to the Cells to execute their allotted work. If the Cells ran out of capacity to handle all jobs in the sequence, the Auctioneer carries the unallocated jobs over and merges it into the next auction batch, to be allocated (hopefully) next time.

Triggering Another Auction

The Cloud Controller initiates new auctions to correct the cloud’s workload under two conditions: 1) When the actual number of running instances of LRPs doesn’t match the number desired, and 2) When a Cell fails, and its failure condition triggers an auction request.

In the first case, the Cloud Controller’s BBS component monitors the number of instances of each LRP that are currently running. The Converger component periodically compares this number with the desired number of LRP instances, as configured by the user. If the actual number falls short of what’s desired, the Converger triggers a new auction. In the case of a surplus of application instances, the Converger kills the extra instances and initiates another auction.

After any auction, if a Cell responds to its work request by saying that it cannot perform the work after all, the Auctioneer carries the failed work over into the next batch. If the Cell fails to respond entirely, for example if a connection times out, the Auctioneer does not automatically hold the work over into the next batch, because the Cell may be running it anyway and the system doesn’t want to double-assign. Instead, it defers to the Converger to continue monitoring the states of the Cells, and re-assign unassigned work later if needed.