Based on a blog post by Vish Ishaya

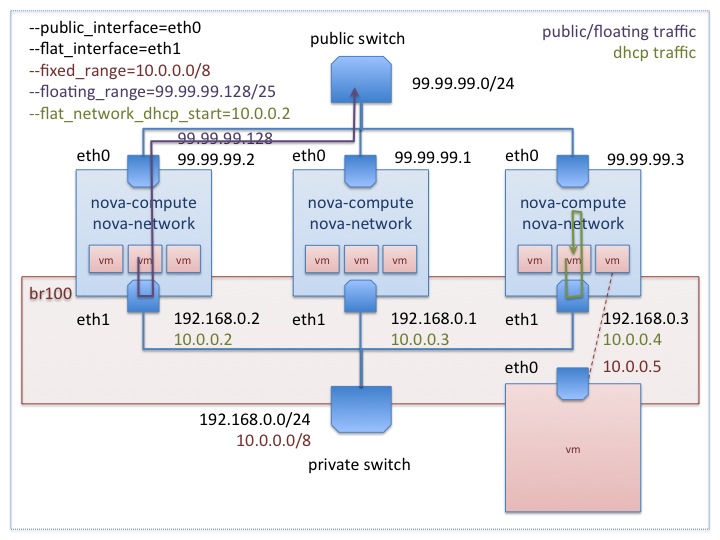

As illustrated in the diagram titled "Flat DHCP network, multiple interfaces, multiple servers" in the section Configuring Flat DHCP Networking, traffic from the VMs to the public internet has to go through the host running nova-network. DHCP is handled by nova-network as well, which listens on the gateway address of the fixed_range network. The compute hosts can optionally have their own public IPs, or they can use the network host as their gateway. This mode is pretty simple and it works in the majority of situations, but it has one major drawback: the network host is a single point of failure! If the network host goes down for any reason, it is impossible to communicate with the VMs. Here are some options for avoiding the single point of failure.

Option 1: Multi-host

To eliminate the network host as a single point of failure, Compute can be configured so that each compute host does all of the networking jobs for its own VMs. Each compute host performs NAT and DHCP and acts as the gateway for all of its own VMs. A single point of failure still exists in this scenario, namely the compute host itself, but it is the same point of failure that applies to all virtualized systems.

This setup requires assigning each host in the system an IP address on the VM network, and it adds a little overhead on the compute hosts. It is also possible to combine this with option 4 (hardware gateway) to remove the need for your compute hosts to act as gateways. In that hybrid version, the compute hosts no longer act as gateways for the VMs, and their only responsibilities are DHCP and NAT.

The resulting layout for the new HA networking option looks like the following diagram:

In contrast with the earlier diagram, all the hosts in the system run the nova-compute, nova-network and nova-api services. Each host provides DHCP and performs NAT on public traffic for the VMs running on that particular host. In this model, every compute host requires a connection to the public internet, and each host is also assigned an address from the VM network on which it listens for DHCP traffic. The nova-api service is needed so that each host can act as a metadata server for its instances.

To run in HA mode, each compute host must run the following services:

- nova-compute
- nova-network
- nova-api-metadata or nova-api

If the compute host is not an API endpoint, use the nova-api-metadata service. The nova.conf file should contain:

multi_host=True

send_arp_for_ha=true

The send_arp_for_ha option facilitates sending of gratuitous ARP messages to ensure the ARP caches on compute hosts are up to date.
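A gratuitous ARP message is an ARP packet in which a host announces its own IP-to-MAC mapping without being asked, so that neighbors refresh their caches. The Python sketch below builds one such frame (an unsolicited, broadcast ARP request whose sender and target IP are the same) to show what the neighbors receive. It only assembles bytes and sends nothing; it is not nova-network's implementation, and the helper name and the MAC and IP values are illustrative assumptions.

```python
import socket
import struct


def gratuitous_arp_frame(mac: str, ip: str) -> bytes:
    """Build (but do not send) a gratuitous ARP request announcing ip -> mac."""
    mac_bytes = bytes.fromhex(mac.replace(":", ""))
    if len(mac_bytes) != 6:
        raise ValueError(f"expected a 6-byte MAC address, got {mac!r}")
    ip_bytes = socket.inet_aton(ip)

    # Ethernet header: broadcast destination, our MAC as source, EtherType ARP.
    ethernet = b"\xff" * 6 + mac_bytes + struct.pack("!H", 0x0806)

    # ARP header: hardware type 1 (Ethernet), protocol 0x0800 (IPv4),
    # address lengths 6 and 4, opcode 1 (request).
    arp = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 1)
    # Sender and target IP are the same address; target MAC is left zeroed.
    arp += mac_bytes + ip_bytes + b"\x00" * 6 + ip_bytes
    return ethernet + arp


if __name__ == "__main__":
    # Hypothetical example values.
    frame = gratuitous_arp_frame("fa:16:3e:00:00:01", "10.0.0.1")
    print(len(frame), frame.hex())
```

Every host on the segment that already has an entry for 10.0.0.1 updates it to the announced MAC, which is why these messages matter when an address moves to another host.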

If a compute host is also an API endpoint, use the nova-api service. Your enabled_apis option will need to contain metadata, as well as additional values depending on the API services. For example, if it supports compute requests, volume requests, and EC2 compatibility, the nova.conf file should contain:

multi_host=True

send_arp_for_ha=true

enabled_apis=ec2,osapi_compute,osapi_volume,metadata
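To check an existing nova.conf against the options listed above, a small Python sketch using the standard library's configparser could look like the following. It assumes an INI-style nova.conf with these options in the [DEFAULT] section; `enabled_apis` and `ready_for_multi_host` are hypothetical helper names, not part of nova.

```python
import configparser
from typing import List


def _parse(conf_text: str) -> configparser.ConfigParser:
    # interpolation=None: treat '%' literally; strict=False: tolerate repeated keys.
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(conf_text)
    return parser


def enabled_apis(conf_text: str) -> List[str]:
    """Return the comma-separated enabled_apis list from [DEFAULT], if any."""
    raw = _parse(conf_text).get("DEFAULT", "enabled_apis", fallback="")
    return [api.strip() for api in raw.split(",") if api.strip()]


def ready_for_multi_host(conf_text: str, api_endpoint: bool) -> bool:
    """Check the options listed above for an HA (multi-host) compute host."""
    parser = _parse(conf_text)
    # getboolean accepts True/true/1/yes/on, so both spellings used above pass.
    flags_ok = (parser.getboolean("DEFAULT", "multi_host", fallback=False)
                and parser.getboolean("DEFAULT", "send_arp_for_ha", fallback=False))
    if not api_endpoint:
        return flags_ok
    # An API endpoint must also serve metadata through nova-api.
    return flags_ok and "metadata" in enabled_apis(conf_text)


if __name__ == "__main__":
    with open("/etc/nova/nova.conf") as f:  # path as used elsewhere in this section
        print(ready_for_multi_host(f.read(), api_endpoint=True))
```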

The multi_host option must be in place when you create the network, and nova-network must run on every compute host. Networks created as multi-host send all network-related commands to the host that the specific VM is on. You need to edit the enabled_apis configuration option so that it includes metadata in the list of enabled APIs.
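The routing rule just described (network commands for a VM go to the host that VM is on) can be modeled as a toy function. This is a hypothetical Python sketch, not nova's code: `Network`, `Instance` and `host_for_network_call` are invented names, and the sketch ignores how nova actually delivers these commands.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Network:
    label: str
    multi_host: bool
    # Only meaningful for single-host networks: the one nova-network host.
    network_host: Optional[str] = None


@dataclass
class Instance:
    name: str
    host: str  # compute host the VM is running on


def host_for_network_call(instance: Instance, network: Network) -> str:
    """Return the host whose nova-network should handle a call for this VM."""
    if network.multi_host:
        # Multi-host: every compute host runs nova-network for its own VMs.
        return instance.host
    if network.network_host is None:
        raise ValueError(f"network {network.label!r} has no network host")
    # Single-host: everything funnels through the one network host.
    return network.network_host


if __name__ == "__main__":
    vm = Instance("vm1", host="compute2")
    print(host_for_network_call(vm, Network("test", multi_host=True)))
```

The single-host branch is exactly the single point of failure discussed at the start of this section: every VM's calls depend on one host.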

Other options become available when you configure multi_host nova networking; refer to Configuration: nova.conf.

Note: You must specify the multi-host option on the command line when you create fixed networks, for example:

`# nova network-create test --fixed-range-v4=192.168.0.0/24 --multi-host=T`

Option 2: Failover

The folks at NTT Labs came up with a ha-linux configuration that allows for a four-second failover to a hot backup of the network host. Details on their approach can be found in the following post to the OpenStack mailing list: https://lists.launchpad.net/openstack/msg02099.html

This solution is definitely an option, although it requires a second host that essentially does nothing unless there is a failure. Also, four seconds can be too long for some real-time applications.

To enable this HA option, your nova.conf file must contain the following option:

send_arp_for_ha=True

See https://bugs.launchpad.net/nova/+bug/782364 for details on why this option is required when configuring for failover.

Option 3: Multi-nic

Recently, nova gained support for multi-nic, which allows a given VM to be bridged into multiple networks. This gives us some more options for high availability. It is possible to set up two networks on separate VLANs (or even separate Ethernet devices on the host) and give the VMs a NIC and an IP on each network. Each of these networks could have its own network host acting as the gateway.

In this case, the VM has two possible routes out. If one of them fails, it has the option of using the other one. The disadvantage of this approach is that it offloads management of failure scenarios to the guest. The guest needs to be aware of multiple networks and have a strategy for switching between them. It also doesn't help with floating IPs. One would have to set up a floating IP associated with each of the IPs on the private networks to achieve some type of redundancy.
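As an illustration of the kind of switching strategy a guest would need, here is a minimal Python sketch of a preference-ordered gateway check. It is an assumption-laden example, not something nova provides: `choose_gateway` and `ping_probe` are hypothetical, the probe assumes a Linux guest with iputils `ping`, and any other health check can be passed in instead.

```python
import subprocess
from typing import Callable, Optional, Sequence


def ping_probe(gateway: str) -> bool:
    """Example probe: one ICMP echo with a 1-second timeout (Linux iputils ping)."""
    try:
        result = subprocess.run(
            ["ping", "-c", "1", "-W", "1", gateway],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except FileNotFoundError:
        return False  # no ping binary available in this guest
    return result.returncode == 0


def choose_gateway(gateways: Sequence[str],
                   is_reachable: Callable[[str], bool] = ping_probe) -> Optional[str]:
    """Return the first gateway, in order of preference, that passes the probe."""
    for gateway in gateways:
        if is_reachable(gateway):
            return gateway
    return None  # every route out is down
```

A guest agent built this way would run the check periodically and, when the answer changes, repoint its default route (for example with `ip route replace default via <gateway>`). That per-guest logic is precisely the management burden the paragraph above describes.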

Option 4: Hardware gateway

The dnsmasq service can be configured to use an external gateway instead of acting as the gateway for the VMs. This offloads HA to standard switching hardware and it has some strong benefits. Unfortunately, the nova-network service is still responsible for floating IP NAT and DHCP, so some failover strategy needs to be employed for those functions. To configure for a hardware gateway:

Create a dnsmasq configuration file (e.g., /etc/dnsmasq-nova.conf) that contains the IP address of the external gateway. If running in FlatDHCP mode, assuming the IP address of the hardware gateway is 172.16.100.1, the file would contain the line:

dhcp-option=option:router,172.16.100.1

If running in VLAN mode, a separate router must be specified for each network. The networks are identified by the first argument when calling nova network-create to create the networks as documented in the Configuring VLAN Networking subsection. Assuming you have three VLANs labeled red, green, and blue, with corresponding hardware routers at 172.16.100.1, 172.16.101.1 and 172.16.102.1, the dnsmasq configuration file (e.g., /etc/dnsmasq-nova.conf) would contain the following:

dhcp-option=tag:'red',option:router,172.16.100.1

dhcp-option=tag:'green',option:router,172.16.101.1

dhcp-option=tag:'blue',option:router,172.16.102.1
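With many VLANs, writing these lines by hand gets error-prone. The following Python sketch generates them from a label-to-router mapping that you supply; `flat_router_option` and `vlan_router_options` are hypothetical helpers, and the mapping below simply reuses the example labels and addresses from the step above.

```python
import ipaddress
from typing import Dict, List


def flat_router_option(gateway: str) -> str:
    """FlatDHCP mode: a single hardware router for every VM."""
    ipaddress.IPv4Address(gateway)  # raises ValueError on a malformed address
    return f"dhcp-option=option:router,{gateway}"


def vlan_router_options(routers: Dict[str, str]) -> List[str]:
    """VLAN mode: one router per network, keyed by the network label."""
    lines = []
    for label, gateway in routers.items():
        ipaddress.IPv4Address(gateway)  # validate before emitting the line
        lines.append(f"dhcp-option=tag:'{label}',option:router,{gateway}")
    return lines


if __name__ == "__main__":
    routers = {"red": "172.16.100.1", "green": "172.16.101.1", "blue": "172.16.102.1"}
    print("\n".join(vlan_router_options(routers)))
```

The printed lines match the VLAN example above and can be written to the dnsmasq configuration file (e.g., /etc/dnsmasq-nova.conf). The labels must be the same ones passed as the first argument to nova network-create.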

Edit /etc/nova/nova.conf to specify the location of the dnsmasq configuration file:

dnsmasq_config_file=/etc/dnsmasq-nova.conf

Configure the hardware gateway to forward metadata requests to a host that's running the nova-api service with the metadata API enabled. The virtual machine instances access the metadata service at 169.254.169.254 port 80. The hardware gateway should forward these requests to a host running the nova-api service on the port specified by the metadata_port config option in /etc/nova/nova.conf, which defaults to 8775.

Make sure that the list in the enabled_apis configuration option in /etc/nova/nova.conf contains metadata in addition to the other APIs. An example that contains the EC2 API, the OpenStack compute API, the OpenStack volume API, and the metadata service would look like:

enabled_apis=ec2,osapi_compute,osapi_volume,metadata
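How the metadata forwarding is done depends on the gateway. Assuming, purely for illustration, that the gateway is a Linux box using iptables, the forwarding amounts to one destination NAT rule. The Python sketch below builds that command as an argument list; `metadata_dnat_rule` is a hypothetical helper, 192.0.2.10 is a documentation-range example address, and dedicated switching hardware would be configured through its own interface instead.

```python
import ipaddress
from typing import List

METADATA_IP = "169.254.169.254"  # address instances use for the metadata service


def metadata_dnat_rule(metadata_host: str, metadata_port: int = 8775) -> List[str]:
    """Build an iptables command that redirects instance metadata requests."""
    ipaddress.IPv4Address(metadata_host)  # raises ValueError on a bad address
    if not 0 < metadata_port < 65536:
        raise ValueError(f"invalid port {metadata_port}")
    return [
        "iptables", "-t", "nat", "-A", "PREROUTING",
        "-d", f"{METADATA_IP}/32", "-p", "tcp", "--dport", "80",
        "-j", "DNAT", "--to-destination", f"{metadata_host}:{metadata_port}",
    ]


if __name__ == "__main__":
    # Print rather than execute; applying it requires root on the gateway,
    # e.g. subprocess.run(rule, check=True).
    rule = metadata_dnat_rule("192.0.2.10")
    print(" ".join(rule))
```

Replies from the nova-api host travel back through the gateway, where connection tracking reverses the translation, which is one more reason the VM subnet must be routable as described next.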

Ensure you have set up routes properly so that the subnet that you use for virtual machines is routable.