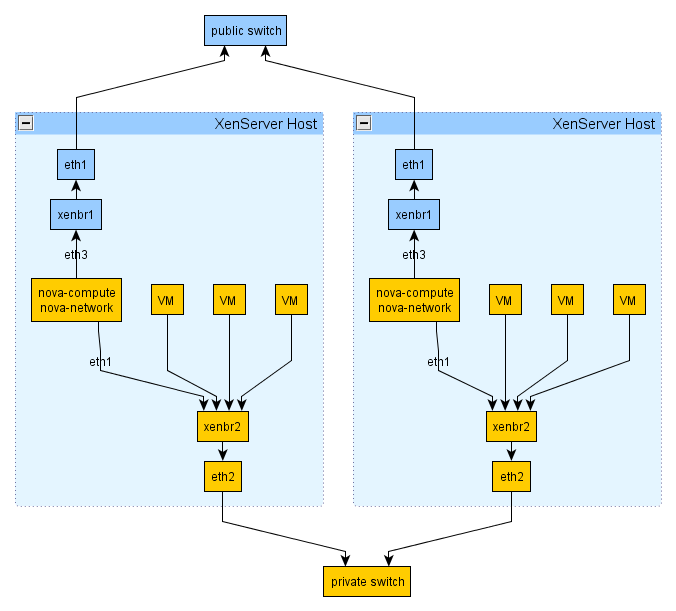

The following figure shows a setup with Flat DHCP networking, network HA, and multiple interfaces. For simplicity, the management network (on XenServer eth0 and on eth2 of the VM running the OpenStack services) has been omitted from the figure.

Figure 10.5. Flat DHCP network, multiple interfaces, multiple servers, network HA with XenAPI driver

Here is an extract from a nova.conf file in a system running the above setup:

    network_manager=nova.network.manager.FlatDHCPManager
    xenapi_vif_driver=nova.virt.xenapi.vif.(XenAPIBridgeDriver or XenAPIOpenVswitchDriver)
    flat_interface=eth1
    flat_network_bridge=xenbr2
    public_interface=eth3
    multi_host=True
    dhcpbridge_flagfile=/etc/nova/nova.conf
    fixed_range=''
    force_dhcp_release=True
    send_arp_for_ha=True
    flat_injected=False
    firewall_driver=nova.virt.xenapi.firewall.Dom0IptablesFirewallDriver

Note that flat_interface and public_interface refer to the network interfaces on the VM running the OpenStack services, not the network interfaces on the hypervisor.

Secondly, flat_network_bridge refers to the name of the XenAPI network that you wish to have your instance traffic on, that is, the network to which the VMs will be attached. You can specify either the bridge name, such as xenbr2, or the name-label, such as vmbr. Specifying the name-label is very useful in cases where your networks are not uniform across your XenServer hosts.
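As a minimal sketch of the two forms described above, the same option could be written either way. The values xenbr2 and vmbr are the example names from the text, not names guaranteed to exist on your hosts.

```ini
# Form 1: refer to the XenAPI network by its bridge name
flat_network_bridge=xenbr2

# Form 2: refer to the XenAPI network by its name-label instead
# (use only one of the two lines in a real nova.conf)
# flat_network_bridge=vmbr
```

The name-label form assumes that a network carrying that label has been set up on every XenServer host, even if the underlying bridge name differs from host to host.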

When you have a limited number of network cards on your server, it is possible to use networks isolated using VLANs for the public and network traffic. For example, if you have two XenServer networks, xapi1 and xapi2, attached on VLAN 102 and 103 on eth0, respectively, you could use these for eth1 and eth3 on your VM, and pass the appropriate one to flat_network_bridge.
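The VLAN example above might translate into a nova.conf fragment like the following. This is a sketch under one assumption: that eth1 of the VM (the flat interface) is attached to xapi1 and eth3 (the public interface) to xapi2, so that xapi1 is the network carrying instance traffic. Swap the names if your layout is the other way round.

```ini
# Assumed mapping: VM eth1 -> xapi1 (VLAN 102), VM eth3 -> xapi2 (VLAN 103)
flat_interface=eth1
public_interface=eth3

# The XenAPI network that instances are attached to (assumption: xapi1)
flat_network_bridge=xapi1
```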

When using XenServer, it is best to use the firewall driver written specifically for XenServer. This pushes the firewall rules down to the hypervisor, rather than running them in the VM that is running nova-network.